It’s well-known that good typography matters. Reading a poorly typeset document can be difficult. Poor typesetting and poor typography can occlude information, driving readers away in frustration and defeating information exchange.

However, not everyone sees it this way, and some even see nefarious intent in typographic efforts to make information more approachable and easier to read.

In a recent blog post, Cambridge chemist and crystallographer Peter Murray-Rust argues that publishers are using typesetting and typography to slow down science, extract fees, and control business:

I am highly critical of publishers’ typesetting. Almost everything I say is conjecture as typesetting occurs in the publisher’s backroom process, possibly including outsourcing. I can only guess what happens. . . . The purpose of typesetting is to further the publisher’s business. I spoke to one publisher and asked them “Why don’t you use standard fonts such as Helvetica (Arial) and Unicode)” Their answer (unattributed) was: “Helvetica is boring”. I think this sums up the whole problem. A major purpose of STM typesetting is for one publisher to compete against the others.

Murray-Rust then goes on to heap virtues on LaTeX as having solved the typesetting problem in mathematics and physics.

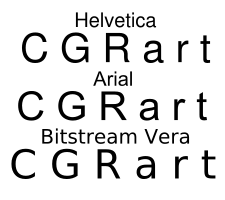

It’s interesting that Murray-Rust explicitly admits ignorance about how typesetting is done at most publishers while also implicitly admitting ignorance about typography in general. For instance, he conflates Helvetica and Arial above, as if they are the same fonts. They are not, as the graphic above shows. Helvetica is categorized as a Grotesque font, and was designed in 1957. Arial is a Neo-Grotesque font designed in 1982. Arial was a standard element in Windows until recently, when it was replaced with Calibri. The differences between Arial and Helvetica are subtle but real — the “R” and “G” above show some of these clear differences. The “1” is clearly different, as well. Arial is widely believed to be a hasty redraw of Helvetica by IBM in order to avoid licensing fees, and Microsoft took it over later, making it a Windows 3.x standard in 1992. The “G” in Arial is inferior to the “G” in Helvetica, for instance, with its lack of a right foot.

But the question he begs by claiming typesetting is done to further the publisher’s business is, “What is the publisher’s business?”

Well, the publisher’s business is to know how to take rough information, see if there is enough of an audience for it to merit the work involved in refining it, and then refine it. Part of this refinement is typesetting and typography. Recent research has suggested that some typographic choices lend more credibility to information, or make it easier to accept. This comports with what every typographer or typesetter has seen dozens or hundreds of times — the same word or words in different fonts affect people differently. In dense type, readability becomes an issue — legibility, comprehension, and endurance (how long you can read without fatigue). In headlines or signage, emotional aspects come into play.

And, yes, using LaTeX involves typography.

I remember in college taking a linguistics class, where the instructor asked us on the first day to write down what kind of accent we had. I have a nondescript American accent, but at the time I was stupid enough to write, “I don’t have an accent.” I was sharply and properly made an example of — “We all have an accent!”

All machine-published text is typeset and typographic. Even these words you’re reading now. There is no “non-typographic” type.

LaTeX is a markup language that builds on the TeX typesetting language to transform the underlying information into a typeset page. Word and its equivalents do roughly the same thing. But because LaTeX includes a typographic approach, it is amenable to typographic improvements, as well. The Association for Computing Machinery has three different LaTeX typographic standards, matched to one or more journals. The journal Econometrica has different LaTeX typographic standards for different manuscript stages. PLoS Computational Biology has LaTeX standards and templates. The list goes on.

Why do publishers create, propagate, and maintain these LaTeX typographic standards on a per-journal basis? Because readers actually have aesthetic expectations of the journals they use. It’s why the PDF remains the format of choice, even this deep into the digital age.

There’s an interesting paranoia here — that visual display work is being done by publishers in order to tighten their dastardly grip on authors and scientists. The non-paranoid reality is that publishers are careful about the aesthetic they provide because they are serving authors and scientists, who are very sensitive to typographic and design issues, and react negatively when these deviate from their expectations. I’ve redesigned journals, and the band of acceptable typography and brand treatments is relatively narrow, the boundaries unequivocal.

A hard left comes in Murray-Rust’s complaint when he points out that in one formula, some typographic wannabe actually set a flipped and upside-down italic “3” as an epsilon (ε) in a calculation, rendering it unreadable for a speech translation program for the blind. Complaining about this is complaining about poorly done typesetting, not well-done typesetting. And one error doesn’t invalidate the entire practice of typesetting calculations. In fact, it underscores how important it is to typeset them well and accurately.

Now we get to my favorite part: his mockery of a publisher saying, “Helvetica is boring.” I’m pretty sure the publisher said more than that, but if he or she didn’t, allow me to. Helvetica is a poor choice for a reading font. It is a sans serif with a significant x-height, two factors that decrease its reading contrast and speed and result in a blended reading field, in which you can more easily lose your way. If line lengths and leading aren’t carefully managed, a page of Helvetica can look like a gray jumble. And in a justified page, the rivers on the page can cause a Moiré pattern. Helvetica may or may not be boring (it is currently experiencing a resurgence among signage and public space designers), but it is a poor choice for a reading font.

Do publishers use typesetting to exploit the market? That’s a paranoid and ill-informed perspective. Publishers are careful about how they present information because serving authors and readers is fundamental to what publishers do, and readers and authors in the sciences have expectations about how their articles look in finished form. Typography, and the typesetting necessary to achieve it at a high level, is a big part of meeting those expectations, and also has a role in transmitting new findings effectively.

(Hat tip to DC for the pointer.)

Discussion

51 Thoughts on "Ignorance As Argument — A Chemist Alleges Publishers Exploit Typography for Money"

To add to the above, I’d like to direct fellow TSK readers to the PeerJ blog entry on their thinking about the design of the PDFs that they use: http://blog.peerj.com/post/43558508113/the-thinking-behind-the-design-of-peerjs-pdfs It’s an excellent and highly informative write-up.

Personally speaking, I really like what they’ve done. It just goes to show that this business of publishing is much more than just pushing a button, regardless of how one goes about trying to make the process sustainable.

I agree with your article (down with ugly Helvetica!) but I’m sure you’ll shortly see a huge pile-on of offended LaTeX users for this sentence…

“LaTeX is a machine language that uses XML or RTF as a substrate for some CSS or ML layer, which turns the underlying information into a typeset page.”

TeX defines its own peculiar typesetting language on which LaTeX builds. It’s similar to RTF in that it uses backslash commands but it’s not the same thing, and it’s very different from XML. CSS is not usually involved either unless you’re outputting to HTML files.

Shame on scientists for wanting their literature to be readable and functional. In a similar manner, they should be ashamed at not building their own computers from scratch and running Linux, choosing instead to increasingly buy well-designed and functional devices from companies like Apple. The tech industry sure has them over a barrel. The purpose of well-designed software and hardware is to further the software and hardware manufacturer’s business, after all. And don’t get me started on those fancy microscopes. Why aren’t more people grinding their own lenses?

I want to rate this as up several more times.

Thanks for an informative article – I am pleased you have highlighted this issue in the Scholarly Kitchen which will bring it to a wider audience. For the record I know that Arial and Helvetica are different (the brackets signified an alternative, not an equivalence).

If publishers had converged on standard PDF with Unicode I would have considerably less criticism. Variations from Helvetica (part of the PDF standard) to other fonts would have been tolerable.

You say “serving authors and readers is fundamental to what publishers do”. Why, then, in an electronic age do you serve double column PDF? I never print articles – the whole process of rendering scientific information to a numbered printed page is obsolescent. Why do you take high quality EPS and transform it to low quality JPegs? This destroys scientific information. An innovative industry would have moved to SVG – like Wikipedia has.

And how often do publishers ask the views of readers? If you can show public evidence of reader consultation (readers, not editors) I will be delighted.

For the record I have actually said reasonably nice things about PeerJ. It’s doing things better.

And your assertion that publishers serve authors – when every publisher has a different set of rules and many are arcane – doesn’t match with my experience.

And how often do publishers ask the views of readers?

Most publishers constantly survey readers and conduct focus groups. Their opinions and practices may be somewhat different than you expect:

https://scienceintelligence.wordpress.com/2012/11/28/doctors-still-prefer-print-journals-vs-digital/

Publishers ask readers all the time, and also review actual behavior through usage reports. PDFs are by far the most popular form of article usage, and the classical scientific article layout has a lot to recommend it, both in form and function.

Your use of the word “I” is noteworthy, because you’re reflecting your own preferences. If you can show that you’ve actually gone beyond that, I’d be very interested.

Your “EPS” and “jpeg” shot is a new one. Are you talking about in the HTML version of articles? If so, a lot of the reason has to do with bandwidth. Not everyone is at Cambridge with a sizzling hot T1 or fiber line. Our users come from everywhere, with a lot of different bandwidth needs. Serving big fat EPS files all the time would not serve them well.

There are also storage issues. Believe it or not, it’s expensive to store tons of big files and serve them up. Downsampling from EPS to jpeg helps control costs while delivering the information. And what destroys scientific information is making the EPS in the first place. There are no data in an EPS.

I think this is part of the continuing confusion between the paper written about the research and the research itself, here a conflation of a graphical representation meant to summarize data with the actual data behind it.

I’m actually somewhat sympathetic to the point about rasterizing vector graphics. Vector graphics are often actually smaller files than the rasterized version (depending on the number of points plotted, for sure, though) and often do include more information than the raster version. In a map, for example, you may be able to zoom in to see detail that is not apparent in the version rasterized for print production.

You might think about highlighting the work several scientific publishers did (and the several hundred thousand dollars spent) in creating the freely available STIX font – a LaTeX package is in beta release right now. See http://stixfonts.org

Much like LaTeX, Latin is a language that uses German or Swahili as a substrate for some Klingon or Indo-European layer, which turns the underlying grunts into speech.

Arial, Helvetica, who cares? Murray-Rust’s ε example may be an exception (though I find that hard to believe), but mixing up β and ß is absolutely rife (whatever the font). Try a simple Google search in the domains of major publishers if you don’t believe me. Is Kent Anderson saying he doesn’t care? Or is this the ‘added value’ so regularly referred to?

I do care about accurate scientific and mathematical notation. Heck, I used to typeset mathematics texts. As for mixing up the Greek “beta” and the German “B”, this isn’t limited to publishers. Many older versions of IBM and Windows OS’ didn’t support Greek characters, and put the German “B” in instead. This included DOS.

To me, these mix-ups are a sign of minimizing the importance of typesetting and careful editing. How would you fix it?

First of all, it’s not a B, German or otherwise. If anything it’s an s, although it is a ligature of sz (ſ and Ʒ, combined as ſƷ and known as a sharp s, often spelled as ss, not ß, and in German-speaking areas of Switzerland it’s always ss). How would I fix it? I’d just look if all the bètas were indeed bètas in the submitted text. That shouldn’t be too difficult for a publisher, or is it? If the author has naively used the ß, a simple ‘find and replace’ works well, even in a Word document. The fact that antediluvial Windows and DOS couldn’t handle Greek characters (and it could the ß?) is hardly relevant in 2013, is it? Yet I observe the ß/β problem in many a brand new scientific article published by many a reputable publisher, large and small.

You’re right that the importance of typesetting and careful editing is minimised. That’s perhaps (though barely) acceptable from authors, but from publishers? It should be their pride!
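As a rough illustration of the ‘find and replace’ idea in the comment above, here is a minimal Python sketch. The heuristic is an assumption made for this example, not any publisher’s actual workflow: in German words the ß follows a lowercase letter, so an ß at the start of a token, or after a hyphen, digit, or capital, is treated as a probable stand-in for β. The names `SUSPECT`, `find_suspect_eszett`, and `replace_suspect_eszett` are hypothetical.

```python
import re

ESZETT = "\u00df"  # ß, LATIN SMALL LETTER SHARP S
BETA = "\u03b2"    # β, GREEK SMALL LETTER BETA

# Assumed heuristic: a genuine German ß is preceded by a lowercase letter,
# so an ß without one in front of it is flagged as a likely misused beta.
SUSPECT = re.compile("(?<![a-zäöü])" + ESZETT)


def find_suspect_eszett(text):
    """Return the character offsets of every ß that looks like a misplaced β."""
    return [match.start() for match in SUSPECT.finditer(text)]


def replace_suspect_eszett(text):
    """Replace only the suspicious ß characters with a Greek beta."""
    return SUSPECT.sub(BETA, text)


sample = "TGF-ß1 and ß-catenin on Goethestraße"
print(find_suspect_eszett(sample))
print(replace_suspect_eszett(sample))
```

On the sample string, the sketch rewrites the two scientific uses and leaves the street name alone. Each hit would still deserve a human look, since German text set in all capitals would be flagged as well.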

I concur with Kent here. When I see character mixups like this, it almost always originates in the author’s original manuscript. I just got a manuscript that used superscript O (capital oh) for degree signs and superscript periods for product dots (which was a new weirdness to me). Now our copyediting department is going to have to go to a lot of work to fix that to make it machine readable.

Flashbacks . . . I’m having flashbacks. You’re exactly right, Joel. I can’t tell you how much scrubbing some manuscripts take to ferret out homemade solutions like these. Roll-your-own ellipses, em-dashes, not to mention el’s instead of one’s, oh’s instead of zeroes. Then we get into attempts at diacriticals and math notation, often charming, sometimes handwritten into an empty space, and so forth. And that moment when you realize what they’ve done? So discouraging. You know you’re in for an editing slog.
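The kind of scrubbing described here can be partly automated. Below is a small Python sketch that flags a few of the homemade substitutions mentioned (oh for zero, el for one, typed-out ellipses, doubled hyphens) so a copyeditor can review them. The patterns and the name `flag_homemade_characters` are assumptions chosen for illustration; they will produce false positives and are not a description of any real production tool.

```python
import re

# Hypothetical checks: each pairs a human-readable label with a pattern.
CHECKS = [
    ("letter O used as zero", re.compile(r"(?<=\d)O|O(?=\d)")),
    ("letter l used as one", re.compile(r"(?<=\d)l|\bl(?=\d)")),
    ("three periods instead of ellipsis", re.compile(r"\.\.\.")),
    ("double hyphen instead of dash", re.compile(r"--")),
]


def flag_homemade_characters(text):
    """Return (offset, label) pairs, sorted by offset, for manual review."""
    findings = []
    for label, pattern in CHECKS:
        for match in pattern.finditer(text):
            findings.append((match.start(), label))
    return sorted(findings)


manuscript = "Heated to 1O0 degrees for l5 min... then cooled -- slowly."
for offset, label in flag_homemade_characters(manuscript):
    print(offset, label)
```

The sketch only reports candidates rather than rewriting them, because a pattern like a digit followed by “l” could also be a legitimate unit; the decision stays with the editor.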

Yep. This is how a proper publisher *earns* his money.

The only problem is that you could also argue that many of our business practices are “exploiting” others, in order to make a profit. This can be applied to tons of influence techniques, from anchoring to more commonly known sales tactics, or even the COLORS of brands and brand elements that are used. I may exploit your lack of education (if I am a doctor–just one example), or your lack of specific resources or skills in a certain area…I wonder if that is a problem? Time could probably be better spent on finding ways in which people are being deceitful about the ways in which they are exploiting others.

Authors who are expert in their own field need to rein in their egos when it comes to publishers’ production processes, of which they really know very little. Publishers work hard to get their production flow just right. Why should they fix what’s not broken? The fact that LaTeX has not gone the way of XYwrite is evidence of its sturdy resistance to hot entrepreneurial competition.

Look here, I’m writing my comment in san-serif and I suspect it will soon be serifed!

If you were to insist on consitency of American spelling on this blog, without accents, you should have. You’re probably saying ˈbeɪtə as well. I’m sure the accent threw you. ˈbeɪtə vs. ˈbætə. Almost as bad as ‘holy serif’ vs. ‘without serif’.

British spelling of “beta” includes an accent? News to me. Seems I’ve only seen that in non-English languages (Dutch, perhaps?).

If he was to insist on Yankee spellin’ for us all, I’m fairly certain ships would sail from every corner of the Empire on the next tide with instructions to apply the appropriate corrections. Speaking of which, you spelt consistency wrong. Now, Jan, what is your point? I’ve read all your comments on this blog and I’ve no idea what argument you are trying to make.

Touché, with or without accent. But y’all know what the beauty of English is, don’t you? It’s the ability to take a word from any other language, misspell and mispronounce it, and so make it into English. Guillaume Jacques-Pierre already did it and adding so many ‘new’ words that way is testimony to his great genius. Bèta with an accent is my small contribution in that same spirit. I also did spell consistency wrongly, I admit. Truly wrongly. Abject apologies.

My point, since you ask, is that copy-editing and typesetting are important, not for (a)esthetic or cosmetic reasons, but for the sake of integrity of meaning. Humans can, to varying degrees, deal with some fuzziness; for computers it’s much more difficult. And scientific publishing these days needs to be readable by computers. Particularly symbols, and codes, such as gene codes and chemical names (‘humdrum’ connecting waffle is in many cases less important). The tenor of this blog post seems to be that what counts is just how it looks to the human eye (it isn’t), that typesetting-introduced errors of meaning are rare (they aren’t), that any mistakes are mostly the author’s fault anyway, and that correcting them should not be a publisher’s responsibility. My point is that it is one of the principal responsibilities of a scientific publisher. Making articles truly fit for the record. For the future. There is little else left. As Steve Pettifer said in a talk to publishers earlier today: “Identify things, describe them, so that they can be read by machines. Make publishing a service, not an ordeal. Do things I, as author, genuinely can’t do myself. Educate me. Make my work better.”

Get it now?

Well now you’ve written it down, yes I do. But here’s the thing. There is a set of authors who, for want of a better expression, live in a future where articles are computable… And there’s a set that doesn’t. And the set that doesn’t is much larger… The question is, to what extent the former group will become the mainstream. Anita DeWaard is doing some fantastic work on elucidating statements etc from articles, and, in thinking about her work, it strikes me that there’s a need for two kinds of article – one designed for humans to read and one for machines to do computation with. This blog post deals with the former. I think that it would be fantastic if authors were to at least initiate the process of doing a machine readable translation of their papers. This is because, as you note, machines don’t do fuzzy very well. Better to get the sentiment and the statement, direct from the brains that did the thinking and the work. But I’m told consistently that they just won’t do that. Peter bitching at publishers doesn’t really move anything on. Making a case to his fellow academics about the need for machine readable text, and working on how to bring that reality about, would be a more productive approach, would it not?

Well, yes, I agree that it would be better if authors produced machine- and human-readable text themselves. And then publish in arχiv straight away, like physicists do. Some authors, outside physics, don’t or can’t and therefore pay publishers to help them. They pay money, typically to publish with ‘gold’ open access, or by means of copyright transfer, for which they should be able to expect proper typesetting at least. (In fact, I think publishers should start extracting and presenting semantically normalised assertions as well, to enable machine reasoning). There may well be more authors living in the past than in the present (not the future; articles *can* be computable already), but I don’t believe that to be the case in the sciences. In any event, publishers catering for the past rather than the future are not doing anyone a service, least of all themselves.

Ah, yes, publishers also organise peer review. Almost forgot that. But it’s a bit ‘thin’, if that’s all they do.

“publishers are careful about the aesthetic they provide because they are serving authors and scientists”

I have worked for fifteen years as the copy editor of the English translation of a Russian journal in theoretical and mathematical physics, initially working in AMSTeX and more recently, in LaTeX2e. I am quite clear about who I serve: I serve graduate students and advanced undergraduates in physics, mostly in American universities. I also keep their professors in mind (hoping a professor might sometimes think one of our papers is clear and would be good to recommend to students, but I know professors think mostly about the subject matter).

There are two kinds of effort involved in understanding the content. One kind of effort is applied to the scientific concepts and ideas in the paper. The other kind of effort is devoted to extracting the concepts and ideas from the arrangement of marks on a page or screen. I can influence the second kind of effort by improving word choice (translated journal), sentence structure, displayed equations, etc. My unattainable goal is for the author’s thought to move into the student’s mind effortlessly (for the student reading the paper to be unaware of the printed page and engage directly in the ideas, concepts, and images). I believe that a high information-to-effort ratio can move “my” journal higher on the list of papers given by a professor for additional reading after a lecture. When I was a student in such classes many years ago, I always read papers in certain journals first and the other papers only if I had time.

Several of the components of effort have been mentioned in the blog post and preceding comments. I think the information-to-effort ratio is crucial to the success of a journal, and increasing that ratio is value added by the publisher. I cannot directly influence the inherent information content of our journal. That is a matter for the editorial board and the refereeing process. My editing can directly influence the effort required to extract the information from the published material. By reducing the denominator, I can increase the ratio and add value.

As an example, some authors prefer “since” with the meaning “because” because they consider it more aesthetic. I explain that we never use “since” (or “for” or “as”) to mean “because” in our journal because “because” is a preposition with only one meaning, while “since” can be several parts of speech and has several different meanings as a preposition. Less effort is needed to understand “because” (there are fewer choices to make). The difference in mental effort is small, but many small differences can add up to something significant.

I wish I could give this comment multiple “likes,” Bill, partly because you have articulated so nicely a philosophy that I try every day to promote in my library. I generally express it less elegantly, though, by saying things along the lines of “our students should be stretched and challenged by the ideas they encounter in documents — not by the sucky, user-hostile search interfaces that stand between them and those documents.”

Correction: “because” is a subordinating conjunction.

Excuse: it is after midnight in Moscow.

What is a Grotesque font?? BTW, “font,” back in the real typesetting days–when pieces of type were actually set–meant a subset of a typeface. Typefaces often had five fonts: roman, italic, boldface, small caps, and bf ital. I guess I’ll have to accept that because Microsoft has long used “typeface” and “font” to mean the same thing, they’ve become the same thing, but I found “font” to be a useful word. Now it really can’t be used properly because it confuses people. But back to Grotesque. What does it mean?

A Grotesque typeface (honoring your good technical distinction) is a sans-serif from the 19th century which may preserve some script elements (curlicue “g”) and have some line weight variation. A modern (Neo-Grotesque) typeface has a more plain and linear appearance, and may do away with script elements and reduce line weight variation to a bare minimum. But if there are better definitions, let’s hear them.

An example of Grotesque would be News Gothic, popular before Helvetica, etc. The use of ‘grotesque,’ seemed to me, came from confusion about the font used by The New York Times masthead also being called ‘gothic.’

On a slightly different note. Despite many investments in typesetting technologies in (and of) the past, publishers are investing very little in the primary typesetting technology of the future: HTML rendering engines.

A good example (though I’m biased) is MathML, the W3C standard for mathematical markup. Despite being used in XML publishing workflows for over a decade, and becoming part of HTML5, no browser vendor has ever spent any money on MathML development. Accordingly, browser typesetting “quality” is highly unreliable (unless you use MathJax — which is where I get my bias from).

Trident (Internet Explorer) has no native support (but the excellent MathPlayer plugin), Gecko (Mozilla/Firefox) has good support thanks to volunteer work and WebKit (Chrome, Safari, and now Opera) has partial support — again solely due to volunteer work. (Unfortunately, only Safari is actually using that code; Google recently yanked it out of Chrome after one release.)

This isn’t surprising from a business perspective — for the longest time, there was simply no MathML content on the web. But of course, this was a chicken-and-egg problem: no browser support => no content => no browser support => … And it ignores the impact MathML support would have on the entire educational and scientific sector where it would enable interactivity, accessibility, re-usability, and searchability of mathematical and scientific content. (Including ebooks — MathML is part of the epub3 standard.)

Now you might say MathML is just math, a niche at best. But very likely its success will determine if other scientific markup languages will become native to the web — languages like CellML, ChemML, and data visualization languages. These will probably see even less interest from browser vendors but will have enormous relevance to the scientific community.

Right now, scientific publishers (in my experience) have neither expertise nor interest in browser engine development. Unfortunately, they also don’t put pressure on browser vendors to improve typesetting (whether scientific or otherwise). That’s very short sighted, I think. Given that Gecko and WebKit are open source, a joint effort of publishers could very well fix things — and show the community that publishers have their eyes on the future rather than the past.

Credit where credit is due. A number of publishers are sponsors of MathJax, something that I, like many others, have been able to use on numerous websites. Likewise, the STIX fonts, although I’m a little bit surprised it’s taken so long for the LaTeX release of these.

ChemML — that would be good. Who was it that did that? Peter someone or other?

I think scholarly publishers are engaged in a conspiracy to disenfranchise us older readers from participating in scholarly communication by printing footnotes way too small! 🙂

As long as we’re all correcting each other’s typography and grammar here, I simply cannot resist pointing out that it should be the Association for COMPUTING Machinery rather than “COMPUTER” in the original post. At any rate, the society commonly just goes by “ACM.”

Too bad the Kitchen can’t afford a professional copyediting staff to do this stuff. (devil’s grin). Shoemaker’s children?

-Carol (who when she was publisher at ACM once pointed out a typo in a memo the Executive Director sent to staff asking everyone to be more careful about typos.)

Funny, you criticise PMR for not knowing anything about typography, and yet think latex builds on XML even though it predates it by about 20 years. Perhaps you have your own limitations in understanding the computational representation of text. You might want to read Peter’s blog; you might learn something about that.

As for journals supporting Latex standards most of them do this *not* to produce a single typography. Many journals cut and paste the latex into Word documents and then use this to generate out the final form. This is why they have “don’t use any macros” rules. Except for the CS journals, in which case they do no type setting.

All of which is irrelevant; look at this website for instance. It looks quite nice, it’s perfectly readable. All the type setting is done by my browser. If I want, I can put your words into any font I like.

Publisher type setting is not a service; it’s a hindrance. I want to stop getting daft double spaced manuscripts to review; I want to stop having all the figures at the end; I want to stop getting back “final proofs” and having to check that the publisher has not messed up the words that I have already spent a long time getting right. And if you can throw away some of the 3000 reference styles that publishers have thought up, that would be a good thing also.

Oh, I’ve never had a journal tell me helvetica is boring; I did have one tell me that they couldn’t do courier or any fixed width font because it doesn’t work on the web. Dearie, dearie me.

Yes, I looked up things about LaTeX to make sure I got it right, and grabbed the wrong technical information in haste. I also emailed an expert in it for more about how the typographic controls are written into the subsequent customized implementations, but didn’t hear back in time. Embarrassing, and I corrected it as soon as it was pointed out. You’ll get no argument from me that I messed up that sentence.

It’s dangerous to generalize from personal experience, which is one point of disagreement I have with Murray-Rust, and will have with you, as well. Part of what publishers do is address an audience. This means addressing more than one person’s idiosyncrasies or current state of mind, and finding out what a more general need and desire is. It’s expensive and time-consuming (and also takes long-term interests and engagement) to suss out what authors and readers need and prefer in a way that makes those needs generally addressable, with some compromises being inevitable around the edges. The fact is that the HTML/PDF world is an accommodation: you don’t have to read the PDF, and you can search a lot more text and math than you ever could before. We’ll continue to make progress on this, but I personally doubt that the biological basis of reading texts using the English alphabet will change much no matter what technological changes come.

There are experiments underway for changing how manuscripts are sent to reviewers, but a major concern here relates to how closely the reviewed manuscript resembles a finished paper. That is, if the author’s submission looks too finished, the concern is that this experience would affect the reviewer’s opinion, possibly in a misleading way. Signals are integral to information transmission, and typography is a major signaling factor.

So, authors need the help of publishers to refine their rough work, so it’s suitable for publication. And, you are worried that if the manuscript looked too good, then the reviewers might get confused, and think that it was finished, and so do a poor review?

Yes, you are right that it is dangerous to generalise from personal experience; it is good to be reminded that there is a distinction between anecdote and evidence. However, I would say that I have met no one who likes having the figures at the end; moreover, I have been on the receiving end of some savage reviews in my time, which includes for conferences and workshops where the reviewed manuscript looks exactly the same as the final manuscript because both are author produced.

I think that the alternative explanation — that publishers are still using publication processes built around tree-ware — seems much more tenable.

We learned a lot during the hundreds of years of tree-ware (and inkware) about how readers expect and like information. You’re actually saying you like some of these things, too: integrated tables and figures, for example. So, we’ll see what the future brings. But even e-devices are finding it hard to stray too far from conventions based on sound typographic principles.

Indeed, integrated tables, figures, nice typography, inline maths and so on. Then I am a LaTeX/TeX user. Written by a computer scientist, out of despair at publishers continually messing up the type setting of his equations. Or nowadays, I write for the web, and that gets type set fine by the browser (probably using the same para layout algorithms that TeX uses).

Who do you get to type set your articles on Scholarly Kitchen?

Complaining that it’s difficult to turn PDFs back into information has always felt a little to me like complaining that it’s difficult to turn hamburgers back into cows. Of course it’s difficult. PDF as a format was designed to allow electronic exchange of documents intended for human readers while preserving their visual fidelity, not exchange of information between machines for machine processing. There are of course other formats which are much better suited to this purpose, which is why many publishers have been mastering content in XML (or its predecessor) for a decade or more. In my experience, publishers today invest at least as much effort, if not more, in ensuring the consistency and quality of the XML as they do ensuring the quality of the typeset pages.

You may enjoy reading this article: Ceci n’est pas un hamburger, by Pettifer et al. http://www.ingentaconnect.com/content/alpsp/lp/2011/00000024/00000003/art00009

It’s open access and a must-read if you’re interested in PDF, HTML, XML and information content.