Those of us who labor in scholarly publishing can be forgiven for thinking that the world is a tiny place. The academic journal, the keystone of our industry, cumulatively brings in about $10 billion a year, not enough to get the CEOs of Uber or Pinterest out of bed in the morning; and the book, the much-despised book, is in retreat everywhere. While librarians continue to insist that there are huge publishers out there, corporations so big that they have a stranglehold on the academic community, if not the world overall, the actual figures for even the largest publishers are not much more than rounding errors for truly big concerns and industries. How is it that healthcare can be 17 percent of the U.S. economy while publishing about healthcare is worth pennies? Let’s play the game of Separated at Birth: We will pair Pearson with Apple, Reed Elsevier with Cisco, and John Wiley with Exxon Mobil. Now we can tell what big companies actually look like, and none of them send their kids to the same schools we do.

The scale of the Internet makes this point even more dramatically. An entertaining example can be found at the marvelous Web site Internet Live Stats. If you have never played around with this site, I strongly encourage you to do so. ILS has tapped into a number of Internet resources and presents some of the stunning numbers for Internet usage in real time. So, for example, I just looked at the figures for YouTube videos viewed today (yes, just for today). It is 6:30 P.M. EDT as I write this, and already YouTube has logged 6,029,761,331 views. How is this possible? With numbers like these, why do we spend so much time thinking about the impact factor of The Lancet? The scale of Internet usage is staggering. It is time to think about what it means for scholarly publishing to operate at Internet scale.

A word of caution, however: thinking about the ultimate backdrop for any activity can invite foolishness. A solar flare's EMP could burn out all of our electronics, but should we factor EMPs into our business plans? Or do we make the dystopian predictions of so many Hollywood films part of our forecast and insert a value for a breach of the San Andreas Fault, with Los Angeles sliding beneath the waves of the Pacific Ocean? I am particularly amused by asteroid paranoia: Should we stop what we are doing and think about how to alter our outlook as a civilization-destroying projectile makes its way to Earth (probably landing in Los Angeles because it's cheaper to film close to the studio)? Yes, there is a big picture (and it does not come from Hollywood), but it is not always relevant to what we do here and now in our little patch.

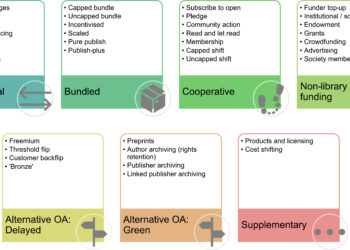

Superficially it would appear that open access services are more closely attuned to Internet scale than their brethren in the traditional publishing world. OA materials are placed on the Web, where they can be viewed, shared, sorted, whatever, subject only to the CC licenses that accompany them, assuming anyone actually reads or complies with these licenses. It's often the case, however, that OA publishing is of the "post and forget" variety: put it on a Web server somewhere and then move on to the next task, which is likely to be unrelated. Such materials may get lucky and be "discovered" through search engines and social media, sometimes with a push from the publisher (PLOS has a sophisticated post-publication marketing program), but more often OA publishing is an exercise in production rather than in creating a readership. OA publishers often simply let the Internet do the rest, and Internet scale sometimes does it. Here the properties of Internet scale are access from any part of the globe (infrastructure and law permitting) and Google and its ilk in the search and discovery space.

I say this is a superficial invocation of Internet scale because it touches only on access, which is a very small aspect of scholarly communications. Indeed it is one of the more remarkable achievements of OA advocates that scholarly communications has been shrunk down to a discussion of access, leaving aside what are arguably the more important issues of authority, importance, and originality — not to mention understanding. A more fundamental problem with access, however, is that it speaks to human scale, not to Internet scale.

How many books can the most assiduous reader read in a lifetime? How many articles can a cancer researcher read and learn from? While so much research goes into the science of life extension, how many years would a researcher in this field need just to read the articles published this past year? The great irony of scholarly communications today is that two fundamental issues are at war, access on one hand and information overload on the other, and they pull in opposite directions. If you improve one (access), you worsen the other (information overload). So OA publishing can best be viewed as a benign, cultish activity lacking in long-term vision. It is the equivalent of solving the problem of climate change by turning off the light (one light) in the den. Traditional publishers should ask themselves if they do even this much.

To solve the information overload problem, which is a problem of enormous size even if the growth of accessible articles is limited to the paid-for collections in academic libraries, we have to find a new kind of reader, one that is not subject to the limited time of the human researcher. We know who that reader is: it is a machine. A well-designed machine, built for ingesting text and identifying meaningful patterns, is not subject to information overload. Indeed, the bigger the meal, the happier the bot. Here is where open access and Internet scale meet, in the world of text- and data-mining and the machines that conduct this task. (I first encountered this idea in a piece by Clifford Lynch.)

Publishers may want to start thinking about this new readership. How will the nature of publications change when pattern-detecting machines develop into a partial or even full audience? How will we publish these new findings? It is easy and no doubt a great deal of fun to come up with jokey problems connected to this (How do you identify the PI of a pattern-recognition system? If a machine has a high citation count, is it eligible for tenure?), but there is a real and serious issue here. Scholarly communications sits on a platform as solid, and as uncertain, as the city of Los Angeles on the San Andreas Fault. As Wikipedia says,

The San Andreas Fault is a continental transform fault that extends roughly 1300 km (810 miles) through California. It forms the tectonic boundary between the Pacific Plate and the North American Plate, and its motion is right-lateral strike-slip (horizontal). The fault divides into three segments, each with different characteristics and a different degree of earthquake risk, the most significant being the southern segment, which passes within about 35 miles of Los Angeles.

Maybe Hollywood had it right after all.

P.S. I just went back to look at the YouTube figures again. We are up to 7,185,269,451. Actually, that’s not accurate. While I was typing this the numbers went up. In fact, the numbers increased faster than I can type. For me to get an accurate figure, I would have to make a forecast. Please think about the implications of this: To understand the status of the Internet right now I have to make a prediction about the future.

Discussion

20 Thoughts on "Thinking about Internet Scale"

Great piece (it’s almost humbling). Joe’s correct, there is far too much for any person to read so machines will undoubtedly take the strain to help sort the wheat from the chaff, so to speak. But in order to be read by machines, content will need to be captured and presented in a machine-readable form and, certainly, where I sit, we are far from ready. Why? Because the downward pressure on publishing costs (driven in part by tight library budgets, in part by funders reluctant to spend money on publishing) combined with authors’ continued fixation with how their content looks on a ‘page’ is pulling us away from being able to capture content in XML. One of my biggest challenges at the moment is to find a cheap way to reverse-engineer ‘camera-ready’ PDF files being produced by authors – and they do this because they feel it is easier and quicker than going back and forth with a publisher, plus they retain control. Ironically, by making it more expensive to capture their content in XML authors are unwittingly making it harder for their content to be discovered, accessed and ‘read’ (especially by machine) but, even when we explain this, we’re finding it really hard to convince authors that capturing content in XML is worth it. If anyone has found a way to either convince authors or to reverse engineer PDF files cheaply, do let me know.

Toby Green

Head of Publishing, OECD

Toby, a Google search on “pdf to xml converter” gives lots of hits on software and services claiming to do this. Do they not work?

In a word, no. But I should qualify this: yes, there are lots of suppliers offering conversion services, but they are expensive, especially when there is no standard PDF to work from (each author tends to do their own thing).

And to augment Toby's reply, this fundamentally _cannot_ work sufficiently well. With extremely few exceptions, PDFs have no structural semantics, just formatting (and things don't even necessarily come out in the right order, especially anything multicolumn), so these converters have to infer a lot ("that looks like a block quote . . . oops, no, it's a list") and get a lot wrong. And don't get me going on "what do you mean by 'XML'?" Okay, maybe well-formed XML, but that's not what folks have in mind when they say XML; what they really want is JATS, or DocBook, or XHTML, or TEI: four of many XML models that are not the same thing, don't use the same tags, and are typically designed for different purposes. So "PDF to XML" is useful, if at all, only as the barest starting point for getting to what you really want and need. (And yes, I realize that even raw text as well-formed XML is useful for text mining. I'll concede that point.) Whereas authoring in actual XML with consistent semantics: well, now we're getting somewhere. Which is why I'm such an advocate of XHTML5. Every blogging platform can (does!) create it, and every browser can display it, without any conversion. While what an author creates would still need work by a real publisher, that XHTML5 content is infinitely better, with meaningful structural semantics (and, by the way, it is ACCESSIBLE), than the PDFs that Toby so appropriately laments.
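To make the point about structural semantics concrete, here is a minimal Python sketch, assuming an article authored as well-formed XHTML. The fragment and the `outline` helper are hypothetical, for illustration only. Using nothing but the standard library's `xml.etree.ElementTree`, a machine can pull out headings, block quotes, and list items directly by element name, with no guessing about whether an indented block is a quotation or a list. Text extracted from a PDF offers no such handles.

```python
import xml.etree.ElementTree as ET

# Hypothetical article fragment authored as well-formed XHTML.
# The element names (section, h2, blockquote, ol, li) carry the structure,
# so nothing has to be inferred from fonts, indentation, or column layout.
XHTML = """<article xmlns="http://www.w3.org/1999/xhtml">
  <section>
    <h2>Methods</h2>
    <p>We sampled three sites.</p>
    <blockquote><p>Quoted passage from a prior study.</p></blockquote>
    <ol>
      <li>Collect samples</li>
      <li>Sequence samples</li>
    </ol>
  </section>
</article>"""

# Map a prefix to the XHTML namespace so XPath-style queries can match tags.
NS = {"h": "http://www.w3.org/1999/xhtml"}


def outline(xhtml: str) -> dict:
    """Extract structural pieces of a document by element name (hypothetical helper)."""
    root = ET.fromstring(xhtml)
    return {
        "headings": [h.text for h in root.iterfind(".//h:h2", NS)],
        # itertext() gathers text from nested children, e.g. the <p> inside <blockquote>.
        "quotes": ["".join(q.itertext()).strip() for q in root.iterfind(".//h:blockquote", NS)],
        "list_items": [li.text for li in root.iterfind(".//h:ol/h:li", NS)],
    }


if __name__ == "__main__":
    print(outline(XHTML))
```

The same queries would work on any document that follows the same model; that consistency, rather than XML syntax as such, is what makes the content machine-readable.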

BTW I was responding about PDF-to-XML conversion _software_, which folks too often think can just do the job with the push of a button. Obviously, as Toby points out, conversion services can do a fine job. That’s how a lot of the XML we have is made today.

The CCC’s new TDM program is precisely aimed at converting scholarly journal content into normalized XML.

I think the biggest threat to subscription publishers and libraries is question answering machines like IBM Watson. If I can get my scientific and engineering questions answered why do I need journals or libraries? Of course this assumes that answering questions is a major role of journals and libraries, which we do not actually know.

I think "delivering answers" is the current trend for discovery. In discussions with librarians about Discovery Services, I've heard that what we need is a Watson, because researchers want actionable information instead of a list. We heard from IBM's Frank Stein at the 2013 NFAIS Annual Conference about Watson as their grand challenge and the headway they've made in healthcare. At the time, they were using mostly unstructured text (I say mostly because of course they crawled PubMed), and the next priority would be to focus either more on multimedia or more on structured text. You can download his slides from NFAIS. At the same conference we also heard from Thomson Reuters about Eikon's focus on answers, specifically their system for analyzing and answering English phrases about financial instruments.

Indeed, Susan, and thanks for the lead. Watson uses a complex set of algorithms that do not require XML. And others are going down this road as well, as Watson's beating the Jeopardy champs was pretty convincing. Of course it also made some big mistakes, like not being able to name Toronto's airport, so I would not rush into medicine.

I suspect that a researcher's biggest question is "has anybody else done what I am thinking of proposing?" or some such. For example, applying a disease model to the emergence of a new scientific subfield (or microfield, as they are now called), which my team did. A Watson-like system might do very well with questions like this.

Whether these systems can actually discover anything, in the sense of original scientific discovery, I am much less sure of. A lot less.

An extreme reaction to that would be the claim attributed to Picasso: Computers are useless — they only give you answers.

“The information overload problem” is something academic professionals can cope with quite adequately with the search tools now available. While not perfect, these tools allow a professional to rapidly sift wheat from chaff. The real problem is not the information per se, but that too often the gate keepers who have seized the research high ground have a narrow, often ahistorical, view that has to be addressed when writing papers and grant applications. This hampers the sifting process.

For example, if the Internet had been available in Mendel's time, the professionals would have rapidly cottoned on to the importance of his work. But the Darwinian gatekeepers favored those bent on churning out examples of natural selection and demonstrating phylogenetic relationships. This was where the young men (they were mostly men in those days) went to advance their careers. It took three decades for Mendel's work to be "rediscovered."

Closer to modern times, there was the cult of Niels K. Jerne, who led the immunological community by the nose for decades. All this is now scrupulously documented in the biography by the historian Thomas Söderqvist, and in a tell-all account by a former disciple ("The Network Collective. The Rise and Fall of a Scientific Paradigm"; Birkhäuser 2008).

Donald Forsdyke

Introducer in the 1990s of a precursor to Scholarly Kitchen (Bionet.journals.note)

So much stuff and so little time. Of course, if you are a chemist with a budget, you have CAS! And if keyword searches are not sufficient, why haven't other publishers duplicated this service for other disciplines? No market, or, as the art collector so sadly commented, no Monet!

Yes, but (as always). Chemistry, at least traditional organic and inorganic chemistry, inherently lends itself to some pretty good standardized nomenclature and descriptive systems that other fields of study don’t.

I’d be surprised if CAS searches were as effective for physical chemistry as they are for organic and inorganics.

Isn’t TDM itself a partial response to the information overload problem? In the old days we had Reader’s Digest to deal with the problem. Maybe we need a new RD for today’s scholarly content?

Unfortunately, TDM is a very vague concept. It ranges from making new discoveries (yet to be demonstrated) to answering simple questions, which is indeed a possible help with the overload problem.

I have been doing research on the scale of subfields, and even very specialized research areas may produce hundreds or thousands of papers a year. For example, Google Scholar returns over 1,800 papers from 2015 with "quantum dots" in the title alone.

Very thought-provoking, Joe, thank you.

Loved the question “How will the nature of publications change when pattern-detecting machines develop into a partial or even full audience?” And I concur with Toby Green and Bill Kasdorf that the more we can use machine-readable standards and formats, from linked data to XML and semantic mark-up, the more we are engaging in the machine-enabled world with publications.

I am not so surprised at the warfare over OA with entrenched views on both sides. It ties into the old argument between publishers and libraries – publishers say libraries want everything for free – and libraries say publishers are price-gouging and double-dipping. Now you are adding scholars into the picture. They care about tenure, which means traditional publishing – and they care about immediate, broadest and ideally free dissemination, which means OA to them. It’s also the old “freedom of information / information wants to be free” debate.

It’s interesting that much of the OA debate is becoming more acute as national governments change funding policies and develop OA requirements, such as in the Netherlands – and the action of the VSNU there against Elsevier.

Finally, what strikes me most of all is that no matter WHO publishes in WHICH model, you need the publishing mechanism. And who is best equipped to handle it: publishers, libraries, authors, or new collaborations among them, such as library publishing? I argue it's important to continue the discussion on the value publishers provide.

Impressive number of YouTube videos, but note that emails sent is about 30x greater. We often overlook email as a form of sharing, because it is so pedestrian, yet it remains the backbone of Internet communication for most of us.

Moreover, the number of scientists and scholars is in the low millions, while the number of people is in the billions. The YouTube numbers, if true, require that some people spend most of their time there, quite a few actually. I doubt these are scholars, or serious students, so this has little to do with scholarly communication.