The world is full of strange associations. For instance, ice cream sales are highly correlated with the U.S. murder rate, but no one in their right mind would suggest that ice cream is responsible for violent behavior (that would give real meaning to “death by chocolate”). The underlying cause that connects murder with ice cream sales is heat. Heat makes people irritable and irrational and, under the right conditions, may lead to violent behavior. There is no theoretical basis for ice cream leading to violent behavior; the relationship is merely spurious.

The length of a paper’s reference list is also associated with the number of citations that the paper receives, writes Zoe Corbyn in this week’s Nature News (“An easy way to boost citations,” Aug 13), reporting on a study of more than 50,000 papers published in the journal Science. This association, writes Corbyn, provides evidence of widespread corruption in the citation process through a mutual “I’ll cite your paper if you cite mine” tactic designed to benefit both the citing and the cited authors. She writes:

“There is a ridiculously strong relationship between the number of citations a paper receives and its number of references,” Gregory Webster, the psychologist at the University of Florida in Gainesville who conducted the research, told Nature. “If you want to get more cited, the answer could be to cite more people.”

Webster’s findings are based on statistical correlation (a simple measure of the association between two variables), and his plots look pretty impressive. But there is something odd about this relationship. I’ve never consciously cited papers because they include long reference lists. In fact, if you ask me about some of my favorite papers, the ones I cite over and over again, I’d be at a loss to give you even a ballpark estimate of their reference list length. The number of references never enters my mind as a reason for citing an article. So why the correlation?

In the next few paragraphs, and with the help of my own dataset of Science articles published in 2007, I will demonstrate that this relationship between references and citations is entirely spurious. First I’ll replicate the association. Then I’ll make it go backwards. And for a final trick, I will make the association disappear completely. (If you are impressed, you can hire me for your kid’s next birthday party.)

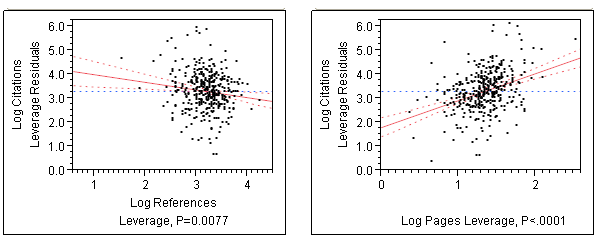

Ladies and gentlemen, on your right is my scatterplot, similar to Webster’s (p.10), showing the positive relationship between references and citations. Even for articles only two years old, the relationship is statistically significant (p<0.001), although it explains only 4% of the total variation in article citations.
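For a regression with a single predictor and an intercept, the share of variance explained equals the squared Pearson correlation between the two variables as they enter the model, so the 4% figure implies only a modest correlation:

```latex
R^2 = r^2 = 0.04 \quad\Longrightarrow\quad r = \sqrt{0.04} = 0.2
```

Statistical significance and strength of association are different things: with enough articles, even a correlation of this size can be highly significant.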

Now, let’s add another variable to this simple predictive model: article length in pages, since longer papers tend to cite more references.

As you’ll see in my second plot (below), adding the length of the article changes the direction of the relationship. The relationship between references and citations is now negative: more references are now associated with fewer citations, and this relationship is highly significant (p=0.0077).

By simply adding the number of pages to the model, I have turned a significantly positive relationship into a significantly negative one, and in doing so, I can also explain another 12% of the variation in article citations.
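Why would adding page count flip the sign? If longer papers both carry more references and attract more citations, the simple model credits references with the effect of length. The minimal sketch below reproduces the reversal on simulated data, not on the 2007 Science dataset; the log-scale variables, effect sizes, and noise levels are hypothetical, chosen only to illustrate the mechanism. The two-predictor coefficient is obtained via the Frisch-Waugh-Lovell theorem, so the sketch needs only Python's standard library.

```python
import random


def mean(v):
    return sum(v) / len(v)


def slope(x, y):
    """OLS slope of y on x (with intercept): cov(x, y) / var(x)."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    return sxy / sxx


def residuals(y, x):
    """Residuals of y after a simple OLS regression on x."""
    b = slope(x, y)
    mx, my = mean(x), mean(y)
    return [yi - (my + b * (xi - mx)) for xi, yi in zip(x, y)]


def simulate(n=5000, seed=1):
    """Hypothetical data: pages drive both references and citations."""
    rng = random.Random(seed)
    ln_pages, ln_refs, ln_cites = [], [], []
    for _ in range(n):
        p = rng.gauss(2.0, 0.5)                        # assumed log page count
        r = p + rng.gauss(0.0, 0.3)                    # longer papers cite more
        c = 1.5 * p - 0.5 * r + rng.gauss(0.0, 0.5)    # assumed citation model
        ln_pages.append(p)
        ln_refs.append(r)
        ln_cites.append(c)
    return ln_pages, ln_refs, ln_cites


pages, refs, cites = simulate()

# Model 1: citations ~ references (pages omitted)
b_simple = slope(refs, cites)

# Model 2: citations ~ references + pages. By Frisch-Waugh-Lovell, the
# reference coefficient equals the slope of citations on references
# after both have been purged of their linear dependence on pages.
b_adjusted = slope(residuals(refs, pages), residuals(cites, pages))

print(f"references only:      {b_simple:+.2f}")
print(f"references and pages: {b_adjusted:+.2f}")
```

With these assumed parameters the first slope is positive and the second negative, even though the data are identical in both models; only what else the model accounts for has changed.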

For my last trick, I’m going to make the relationship between reference length and citations completely disappear. To do this, I need to build a more realistic model. I’m going to add two more variables to the statistical model: the number of authors, and the subject section of the article.

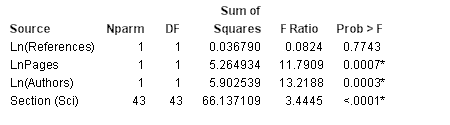

Science is a multidisciplinary journal, publishing articles in the biomedical sciences, the physical sciences, and, to a lesser degree, the social sciences. By including the subject of the article, we can control for differences in citation rates across disciplines. The section headings come straight from Science magazine; ISI’s Web of Science offers no convenient field for this. Below are my abbreviated model results (Ln denotes the natural logarithm of each variable).

Controlling now for pages, authors, and section, reference list length is no longer statistically significant. In fact, it behaves much like a random variable (p=0.77) and adds no information to the rest of the regression model. Page count and number of authors are still highly significant. As the sums of squares show, the article’s section is by far the strongest predictor of article citations in our model. Adding section to our reference model allows us to explain a full 46% of citation variance.
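The disappearing act can be sketched the same way. In the stripped-down simulation below, which is hypothetical and controls only for section (not pages or authors), three made-up sections differ in their typical reference list length and citation rate, but within a section the two are unrelated. Controlling for section with dummy variables is equivalent to subtracting each section's mean, and the section-only R² is one minus the within-section share of the total sum of squares.

```python
import random


def mean(v):
    return sum(v) / len(v)


def slope(x, y):
    """OLS slope of y on x (with intercept): cov(x, y) / var(x)."""
    mx, my = mean(x), mean(y)
    return (sum((a - mx) * (b - my) for a, b in zip(x, y))
            / sum((a - mx) ** 2 for a in x))


def demean_by_group(values, groups):
    """Subtract each group's mean; equivalent to controlling for group dummies."""
    sums, counts = {}, {}
    for v, g in zip(values, groups):
        sums[g] = sums.get(g, 0.0) + v
        counts[g] = counts.get(g, 0) + 1
    return [v - sums[g] / counts[g] for v, g in zip(values, groups)]


# Hypothetical sections: (mean log references, mean log citations)
SECTIONS = {"A": (3.0, 1.0), "B": (3.5, 2.0), "C": (4.0, 3.0)}

rng = random.Random(7)
section, ln_refs, ln_cites = [], [], []
for name, (mu_r, mu_c) in SECTIONS.items():
    for _ in range(1000):
        # Within a section, references and citations are drawn independently.
        section.append(name)
        ln_refs.append(mu_r + rng.gauss(0.0, 0.3))
        ln_cites.append(mu_c + rng.gauss(0.0, 0.5))

# Pooled slope: positive, driven entirely by differences between sections
b_pooled = slope(ln_refs, ln_cites)

# Within-section slope (section held fixed by demeaning): close to zero
b_within = slope(demean_by_group(ln_refs, section),
                 demean_by_group(ln_cites, section))

# Citation variance explained by section alone
m = mean(ln_cites)
ss_total = sum((c - m) ** 2 for c in ln_cites)
ss_within = sum(e ** 2 for e in demean_by_group(ln_cites, section))
r2_section = 1 - ss_within / ss_total

print(f"pooled slope:         {b_pooled:+.2f}")
print(f"within-section slope: {b_within:+.2f}")
print(f"R^2 from section:     {r2_section:.2f}")
```

In this toy setup the pooled slope is clearly positive, the within-section slope sits near zero, and section alone accounts for most of the simulated citation variance.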

So there you have it. The simple association between reference list length and citations is spurious, confounded by other simple explanations, such as the length of the paper and the section in which it was published. Mutual citation gaming may still occur in science, but this study cannot validate such claims.

Discussion

10 Thoughts on "Reference List Length and Citations: A Spurious Relationship"

Ha ha!

Awesome. There have been a lot of people trying to rationalize the more citations = more refs observation; far fewer saying it’s so much hogwash.

Excellent takedown of the relationship. Given that citation counts have a power law distribution, using an average of them makes little sense.

“given…a power law distribution, using an average…makes little sense.”

If only someone would shout that from the hills! Then we’d be spared many such articles in bibliometrics, economics and numerous scientific fields.
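The point about averages can be illustrated with a small simulation. The sketch below assumes, purely for illustration, that citation counts follow a continuous Pareto distribution with shape 1.5 (a stand-in for a power law, not fitted to any real data) and compares the mean with the median and with the share of the total held by the top 1% of papers.

```python
import random
import statistics

rng = random.Random(42)

# Hypothetical "citation counts": Pareto with shape 1.5 and minimum 1.
# The shape value is an assumption chosen to produce a heavy right tail.
counts = [rng.paretovariate(1.5) for _ in range(10_000)]

mean_count = statistics.mean(counts)
median_count = statistics.median(counts)

# Share of all simulated citations held by the top 1% of papers
top = sorted(counts, reverse=True)[: len(counts) // 100]
top_share = sum(top) / sum(counts)

print(f"mean:   {mean_count:.2f}")
print(f"median: {median_count:.2f}")
print(f"top 1% share of total: {top_share:.0%}")
```

In a tail this heavy, a handful of very highly cited papers pull the mean well above the median. Working with the natural logarithm of the counts, as the regression models in the post do, is one common way to reduce that influence.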

Although I cannot address the effects of section headings (which don’t go back to 1901) or number of authors (which are not yet coded in my data), I can say that controlling for log number of pages has very little effect on the log-log references-citations relationship, which goes from a correlation of .70 to a partial correlation of .69.
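A partial correlation like the one reported here can be computed either from the three pairwise correlations or as the correlation between the residuals of x and y after each is regressed on z; the two routes agree exactly. A minimal sketch on simulated data, with hypothetical variables standing in for log references (x), log citations (y), and log pages (z); none of it uses the commenter's dataset.

```python
import math
import random


def pearson(x, y):
    """Sample Pearson correlation."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def partial_from_r(r_xy, r_xz, r_yz):
    """First-order partial correlation of x and y controlling for z:
    (r_xy - r_xz * r_yz) / sqrt((1 - r_xz^2) * (1 - r_yz^2))."""
    return (r_xy - r_xz * r_yz) / math.sqrt((1 - r_xz ** 2) * (1 - r_yz ** 2))


def residualize(v, z):
    """Residuals of v after a simple OLS regression on z."""
    mv, mz = sum(v) / len(v), sum(z) / len(z)
    b = (sum((a - mz) * (c - mv) for a, c in zip(z, v))
         / sum((a - mz) ** 2 for a in z))
    return [c - mv - b * (a - mz) for a, c in zip(z, v)]


# Hypothetical log-scale data
rng = random.Random(3)
z = [rng.gauss(0, 1) for _ in range(2000)]                   # e.g. log pages
x = [0.3 * zi + rng.gauss(0, 1) for zi in z]                 # e.g. log references
y = [0.2 * zi + 0.8 * xi + rng.gauss(0, 1) for zi, xi in zip(z, x)]  # e.g. log citations

via_formula = partial_from_r(pearson(x, y), pearson(x, z), pearson(y, z))
via_residuals = pearson(residualize(x, z), residualize(y, z))

print(f"r_xy = {pearson(x, y):.2f}, partial r_xy.z = {via_formula:.2f}")
```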

http://fun-research.netfirms.com/ispst/results.pdf

Given that I am not an expert in this area, I would appreciate any constructive criticism interested readers of this blog can provide.

How about this? The papers that have the most references are usually review articles, which also have longer page counts and tend to be cited more often. So to some extent the relationship could be real, but meaningless. This whole discussion is a great example of a very simple principle that I learned in the first year of grad school:

A correlation, in and of itself, can NEVER prove cause and effect.

For what it’s worth, Nature’s coverage of the article notes that the study took into account review articles versus other types of articles and found that they were not driving the relationship:

And — contrary to what people might predict — the relationship is not driven by review articles, which could be expected, on average, to be heavier on references and to garner more citations than standard papers…

“By most metrics it is considered a pretty big effect,” says Webster. “There was a small difference with review articles but, in fact, it was in the wrong direction. On average, review articles actually showed less of a relationship than standard articles.”

Nice. I think a bigger problem with the number of references is the arbitrary limits that some journals (Nature comes to mind; I think Science does this as well) place on manuscripts. Authors are forced to remove citations to works that they actually used, or to move them to the supplemental data, which is a problem because they are then not captured by Web of Science etc. (I think).

The author of the paper in question has responded to the various comments here.