Author’s Note: I moderated a panel on text and data mining at last week’s annual meeting of the Association of American Publishers–Professional and Scholarly Publishing. What follows are my introductory remarks and some information about the panel discussion. On the panel were: John Prabhu, Senior Vice President, Technology Solutions, SPi Global; Mark Seeley, Senior Vice President & General Counsel, Elsevier; and Christine M. Stamison, Director, NorthEast Research Libraries consortium (NERL), at the Center for Research Libraries.

At the dawn of digital content distribution, there was great excitement about the possibilities of computerized information. In the 1960s, Ted Nelson and others theorized about, and wrote excitedly on, the potential of a network of interconnected digital texts. Nelson is a computer scientist and philosopher who, among other achievements, coined the term “hypertext,” as in the Hypertext Markup Language or HTML that we’re all familiar with. Many of this visionary’s ideas never came to fruition, at least not in the way he imagined them. Text and data mining, however, are outgrowths of his vision: a world of limitless possibilities in which increasing amounts of digital information are available online.

Let’s fast forward about 50 years to today. While the past 30 years of electronic content creation and distribution were essentially about recreating print, we are now beginning to see the implications and possibilities of having tremendous amounts of content available digitally. People are starting to move “Beyond the PDF,” to take a phrase from the conference of that name a few years back. We are starting to see the potential of moving content off the printed page and making it available in ways that go well beyond passively reading an article or a book. Researchers are beginning to expect to interact with the text or the underlying data that supports a paper, and now they have the capacity to do so.

There are several reasons for this. The first is the scale and availability of digital information that can be processed. There are numerous stores of petabytes of data around the web, and many of the organizational representatives reading this host them. The second is a network robust enough to disseminate that information. The FIOS line coming to my home is transmitting data at 150 Megabytes per second. At that peak data rate, my first computer’s hard drive would have been filled up 18 times per second. The third is the ubiquity of the computational power to crunch those data. Anyone who has a new iPhone in their pocket has immediate access to more computational power than all of NASA did when they landed human beings on the moon. So all of the infrastructure elements to provide text and data mining services exist and are ready to be used.

We also are beginning to see a variety of examples of the value that can be derived from text and data mining. These insights can be amazing, and I say this as one whose life nearly descended into the dark side of big-data analytics. My graduate degree program focused on Marketing Engineering, which is to say, data analytics for targeted marketing purposes. (A side note to parents: Never let your child grow up to be a marketing engineer, it’s just evil.)

One example of this is found in a 2013 article published in the Proceedings of the National Academy of Sciences that examined publicly available Facebook data. “Private traits and attributes are predictable from digital records of human behavior,” by Michal Kosinski and David Stillwell, both of Cambridge University, and Thore Graepel of Microsoft Research, demonstrated that a variety of notionally private traits can be predicted, not from obvious correlations but from less-obvious patterns discernible through large-scale data analytics. They found strong correlations between intelligence and “liking” “curly fries” on Facebook, and between male homosexuality and “liking” Wicked the musical.

Kosinski and Stillwell’s analysis was conducted on the basis of openly available data on Facebook. Imagine what can be done with the amount of data that is being held at the corporate level by Google, Facebook, Microsoft, or any of the dozens of data-analytics companies that you have never even heard of. A variety of research has shown that individuals can be identified with 90 percent accuracy using just three or four seemingly random data points. Last fall, I was at a technology conference where I listened to the CTO of one of those relatively unknown data-analytics companies. He described the 200,000-plus data points that the company currently tracks about Internet users. Data about your behavior exists, and is being mined, that can predict things about your future behavior you might not even know about yourself. For more on this subject, see this great TEDx talk by a University of Maryland computer science professor, Jen Golbeck.

Of course, we all get a bit creeped out by these types of marketing and analytics. They capture one’s attention. I reference them only to highlight the power of analytics on large amounts of data and the true power of these forms of enquiry. This predictive capacity is being used not just for marketing purposes or sociological analysis; it is being applied to all sorts of domains, from literature (changes in language use over time and computational analysis of poetic style) to chemistry/pharmaceuticals (computational drug repositioning), and from history (genomic history of slave populations) to agricultural sciences (predicting annual yield of major crops in Bangladesh).

The question for our community is, what is the role of publishers in this endeavor? How can publishers support the researcher community that is interested in undertaking these kinds of analyses with our data? What challenges do publishers and libraries face in provisioning and licensing these services? How can you do so in a way that does not appear, from a systems perspective, like wholesale theft of your company’s intellectual property?

—

During the panel, we discussed the licensing challenges, the expectations of users, some of the technical issues, and some of the future challenges and opportunities surrounding text and data mining (TDM). Mark Seeley focused on the business opportunities in supporting researchers’ work by provisioning TDM. Christine Stamison strongly advocated for the role of libraries in TDM and for the rights that libraries believe are covered by the existing licenses they have negotiated, or by the fair-use provisions of copyright law. Stamison focused on one library position, as articulated by the ARL Issue Brief on text and data mining: that algorithmic usage of content is covered under the fair-use provisions of copyright law and that special licenses specific to data mining of content aren’t necessary. While many contracts reference fair use, many licenses are ambiguous at best on whether TDM-related services are allowed. Although it wasn’t discussed, a related position statement issued by the International STM Association back in 2012 echoed many of the points raised during the session. That statement included a sample contract addendum that is about five pages long. The International Federation of Library Associations (IFLA) also has a statement on TDM, issued in 2013. On the panel, Stamison and Seeley agreed that simplicity is needed, especially around the legal and licensing issues, and that a clearer understanding of these issues can help resolve some of the contractual battles about TDM.

John Prabhu discussed the technical infrastructure and content creation elements of providing these services, from metadata to systems management concerns. The metadata Prabhu thought would be useful for improving TDM services included semantic tagging of content, matching content with existing ontologies, and TDM data provision. Stamison reported on an informal survey of some of her member institutions and their regular requests for APIs, access keys, or specialized access to crawl publishers’ content. Despite some public complaints to the contrary, Seeley argued, Elsevier and other publishers are working hard to provide authorized access when it is requested. Concern about appropriate use is among the challenges faced here, of course, but further technical challenges exist around systems stability and managing the volume and systems demands that TDM requests pose.

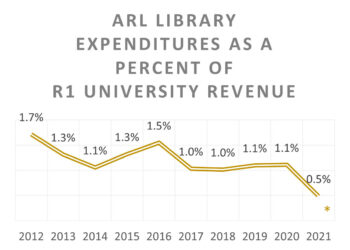

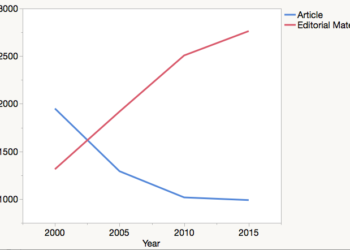

The session elicited a number of interesting questions, including one from Fred Dylla that caught my attention, about the volume of TDM requests and whether there was any quantification of the actual use of text and data mining. While relevant data are limited, several data points are worth considering about the scale of this issue. The first is the anecdotal reporting of demand from the library community, which is supported by Stamison’s informal survey. More-rigorous data collection of this sort is necessary. Another factor I’ve noticed, through my informal exploration of various time-based Google Scholar searches on these subjects in preparation for this talk, is the increasing number of scholarly papers that use meta-analysis, textual analysis, or data-mining methodologies. Again, some quantification of this would be a useful addition to our understanding of the scope of text and data mining in the community. Finally, a third data point that could help would be a study of the usage of publisher APIs that have been set up to support TDM. All three of these areas are ripe for research to improve our understanding of the scope of TDM at present and its growth in recent years.

Stamison also discussed the potential for common practice among publishers and libraries around the provision of these services. If the pace of requests is going to increase, and several indicators point in that direction, then more common practice across publishers and libraries will simplify this process for everyone. One example of this is the Crossref Text and Data Mining Service, but more reference services of this kind are necessary. Common APIs for TDM access, an accepted way for publishers and libraries to distribute access points or tokens securely to authorized patrons, standardized terminology around TDM access, and policies regarding capacity management are all areas where consensus could make the process of TDM more efficient for the community.
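To make the idea of a common access point a little more concrete, here is a minimal sketch of how a mining tool might pick out the full-text locations a publisher has flagged for TDM. It assumes metadata records carry a `link` list whose entries have `URL` and `intended-application` fields, modeled on the way Crossref work metadata describes full-text links. The function name, the sample record, the DOI, and the URLs are all invented for illustration, and nothing here reflects any particular publisher's implementation.

```python
def text_mining_links(work: dict) -> list[str]:
    """Return the URLs that a metadata record marks as intended for text mining.

    Assumes a Crossref-style record: a "link" list of dicts, each with a "URL"
    and an "intended-application" field. Records without links yield [].
    """
    return [
        link["URL"]
        for link in work.get("link", [])
        if link.get("intended-application") == "text-mining" and "URL" in link
    ]


# Hypothetical record for illustration only; the DOI and URLs are made up.
sample_work = {
    "DOI": "10.5555/example",
    "link": [
        {
            "URL": "https://publisher.example/fulltext/123.xml",
            "content-type": "application/xml",
            "intended-application": "text-mining",
        },
        {
            "URL": "https://publisher.example/article/123",
            "content-type": "text/html",
            "intended-application": "similarity-checking",
        },
    ],
}

if __name__ == "__main__":
    # Only the entry flagged for text mining is returned.
    print(text_mining_links(sample_work))
```

If every publisher exposed this kind of flag in the same place, a researcher's script would not need publisher-specific logic to find minable content, although access tokens and rate limits would still have to be agreed separately.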

Given its impact, the positive results, and the relative accessibility of content, computational power and approaches to mine these data, text and data mining will only grow as a research methodology. Publishers can and should do more to support these approaches, through tools, access and services. In some ways, this will help add value to the content publishers provide. In other ways, publishers’ allowance for TDM will help support the interconnected web of knowledge that was part of the original vision of what the internet could provide.

Discussion

6 Thoughts on "Text and Data Mining Are Growing and Publishers Need to Support Their Use – An AAP-PSP Panel Report"

At last summer’s SSP Librarian Focus Group in Chicago, one of the surprise (to me) results was that all the librarians reported interest in TDM on their campuses. I blogged this meeting here:

http://blog.highwire.org/2015/09/09/ssps-libraries-focus-group/

It is good to hear about this, since those of us on the Crossref TDM development group had not been picking up a lot of researcher interest, though not much market communication has been going on.

One important comment from the librarians was that the student use case requires an API that is easy enough to acquire, learn and use that it can support a project that fits within a semester or even a quarter. That means that things like licensing have to be handled ahead of time.

Platforms and publishers might be starting to see the signals of TDM in institutional usage, even in advance of a TDM API. It would look like Google and Bing crawlers. Institutional librarians would start to see this in their usage stats.

At the APE 2016 meeting in January in Berlin, several speakers talked about the uses of the data that would be available via TDM. In particular, Barend Mons (European Open Science Cloud) and Todd Toler (Wiley) talked about being sure we don’t break the data by trying to feed it through a publishing pipeline built for narrative/text. One provocative suggestion — a thought experiment perhaps — was that publishers publish the data, and make the narrative the “supplemental information”. I thought their two talks were important signals about how we will need to be “writing for machines to read” in the future:

http://blog.highwire.org/2016/02/08/augmented-intelligence-in-scholarly-communication-the-machine-as-reader/

I found this session very informative and helpful because my organization is trying to figure all this out now–because someone asked. As noted in the piece and your post, there was a disconnect between what Ms. Stamison was reporting and what the publishers in the room are seeing. She put TDM licensing issues and researcher requests at the top of the list of things librarians are trying to help patrons with. This may very well be true, but these researchers may not actually be trying to text mine from publisher sites all that much (Elsevier may be an exception). During and after the session, the folks I talked to were starting to learn about the issues, but very few publishers had ever gotten a request, either from an individual or from an institution looking to include terms in their subscription contracts.

Either way, TDM requests are going to start showing up and it would be prudent to have a plan in place. On the other hand, I can see why a publisher may resist spending time and money to build an API when no one has asked for it… yet.

Librarians like Ms. Stamison seem to be treating fair use as a justification for mass digitization and TDM as settled law. The Google Books case is now on appeal to the Supreme Court, so this story is not over.

Here is an upcoming TDM event of possible interest. Clearly the librarians are looking hard at TDM as a potential new service.

“JCDL 2016: 5th International Workshop on Mining Scientific Publications (WOSP 2016). The workshop is part of the Joint Conference on Digital Libraries 2016, which will be held between 19th and 23rd June in Newark, NJ, USA.”

https://wosp.core.ac.uk/jcdl2016/ and http://www.jcdl2016.org/