This year, Clarivate Analytics, publishers of the Journal Citation Reports (JCR), suppressed 20 journals, 14 for high levels of self-citation and six for citation stacking — a pattern known informally as a “citation cartel.” In addition, it assigned an Editorial Expression of Concern to five journals after it became aware of citation anomalies following the completion of the 2018 report. Suppression from the JCR lasts one year.

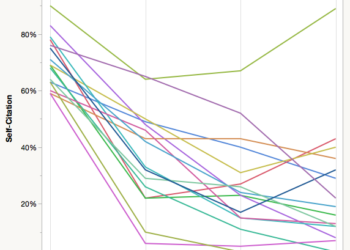

Titles are suppressed when either self-citation or stacking distorts the Journal Impact Factor (JIF) and rank of a journal such that it does not fairly reflect the journal’s citation performance in the literature. This can happen through self-citation, particularly when there is a concentration of citations to papers published in the previous two years, or when there is a high level of JIF-directed citations between two or more journals. The first citation cartel was identified in 2012, involving four biomedical journals. Other cartels have been identified in soil sciences, bioinformatics, and business. The JCR publishes annual lists of suppressed titles with key data and descriptions.
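To make the two self-citation measures concrete, here is a minimal sketch. The figures are invented for illustration, and the rank measure is only one plausible reading of "distortion of category rank"; it is not Clarivate's published method. All names (`JifInputs`, `share_outranked`, and so on) are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class JifInputs:
    """Hypothetical inputs for one journal in one JCR year."""
    numerator_citations: int  # citations this year to items from the previous two years
    self_citations: int       # the part of the numerator coming from the journal itself
    citable_items: int        # items published in the previous two years (JIF denominator)


def jif(j: JifInputs) -> float:
    """Journal Impact Factor: numerator citations per citable item."""
    return j.numerator_citations / j.citable_items


def jif_without_self_cites(j: JifInputs) -> float:
    """The same ratio after removing journal self-citations from the numerator."""
    return (j.numerator_citations - j.self_citations) / j.citable_items


def self_cite_share(j: JifInputs) -> float:
    """Fraction of the JIF numerator made up of self-citations."""
    return j.self_citations / j.numerator_citations


def share_outranked(value: float, category_jifs: list[float]) -> float:
    """Fraction of the other journals in the category with a lower JIF."""
    return sum(1 for other in category_jifs if other < value) / len(category_jifs)


# Invented example: 200 numerator citations, 112 of them self-citations.
journal = JifInputs(numerator_citations=200, self_citations=112, citable_items=100)
# Invented JIFs for the other journals in the same category.
category = [0.5, 0.7, 0.9, 1.1, 1.3, 1.5, 1.7, 1.9, 2.1, 2.3]

# Assumed reading of "rank distortion": change in category standing
# once self-citations are removed.
rank_shift = (share_outranked(jif(journal), category)
              - share_outranked(jif_without_self_cites(journal), category))

print(f"Self-cite share of numerator: {self_cite_share(journal):.0%}")
print(f"JIF with / without self-cites: {jif(journal):.2f} / {jif_without_self_cites(journal):.2f}")
print(f"Shift in category standing: {rank_shift:.0%}")
```

With these made-up numbers, self-citations supply 56% of the numerator, and removing them drops the journal from near the top of its category to near the bottom.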

Suppression for High Levels of Self-Citation

| Full Title | Category | % Self cites in JIF numerator | % Distortion of category rank |
| --- | --- | --- | --- |
| Aquaculture Economics & Management | Fisheries | 56% | 35% |
| Archives of Budo | Sport Sciences | 45% | 34% |
| Canadian Historical Review | History | 63% | 49% |
| Chinese Journal of International Law | International Relations | 51% | 33% |
| Chinese Journal of International Law | Law | 51% | 34% |
| Chinese Journal of Mechanical Engineering | Engineering, Mechanical | 48% | 27% |
| Eurasia Journal of Mathematics Science and Technology Education | Education & Educational Research | 62% | 36% |
| International Journal of American Linguistics | Linguistics | 64% | 36% |
| International Journal of Applied Mechanics | Mechanics | 48% | 36% |
| International Journal of Civil Engineering | Engineering, Civil | 59% | 27% |
| Journal of Micropalaeontology | Paleontology | 51% | 40% |
| Journal of Voice | Otorhinolaryngology | 47% | 45% |
| Maritime Policy & Management | Transportation | 54% | 31% |
| Pediatric Dentistry | Dentistry, Oral Surgery & Medicine | 48% | 44% |
| Pediatric Dentistry | Pediatrics | 48% | 33% |
| Psychoanalytic Quarterly | Psychology, Psychoanalysis | 70% | 46% |

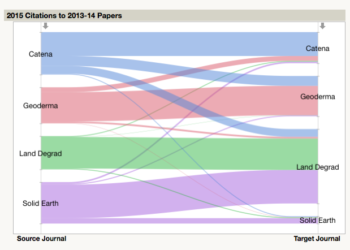

Suppression for Citation Stacking

| Recipient Journal | Donor Journal | % JIF Numerator | % Exchange to JIF Years |
| --- | --- | --- | --- |
| European Journal of the History of Economic Thought | History of Economic Ideas | 56% | 86% |
| Journal of the History of Economic Thought | History of Economic Ideas | 64% | 88% |
| Liver Cancer | Digestive Diseases | 40% | 95% |
| Liver Cancer | Oncology | 23% | 85% |
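The two stacking columns can be read as simple ratios. The sketch below assumes that "% JIF Numerator" is the donor's share of the recipient's JIF numerator and that "% Exchange to JIF Years" is the share of all donor-to-recipient citations that land in the two-year JIF window. Both readings are assumptions, the function names are hypothetical, and the counts are invented.

```python
def donor_share_of_numerator(donor_cites_in_jif_years: int,
                             recipient_numerator: int) -> float:
    """Fraction of the recipient's JIF numerator supplied by one donor journal."""
    return donor_cites_in_jif_years / recipient_numerator


def exchange_concentration(donor_cites_in_jif_years: int,
                           donor_cites_all_years: int) -> float:
    """Fraction of the donor's citations to the recipient that fall in the JIF window."""
    return donor_cites_in_jif_years / donor_cites_all_years


# Invented counts for one recipient/donor pair.
recipient_numerator = 160     # all citations counted in the recipient's JIF numerator
donor_cites_in_window = 96    # donor citations to the recipient's previous two years
donor_cites_all_years = 120   # donor citations to the recipient, any publication year

share = donor_share_of_numerator(donor_cites_in_window, recipient_numerator)
concentration = exchange_concentration(donor_cites_in_window, donor_cites_all_years)

print(f"Donor share of JIF numerator: {share:.0%}")
print(f"Exchange concentrated in JIF years: {concentration:.0%}")
```

A high value on both measures means that one journal supplies much of the recipient's Impact Factor and that its citations fall almost entirely on the years that count toward it. A single broad survey article in a small field can produce the same pattern.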

Journals Receiving an Editorial Expression of Concern

- Nanoscience and Nanotechnology Letters

- Journal of Biobased Materials and Bioenergy

- Journal of Biomedical Nanotechnology

- Journal of Nanoscience and Nanotechnology

- Bone Research

The JCR has a public document explaining the guidelines it uses to evaluate journals for suppression. However, as illustrated through reverse engineering previously suppressed titles, threshold levels are set so high that a journal that increased its Impact Factor nearly five-fold and moved to first place in its subject category can escape suppression. Nevertheless, suppression from the JCR has been shown to drastically reduce levels of self-citation following reintroduction. If the JCR were a traffic cop, it would appear to be stopping only the most reckless drivers.

Discussion

8 Thoughts on "Impact Factor Denied to 20 Journals For Self-Citation, Stacking"

In the case of the two history of economics journals, this can be explained easily. A single survey paper in History of Economic Ideas, which provides an overview of the discipline's literature over the past two years, has just been published, and it cites a lot of papers from the Journal of the History of Economic Thought and the European Journal of the History of Economic Thought (as well as other field journals). It is not related to any dishonest academic practice but is just a question of size. History of Economic Thought is a small field. There are only one or two hundred scholars at our annual conferences. So if you publish a survey, there is a chance that this survey is going to account for a very significant share of all the citations in a single journal. This is what happens when a field is in relative decline. This is also what happens when an algorithm-based citation index owned by a private company acts as a cop on science. Removing that single survey article from the citation count would have avoided all the trouble, but to do this, you need to have a brain first. This is evidence that bad ranking and indexing practices, not just bad scientific practice, can seriously harm a struggling subfield.

Correct. This is because neither Clarivate, nor COPE, nor TSK, nor any scholarly publishing network really understands or has representation from HSS/Business/Economics subjects. They take their precedents from the natural sciences and generalize their policies to all subject areas. For example, discussion papers and working papers have been common practice in HSS areas for decades and lead to very important development of individual research papers. But the natural sciences folks now call these “preprints” and have new rules for them just because they weren’t common in their fields. Now those rules apply to all fields.

The natural sciences boast of the “double blind” review process as an innovation, but HSS has had it as a standard for at least 40 years. Still, there is no representation from HSS in these debates or calculations or “editorial notes”.

A university faculty has instructed their staff to do exactly what is reported in your article, i.e. citation stacking. This would have helped boost their ranking!

It was picked up by “Retraction Watch” some time ago.

https://retractionwatch.com/2017/08/22/one-way-boost-unis-ranking-ask-faculty-cite/

As Editor of History of Economic Ideas, the journal that published the survey that seemingly caused the citation boost of the two suppressed history of economics journals, I can confirm that I am already in touch with the staff of Clarivate Analytics to sort the thing out, possibly by titling our annual survey in a way their algorithm may recognize in order to properly exclude the survey itself from citation counts. Needless to say, neither the JHET nor the EJHET bears any responsibility for the incident. I have expressly asked Clarivate to revise their decision against those two journals and re-admit them to the list.

Nicola Giocoli (University of Pisa)

Archives of Budo is the sole journal devoted to martial arts and combat sports. For 13 years, no other martial arts and combat sports journal has achieved an Impact Factor, so increased self-citation by Archives of Budo was inevitable.

If you have a look at previous Journal Citation Reports, you will see that since its first year of JCR inclusion, Archives of Budo has had high self-citation rates, and this was never called into question by the editorial and management team for the Journal Citation Reports.

It also seems that the family of journals (Archives of Budo, Archives of Budo Science of Martial Arts and Extreme Sports, Archives of Budo Conference Proceedings) could have contributed to the wrong interpretation.

P.S. Unbelievable that, out of 12,301 journals, there has been no thorough investigation into 20 journals that would give them a chance to defend themselves.

In a small field not used to many citations of current works and without substantial citations coming from outside, any such survey will produce an increase in citations that will be flagged by the quantitative indicators of Clarivate Analytics. The company adopted practices that are most harmful for smaller fields such as history of economic thought (HET). Moreover, it did not directly contact any of the editors of the 3 HET journals suppressed from the list, and did not give journals or publishers advance notice asking for clarifications before suppressing journals. Intentions aside, the suppression does incriminate journals, and discriminate against them, as guilty of malpractice.

The editors and the publisher of HEI have already appealed to Clarivate, as did Cambridge University Press. The editors of EJHET and JHET wrote a joint letter that was sent to Clarivate on July 2nd and is now publicly available at the HES website: https://historyofeconomics.org/jhet-and-ejhet-editors-appeal-against-impact-factor-suppression/

We hope that this public statement not only clarifies the matter but also can be accessible to agencies and governmental bodies that do research evaluation in different countries and are going to use the 2017 JIF in their processes.

I am writing this comment as a ‘young’ historian of economic thought, as Clarivate Analytics’s decision to deny the impact factor to three history of economic thought (HET) journals for ‘citation stacking’ represents a serious and immediate threat to our small and (for this very size issue) constantly besieged field. However, besides the understandable discomfort and concern for this situation, I feel a quantum of hope which comes from the awareness that something unavoidable is happening, and that we will have the chance to put such an inevitable threat behind us soon and for good. I will try to be as clear as I can concerning what is ‘necessary’ in what is happening: it is inevitable that at some point every academic field will experience a clash between algorithmic assessment procedures and the most fundamental common sense. Now, what gives me hope is that these situations can act as healthy sanity checks for our assessment practices. What gives me further hope is that, in this case, such a big, gross, and frankly ridiculous violation of reasonability has been made that it cannot be simply overlooked. Therefore, this annoying situation may be a real chance that we have to expose and fix some fundamental contradictions arising from the narrow use of bibliometry.

There is a point that must be clarified concerning the true role played by the journal History of Economic Ideas (HEI) in this story. The contradictions in the assessment procedures would not have emerged had HEI not tried to introduce an important innovation to the historiography of our field. This case must make us understand that algorithms’ alarm bells ring, unacceptably, with the same sensitivity both in the case of fraud and in the case of innovation. To be even clearer, HEI and our field are going to be penalized PRECISELY BECAUSE HEI has tried to introduce an innovation to the field. Needless to say, we are touching on an incredibly serious point for scientific research. Algorithms are programmed to detect deviations, but we all know that scientific research must sometimes be inherently deviant. If we agree on this diagnosis, we should equally agree that HET has so far escaped any bibliometric sanction just because it has avoided talking extensively about its current position. Had HET reflected on itself more seriously before, the flag of ‘citation stacking’ would have emerged much earlier. This is the huge issue we all have to face.

Many are talking about censorship. But the case may be even worse. While censors are at least concerned with ideas, here we are experiencing methodological prohibitionism. And, the effects of this methodological censorship are huge. Beyond the mere question of impact factors, what is at stake is the possible loss of much needed intellectual work. Wouldn’t it have been an intellectual loss had the 2017 survey article that caused the bibliometric alarm, ‘From antiquity to modern macro: an overview of contemporary scholarship in the history of economic thought journals, 2015-2016’ (https://store.torrossa.com/resources/an/4232943), not been published? And what about the fate of the next scheduled survey, ‘New Scope, New Sources, New Methods? An Essay on Contemporary Scholarship in History of Economic Thought Journals, 2016-2017’ (https://papers.ssrn.com/sol3/papers.cfm?abstract_id=3175929)? Read these surveys and decide if they are not only very serious scholarship, but much needed contributions for our discipline’s advancement and self-awareness (I have not yet had the chance to read the other survey in the pipeline, but I guess that given the authors’ profiles it is excellent scholarship as well). And consider that what is good in those contributions stems from the fact that the authors’ thoughts have been elicited by the appointed task.

The bottom line of what I am saying is that there is very much at stake in this situation: the very survival of an entire field of study, the intellectual and methodological freedom for the practitioners of that discipline, but, even more generally and crucially, the way in which any scientific field may be harmed by algorithmic assessment. There is always much talk about ‘machine learning’. Well, today machines have failed. So, please, Clarivate Analytics: ‘teach’ them.

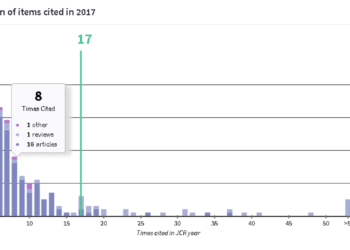

The recent discussion about the alleged “stacking activity” by history of economics journals, which resulted in @Clarivate declining to generate the IF of three journals, did not touch on a methodological issue which I find obvious. Every user of data knows that outliers are mostly responsible for inaccuracy in summary statistics about a data set, and that the way to account for their influence is to exclude them from the data set. Apparently, the issue at stake consists of a single article published in one journal that heavily influenced the statistics of all the journals publishing a high percentage of history of economics contributions, two of which were excluded from the IF list. Why did @Clarivate not just remove the outlier from their citation statistics, instead of including it and then deciding whom to punish? This is incomprehensible, and methodologically faulty. Note that there are other journals whose IF is substantially modified by the outlier, but which are still listed. No user of data would ever accept a statistic so heavily influenced by a single item: indeed, the number of journals receiving an inaccurate IF is much higher than the two journals excluded because of the publication of a single article by the third journal. This seems to be a straightforward case, depending on just one item: if @Clarivate is unable to act accurately in this simple case, what is happening in more complicated ones? Why should statistics drawn from such inaccurately processed data be considered reliable?

(Conflict of interest: I have a forthcoming article in one of the two journals excluded, and my last published paper is in the third journal, although it is not the outlier…)