Editor’s Note: this is a guest post by Rob Johnson and Andrea Chiarelli of Research Consulting. Building on the findings of a recent study, ‘Accelerating scholarly communication: The transformative role of preprints’, commissioned by Knowledge Exchange, they consider how publishers are responding to recent growth in the uptake of preprints.

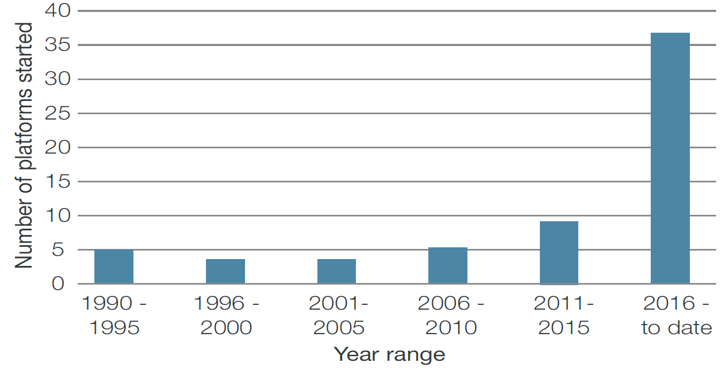

Preprint servers have been growing explosively over the last ten years: over 60 platforms are currently available worldwide, and the sharing of research outputs prior to formal peer review and publication is increasing in popularity. Preprint servers have a long history in fields such as high energy physics, where extensive collaboration and co-authorship are the norm, and economics, with its lengthy review and publication process. Services like arXiv and RePEc emerged in the 1990s as a means of enabling early sharing of research results in these disciplines, and have co-existed with traditional journals for decades.

Other disciplines have been much slower to embrace the posting of preprints, but this is now changing rapidly, as Figure 1 shows. The ‘second wave’ of preprint servers has been gathering pace in the last three years and raises afresh the possibility that preprints could disrupt traditional scientific journals.

Why are authors engaging with preprints?

Over the last 12 months we’ve been working on a project commissioned by Knowledge Exchange to explore the role of preprints in the scholarly communication process, speaking with researchers, research performing organizations, research funding organizations, and preprint service providers. Our interviews with authors indicate that early and fast dissemination is the primary motive behind preprint posting. In addition, the increased scope for feedback seems to be highly valued, with much of this interaction taking place via Twitter and email, rather than via direct comments on preprint servers. Early career researchers see particular advantages: the inclusion of preprints on CVs or funding applications enables them to demonstrate credibility in a field much sooner than would otherwise be the case.

The extent to which these factors lead to widespread uptake of preprints varies by discipline, however. The ‘second wave’ of preprint servers is developing quickly in areas such as biology, chemistry and psychology, and early adopters and nascent preprint servers can now be found in virtually all scholarly communities. Nevertheless, the level of adoption depends on a number of socio-technological factors, including individual preference, community acceptance of open scholarship practices, publisher and funder policies, and existing scholarly communication infrastructure.

In fields with a longstanding preprint culture, such as economics, scholarly practice has evolved to the point where ‘the working paper [on RePEc] is downloaded many times more than the article’. Similar patterns have been observed in mathematics, where arXiv-deposited articles appear to receive a citation advantage but see a reduction in downloads, and there are early indications of citation and altmetric advantages to biological science papers deposited in bioRxiv. For authors in other fields, however, evidence of the benefits of preprint posting remains largely anecdotal, and readers continue to privilege the version of record. In April 2017, Judy Luther asked “How long will it take to reach a tipping point where the majority of academic review and hiring committees recognize preprints as part of their body of work?” Looking at the current state of play, it appears this tipping point remains a long way off.

The evolving role of academic publishers in a preprint world

How publishers respond to preprints will depend in large part on whether the recent rate of growth continues. Nobody knows exactly what the future holds, but let’s consider three possible scenarios:

- Turn of the tide: The second wave of preprint servers fades, and preprints remain a major component of scholarly communication only in the fields where they are already firmly established, e.g. those served by arXiv and RePEc.

- Variable adoption: Preprints grow in some additional fields, such as those within the scope of ChemRxiv and bioRxiv, but not all.

- Preprints by default: Preprints grow in all fields (at different paces) and are accepted by the research community at large.

Recent trends in life sciences could lead us to think the second scenario is well on its way to becoming a reality. Meanwhile, the proliferation of new preprint servers means default adoption of preprint posting is now at least a viable possibility for virtually all researchers. However, the potential for an upward growth trajectory to go into reverse can never be discounted, as the recent decline in megajournals’ publishing volumes shows. The rapid increase of preprints in life sciences has gained much attention, but it is instructive to note that the number of biology preprints posted in 2019 relative to new publications in PubMed stands at just 2.3% – a far cry from a default position.

Are preprints disruptive?

Joshua Gans, author of ‘The Disruption Dilemma’, observes that established firms tend to hold back from reacting to disruptive innovations for two reasons: uncertainty (“will preprints ever really threaten subscription revenues?”) and cost (“can we afford to develop new services and workflows to accommodate preprints?”). He also argues, however, that many businesses do find ways of managing through disruption, and outlines three key strategies for doing so:

- Beat them – attack by investing in the new disruptive technology.

- Join them – cooperate with or acquire the market entrant.

- Wait them out – use critical assets that new entrants may lack.

The extent to which publishers are adopting each of these strategies provides a useful barometer of preprints’ potential for disruption.

Beat them

One response to disruptive events is for incumbents to try to replicate, or improve upon, the new market entrants’ approach. As Roger Schonfeld has noted, competition for content is moving steadily upstream in the research workflow. In this context, it is striking to note how few publishers have sought to develop their own preprint servers, or to replicate the functionality of new platforms such as F1000 Research, which enables the sharing of articles prior to and under open peer review as part of its publication workflow.

One explanation is that most preprint servers have not in fact entered the publishing ‘market’ at all. One of the few publishers to develop a preprint server, MDPI, chose to establish preprints.org as a free and not-for-profit service, and community-owned and/or scholar-led initiatives remain the dominant model. While this raises questions over the scalability and sustainability of some services, it has not prevented rapid growth occurring in the 25 or so preprint services hosted by the non-profit Center for Open Science, for example. Most of the funders, librarians and researchers we consulted indicated a strong preference for preprint servers to remain not-for-profit and community governed. With existing preprint servers representing neither an immediate threat to subscription revenues, nor a source of significant revenues from other sources, the case for publishers to invest aggressively in replicating their functionality appears weak.

Join them

While investment can forestall disruption, another way to achieve this result is by acquisition. Elsevier’s 2016 purchase of SSRN is perhaps the clearest example of this in the field of preprints, and it has since sought to leverage the technology to launch additional services, such as the Chemistry Research Network (ChemRN).

With so many preprint servers provided by not-for-profit actors, though, acquisition is not always an option. ‘Joining them’ in this context is therefore more likely to be achieved through strategic partnerships and alliances. This has become a recurring theme in the last couple of years, with recent examples including:

- The American Chemical Society, Royal Society of Chemistry and German Chemical Society (GDCh) partnering with Figshare to launch ChemRxiv within a week of Elsevier’s 2017 launch of ChemRN. As of August 2019, they were joined by the Chinese Chemical Society and the Chemical Society of Japan as co-owners of the service.

- Springer Nature partnering with Research Square to develop its In Review service.

- PLOS and Cold Spring Harbor Laboratory announcing a partnership to enable the automatic posting of manuscripts to the bioRxiv and medRxiv preprint servers.

- Emerald Publishing partnering with F1000 to develop Emerald Open Research.

We can expect more such partnerships to emerge in the coming months and years. Meanwhile, those who lack the scale to negotiate bilateral relationships of this nature may come to be served by emerging ‘marketplaces’ like Cactus Communications’ PubSURE, which aims to connect preprints, authors and editors on a single platform.

Wait them out

It is rare that a disruptive innovation allows an entrant to build out all of the key elements in a value chain. In this respect little has changed over the last decade. Preprint servers cannot replicate the functions of validation, filtration, and designation served by scientific journals, nor has the ‘unbundling’ of publishing progressed to the point where services such as portable peer review or overlay journals can address these needs at scale.

Journals with strong brands, or in fields that have yet to show much interest in preprints, may therefore find that a wait-and-see strategy serves them best. It remains unclear how many of the new crop of preprint servers will be able to develop a sustainable business model, and the recent decision by PeerJ to stop accepting new preprints lends credence to a cautious approach. Having established the first dedicated services for preprints in biology and life science, PeerJ’s management team have now opted to focus solely on peer-reviewed journals – effectively conceding the territory to not-for-profit preprint servers such as bioRxiv. As PeerJ’s CEO Jason Hoyt observes: ‘What we’re learning is that preprints are not a desired replacement for peer review, but a welcome complement to it.’

The second wave of preprint servers has much to offer the researcher community, but those expecting it to wash away existing scientific journals are liable to be disappointed. In our view, the biggest threat to academic publishers will come, not from preprint servers, but from other publishers that do a better job of addressing authors’ desire for accelerated dissemination, feedback and scholarly credit. This might be achieved through improved internal workflows, acquisition or strategic partnerships. In each case, seeing the integration of preprints into the research workflow as an opportunity, rather than a disruptive threat, is likely to offer publishers the best hope of continuing to identify and attract high-quality content.

The authors gratefully acknowledge the assistance of Phill Jones in the preparation of this post and the support of Knowledge Exchange, who commissioned the study ‘Accelerating scholarly communication: The transformative role of preprints’. The views and opinions expressed here are those of the authors alone.

Discussion

19 Thoughts on "The Second Wave of Preprint Servers: How Can Publishers Keep Afloat?"

Appreciate this analysis very much. A missing piece for me in understanding … what are you counting as a platform vs a service?

Hi Lisa, as you rightfully point out we have been a little loose in the deployment of these terms in this post, mainly for stylistic purposes to avoid constantly repeating ‘preprint servers’ (my preferred term). For what it’s worth, I defined preprint servers in the STM Report 2018 as ‘the online platforms or infrastructure designed to host preprints. They may include a combination of peer reviewed and non-peer reviewed content, from a variety of sources and in a range of formats.’ (https://www.stm-assoc.org/2018_10_04_STM_Report_2018.pdf, p.180). As this broad definition reflects, preprint servers are pretty heterogeneous in both how they work and what content they accept, nor is there anything like universal agreement on what constitutes a preprint. This is something we discuss further in the report which informed this post, and was also addressed here on TSK a couple of years ago: https://scholarlykitchen.sspnet.org/2017/04/19/preprint-server-not-preprint-server/ Rob

Interesting read. I find myself stuck on the statement that pre-prints can “demonstrate credibility in a field much earlier”. Do pre-prints undergo the same basic analysis for production that published articles go through (other than peer review)? Outside of the peer-review process (which is obviously valuable), publishers also invest in other production mechanisms to ensure quality, such as scanning the articles for fraudulent (i.e., stolen) images and graphs, etc. As well, pre-prints assume that other researchers in the field will review these papers and leave comments like what the peer-review process provides during publication. This could lead to the dissemination of pseudo-science, which we are all so often trying to combat but cannot detect easily without the help of publishing production. The Scholarly Kitchen has said time and again that researchers will most likely not engage with research in this way, as they are so tired of doing free work, and in this case they get no credit for their work (as opposed to being able to document being a Reviewer or Editor). I agree with this article that it would take a huge overhaul in the philosophy of the publishing world (including the researchers) for these preprint services to take over.

I can understand the value of pre-prints to the author. I can see value in finding partners and funding. But I just don’t see how pre-prints have a place in the scientific record.

The journals I manage have acceptance rates around 35%. What happens when pre-print articles never get published in a peer-reviewed journal? Giving pre-prints any long-term weight could lead to serious abuse by junk scientists.

Furthermore, a large percentage of articles accepted required major revisions for missing data, refinement of analysis and conclusions, etc. What happens when an item in a pre-print is no longer included in the version of record?

Making pre-prints temporary could make them more attractive to publishers and to more scientific communities. Once the version of record is published, the pre-print is removed from the server. Instead of a manuscript, the pre-print DOI provides a notice of and link to the version of record. This notice probably should state that the versions could be quite different.

If the pre-print is never published, the manuscript should be taken down in a reasonable time, say 18 months after submission, depending on the field of study. Here the DOI should link to a note saying the manuscript was not published in a peer-reviewed journal within the established timeframe.

I believe a system like this will allow authors to achieve early-research goals of feedback, collaboration and funding while maintaining the integrity of the scientific record.

Everyone knows that preprint publications have not been formally peer-reviewed and thus may have been contributed by “junk scientists.” That is hardly an argument for temporizing preprint publications. Indeed, one of the attractions of submitting a preprint publication is that it has permanency. Whatever vagaries determine that final peer-reviewed versions (or parts of those versions) never get published, preprint publications provide permanent “versions of record” of the thoughts held by scholars at a unique point in time. Apart from its historical interest, a major turning point may be the day when a preprint publication entrances a Nobel committee.

But everybody doesn’t know: journalists don’t seem to; policy makers not always. Yet these are key mechanisms by which research is converted to public awareness and policy. That should give us pause for thought. Especially as some preprint platforms/servers ever more resemble the online packaging of journals.

And why is a pre-print server a guarantee of permanency? Are they paying in CLOCKSS? If the money runs out who keeps the server turned on? Preprints are not immune to the cash flow laws all enterprises must eventually obey.

Donald: it’s already happened. Most of the Physics prizes from now on (and the past few also) will be based on work people first read via the arXiv. Indeed, the scientific background released by the Nobel committee for this year’s award cites the arXiv: https://www.nobelprize.org/uploads/2019/10/advanced-physicsprize2019-3.pdf

Or take a look at the LIGO (last year) publication list: https://www.lsc-group.phys.uwm.edu/ppcomm/Papers.html

Perhaps the turning point is at hand? Publishers should be concerned. There comes a career stage in authorship when, because of pressures on time, it is easier to leave a publication in preprint form and move on. A major pressure on time is the writing of grant applications. If publishers would add their voices to those pressing for reform of grant peer-review processes, they might find an increase in the number of authors prepared to move beyond the preprint stage.

Preprints are useful, if only to establish publication priority, but to the extent that they don’t offer validation, filtration, or designation, I think it’s a mistake to think of them as part of the scientific record. I suggest thinking of them as part of the scientific process. They’ve always been that, when they were circulated informally among colleagues and/or presented at conferences.

If the research community will rely on preprint posting to establish priority, then preprints probably shouldn’t be removed. If preprints will be part of the scientific process, then it is important that they point to the version of record, and unfortunately this is something that not all preprint servers do. BioRxiv does do this automatically on the fly via web search. SSRN relies (I think) on the author to enter the VoR citation. But some notable preprint servers do not offer a clear way to link to the VoR nor do they encourage it, and this is a disservice to the community.

I would consider OSF to be one of those notable preprint servers — despite proliferation of new services on the platform, there does not currently appear to be a way to automatically link preprints to a VoR. I would be curious to hear where a feature like this falls on the Center for Open Science’s roadmap for OSF, especially given that they plan to start charging services beginning next year.

I know at least one prominent journal editor brought this need to OSF’s attention 3 years ago. So it would be good to know whether and where it is in OSF’s plans.

It isn’t mentioned above but Cambridge University Press decided to take the “join them” approach. We announced our early and open research platform back in August (press release here: https://www.cambridge.org/about-us/media/press-releases/cambridge-announces-open-research-platform) and APSA Preprints, our first partner’s preprint server for political science, launched at the end of August: https://preprints.apsanet.org/engage/apsa/public-dashboard.

Thanks for adding this, Brigitte. It’s particularly interesting to see in your announcement an emphasis on going ‘beyond content dissemination to provide services that support and encourage researcher collaboration and better connect different parts of the research lifecycle’. It seems to me that the successful development of these kinds of services, built on and around preprint servers but offering added value to researchers and potentially other stakeholders in the landscape, is going to be crucial to the long-term sustainability of preprint servers.

Dear Colleagues,

Thank you for the hard work the SK Chefs provide, and for the thoughtful guest post today.

Given how balanced this blog usually is, I’m surprised by how many one-sided posts I’m seeing lately on the topic of preprint servers.

At least in clinical medicine, serious concerns about patient safety have been raised such as this editorial policy adopted by four journals in one specialty: https://www.ncbi.nlm.nih.gov/pmc/articles/PMC6345289/

Journalists write about preprints and disseminate work in ways that will confuse the public, who are not well equipped to discern between work that has been reviewed and work that has not been; for example, see: https://www.nature.com/articles/d41586-018-05789-4

In addition, thoughtful observers — including a former Chef in the Scholarly Kitchen — have raised thoughtful and principled concerns about them, such as here: https://thegeyser.substack.com/p/biorxiv-preprints-in-the-press?token=eyJ1c2VyX2lkIjoxODQ2OTExLCJwb3N0X2lkIjoxMzQ1MjQsIl8iOiI2ak5HNCIsImlhdCI6MTU3MTI0Mjc5OSwiZXhwIjoxNTcxMjQ2Mzk5LCJpc3MiOiJwdWItMzM0NCIsInN1YiI6InBvc3QtcmVhY3Rpb24ifQ.sxoHjCgllQsCVVfu9kpY3uZyzqn2j51Ke98orJqRSDg

I’m not saying that the individuals whose work I’m listing above (including my own) are necessarily in the right. What I am asking is that the SK strive for a little more balance on this important topic.

Many thanks,

Seth S. Leopold, MD

Editor-in-Chief

Clinical Orthopaedics and Related Research

It’s eerie to overhear, in effect, publisher trepidation about the preprint surge. The nonprofit, community-governed world of preprints is an enigma for the big for-profits, since they can’t easily skim 37% off the top.

Thanks for the different viewpoints. I am personally comfortable with unpublished work being out there and understanding it might not be rigorously checked yet or ever. Perhaps the general public needs more visibility of this possibility. Like Donald I don’t think there is a need to take down the pre-print versions as long as they are clearly identified as such.

Thank you Jeff and Valerie. But as for “unpublished work,” please Valerie make a distinction between “publication” and “formal publication.” Whereas the NIH paper preprints in the 1960s were limited in circulation, modern online preprints are freely circulated – they are made public. They are published. They are “preprint publications.” Final peer-reviewed versions can be referred to as “formal publications” in that they have gone through formal review processes.

Sure happy to agree with clarification of items being ‘formally published’ or not.

At Charleston this week Kent Anderson gave a series of arguments critical of preprints. Apparently these will appear (or have) in Learned Publishing, if I recall correctly. It was part of a lively and fun debate, one in which both parties engaged very respectfully. That’s refreshing.

I’m not convinced Mr. Anderson’s criticisms about preprints extend to all disciplines (and presumably he does not think the criticisms apply to all fields with the same weight). But–and I will as always happily stand corrected–it seems his arguments hinge a lot on concerns that the public will be misled by what they find in preprint servers. That could just as well be an argument for not publishing anything, including science popularizations, or newspaper articles. That’s not a path we want to go down, I think.

Mr. Anderson’s points in the debate seem to challenge some of the autonomy on the reader’s part that we should presume when they read *anything*. If journalists are duped by the preprint format, that is a failure to do their jobs. It’s not the task of preprint servers to police postings in anything more than a reasonably light way. They should deliver up *scholarly* reflections and preliminary data, and so need a bit of vetting, but no more than is proportioned to their role in the scholarly system, namely initial and fast disclosure of results, much as the patent system accommodates in the scholarly system.

The bad journalists will always be with us. Ultimately it’s caveat lector!–and has been since the advent of the printing press, and much longer before that.

The level of scrutiny that medRxiv (in one accounting at the conference) provides should be a model for all preprint servers. “Peer review light”. I have an economist friend who used to vet preprints for SSRN; not sure if SSRN still does this or not, but it’s certainly workable.

A nominal fee could be imposed for posting to a preprint, as a source of funding. This would more importantly create a barrier to impulsive posting that might (like a sort of light peer review) create a barrier to posting trash research of the kind that vexes Mr. Anderson, not to mention all of us.

I really like the wild frontier, the free for all marketplace atmosphere, that preprints promise, plus how it offers to help contract the bloated market of peer-reviewed journals. They will not and should not replace peer-reviewed journals but will enable a contracted journal space, with journals moving more toward integrative highly critical review syntheses of research published in preprints–or in other peer-reviewed journal articles. Some people think this role for journals just amounts to an overlay-to-preprints mode. I disagree. It could play this role but a lot more too. Critical review of anything disclosed anywhere (even conference poster sessions, or proceedings), as long as it contributes to scholarship and the pursuit of truth, is fine. That term “overlay” now confuses issues.

Incidentally, I talked to the head of the NLM library while at Charleston. She agreed with the idea that merely posting a “not peer reviewed” label when NLM indexes (or posts?) preprints will not suffice. To Kent Anderson’s point, there is of course a danger that the public, in addition to benighted and/or poorly trained journalists, will not take the label “not peer reviewed” to heart. Some of his concern will be allayed if preprints are labeled in a way that is more transparent for people who don’t know what peer review is. E.g., perhaps, “this research has not been subjected to the usual high level of evaluation normally done in scholarly journals, namely peer review. [Peer review would be hyperlinked to information about what peer review is.] The government does not endorse this research in any way, but performs a public service by bringing it to the attention of researchers and the public. *Caveat Lector*. You’re reading a preprint, not a formal and fully scrutinized piece of research [hyperlink to meaning of preprint].” (This could be followed by a reminder to benighted journalists to take their job more seriously.)

It’s amazing how little the general public knows about peer review, or even what a journal article is. Those of us who teach “information literacy” (better termed research skills) to undergraduates know this all too well. Maybe the society publishers, SSP or whatever should make this an ongoing educational effort.

Anyhow, I’ve brought back from Charleston a bunch of insights I want to mention in my preprint about preprints, but it will take some time.