Editor’s Note: Today’s post is by Christos Petrou, founder and Chief Analyst at Scholarly Intelligence. Christos is a former analyst of the Web of Science Group at Clarivate Analytics and the Open Access portfolio at Springer Nature. A geneticist by training, he previously worked in agriculture and as a consultant for A.T. Kearney, and he holds an MBA from INSEAD.

Standing for Multidisciplinary Digital Publishing Institute, MDPI is no stranger to controversy. In 2014, the company was named to Jeffrey Beall’s infamous list of predatory publishers. After a concerted rehabilitation effort, they were removed from Beall’s list. Since then, incidents include editors at one MDPI journal resigning in protest over editorial policies and, more recently, questions raised over waiver policies that favor wealthier, established researchers over those with financial need. Just last week, a leader in the scholarly communications community felt compelled to publicly ask, “Is MDPI considered a predatory publisher?”

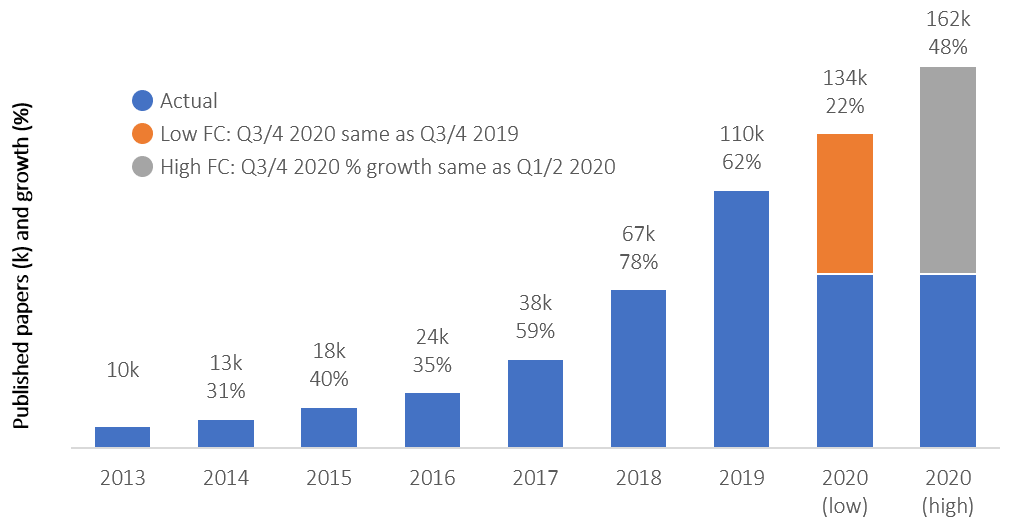

Despite these ongoing questions, MDPI has flourished as a publisher, and authors have flocked to their journals. Based on SCImago data, at least 16 publishers were larger than MDPI in 2015 in terms of journal paper output. As of 2019, 71 of MDPI’s 250 journals have an Impact Factor (Clarivate’s JIF), an indication of rigorous peer review and impact (measured in citations), and MDPI has become the 5th largest publisher, publishing 110k papers per annum, including 103k research articles and reviews. They are firmly positioned ahead of Sage, ACS, and IEEE. Growing at ~50% YTD (despite COVID-19), MDPI may soon overtake Taylor & Francis for the spot of the 4th largest publisher in the world.

In 2019, they also became the largest Open Access (OA) publisher, moving ahead of Springer Nature, which published 102k Open Access papers (93k research articles) in fully OA and hybrid journals.

I originally studied the MDPI portfolio expecting to find something nefarious behind their success, and I intended to contrast them to other “healthier” OA portfolios. Upon further study, I concluded that my expectations were misplaced, and that MDPI is simply a company that has focused on growth and speed while optimizing business practices around the author-pays APC (article processing charge) business model. As I discovered later, I was not the first skeptic to make a U-turn (see Dan Brockington’s analysis and subsequent follow-up). What follows is an analysis of MDPI’s remarkable growth.

Corporate structure, organizational structure, and profitability

MDPI is registered as a corporation (Aktiengesellschaft) in Basel, Switzerland. Its management team and its board of directors are a mix of (a) business and academia veterans and (b) young professionals that have gained most of their experience within MDPI. The company is spearheaded by Dr. Shu-Kun Lin (Founder and Chairman of the Board), Delia Mihaila (Chief Executive Officer), and Franck Vazquez (Chief Scientific Officer and former CEO).

Per MDPI, they had more than 2,100 full-time employees by the end of 2019. As of July 2020, about half of their 1,650 employees that can be found on LinkedIn were based in China, and a third were scattered across six European locations (Switzerland, Serbia, the UK, Romania, Spain, and Italy). The US and Canada were home to 6% of their employees.

Using the numbers provided by MDPI for Coalition S’s pricing exercise and assuming those are accurate, I estimated MDPI’s average discounted APC at about $1,500, and forecasted their APC revenue between $190m and $230m for 2020, assuming a discount of 19%, 2020 content volumes as projected in Figure 1, and the latest APC prices of July 2020 for some paper types (APCs might have varied throughout the year). MDPI reports a cost per article of about $1,400, implying a rather slim margin of 1% to 6% per article, depending on whether the reported costs apply to all paper types or only some types, which translates to a projected 2020 APC profit between $2m and $13m.
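The arithmetic behind the profit range can be sketched as follows. This is a back-of-the-envelope illustration, not MDPI's accounting: it takes the revenue and margin ranges quoted above as inputs and pairs the low ends and the high ends. The function and constant names are illustrative.

```python
# Back-of-the-envelope check of the profit range quoted in the text.
# All inputs are figures stated in the article; names are illustrative.

def profit_range(revenue_low, revenue_high, margin_low, margin_high):
    """Return (low, high) profit by pairing low ends and high ends."""
    return revenue_low * margin_low, revenue_high * margin_high

REVENUE_LOW = 190e6   # forecast 2020 APC revenue, lower bound (USD)
REVENUE_HIGH = 230e6  # forecast 2020 APC revenue, upper bound (USD)
MARGIN_LOW = 0.01     # per-article margin, lower bound
MARGIN_HIGH = 0.06    # per-article margin, upper bound

low, high = profit_range(REVENUE_LOW, REVENUE_HIGH, MARGIN_LOW, MARGIN_HIGH)
print(f"Projected 2020 APC profit: ${low / 1e6:.1f}m to ${high / 1e6:.1f}m")
```

The result lands close to the $2m to $13m range given above; small differences presumably come from rounding in the quoted margins.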

It is noted that MDPI is debt-free, and the reported costs include taxes. In addition, while OA APC is their core business, they also have a broad range of other revenue-generating offerings for authors, publishers, and societies.

From rags to riches

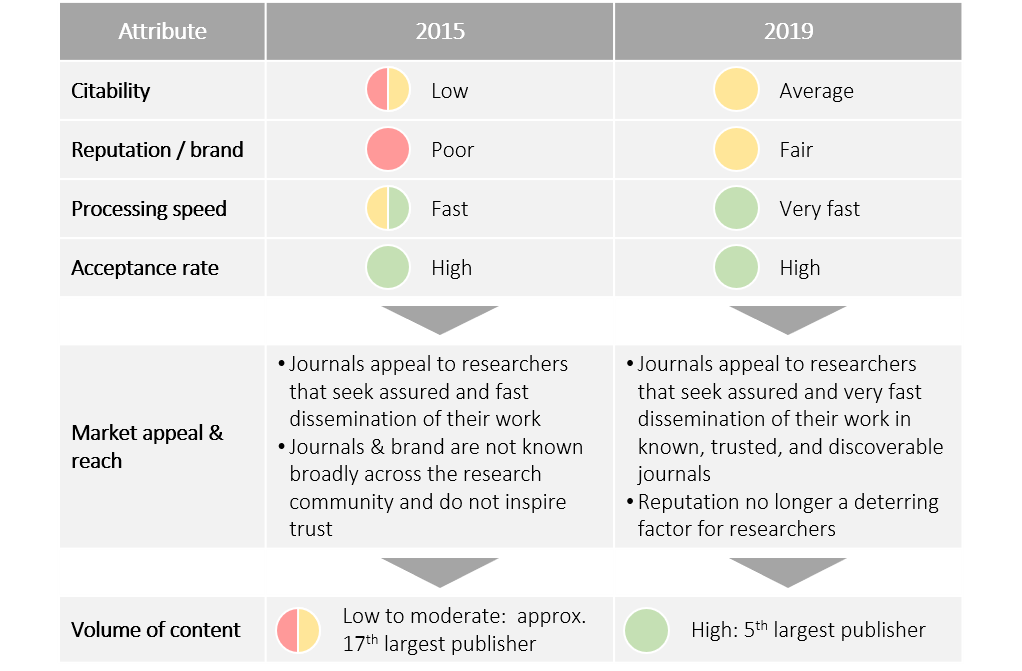

Some of the factors that affect the journal choice of researchers are its citability, reputation/brand, publishing speed, and odds of acceptance. Not all factors matter the same to all researchers at all times. For example, depending on the circumstances, some researchers may opt for speed instead of reputation and vice versa.

Fast publishing and a high acceptance rate have been constants for MDPI: its journals published about 40% of the submitted papers from 2016 to 2019, and they did so at an extraordinarily fast 68 days in 2016 that accelerated to 39 days in 2019 (median value). High odds of acceptance is a common feature of “sound science” journals, but fast publishing is not (more on that below).

Citability has not been a constant for MDPI, but it has improved year after year. In 2016, only 27 of its 169 titles were indexed on SCIE (Science Citation Index of Web of Science) and were on track to get an Impact Factor. By 2019, its leading journals were generally as citable as the average articles in the fields where they compete (more on that below). In summer 2020, 71 of MDPI’s 250 titles had an Impact Factor.

A potential explanation, summarized in Figure 2, for the continuing, phenomenal growth of MDPI that is demonstrable in Figure 1 is as follows:

- The constants (rapid and likely acceptance) combined with the improved citability have attracted increasingly more content to MDPI

- The increase in citability combined with the increase in content have led to improved brand/reputation: more researchers get to know MDPI and its journals (67k editors and 452k review reports in 2019), and a higher proportion of them form a favorable view about them

- A virtuous circle commences, where in turn, the improved brand/reputation helps attract even more content

- In parallel, an increasing number of funders mandate that content is published OA, driving researchers to publishers such as MDPI

When does this rapid growth stop? More on that below, but in principle, growth will slow down when the citability and reputation of MDPI stabilize. While these improve, MDPI is likely to continue growing at a fast pace. MDPI may also continue to drive growth through launching new titles.

Citability improving for 2019 content

The increasing proportion of MDPI journals that have an Impact Factor is an indication of improving citability across the portfolio. As mentioned above, 27 journals were on track to have an Impact Factor in summer 2016 and 71 journals had an Impact Factor in summer 2020.

But what happens to the citability of journals after they get an Impact Factor? Are they flooded with content of poorer citability as is the case with successful megajournals? And what is the citability for content published in 2018 and 2019? The latest Impact Factors reveal nothing about the citability of 2019 content and are only partly informative for 2018 content.

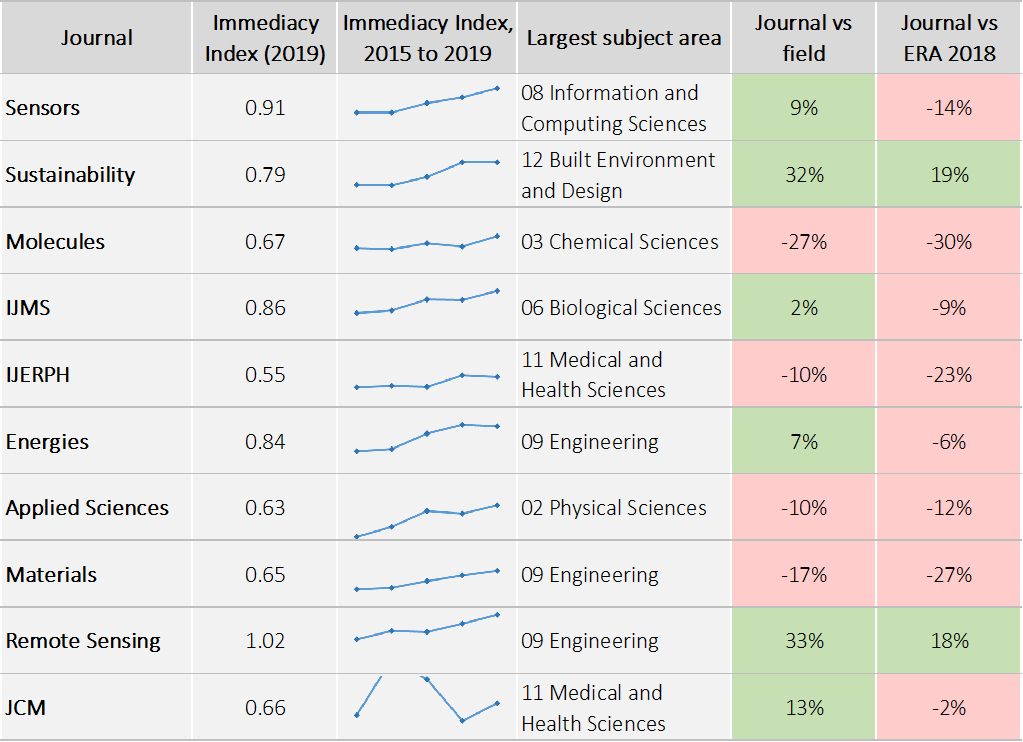

In order to answer these questions, I looked into the Immediacy Index of MDPI’s largest ten titles in 2019 (Table 1). The Immediacy Index is the ratio of citations received in a year by content published in that same year to the number of items published in that year. It allows for the assessment of recent content but is less reliable than indicators such as Clarivate’s JIF or Elsevier’s CiteScore that are based on citations over a longer period of time.
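As a minimal sketch of how such a same-year ratio is computed, assuming the standard definition (same-year citations divided by items published that year): the figures in the example are hypothetical and are not taken from Table 1 or from Dimensions.

```python
def immediacy_index(citations_in_year, items_published_in_year):
    """Citations received in a year by items published that same year,
    divided by the number of those items."""
    if items_published_in_year <= 0:
        raise ValueError("need at least one published item")
    return citations_in_year / items_published_in_year

# Hypothetical journal: 1,000 items published in a year,
# cited 800 times within that same year.
example = immediacy_index(800, 1000)
print(example)  # 0.8
```

Because the citation window is so short, the value depends heavily on when in the year items are published, which is one reason the indicator is less reliable than longer-window measures.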

According to Dimensions data, MDPI’s leading journals have been improving their citability, and their content is as citable as that of the “average” article in their respective fields. All ten titles improved their citability for content published in the same year (Immediacy Index) from 2015-19, and eight of them improved their citability from 2018 to 2019. Six of the titles had a better Immediacy Index in 2019 in their leading research category than articles of other journals, and two of them had a better Immediacy Index than articles of the selective ERA 2018 journals (25,017 journals in the Excellence in Research for Australia 2018 journal list).

Phenomenal speed, but at what cost

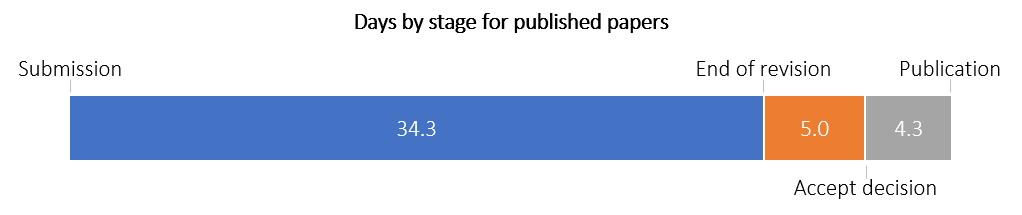

MDPI excels when it comes to processing speed. Despite high growth that typically leads to operational challenges, MDPI has achieved remarkably fast processing time from submission to publication. According to MDPI, the median time from submission to publication was 39 days for articles published in 2019.

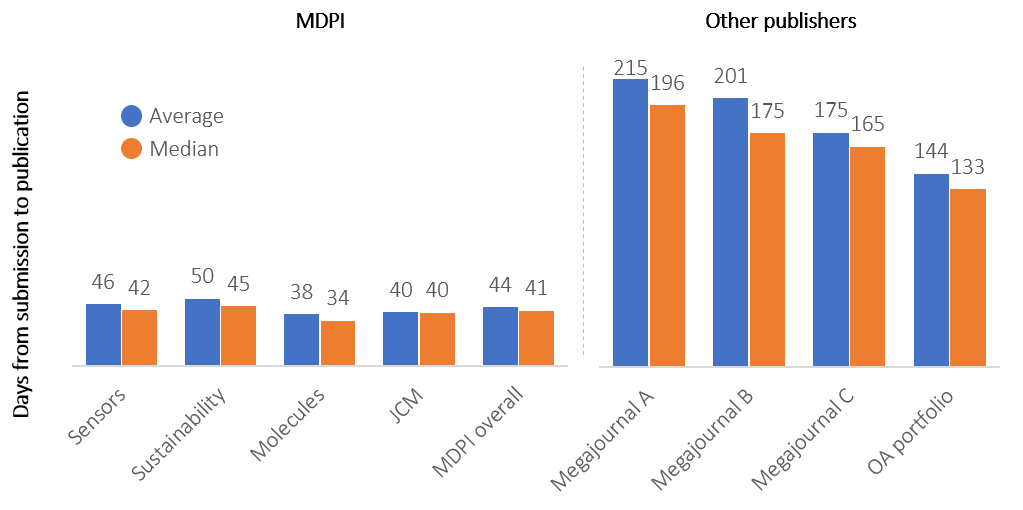

In order to test MDPI’s stated performance, I sampled 240 manuscripts that were published in Q3/4 2019 in four of MDPI’s journals (Sensors, Sustainability, Molecules, and JCM). Molecules, the fastest of the four journals, took on average 38 days to publish (median of 34 days). Sustainability, the slowest (but not slow) journal, took 50 days (median of 45 days) (Figure 3).

MDPI’s performance is possibly the fastest among top publishers. Earlier this year, I looked at the processing time of three megajournals and a prominent OA portfolio, sampling ~300 papers for each of them. Two of the megajournals took more than 200 days on average from submission to publication. The OA portfolio was faster, but still took 144 days to publish, three months longer than the slowest journal of MDPI.

The math is ruthless. Of the 1,090 papers that were sampled across the three megajournals and the portfolio, 86% took longer than 100 days to get published. On the contrary, of the 240 papers that were sampled for MDPI, just 2% were published in longer than 100 days.

MDPI’s processing time advantage constitutes a competitive advantage that is not easily replicated by competitors, and it gives it a unique selling point to authors that wish to disseminate the output of their research rapidly.

But how is this performance achieved? At its most basic form, the time to publish an article is the product of (a) actions taken and (b) time per action. MDPI may be skipping actions and/or completing them faster. If MDPI is accelerating actions, how is this achieved? And if any steps are skipped, are they essential or secondary for the peer review and publishing process?

In order to find out more about MDPI’s rapid publishing process, I approached Delia Mihaila, MDPI’s CEO, who kindly agreed to have a conversation about it.

Delia explained that publishing research fast has been a core value of MDPI all along. She said that it started with MDPI’s founder, Dr. Shu-Kun Lin, who had been a researcher and editor himself, and realized the importance of making content freely available to everybody as fast as possible as a means to accelerate progress. She recalled him saying ‘don’t make the scientists wait and don’t waste their time by unnecessarily delaying a response to them; this is the difference that we want to make’.

Delia attributes MDPI’s fast performance to getting the headcount and task allocation right. She said that large, in-house teams (as many as 70-80 FTEs for one of the large journals) take over the tedious part of the work of the academic editors. The in-house team pushes and negotiates with the other stakeholders (editors, reviewers, authors) to meet strict deadlines as well as possible. Delia said that adhering to such deadlines may sometimes lead to complaints, but MDPI always shows flexibility. She added that ultimately there is common understanding that a rapid process serves everyone’s interests.

I asked Delia whether, in addition to working fast, MDPI takes any editorial risks. She said that given its ascent, MDPI is in the spotlight and as a result “we are very, very careful in everything we do, and we must always have evidence of a rigorous peer review process. Open Access publishers are always under the suspicion of skipping the peer review just for the sake of making money. We cannot afford to not conduct the peer review properly or to act unethically”.

In a previous interview with Lettie Conrad in 2017, their then CEO Franck Vazquez made similar points. He explained that their “objective for the future is to reduce the time spent by authors on administrative tasks (reformatting references, making layout corrections, etc.) and aspects of scientific communication that can easily be handled by publishers”. He added that, “this will allow us to make efficiencies in the editorial process”.

No matter how MDPI achieves a speedy publication process, it is clear that the research community has not rejected their approach so far. MDPI’s content has been growing, it has become increasingly citable, and it is not retracted at an alarming rate.

The publisher reported 19 retractions in 2019, equivalent to 0.5 retractions per 1,000 papers (assuming that retractions refer to year t-2). As a point of contrast, I could locate 352 papers on Elsevier’s ScienceDirect that included the phrase ‘this article has been retracted’ in 2019, implying 0.5 retractions per 1,000 papers (again, assuming that retractions refer to year t-2).

What comes next for MDPI

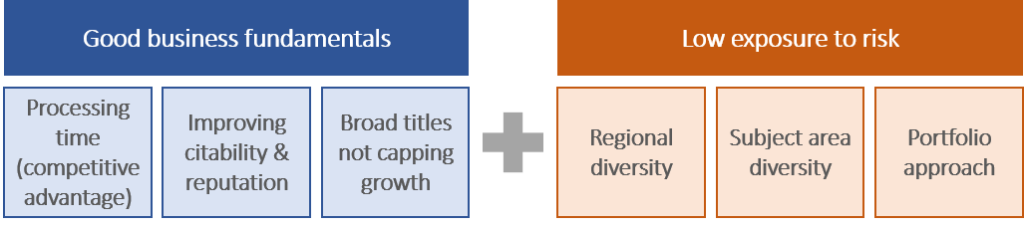

MDPI’s speed and citability have been at the heart of its growth so far and are likely to drive its future growth. Speed is a competitive advantage that sets MDPI apart from other publishers. Citability has been improving and continued to improve in 2019 (which will be reflected in Impact Factors that will be released in 2021 and 2022).

The broad scope of MDPI’s journals is a third growth factor. While it does not drive growth directly, it sets a higher growth ceiling per successful title. There are only so many papers a niche title can compete for; there are many more for a broad-scoped journal.

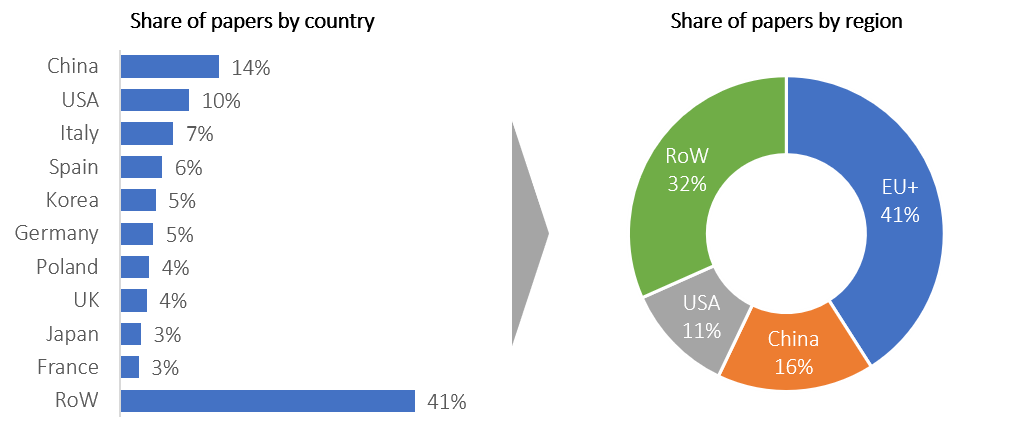

Speed, citability, and broad-scoped journals set the basis for strong growth of MDPI in the mid-term. This looks more likely in light of the portfolio’s low exposure to risk: MDPI has no over-reliance on few countries, subject areas, or titles (Figures 6 and 7).

MDPI is not overly reliant for content on any single country, making it less susceptible to policy changes, such as the new Chinese policies (here and here), or crises, such as COVID-19. It has a strong footprint in European countries (41% of content from EU, UK, Norway, and Switzerland), where administrators have systematically favored OA with initiatives such as Plan S.

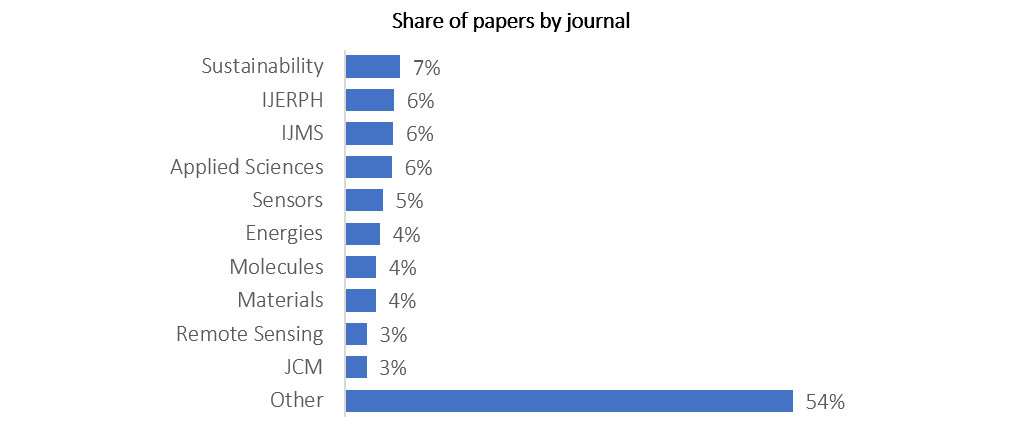

MDPI is also not overly reliant on any subject area. As shown in Table 1, its largest ten journals cover a wide spectrum of disciplines: Biological Sciences, Biomedicine, Physical Sciences, and Technology. In addition, the portfolio is not overly reliant on a few large journals, the way that portfolios with megajournals are. For example, MDPI’s largest journal, Sustainability, accounts for just 7% of MDPI’s content (Figure 7).

The MDPI model: strategic design, market selection, and adoption

Did the MDPI executives anticipate that a series of very fast, broad-disciplined, OA journals would be the key to success? Or was the MDPI model designed out of necessity and, by virtue of market selection, happened to stand out among similar but slightly different models, such as OA portfolios that focus on one discipline, are built around megajournals, have multiple niche titles, or focus on prestige rather than volume?

In other words, is MDPI’s success the result of strategic design or the result of market selection? It could be either or both, and it matters little. Clearly though, the MDPI model has been successful, and other publishers may have taken notice.

For example, Springer Nature has just launched the Discover series that shares some characteristics with the MDPI model. The series is fully OA, aims to publish manuscripts fast (within 7-10 weeks from submission by utilizing AI and deep learning technologies), and will consist of 40 broad-discipline journals covering multiple disciplines.

The future of publishing

The emergence of COVID-19 and the necessity to disseminate research findings rapidly have brought preprints to the spotlight. Preprints allow for the distribution of findings half a year (or longer) ahead of publication, depending on the times that a paper gets rejected and the speed of the journal that eventually publishes it, and have been widely adopted by publishers.

Yet, while the early distribution of findings potentially accelerates discovery, the lack of peer review sets it back. No peer reviewed journal could survive with a retraction rate of 1%, yet akin to retractions, a much larger proportion of preprint papers do not make it to publication.

Could it be that the MDPI model of rapid peer review is better at accelerating discovery than preprints or traditional, slow peer review?

Preprints may accelerate dissemination by 200 days for papers that are published in journals such as Megajournal A & B in Figure 3 above, but their benefit is typically less than 50 days for papers that are published in MDPI journals. Is a time-saving of 50 days worth the risk of posting non-peer reviewed content?

Disclosure: MDPI was approached for comment on their rapid publication process, which resulted in a short interview with Delia Mihaila (CEO) on this topic. I have never communicated with any of their employees for any other purpose, and I have never worked for MDPI in any capacity.

Discussion

34 Thoughts on "Guest Post – MDPI’s Remarkable Growth"

Many thanks Christos for an interesting analysis. Figure 3 is particularly telling. I also think it’s important to mention the special issue model that MDPI uses. This effectively outsources paper acquisition and editing work to guest editors and their networks. This also helps to explain the growth IMO. But you can have too much of a good thing. Sustainability currently has 1500 (!) special issues open and recruiting papers.

Hi Dan! Thanks for reading and commenting. Due to my lack of editorial expertise, I wouldn’t know how to evaluate the ‘special issues’ tactic. I thought this is not unique to MDPI. Is it that MDPI are using it much more than other publishers?

Special Issues are not unique at all – but the number that large MDPI journals can have on the go almost certainly is.

Hi. Frontiers uses research topics with a very similar strategy. I have been guest editor of both Frontiers and MDPI and what I have realised is that impact factor and publication speed is what matters most, at least in my field (psychology). I think it is something that is explained above all by the need for first quartiles (Frontiers in Psychology was Q1 until two years ago and IJERPH is and accepts articles on mental health) for academic promotion in countries like China and Spain. In many places they are talking about giving importance to the social and technological impact of contributions and not only to the number and impact factor of the journals where the articles are published (e.g. China: https://www.universityworldnews.com/post.php?story=2020031810362222), but the reality is that evaluations still rely heavily on these criteria.

It sounds to me like MDPI’s secret isn’t really that big a secret after all: if you want to publish quickly, but with a reasonable amount of rigor, and if you want to “reduce the amount of time spent by authors on administrative tasks,” the main thing you need to do is hire an enormous staff (“as many as 70-80 FTEs for one of the large journals”).

According to Wikipedia, MDPI employed 2,137 people in 2019. I do wonder how much they’re paid.

MDPI has a large operation in China, 4 offices and 1400+ staff (https://baike.baidu.com/item/MDPI/1336780).

If one trusts the numbers MDPI has provided for Coalition S’s pricing exercise (and given MDPI’s status as a privately held company, there’s no way to check their validity), they are making a 32% profit margin on every CHF 2,000 (Swiss francs) APC they receive (https://www.mdpi.com/apc). They then plow 57% of that profit back into providing waivers and discounting articles. It will be interesting to see if this is a long term strategy, or a short term effort to increase citation and reputation (remember they have recently been criticized for preferentially offering waivers to well-known and well-funded authors rather than those in need), which will then trail off once they are better established.

Hi David, I am also wondering whether the thin profit margin is a short-term gambit or part of a long-term strategy that goes for volume. Hard to tell at the moment. Yet MDPI’s commercial potential is not their current profitability but the potential profitability they may achieve if they hike prices even by a modest 10%.

MDPI is like the McDonalds of publishing. They push reviewers with a 7 day deadline (fastest in the industry as far as I know) and send a lot of reminder emails. Their production values are pretty budget and 95% is done by the authors when using their Word template (proofs are also in Word).

I’ve published in an MDPI journal and reviewed at a few – most reviews are not very rigorous and I personally don’t put a lot of effort in when reviewing for MDPI because I know that even if I recommend reject it will come back for revisions. It doesn’t matter if none of the numbers add up, the paper will get up.

So like fast food it’s fine occasionally but not a good idea to go there all the time.

Totally agree with and share your experience. Ultimately, I think this is not a healthy cuisine.

I have looked at several papers on topics of my interest in MDPI journals. I rarely cite them, as most of the content is not novel/original enough in my opinion. I refuse to review for MDPI journals because a seven-day deadline is too troublesome.

While MDPI may be enjoying an advantageous moment with Swiss tax breaks, etc., other factors, slowing or otherwise, may not make up for speed in their dataset. In tech, speed is relative.

Hi Rick, indeed it is about headcount and a non-clunky MTS (manuscript tracking system). I don’t think AI and fancy tech will do the trick without throwing people at the process. You need to give up margin in order to protect overall volume and revenue (been saying this a few years now).

The growth is rather worrisome. I did not notice any mention of the recent publications on MDPI not allowing free articles from LMICs in a special issue, in the above analysis. Yes, I realise that this analysis is not meant to highlight those issues, but that is one strategy they adopt. This is to increase visibility. This is very critical for their growth, I believe. https://wash.leeds.ac.uk/what-the-f-how-we-failed-to-publish-a-journal-special-issue-on-failures/

https://retractionwatch.com/2020/06/16/failure-fails-as-publisher-privileges-the-privileged/

I am not sure whether you have received invitations from MDPI at all to guest edit their special issues. I doubt whether the MDPI office personnel send email invitations in their original names. I am always suspicious about their identity. Their “modus operandi” is rather strange!

Mention of and links to stories about their exclusion of authors from lower income countries can be found in the first paragraph of the post.

Hi Manor, as David said, some of this info is included in the article. I also plan to analyse waiver policies across OA publishers in another article.

But let me play devil’s advocate for a moment. Assuming that MDPI’s margin is as slim as I’ve estimated it, giving more waivers would mean one of two things: they either operate at a loss or they increase prices. The former won’t happen, so it has to be a price increase. Would you be happy to pay $200-$300 more per article in order to subsidise the research of those that can’t afford APCs?

Not taking sides here. Genuinely interested in understanding how the community views this.

Research should not be bargained – either yes or no for APCs. Not discounts. It is a shame if they say that we get 10%, 20% and 30% discounts, based on various reasons. Do you know that some “publishers” send invitations with Christmas and New Year Deals?

I was on Energies’ editorial board, but I quit because of their review model. Often one editor would pick reviewers and the paper got a revise and resubmit but then I was asked to make a decision on whether the paper should be published. I would look at the paper and often it was really bad and should never have gotten an R&R in my opinion. The reviewers were not competent reviewers for the topic/methods. I felt bad though about rejecting papers that authors had revised specifically for the journal. I don’t know why the journal does this. I told the journal that this didn’t make sense to me, but they kept doing it. Anyway, I can’t work like that, so I quit. I had a better experience earlier as a special issue editor for the journal.

The last comment here is the one aspect that still leaves me worried and suggests that the metrics being used here do not pick up the predatory and peripheral nature of a publishing organisation like this one. I have reviewed for a few OA journals – some of them respectable – and I have found that a suggestion of reject or revision gets nowhere. The publisher is in a conflict of interest. They want the author fees and they want the speed. That means the peer review can be skimpy. So, then we have rapid and widely cited papers (apparently), but they have not benefited from adequate peer review and one wonders about the quality of them. Another thing missing from this otherwise erudite and impressive review article is the actual citation metrics (whether JiF or Google Scholar). So, how do we get into this debate not just the basic volume and commercial and superficial academic metrics, but basically their quality? I fear this is where we are giving too many supposed OA journals a pass and allowing publishers using predatory commercial tactics to pass muster. If we are not careful this will undermine the OA model being pushed by decent scholarly groupings.

I have reviewed some papers in OA journals and a reject and revision gets nowhere. This means that papers are not being properly reviewed. How do we capture this element? It is an important quality dimension that does not feature in this otherwise excellent review. For example, how about citation metrics from JiF and Google Scholar? Otherwise the danger is that these “predatory” journals that show up well on some of your metrics, but lack quality, will push the good OA journals out.

Hello Peter, and sorry for not replying sooner.

I thought that citability is one proxy for the quality of MDPI’s published content, and they appear to pass the test: based on Dimensions data, their Immediacy Index compares favourably with the ‘average’ journal content.

I refer to the JIF in the article as another proxy for quality. Clarivate’s editorial team gives JIFs only to journals that meet certain editorial criteria. An increasing number of MDPI’s journals have met these criteria in recent years.

Perhaps I’m missing something but I can’t see what more we could have learnt from additional JIF analysis. The Immediacy Index covers citability and the JIF covers editorial rigor.

The last proxy I used is the retraction rate in comparison to Elsevier’s rate. I chose Elsevier because their data are easy to browse and because they have the most impactful, best curated portfolio among major publishers. MDPI performs equally well there. Of course this is a back-of-the-envelope calculation, and this analysis can probably be done more rigorously.

In short, MDPI passed all the quantitative tests I threw at them. Of course, there are limits to the analysis that can be conducted with publicly available data. There are additional limits at a personal level because of my limited editorial understanding. I’d be grateful for any suggestions that will expand and help improve the analysis.

This is a very well done case study. Thanks!!!

I was thinking of JIF level or same for Google Scholar. Are they well cited in decent journals? Yes you have done a great job. But it is still troubling that we cannot somehow quantify decent peer review. I have seen articles improve hugely after peer review. But perhaps this is an illusion and speed and volume is pretty much all that matters!

That’s a good point: checking how they are cited in Nature, Science, etc. I’d be very interested to carry out this analysis but I don’t have access to WoS, Scopus, or the premium version of Dimensions at the moment.

Probably out of my depth here, but wondering whether editorial teams have had more clout than ops teams when designing workflows and as a result the peer review pendulum has shifted towards unnecessary and slow handling in order to achieve editorial perfection? Speaking as an ops person here 🙂

Interesting article, Christos. Actually, it is possible to make an estimation for the proposed analysis: counting references from Nature or Science articles to MDPI articles with the help of the freely available Crossref API (https://github.com/CrossRef/rest-api-doc). Nowadays many publishers deposit the references of their publications in Crossref, including the DOIs of the references. This is the case for Nature and Science. If we extract these DOIs from Crossref, then from the DOI prefixes it is relatively easy to identify the publishers of the cited articles. One possible way to obtain the data with the help of the Crossref API is to filter for the ISSN of the journal (in this case Nature or Science) and limit the search to a time period. For example, the following link will return all the available data in Crossref for Science publications in January 2019: “http://api.crossref.org/works?filter=issn:1095-9203,from-pub-date:2019-01-01,until-pub-date:2019-01-31&rows=1000” Note that the maximum number of results per request is limited to 1000, so it is advisable to filter the results in such a way that the resulting number of search results is below 1000, or one should use the cursor/offset parameters in the API.
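The approach described above could be sketched roughly as follows. This is an illustration under assumptions, not the exact script used for the quick test: the helper names (`build_url`, `count_cited_prefixes`, `fetch_items`) are made up for this example, the sample DOIs are hypothetical, and it assumes that deposited references appear under each work's `reference` list with an optional `DOI` field. The network call is wrapped in a function that is not executed here.

```python
import json
from collections import Counter
from urllib.parse import urlencode
from urllib.request import urlopen

API = "https://api.crossref.org/works"

def build_url(issn, start, end, rows=1000, cursor="*"):
    """Build a Crossref /works query for one journal and one date window."""
    filters = f"issn:{issn},from-pub-date:{start},until-pub-date:{end}"
    query = urlencode({"filter": filters, "rows": rows, "cursor": cursor})
    return f"{API}?{query}"

def count_cited_prefixes(items):
    """Tally the DOI prefixes (e.g. '10.3390') of deposited references."""
    counts = Counter()
    for item in items:
        for ref in item.get("reference", []):
            doi = ref.get("DOI")
            if doi:  # references without a DOI cannot be attributed
                counts[doi.split("/", 1)[0]] += 1
    return counts

def fetch_items(issn, start, end):
    """Yield every matching work, following the cursor (needs network)."""
    cursor = "*"
    while True:
        with urlopen(build_url(issn, start, end, cursor=cursor)) as resp:
            message = json.load(resp)["message"]
        if not message["items"]:
            return
        yield from message["items"]
        cursor = message["next-cursor"]

# Offline demonstration with hypothetical records shaped like API items.
sample = [
    {"reference": [{"DOI": "10.3390/example1"}, {"DOI": "10.1038/example2"},
                   {"unstructured": "a reference deposited without a DOI"}]},
    {"title": ["a work with no deposited references"]},
    {"reference": [{"DOI": "10.3390/example3"}]},
]
print(count_cited_prefixes(sample).most_common())
# [('10.3390', 2), ('10.1038', 1)]
```

For a real run, something like `count_cited_prefixes(fetch_items("1095-9203", "2019-01-01", "2019-12-31"))` would walk a full year in pages of up to 1000 records instead of splitting the year by hand.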

I did a quick test for Science and Nature and found the following:

In the year 2019 there were 4666 publications registered from Science in Crossref, and 1323 of these have at least one reference. Altogether there were 51550 references registered in Crossref for Science articles in 2019. 95 of these 51550 referred to an article with a DOI prefix of 10.3390, which is the prefix used by MDPI. The most frequently cited articles by Science articles are from Springer Nature / Nature Publishing Group (10.1038) 7730 references, followed by Elsevier (10.1016) 7609 references, and then by the American Association for the Advancement of Science (AAAS the publisher of Science) with 3999 references. MDPI has the 46th most cited DOI prefix in this dataset.

For Nature there were 3644 publications registered in Crossref in 2019, and 2044 of these have at least one reference. There were altogether 51468 references, of these 91 referred to a DOI with the MDPI prefix (10.3390). The most cited publishers by Nature articles are Springer Nature/Nature Publishing Group (10.1038) with 10380 references, Elsevier (10.1016) has 7941 references, and AAAS/Science (10.1126) with 2925 references. MDPI has the 50th most cited DOI prefix in this dataset.
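To put these counts in proportion, the share of deposited references that point to an MDPI DOI can be worked out directly from the figures reported above. The snippet is only a convenience calculation on those reported numbers; the dictionary layout and function name are illustrative.

```python
# Reference counts as reported in the comment above (Crossref, 2019).
REPORTED = {
    "Science": {"total_refs": 51550, "mdpi_refs": 95},
    "Nature": {"total_refs": 51468, "mdpi_refs": 91},
}

def share_percent(part, total):
    """Return part as a percentage of total."""
    return 100.0 * part / total

for journal, counts in REPORTED.items():
    share = share_percent(counts["mdpi_refs"], counts["total_refs"])
    print(f"{journal}: {share:.2f}% of deposited references cite a 10.3390 DOI")
```

In both journals the share comes out at roughly 0.18%. These are raw shares: they are not normalised for how much each publisher has published or for subject area.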

Thank you, Gabor. This is very helpful. Definitely interested in carrying out this analysis when I get the chance, unless someone else gets to it first.

I guess one would need to take into account the time of publishing and the volume of the underlying content a la Citescore and JIF, e.g. [number of 2019 citations in Nature] divided by [number of MDPI articles in 2017 and 2018]? Might also need to repeat the calculation by subject area so that it will not penalise publishers that publish content irrelevant to Nature/Science and vice versa.

This is technically beyond me. Well done folks and good on you for pursuing this. We really have to find the metrics that will allow us to nail the predatory publishers once and for all! Can I mention Google Scholar-based metrics again? Unlike the ones you have been helpfully directed towards, GS works across all publication disciplines, not just STEM (like Nature and Science). There are various GS-based metrics available that are gaining credibility over JIF, such as number of citations over five years (rather than two years, which I think is the JIF standard).

I think Google Scholar is actually more comprehensive – and it is free and accessible.

As far as the editing role goes, I was a senior editor at Social Science and Medicine for a decade in the 2000s. We had a very low acceptance rate, which was in part a rationing device (for limited space, in those days). But there were also really positive outcomes to the peer review process. We had to have three independent reviews and I saw papers improve out of sight. It was a very broad field so there was no way I could judge the papers in detail on my own, but I could see them grow in clarity, authority, comprehensiveness, and in scientific acumen and impact under expert peer review. Much of that was down to dedicated peer reviewers prepared to put in the time and come back to each draft. Usually one or two iterations were sufficient. But you would never get that in many of these OA journals. OA people argue that open peer review does the job – e.g. comments on open papers – but actually a well moderated peer review process is far superior, in my view, in bringing to the fore cutting edge published work that is in line with accepted professional norms of the particular scientific community hosting the publication. It is efficient because it gets rid of many of the obvious deficiencies, and then you are just left with fundamental scholarly and scientific debate without having to deal with errors that could have been sorted in the peer review process.

You are concerned, Peter, with pre-publication peer review. When supplemented by post-publication peer review – such as that which was afforded non-anonymously by accredited reviewers for a short period in PubMed Commons – editors are provided with quick feedback on the quality of their stable of pre-publication peer-reviewers and can discover potentially valuable additions to that stable. The same folk – voluntarily because of their intense interest in a subject – can provide important post-preprint-publication peer review that sharpens papers prior to the pre-formal-publication peer review that is your primary concern.

Worth noting that fewer than 10% of papers posted to biorxiv receive any public comments at all, let alone thorough peer reviews, that PubMed Commons was shut down due to lack of interest from users, and that while post-publication review sites like PubPeer can provide valuable insights, they’re also swamped with grudge-bearing and axe-grinding, which one has to carefully navigate through. As Peter notes, without someone actively driving and overseeing the peer review process, the results are a mixed bag at best, and far too inconsistent to be applied systematically across the literature.

This Kent Anderson piece from back in 2012 is perhaps also of interest:

https://scholarlykitchen.sspnet.org/2012/03/26/the-problems-with-calling-comments-post-publication-peer-review/

Kent Anderson’s piece on post-publication peer review appeared in 2012 just before the beginning of the five-year NCBI “experiment” PubMed Commons. From the start PMC seemed to be following his advice in that the reviewing was by non-anonymous, credited folk, who generally wrote courteously and had something serious to say. 7000 reviews later the panning of PMC met with numerous protests that are recorded in NCBI archives. With a little tinkering the experiment could have been continued and its merits more soberly assessed.

Thank you, Peter. That’s great insight to have.

Very interesting, thank you. I have been wondering about MDPI recently after receiving a typical “Dear esteemed professor” (which I’m not) email soliciting an article, although for a far more relevant journal than usual. At the same time, my boss (an actual esteemed professor) was approached by another of their journals with a guest editor who is well known within the field. I have certainly struggled to reconcile whether they are genuine or not.

Hello,

I have published in MDPI journals and did some reviews. There are good and bad things about MDPI.

On the good side, as mentioned already, the speed. Once the manuscript is accepted you get a proof with questions and edits, meaning the editors actually work on the paper in order to improve it. This includes grammar and typos as well as things like “what is the manufacturer of this chemical?”. I was quite impressed by this, because other journals put almost no effort into such things. I also found communication with the editors quite good.

On the bad side, again speed. As a reviewer you have 7 days to review a manuscript (however, it seems negotiable). And you have to agree to do the review right away. If you wait a day, they will already have asked someone else. I found that quite annoying, because I would like to think about doing the review and ask myself whether I have the expertise to do it. The review form is OK; however, one important thing is missing: you will not get the authors’ reply. You just get an email saying “thanks for the review, the manuscript is now accepted and published, here is your discount voucher”. I found that quite worrying. On the other hand, the few papers I had reviewed were quite good, so I have no idea what happens if you suggest a rejection.