Earlier this fall, Clarivate Analytics announced that it was moving toward a future that calculated the Journal Impact Factor (JIF) based on the date of electronic publication and not the date of print publication.

If your first reaction was “What took you so long!” you are not alone.

Online publication dates back to the mid-1990s, with several forward-looking journals hosting some or all of their content on this futuristic thingy known as the World Wide Web. By the early 2000s, titles like the Journal of Biological Chemistry had a robust model of publishing accepted peer-reviewed papers online before rushing them off through typesetting, layout, and print distribution. Today, two decades later, terms like “Publish-ahead-of-Print,” “Early Online,” “Early View” and their variants seem quaint and anachronistic now that we have all grown accustomed to electronic as the default mode of journal publication. Still, Clarivate stuck to a model that based publication date on print. While the lag time between online publication and print designation is short for some titles, it can be months (or even years) for others. For most online-only journals, there is only one publication date.

This discrepancy between how Clarivate treated traditional print versus online-only journals aroused skepticism among scientists, some of whom argued that long delays between print and online publication benefited the JIF scores of traditional journals over newer open access titles, and cynically suggested that editors may be purposefully extending their lag in an attempt to artificially raise their scores. Whether or not this argument has merit (the critics’ methods for counting valid citations are problematic), lag times create problems in the citation record, especially when a paper has been published online in one calendar year and in print in another (for example, published online in December 2019 but appearing in the January 2020 print issue). In this case, one author may cite the paper as being published in 2019; another in 2020. Clarivate will keep both variations of the reference but link only the latter in its Web of Science (WoS) index. This is just one reason why it’s so difficult to calculate accurate Impact Factor scores from the WoS. By adopting a new electronic publication standard, Clarivate will help reduce ambiguity in the citation record. It will also make it easier and more transparent to calculate citation metrics. So, what took them so long?

The Web of Science has included electronic (“Early Access”) publication dates in its records since 2017 and now includes this information for more than 6,000 journals, according to Dr. Nandita Quaderi, Editor-in-Chief of the WoS. While this number sounds impressive, we should note that it represents only about half of the 12,000+ journals the WoS currently indexes. More journals will be added “using a phased, prospective approach to accommodate timing differences in publisher onboarding,” which makes it sound like the delay in implementation is in the hands of publishers and vendors who are coming late to the table. I asked for a list of titles or publishers included in the program and have not heard back, so I created my own list (download spreadsheet link removed at request of Clarivate).

From this list, I counted 43,831 Early Access records from 4,991 sources in the WoS with a 2020 publication date. While I could identify titles published by Springer Nature, Wiley, and JAMA, conspicuously absent were titles from Elsevier (including Cell Press and Lancet) and university presses (Oxford, Cambridge), together with publications from prominent societies and associations, like the American Association for the Advancement of Science, the American Chemical Society, the American Heart Association, and the American Society for Microbiology, among others. Strangely, these publishers have been sending online publication metadata to PubMed for years.

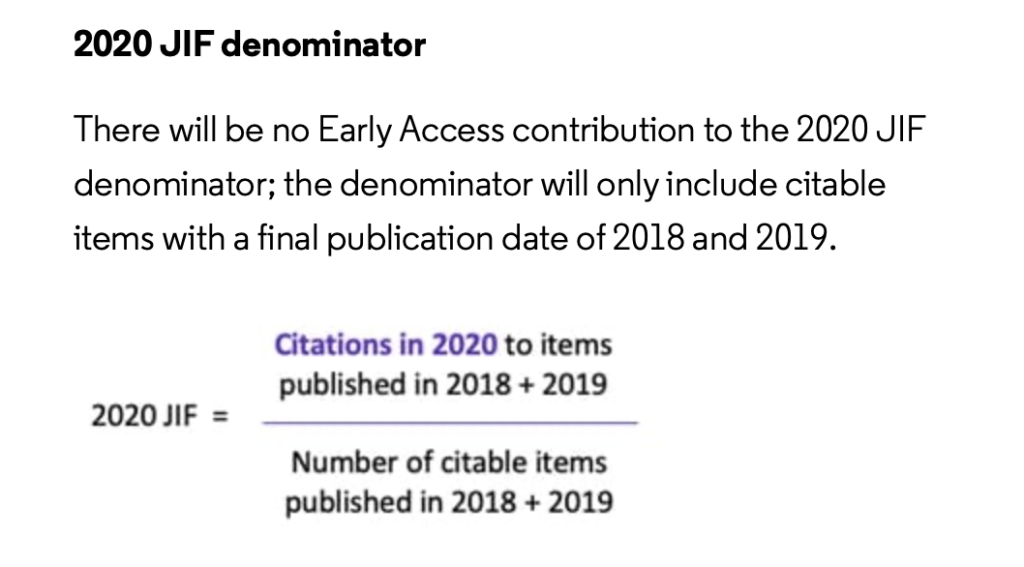

According to Seven Hubbard, Content Team Lead for the Journal Citation Reports (JCR), the full switch to using online publication for the calculation of Journal Impact Factors (JIFs) will begin in 2022 using 2021 publication data. The next 2021 release (using 2020 data) will be a transition year, in which citations from Early Access records will be added to the numerator of the JIF calculation but excluded from publication counts in the denominator.
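
To make the transition-year arithmetic concrete, here is a minimal sketch in Python. It assumes the standard two-year JIF definition (citations received in a year to items published in the two preceding years, divided by the citable items published in those two years). The function names and every number below are hypothetical and chosen only for illustration; this is not Clarivate's code or data.

```python
def jif(citations: int, citable_items: int) -> float:
    """Two-year JIF: citations to the prior two years' items / citable items."""
    if citable_items <= 0:
        raise ValueError("citable_items must be positive")
    return citations / citable_items


def transition_year_jif(
    issue_citations: int,
    early_access_citations: int,
    citable_items: int,
) -> float:
    """Transition-year rule as described in the text (an interpretation):
    citations coming from Early Access records are added to the numerator,
    while the Early Access records themselves add nothing to the denominator.
    """
    return jif(issue_citations + early_access_citations, citable_items)


# Hypothetical journal: 200 citable items in 2018-2019,
# 600 citations from issue-dated 2020 records,
# plus 60 citations from 2020 Early Access records.
old_rule = jif(600, 200)
transition = transition_year_jif(600, 60, 200)
print(f"print-date rule: {old_rule:.2f}, transition year: {transition:.2f}")
```

Under these made-up numbers the score moves from 3.00 to 3.30 purely because the numerator grows while the denominator stays fixed.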

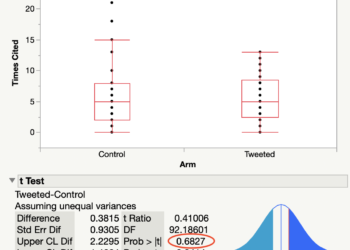

Adding possibly hundreds of thousands of citations to Clarivate’s calculations is expected to have an inflationary effect on the 2020 JIF scores of all journals that receive them. If we expect that these new Early Access papers are just like the papers that preceded them, they will distribute citations similarly across the network of interlinked journals. As with the ocean, a rising tide will lift all boats.

However, participating Early Access journals will receive an additional shot of self-citations, which may be sufficient to push their JIF scores above close competitors who are not currently in Clarivate’s phased implementation program. In other words, the tide will lift the journals of participating publishers much higher than non-participants. The inflationary effect on the calculation of the JIF will likely turn negative the following year as citations from these 2020 Early Access papers (published in print in 2021) are ignored for the 2021 JIF calculation.
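
The uneven lift described above can be sketched with a toy comparison of two otherwise identical journals. This is an illustrative model only: the `Journal` class, the split into a "shared" boost and a "self-citation" boost, and all of the numbers are assumptions made for the example, not estimates of any real journal's score.

```python
from dataclasses import dataclass


@dataclass
class Journal:
    name: str
    citable_items: int       # items published in the two prior years
    baseline_citations: int  # citations counted under the print-date rule
    participates: bool       # has Early Access records in the index


def transition_jif(journal: Journal, shared_boost: int, self_boost: int) -> float:
    """Toy model: every journal gains `shared_boost` citations from other
    titles' Early Access papers; only participants also gain `self_boost`
    self-citations from their own Early Access papers."""
    extra = shared_boost + (self_boost if journal.participates else 0)
    return (journal.baseline_citations + extra) / journal.citable_items


# Two hypothetical competitors with identical baseline scores of 3.00.
a = Journal("Participating Journal", 200, 600, participates=True)
b = Journal("Non-participating Journal", 200, 600, participates=False)

for j in (a, b):
    score = transition_jif(j, shared_boost=20, self_boost=15)
    print(f"{j.name}: {score:.3f}")
```

Both journals rise above their shared baseline, but the participant ends up ahead, which is the kind of rank change between close competitors that the phased roll-out could produce.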

Clarivate’s phased roll-out may preferentially benefit participating publishers, bias others

Given the potential of changing the ranking of journals, I asked Hubbard whether he or his team had tested whether adding Early Access citations changed the ranking of journals or whether it preferentially benefited participating publishers. At the time of this writing, I have not received a response.

The changes at Clarivate are welcome and long overdue. While it is not clear how a shift from print to online publication dates will affect the ranking of journals, it should help to reduce ambiguity and confusion in the citation record. The lack of transparency and communication about the anticipated effects of this transition is troubling, however, especially because the changes currently involve just one-half of active journals. If arriving at a fair and unbiased assessment means waiting another year for all publishers to participate, I’m personally willing to wait.

Feb 1, 2021 Update: See estimates of JIF effect in Changing Journal Impact Factor Rules Creates Unfair Playing Field For Some.

Discussion

13 Thoughts on "Changes to Journal Impact Factor Announced for 2021"

RIP to January print issue stacking…

Won’t journals just stack February (and maybe March) issues to get a similar effect?

Yes, finally, this was long overdue. Print issues have become more and more marginal over the past 15 years, and still basing the JIF calculation on them was meaningless. Unintentional JIF boosting for print journals by shifting end-of-year papers to the following year’s print editions finally comes to an end. It will be easier for everyone, including indexing databases, instead of collecting multiple dates for each bibliographic item. Worst is that stuff published online in 2020 but with a print edition in 2021 needs to be cited with 2021 in references. A nightmare for anyone who has ever worked as an editor (I mean a real editor: the guy or girl actually editing the MS Word or LaTeX file).

With this move further away from the print paradigm, maybe one day we’ll also see people stop using print subscription prices for economic analyses of the journals market.

What will people use in lieu of that? Aren’t the subscription prices and APCs what defines the journal market size (ie what people will pay for what is being sold)?

APCs and prices paid for subscriptions do largely define the market size (though one should not neglect advertising, licensing, and other revenues). But the list prices for print subscriptions have little to do with how the majority of customers purchase journals. Most are getting online access, not print copies. Most are buying that online access as parts of discounted packages of collections of journals, often doing so as consortia rather than individual libraries. This is the reality of the market, so doing analyses based on the list price (that no one pays) for print copies (that no one buys) makes little sense.

I’m perfectly willing to acknowledge that list prices for print subscriptions have very little to do with the prices that consortia and libraries actually pay. But I am not aware of any data sources that attempt to assign price tags to individual journals within these packages — indeed I don’t know how you’d even approach this. So is the answer simply that there is no intellectually rigorous approach to analyzing real-world costs of specific journals, and economic analyses of the journals market are impossible?

Kent Anderson wrote about this way back in 2013. The Library Journal Periodical Price Survey, which remains the gold standard, still uses this clearly irrelevant data.

https://scholarlykitchen.sspnet.org/2013/01/08/have-journal-prices-really-increased-in-the-digital-age/

We do have better knowledge of what libraries are paying for journals — many libraries have taken a stand against contracts requiring NDAs (at least for those publishers that have required NDAs), and through FOIA requests and similar processes outside of the US, the details of those NDA contracts have been revealed.

But as you note, it’s hard to determine the price paid for an individual journal when one is buying a package of thousands of titles. One way around this is to consider using the metric that is still used by many libraries to measure value, cost-per-download (although Lisa Hinchliffe has argued against this as a defining metric — https://scholarlykitchen.sspnet.org/2018/05/22/are-library-subscriptions-overutilized/). Still, it’s probably a better reflection of what’s being paid than using the prices that no one is paying for something that no one is buying.

I think there is confusion in this thread between the cost of a journal to the publisher and the price of a journal. Publishers absolutely know their costs. Prices are a different matter. The entire shift in this industry over the past decade has been to move price from individual journals to aggregations of journals, the Big Deal. It is an aspect of a portfolio strategy, which is the dominant model in journals publishing today. There is no mystery in any of this.

From a colleague who gave me permission to post this – I wonder how this will affect authors’ promotion and tenure reviews. I’ve had 3 requests in the last few days to push up print publication for authors who need it for P&T review or to meet PhD graduation requirements. Both journals involved have a 12-month lag between online and print publication for various reasons not worth going into here. So, will these programs start honoring online publication now as the version of record?

I myself can attest to this. I have also had a request in the past two weeks: the article must be in print; being online doesn’t count. These requests, especially from Asia, happen all the time.

In my opinion it’s still not a perfect solution. First, Early Access data is sometimes not provided in a regular format. Secondly, it will also create ambiguity between regular print publication and online publication.

A better solution would have been to just include the citations gathered between online publication and print publication in the first-year citation data, and leave it while counting second-year data (for the 2-year IF).

After all, citations are gathered by paper and should be carefully counted.

All of this sounds nice, but most journals first have to fix their ridiculous waiting times for decisions on someone’s work. With 6 to 18 months for a decision, plus the waiting time from web to printed version, the whole subject becomes pointless. PhDs and those seeking tenure literally suffer.

If the actors in the publishing world—i.e., authors, societies, publishers, etc.—moved from the traditional print-issue citation style to using/requiring a DOI-only style (with the year of publication listed as the date the DOI was first assigned to the article) and citation styles (APA, MLA, etc.) were updated accordingly, it seems to me this could be a simple first step for all parties to move forward. This is, of course, based on the assumption that there isn’t a single journal out there that publishes only in print.