Artificial intelligence (AI) is rapidly transforming the landscape of scholarly publishing and scholarly communication. AI tools are increasingly being integrated into publishing workflows, while questions around ethics, transparency, authorship, and trust are reshaping conversations about the future of research dissemination. It can be hard to keep up with these developments and the conversations happening among us.

SSP’s new polling initiative, Pulse Check, is a series of short, focused polls designed to track trends and challenges like these in real time. The first of these polls, “AI in Scholarly Publishing,” set out to understand how our communities are navigating this monumental shift.

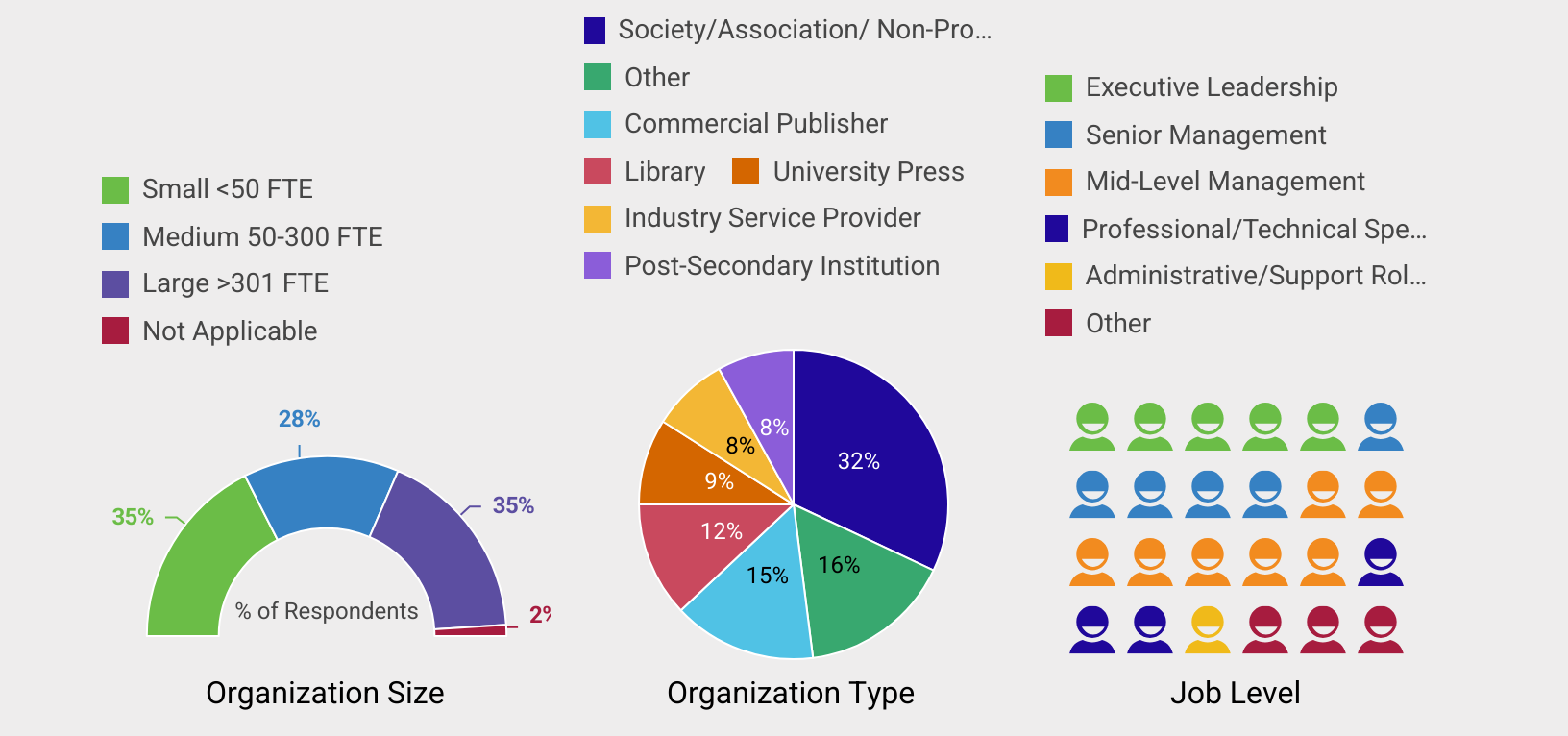

This report seeks to understand how publishers and scholarly communications professionals are currently using AI, how prepared their organizations are to navigate its impact, and what they see as the major barriers, opportunities, and concerns. The AI in Scholarly Publishing Pulse Check Poll was distributed to Society for Scholarly Publishing members, Scholarly Kitchen readers, and other professionals in the scholarly communications community. There were 563 respondents from a variety of organization types, sizes, and job levels.

This post provides the high-level aggregate results. The full report, available at no cost on SSP’s website (log-in or account creation required), provides additional detail and the ability to filter the data by organization size, type, and job level of respondents.

Key Findings

- AI Adoption Is Widespread, but Uneven

  AI is no longer experimental in scholarly publishing.

- Organizations Are Proceeding with Cautious Optimism

  The dominant organizational posture toward AI is measured exploration.

- Preparedness Lags Behind Adoption

  Despite growing use, most organizations feel only partially prepared to navigate AI’s impact.

- Ethical, Legal, and Capacity Concerns Dominate Barriers

  The most frequently cited barriers to AI adoption are ethical or legal concerns, data privacy and security, and lack of expertise or staff capacity.

- Perceived Opportunities Focus on Scale, Quality, and Access

  Respondents most often point to workflow efficiency and time savings, followed by peer review enhancement, research integrity, and quality control.

- Concerns Are Deeper and More Existential Than Opportunities

  Respondents express a strong fear that AI could accelerate a “race to the bottom” in scholarly quality.

AI Usage

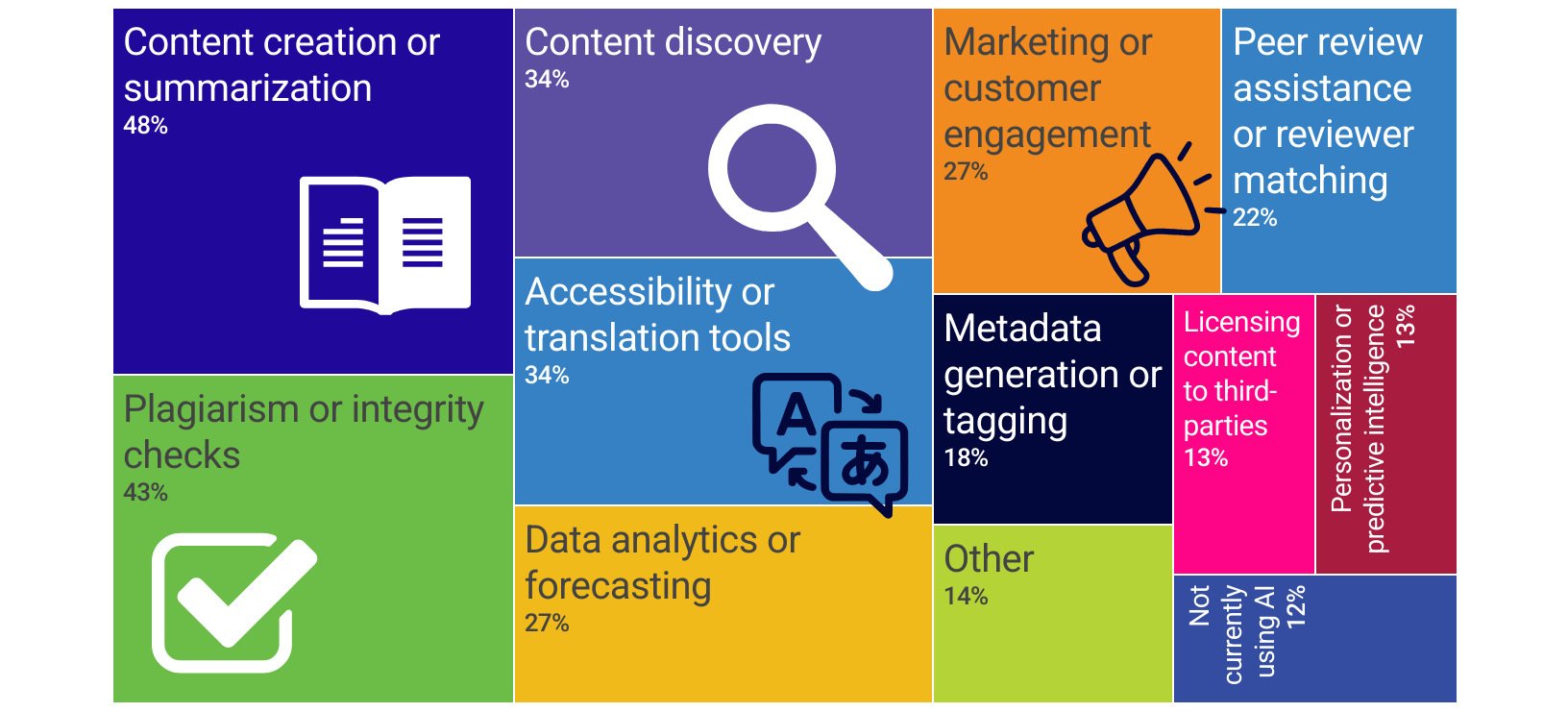

Poll responses show that AI adoption is already well underway across scholarly publishing organizations, with the vast majority reporting at least one active use case. The most common applications center on content-related workflows, particularly content creation or summarization (48%) and plagiarism or research integrity checks (43%). These uses reflect areas where AI can deliver immediate efficiency gains and scale support for existing editorial processes without fundamentally altering decision-making authority. Accessibility and translation tools (34%) and content discovery (34%) also rank highly, underscoring AI’s growing role in improving the reach, usability, and findability of scholarly content.

Beyond core editorial functions, organizations are increasingly applying AI to business and operational activities. More than a quarter of respondents report using AI for marketing or customer engagement (27%) and for data analytics or forecasting (27%), signaling growing comfort with AI-driven insights to inform strategy and audience outreach. Peer review assistance or reviewer matching (22%) and metadata generation or tagging (18%) appear as emerging — but not yet universal — applications, suggesting both opportunity and caution in areas that intersect more directly with scholarly judgment and quality control.

Attitudes Toward AI

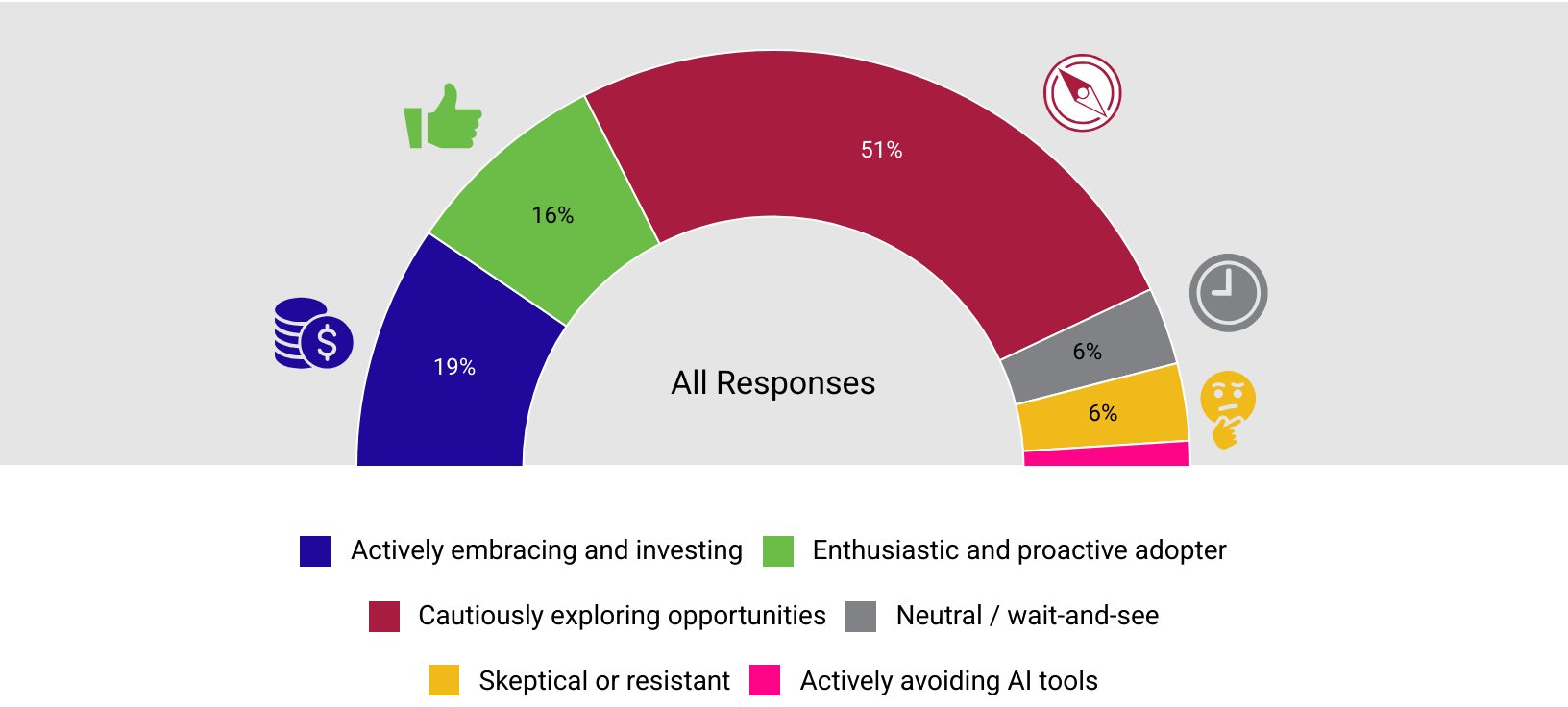

Poll responses indicate that most organizations are approaching AI with measured interest rather than unqualified enthusiasm. A majority (51%) describe their stance as cautiously exploring opportunities, suggesting active experimentation paired with careful consideration of risks, governance, and fit. At the same time, more than a third of respondents report a strongly positive posture toward AI, with 19% actively embracing and investing in AI and 16% identifying as enthusiastic and proactive adopters — together signaling meaningful momentum toward deeper integration.

Only a small minority of organizations remain on the sidelines or opposed. Just 6% report a neutral, wait-and-see approach, while another 6% describe themselves as skeptical or resistant, and only 2% are actively avoiding AI tools altogether. Taken together, these results suggest that while caution remains the dominant mindset, resistance to AI within scholarly publishing is limited, and the overall trajectory points toward broader adoption over time.

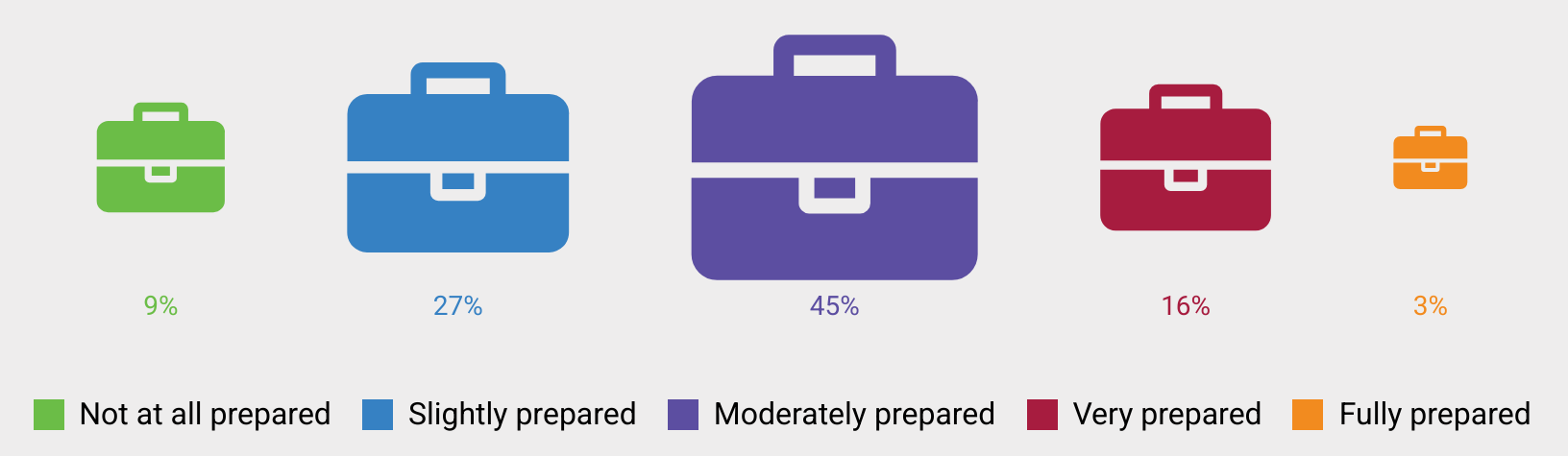

AI Preparedness

Responses suggest that most organizations feel partially prepared to navigate the impact of AI in the coming year. Nearly half of respondents (45%) report being moderately prepared, while another 27% feel only slightly prepared, indicating that many organizations are still building skills, policies, and internal confidence. A smaller share feel highly prepared, with 16% describing themselves as very prepared and just 3% as fully prepared, underscoring how rare a sense of full readiness remains. At the same time, 9% report being not at all prepared, highlighting a continued need for guidance, shared best practices, and capacity-building across the community.

AI Adoption Barriers

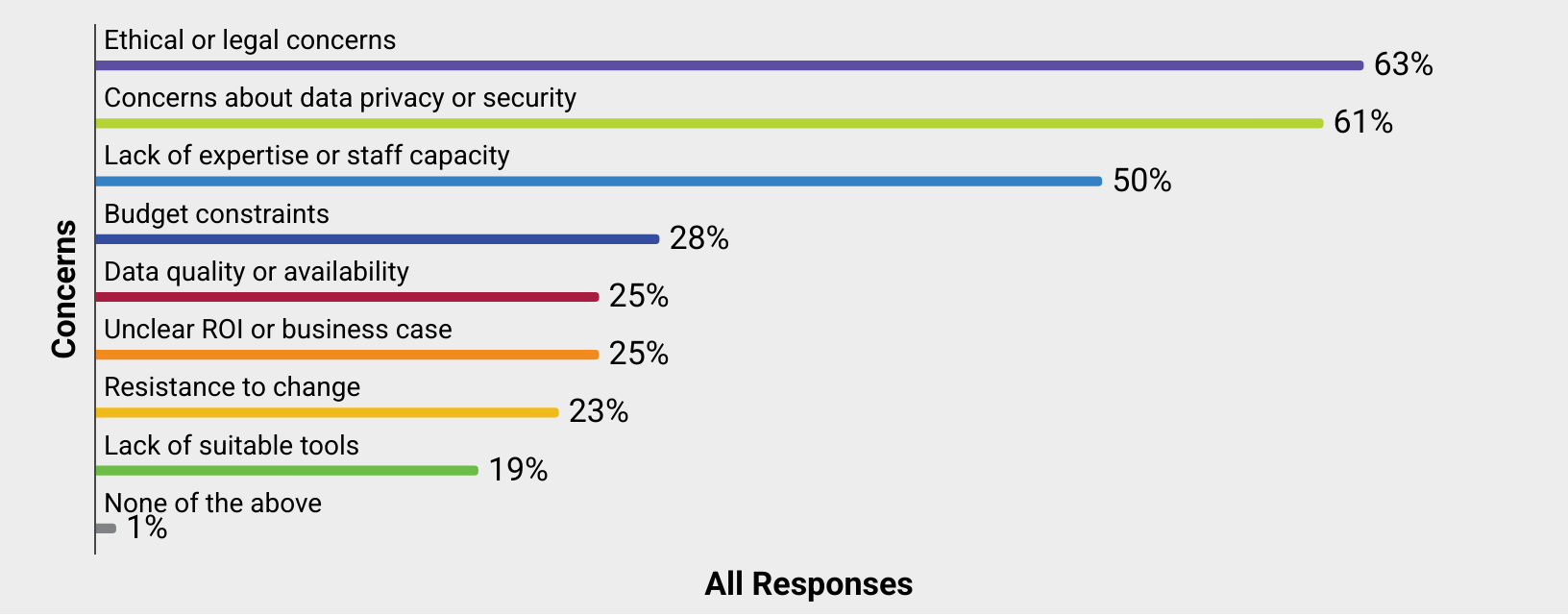

Respondents identified legal/ethical concerns, privacy/security, and a lack of expertise/capacity as the primary barriers to AI adoption.

Despite the strong levels of AI adoption and interest, scholarly publishing organizations still harbor serious concerns about its use in their sector. Ethical or legal concerns (63%) and data privacy or security issues (61%) top the list, reflecting widespread caution about compliance, intellectual property, and responsible use — particularly acute concerns in scholarly publishing. Organizational readiness also emerges as a significant challenge, with half of the respondents (50%) citing a lack of expertise or staff capacity, underscoring that human and institutional capabilities often lag behind technological interest.

Practical and financial considerations form a second tier of barriers. Budget constraints (28%), data quality or availability (25%), and an unclear ROI or business case (25%) suggest that many organizations are still weighing costs against uncertain benefits. Cultural and tooling issues — such as resistance to change (23%) and lack of suitable tools (19%) — appear less dominant but still meaningful. Nearly every organization reports encountering at least some friction in adopting AI.

Opportunities

Several key themes emerged from the open-ended responses, pointing to what respondents see as the biggest opportunities for AI in scholarly communications. Surprisingly, the terms “licensing” and “revenue” were mentioned only 10 times, collectively, across more than 1,000 responses. The top five themes are detailed below; additional themes are covered in the full report.

Workflow Efficiency and Time Savings

This was by far the most dominant theme. Respondents emphasized AI’s potential to automate routine tasks, streamline processes, and free up time for higher-value work, including metadata creation, formatting, administrative tasks, and production workflows.

Peer Review Enhancement

A major area of interest is improving the peer review process through AI assistance, from matching reviewers to manuscripts more effectively to conducting preliminary checks, flagging potential issues, and reducing reviewer burnout.

Research Integrity and Quality Control

Strong emphasis on using AI to detect plagiarism, image manipulation, paper mills, fabricated data, and other ethical concerns. Respondents view AI as essential for maintaining scholarly standards at scale, particularly as submission volumes increase.

Content Discovery and Search

Significant interest in AI-powered tools that help researchers find relevant literature more efficiently, identify research gaps, and make connections across disciplines. This includes enhanced search functionality, personalized recommendations, and better content indexing.

Translation and Language Support

Widespread recognition of AI’s potential to reduce language barriers, particularly by helping non-native English speakers improve their writing and making research accessible across linguistic boundaries. This includes both translation services and language polishing tools.

Concerns

What’s particularly striking about the responses on AI’s biggest concerns for scholarly communications is the tension they reveal: many of the same professionals who saw opportunities in AI for efficiency and accessibility are deeply worried about its potential to undermine the very foundations of scholarly rigor. There’s a pervasive anxiety about AI enabling a “race to the bottom” in terms of quality, even as some see potential benefits.

The concerns are more urgent and existential in tone than the opportunities, suggesting that while respondents recognize AI’s potential utility, they’re deeply uncertain about whether the scholarly communications ecosystem can adapt quickly enough to prevent serious harm. Based on responses from scholarly communications professionals, here are the most frequently mentioned concerns ranked by prevalence. An expanded list of themes is detailed in the full report.

Quality and Integrity Degradation

The overwhelming top concern focuses on AI-generated content flooding scholarly literature with low-quality, inaccurate, or “slop” submissions. Professionals worry about fabricated data, hallucinated citations, superficial research, and the erosion of rigorous scholarship. There’s deep anxiety that AI will enable mass production of mediocre work that overwhelms quality control systems and degrades the entire scholarly ecosystem through sheer volume.

Transparency, Detection, and Authenticity

Major concerns about the difficulty of detecting AI-generated content and the lack of transparency when AI is used. Professionals worry about undisclosed AI use in manuscripts and peer reviews, fundamental questions about what constitutes genuine authorship when AI is involved, and whether AI-assisted work represents authentic scholarly contribution. Many emphasize the need for clear disclosure requirements but question enforceability and detection capabilities.

Erosion of Peer Review

Significant worry that AI-generated peer review will undermine the scholarly review process. Concerns include superficial reviews, reduced critical thinking, reviewers using AI to avoid actual engagement with manuscripts, and the potential collapse of the peer review system if both authors and reviewers rely heavily on AI without genuine intellectual engagement.

Research Misconduct and Ethical Violations

Widespread concern about AI facilitating plagiarism, enabling paper mills, making fraud easier to commit and harder to detect, and generally lowering ethical standards. Professionals worry about the increase in research misconduct enabled by AI tools and the challenge of maintaining research integrity when AI can generate plausible-sounding but problematic content.

Trust and Credibility Erosion

Anxiety that widespread AI use will undermine public trust in scholarly communications, reduce confidence in published research, and damage the credibility of academic institutions and journals. Concerns about whether the scholarly record can maintain its role as a trusted source of knowledge if AI-generated content becomes pervasive.

Conclusion

The findings reveal a scholarly publishing community at an inflection point. AI is already embedded in daily workflows, particularly in areas that promise efficiency, scalability, and integrity protection. At the same time, confidence, preparedness, and governance have not yet caught up with adoption. The prevailing mindset is neither resistance nor blind enthusiasm, but cautious experimentation shaped by deep concern for scholarly values.

What distinguishes this moment is the tension between opportunity and risk. Respondents clearly view AI as a powerful tool for addressing long-standing challenges, including reviewer fatigue, information overload, accessibility issues, and research misconduct. Yet they are equally concerned that, without strong ethical frameworks, transparency, and investment in human expertise, AI could undermine trust, quality, and credibility in scholarly communication.

The full report, available at no cost on SSP’s website (log-in or account creation required), provides additional detail and the ability to filter the data by organization size, type, and job level of respondents. If you have a suggestion for future Pulse Check Polls, send your ideas to info@sspnet.org.

Author’s note: Data was collected December 1 – 12, 2025. AI applications were used to assist in the analysis and summarization of the data in this report.