Editor’s Note: Today’s post is by Christos Petrou, founder and Chief Analyst at Scholarly Intelligence. Christos is a former analyst of the Web of Science Group at Clarivate and the Open Access portfolio at Springer Nature. A geneticist by training, he previously worked in agriculture and as a consultant for Kearney, and he holds an MBA from INSEAD.

Some call them Special Issues (MDPI & Hindawi), others call them Research Topics (Frontiers), and others Collections (Springer Nature). In this post, I will refer to them as articles published via the Guest Editor model, whereby a journal invites a scholar or a group of scholars to serve as guest editors for a set of thematically linked papers. The Guest Editor model fueled MDPI’s rise, yet it pushed Hindawi off a cliff. It has contributed to MDPI’s questionable reputation, yet it is becoming a permanent fixture in the industry.

Turbo-charging MDPI and Frontiers

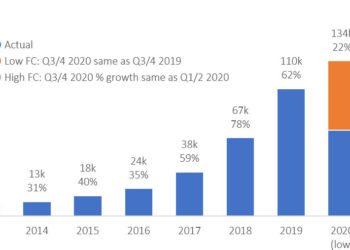

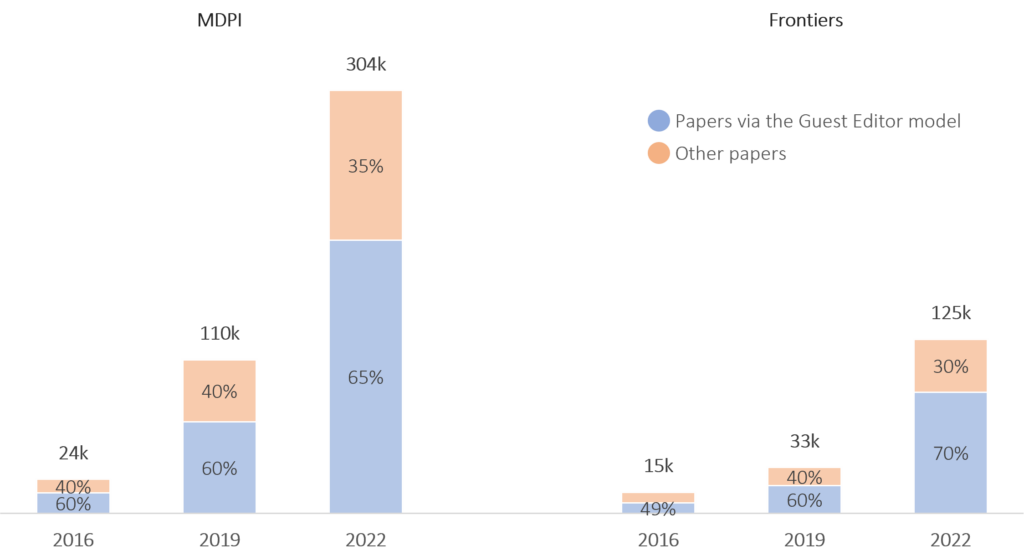

Back in 2020, I wrote about MDPI’s remarkable growth. My analysis was optimistic about their growth and has been partly borne out: MDPI almost tripled in size from 2019 (110k papers) to 2022 (303k papers).

The analysis missed a trick, namely the importance of the Guest Editor model for MDPI. Papers via this model have been a staple for MDPI. In recent years, they have accounted for 60% or more of MDPI’s papers.

Frontiers is another emerging Swiss publisher that relies heavily on the Guest Editor model. Taking another leaf out of MDPI’s playbook, they have grown their Guest Editor operations and currently publish 70% of their papers via this model, compared with 49% in 2016.

Devastating Hindawi

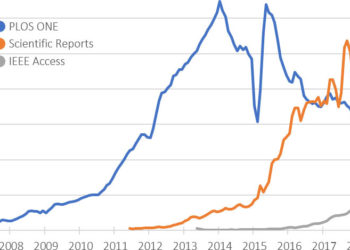

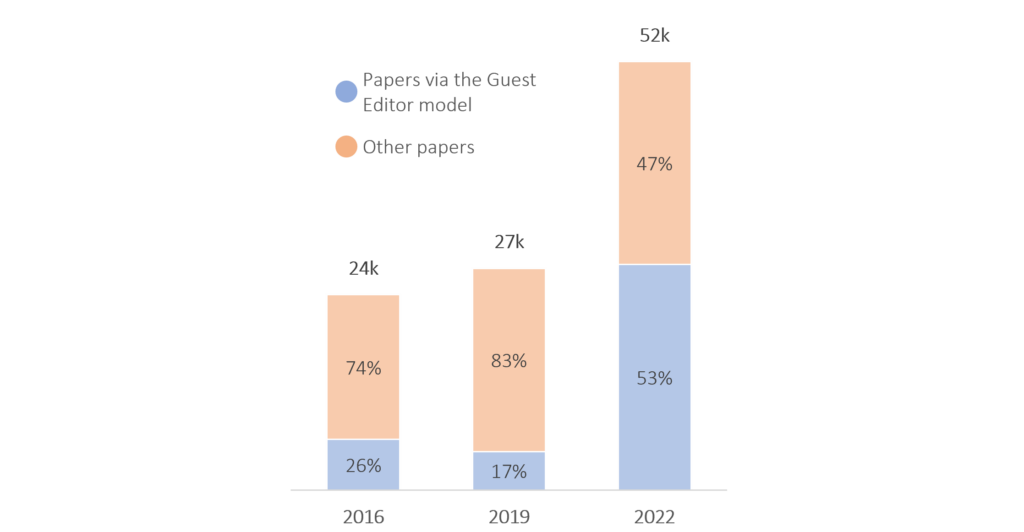

A latecomer to the Guest Editor model was Hindawi. Their adoption of the model took off at the time that four of their largest journals were added to the CAS Warning List. At that time, a Hindawi executive reached out to me to express their dissatisfaction with my questioning the impact of the listing on their performance. Indeed, Hindawi’s 2021 performance was salvaged (unlike that of other journals such as IEEE Access), likely thanks to the wholesale adoption of the Guest Editor model. But there was a price to pay, and the bill has now arrived.

As explained in detail by Retraction Watch, Hindawi’s Guest Editor model was exploited by paper mills, leading to more than 500 retractions from November 2022 to March 2023. In a random sample of 20 retracted articles, half had been submitted in 2021, meaning that the paper mills were at work as soon as Hindawi ventured into the Guest Editor model.

The timing was most unfortunate for Wiley, which acquired Hindawi in January 2021 for the hefty price of $298m. In response to the paper mills, Wiley not only retracted the articles of concern but also paused Hindawi’s Guest Editor program, resulting in a $9m decline in quarterly revenue; the full-year decline is expected to reach $30m. As soon as the results were announced, Wiley’s stock value dropped by 17% to its lowest level since 2009 (except for a year-long slump during COVID). The drop translates to a $420m reduction in market capitalization.

But while things get worse for the financial performance of Wiley and Hindawi, they get better for research integrity.

WoS giveth, and WoS taketh away

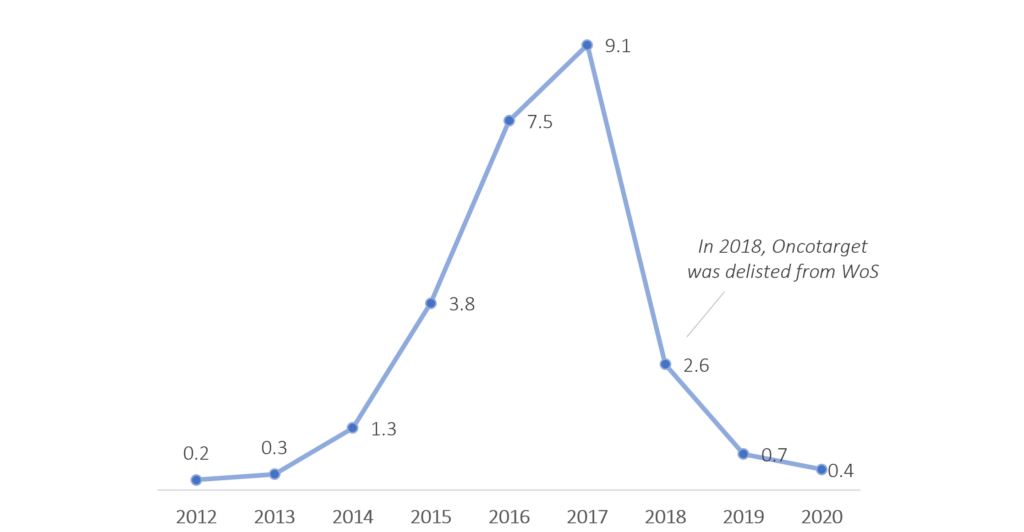

Getting indexed in the Web of Science (WoS) is an existential issue for journals. Very few journals could be commercially successful without an Impact Factor. Case in point: when Oncotarget was delisted, its output dropped from about 9k papers to under 1k papers.

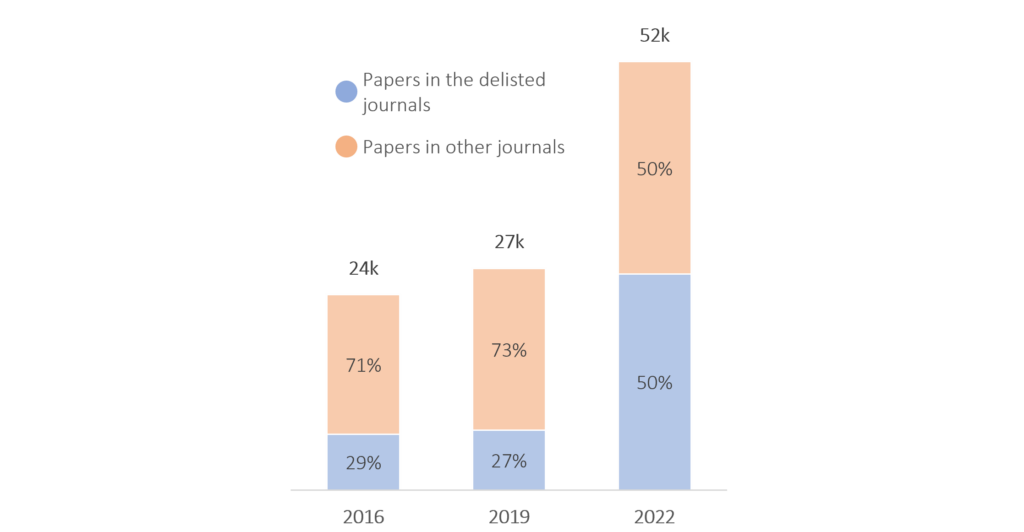

Last week, Clarivate announced the removal of more than 50 journals from the Web of Science, including 19 journals from Hindawi. The delisting came after Wiley announced its Q3 results, and its repercussions may not have been fully appreciated by analysts, given that Wiley’s stock value did not drop any further.

Specifically, the 19 journals accounted for 50% of Hindawi’s papers in 2022. In combination with the halting of the Guest Editor model across the portfolio, it is possible that Hindawi will not exceed 20k papers in 2023. That’s a decline of about 60%, which may be accompanied by an even bigger drop in revenue, assuming that the delisted journals had higher APCs than the rest of the portfolio.

This might be a good point to mention that five of the 19 journals reported no retractions in 2022 or in 2023 so far, while four other journals that remain in WoS reported between four and 25 retractions each over the same period. This might not be the end of the story for Hindawi and Wiley.

MDPI’s unremarkable reputation

When I previously wrote about MDPI in 2020, their reputation was not good, but it was arguably improving. I did not anticipate that their reputation would face repeated challenges since then, such as the listing of 22 journals in the CAS Warning List, which resulted in an unimpressive performance for MDPI in China in 2021, and the inclusion of five journals in the newly created Level X category of the Norwegian Scientific Index in 2021. The list goes on, and it includes several exasperated researchers who have special issues with the Special Issues of MDPI.

The over-reliance on the Guest Editor model contributes to MDPI’s questionable reputation. One has to wonder whether MDPI has really found ways to combat paper mills, whether it has avoided them by being less exposed to Chinese content than Hindawi, or whether it has been affected by paper mills and no one has noticed yet. Within that context, the perplexing delisting of MDPI’s largest journal, International Journal of Environmental Research and Public Health (IJERPH), alongside the Hindawi journals, starts to make sense.

Guilty until proven innocent

WoS delisted IJERPH, a journal with an Impact Factor above 4.0 that ranked in the top or second quartile of its categories, on what may be viewed as a technicality. The journal failed the ‘content relevance’ criterion, meaning that the published content was not consistent with the title and/or stated scope of the journal. It is hard to believe that this criterion does not apply to hundreds of other journals. So, what might have prompted WoS to take this action?

My best and least conspiratorial guess is that WoS may have felt compelled to align with the public sentiment and go after MDPI. In the absence of a smoking gun of unequivocal editorial misconduct, it opted for a trivial criterion. Al Capone got convicted for tax evasion. MDPI got delisted for publishing papers out of scope. WoS sent a message by going after the largest journal of MDPI.

The delisting of IJERPH demonstrates that MDPI’s reputation is the biggest threat to the company’s longevity. MDPI is set to lose more than $30m in annual revenue from the delisting of IJERPH, and researchers might grow reluctant to submit papers to its other journals, further compromising the publisher’s commercial performance. Ominously, MDPI’s business is one paper mill away from crumbling the same way as Hindawi’s.

Unless and until such a paper mill is discovered and acknowledged, MDPI will take comfort in more than tripling the number of its journals that have an Impact Factor. Currently, 97 MDPI journals have an Impact Factor, and more than 200 are set to be awarded one this summer, thanks to the new policies of WoS. The reputational damage from the delisting of IJERPH may eventually be offset by the awarding of the new Impact Factors.

Permanent fixtures, features, bugs, and transparency

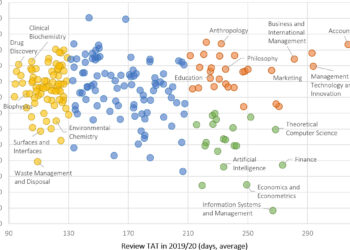

Whether MDPI declines or maintains its position as the third-largest publisher of journals, elements of the MDPI publishing model are here to stay. Its speed has created a paradigm shift, and other publishers are reviewing their operations in order to close the gap between their performance and that of MDPI. The Guest Editor model is slowly being adopted by large, traditional publishers, and more quickly by the more dynamic OA publishers.

The implementation of the Guest Editor model by Hindawi was marked by dramatic failure, which raises the question: is the compromise of editorial integrity a feature or a bug of the model? Are paper mills about to be discovered operating across MDPI and Frontiers, or did they only exploit a particularly poor implementation of the model by Hindawi?

In any event, publishers pursuing the Guest Editor model at scale ought to be transparent about the safeguards that they have in place to uphold their journals’ editorial integrity. Do they prevent malicious behavior with technological solutions and rigorous processes? Do they seek anomalous patterns post-publication, in historical records? Are they challenging the effectiveness of their own systems the same way a bank might utilize ‘ethical hackers’?

Publishers such as MDPI and Frontiers owe this level of transparency to the millions of researchers that have trusted their journals to publish their work. Likewise, WoS needs to be more transparent about its journal de-selection criteria rather than leaving it to the community to identify the journals that have been purged and to then speculate about the drivers of the decisions of WoS.

Addendum: The article has been updated to reflect that, while there is strong indication that a paper mill has been acting at MDPI (see Abalkina’s work and Perron’s work), MDPI has not publicly addressed these concerns.

Discussion

28 Thoughts on "Guest Post – Of Special Issues and Journal Purges"

Christos, Thank you for this excellent analysis. From what I can tell, it seems like Wiley/Hindawi handled the issue ethically when it was discovered. But given the delisting of their affected titles from WoS, I imagine there is a strong incentive for other publishers to take a different approach. So, one question I’ve been mulling is whether a publisher can address a paper mill problem “silently,” in a way that would not attract attention. For example, could a publisher evade the kinds of triggers that the retractions yielded for WoS and other analysts if it instead policed an integrity issue by forcing authors to withdraw their papers? In other words, are there other kinds of unexpected activities we should look for from other publishers?

Roger, thank you for the kind feedback. Your question is on point. I have more questions than answers myself. I like the dynamism of the Guest Editor model, but it is evidently hard to police. So whose job is it to do the necessary policing? Part of it should be on individual publishers, but perhaps publishers can also act collectively. Then WoS and Scopus may need to produce policies that are specifically designed for journals utilising this model at scale. More broadly, the ScholComm industry needs to provide more safeguards for researchers.

It is 100% the actual journal editor (if there is one) that is responsible for the editorial integrity along with the publisher whose staff should know better and are sitting at a vantage point to see problems forming a trend. As Christos hinted, they have turned editorial neglect into a business model.

Part of the challenge here is that publishers are spread too thin and cannot move as fast as the fraud cases arise. They cannot be expected to police without a solid, modern, and most importantly not custom-built technology stack to fully assess and understand the issue. Today, many publishers build insular solutions, and I strongly believe this needs to stop (no other industry works that way). We could achieve something really extraordinary – and prevent more of the integrity issues from occurring – if we relied on technology that builds and iterates in a modern way with APIs and advanced insights for integrity detection – and most importantly that operates fast and at scale!

“From what I can tell, it seems like Wiley/Hindawi handled the issue ethically when it was discovered.”

I disagree. The 500 papers that Hindawi promised to retract are an ablative heat-shield. Hindawi have been informed of thousands more papers where the editing and reviewing were equally corrupted.

Great piece Christos. I think the Hindawi situation was bound to happen sooner or later and, in my opinion, it was simply bad luck for Hindawi that they happened to be the first to suffer at this scale. Any publisher relying on the guest editor model is likely to be equally vulnerable to paper mills and fraudulent reviewer rings.

These systematic attacks on research integrity are difficult to monitor and identify, and doing so will likely require a combined effort across publishers, ideally with the support of institutions and funders. (Of course, this is easy to say and far more difficult to accomplish, but things can’t even begin until the problem, its scope and a potential approach are at least agreed upon.)

I find the WoS delisting of Hindawi’s 19 journals concerning, as it indeed seems to punish Wiley/Hindawi for being transparent about an issue that concerns all of scholarly publishing. I’ve had the pleasure of working closely with Hindawi, and the organisation is sincerely committed to upholding research integrity in publishing. In my view, the fact that Hindawi fell victim to this level of fraudulent behaviour shows how pernicious the problem is, rather than indicating any lax attitude or failure of research integrity efforts by Hindawi itself. I have high regard for the expertise and professionalism of the WoS editorial team, but there perhaps needs to be a more transparent process in place for understanding such decisions, appealing them and taking steps to ensure one’s journals meet whatever new criteria are being implemented.

“Do they seek anomalous patterns post-publication, in historical records?”

James Heathers is very active and seems to be one of the few actually doing this, detecting fake papers, retractions, citation circles, and more.

https://twitter.com/jamesheathers/status/1575109846050910208

https://www.chemistryworld.com/news/materials-science-journal-withdraws-500-papers-from-fake-conferences/4016504.article

I’m a research integrity officer at a uni. We’ve had huge issues with dodgy publication practices in MDPI journals. Gift authorship, paper mill-like behaviour, a lot of abuse based on guest editorship.

If you see a CV with a lot of MDPI papers on it, reject and hire someone else. One or two MDPI papers is probably fine, especially if a particular MDPI journal happens to be respected in your field. But 10, 20, 30 MDPI papers? I would bet they’re abusing or gaming the system somehow.

The issues are hard to detect and even harder to prove. Each individual case consumes a huge amount of resources. Many of the institutions I’ve seen affected don’t have enough resources to even begin addressing it.

So here is what I could not fit in the article, which I turned around in a personal best of under 24 hours.

1. I believe that it is more likely than not that paper mills have been at work at both MDPI and Frontiers, but affecting a much smaller fraction of papers than in the case of Hindawi. In the case of MDPI, it comes down to their huge size, which would be very difficult to police. In the case of Frontiers, it comes down to their high exposure to Chinese content – as much as half of their papers come from China. Hindawi’s share must have been above 70%, based on Lens data. In fact, if MDPI dodges this, it will be thanks to its low exposure to China.

2. Retractions do not equate to WoS delistings. Elsevier’s Thinking Skills and Creativity publishes about 200 papers annually and retracted nearly 50 (https://retractionwatch.com/2022/10/18/elsevier-journal-retracts-nearly-50-papers-because-they-were-each-accepted-on-the-positive-advice-of-one-illegitimate-reviewer-report/). Guess what? It is still in WoS. See the WoS policy outlined here: https://clarivate.com/webofsciencegroup/wp-content/uploads/sites/2/2019/08/WS366313095_EditorialExplanation_Factsheet_A4_RGB_V4.pdf. MDPI is stellar by this account, even if some retractions do occur. That’s why it is crucial to understand what exactly the rationale was for the delisting of Hindawi’s journals.

3. There are enough researchers out there who will tolerate MDPI’s reputation as long as it has good Impact Factors (which it does have) and fast turnaround times (which it also has). Have you ever heard anyone praising McDonald’s? Yet they are still out there feeding us plastic burgers. The only risk for MDPI comes from coordinated anti-MDPI policies by large institutional players, including WoS. A domino effect could be lurking out there.

4. Here is yet another hypothetical. What would happen if MDPI (and Frontiers) were to cease their Guest Editor programmes? Suddenly, the journals of other publishers would have to absorb almost 300,000 papers. We would be facing a very slow publishing year…

Having always worked – and still working – for very respectable legacy publishers, I would like to come to the defence of the “Guest Editor Model” – both in traditional subscription as well as open access journals. Ever since starting in STM publishing over 20 years ago, the traditional publishers I worked for published special issues edited by guest editors. Some journals even more than one issue per year, depending on the usual publication output, the topics suggested and the availability of eligible guest editors. Guest Editors and authors were carefully handpicked, suggested contributions carefully scrutinized and of course peer reviewed.

Our EiCs and we as publishers pride ourselves in delivering special issues that are highly topical and usually contain articles written by some of the best in their respective fields. They provide a tangible benefit for the research community, the readers, and promote a journal’s network within the field.

Let’s not throw out the baby with the bathwater, but rather emphasize that compiling a special issue based on personal networks, scientific focus and research integrity makes great reading and contributes to the field in question – unlike, as demonstrated by the cases of MDPI and Hindawi, when quantity, financial considerations, arbitrariness and anonymity are some of the principal motivators.

Exactly right! The special issue with a guest editor can be an incredibly valuable resource for a field. The problem is that they must be done with care and rigor, and held to the same standards as the rest of the journal. Which makes scale on the level of these publishers impossible. There are MDPI journals that are scheduled to publish more than 10 “special” issues per day in 2023. There’s no way the journals’ Editors-in-Chief can perform the oversight that would be necessary to ensure quality for that insane amount of content. Please continue doing special issues, but let’s make sure they’re really “special” and not just a route to quantity (and the accompanying APC revenue).

I wouldn’t say impossible but (citing from my comment above): Part of the challenge here is that publishers are spread too thin and cannot move as fast as the fraud cases arise. They cannot be expected to police without a solid, modern, and most importantly not custom-built technology stack to fully assess and understand the issue. Today, many publishers build insular solutions, and I strongly believe this needs to stop (no other industry works that way). We could achieve something really extraordinary – and prevent more of the integrity issues from occurring – if we relied on technology that builds and iterates in a modern way with APIs and advanced insights for integrity detection – and most importantly that operates fast and at scale!

The STM Integrity Hub may be of interest:

https://www.stm-assoc.org/stm-integrity-hub/

But I still think that on some level, a human being needs to look at the papers for some final check, and that gets difficult when you are publishing 56,000 “special” issues per year as MDPI is currently on schedule to do.

A bit off-topic, but since the private companies Clarivate (née Thomson Reuters) and Scopus (Elsevier) are discussed, how about a campaign for them to make the abstracts of published paywalled papers free once again?

See https://i4oa.org

oops, ignorant me! Looks like a good campaign, and I will look into it.

Let’s not call them paper mills or predatory journals. If someone says MDPI’s journal is a paper mill or predatory, I can call the so many so-called reputed journals as paper mills too!

The root of the problem is that the traditional (non-predatory non-paper mill) scholarly publishing system is BROKEN and various attempts are being made to fix it.

I have had extremely bad experiences with the traditional reputed scholarly journals that are never scholar-centric/author-centric, editors with preferences for nationality and university brand names, editorial rackets, and so on. I can go on and on and name these journals. But it would be publicly washing and drying academia’s dirty laundry.

Authors, reviewers, editors work for free in the name of prestige, tenure, etc. -> Big traditional publishing houses continue to consolidate their business and unfairly charge the universities -> universities pay hefty subscriptions or pay for the accepted articles on behalf of the authors.

MDPI, Frontiers, Hindawi (acquired by Wiley), etc. must be viewed as attempts to fix a BROKEN traditional archaic system.

Learn the way corporates work. Get inspired by the business models of initiatives such as Substack, Stratechery, YouTube, and so on, where the authors are compensated fairly well. Can academia even dream of something like this? If this happens along with minor modifications in tenure/research policies, the problems (so-called paper mills, predatory journals, etc.) would solve themselves.

Peace!

T S Krishnan

I don’t agree. At least that’s not my experience as an editor for a decade (admittedly with a legacy publisher, and in the 2000s). I would have known at most a fraction of one per cent of the authors submitting manuscripts, and we received at least one new manuscript every day. The gender, ethnicity, country, status, age or whatever of authors was immaterial (and usually unknown), and of course was not shared with peer reviewers. The main problem was finding peer reviewers with the appropriate skills. In over 90% of cases appropriate reviewers were actually found from the reference list of submitted manuscripts. Half of submitted manuscripts I desk-rejected on grounds either of scope or quality. Again, this had nothing to do with characteristics of the authors, although they may not have seen it this way.

Good to hear your opinion on the editorial part of my comment. You are entitled to your opinion. No worries. I voiced my opinion based on my 10 years of submitting papers to top management (operations management, economics) journals. I have had multiple opportunities to see this process close up. Quality and scope are always subjective (I know you will not agree with this either!). I have seen sloppy papers getting accepted in journals such as “Management Science”, “Production and Operations Management”, and many other similar ones.

This disagreement between you and me is a testimony in itself of the larger problem academia faces 🙂

They are ALL paper mills and predatory and out for a quick buck. The whole lot – Hindawi, Frontiers In, and MDPI. All journals from these three (at a minimum) should be delisted from the Web of Science. A strong message needs to be sent out to publishers and researchers that scientific fraud (which is what this is) will not be tolerated.

“The timing was most unfortunate for Wiley, which acquired Hindawi in January 2021 for the hefty price of $298m.”

Market commentary at the time justified the high price paid for Hindawi by pointing to the company’s low-staff, low-overhead structure, arguing that this would scale up easily and allow Wiley to quickly recoup the cost.

In other words, the guest-editor model with its invitation to papermills was not a bug – it was the main reason to acquire Hindawi.

While I agree there isn’t a focus on such evidence yet, and it’s unclear if the issue could possibly affect MDPI in the way it did Hindawi…

“Unless and until such a paper mill is discovered, MDPI will take comfort in more than tripling the volume of its journals that have an Impact Factor.”

A year ago, both Anna Abalkina (Abalkina, 2021; arXiv.org, Fig 4) and Brian Perron (Retraction Watch) highlighted tens of papers advertised by the Russian paper mill International Publisher Ltd, which sold authorship of articles published in MDPI journals, notably Sustainability and Energies. In the same set of notifications, Perron is on record about how the journal iJet responded to his alert about a similar issue by retracting 30 articles and thanking him for his service. Yet MDPI Energies *still* hasn’t even posted an expression of concern on the articles highlighted by Perron.

In other words: “such a paper mill has been discovered.” It’s an inaccurate snow job to act like it hasn’t. This was disappointing to read from Scholarly Kitchen.

Thank you for highlighting this. I was not aware of that paper at the time I wrote the article. Clearly, it will take more than that to get to mass delistings.

Thanks Christos. Also didn’t mean this to be anonymous. This is Mark. Also late night comment… “snow job” probably unnecessarily confrontational. Apologies if it came off that way.

But I do think a correction is in order. This information has been public for over a year, and covered by, e.g. RetractionWatch. It’s even got a section on the MDPI Wikipedia page – just to say how readily this can be found.

The current article phrasing does a disservice to the readership.

p.s. 100% agree with Al Capone analogy!

No worries, Mark. No offense taken. I appreciate the candour.

My suggested correction would be ‘unless and until a number of such paper mills are discovered’ instead of ‘unless and until such a paper mill is discovered’. The point I am trying to convey is that MDPI’s business is facing risk, but in the short run they can keep performing well. I’ll have to discuss this with the editor here first.

Correction much appreciated. 🙂

I share the concern about MDPI not dealing with misconduct. No journal is free from risk of misconduct, and as COPE members they should deal with it when it is found. MDPI seems to take little/no action when misconduct is alleged. One only has to look at PubPeer and Twitter to find hundreds of examples of allegations on MDPI titles, yet I see no corrections, no retractions…

At least Hindawi appear to be taking some action, even if it’s later than it should be.

“Publishers such as MDPI and Frontiers owe this level of transparency to the millions of researchers that have trusted their journals to publish their work. Likewise, WoS needs to be more transparent about its journal de-selection criteria rather than leaving it to the community to identify the journals that have been purged and to then speculate about the drivers of the decisions of WoS.”

I would agree with that. In fact, it’s not just publishers who are affected by this, but many innocent authors as well. In the case of IJERPH, for example, Clarivate stopped including articles from the journal on February 13. However, the journal’s delisting from the WoS Core Collection was not announced until March 15, which means that authors only learned of the news on March 15. Because there is generally a delay in the submission of articles by publishers to Clarivate, articles accepted by the journal before that date were not included in the WoS Core Collection. This is very unfair to the authors, because the Master Journal List they relied on when submitting their articles to the journal showed no anomalies. In the game between Clarivate and the publishers, there seems to be no consideration for the situation of innocent authors.