Editor’s Note: Today’s post is by Christos Petrou, founder and Chief Analyst at Scholarly Intelligence. Christos is a former analyst of the Web of Science Group at Clarivate and the Open Access portfolio at Springer Nature. A geneticist by training, he previously worked in agriculture and as a consultant for Kearney, and he holds an MBA from INSEAD.

Publishing speed matters to authors

A journal’s turnaround time (abbreviated as TAT) is one of the most important criteria when authors choose where to submit their papers. In surveys conducted by Springer Nature in 2015, Editage in 2018, and Taylor & Francis in 2019, the TAT of the peer review process (from submission to acceptance) and the production process (from acceptance to publication) were rated as important attributes by a majority of researchers, and were ranked right behind core attributes such as the reputation of a journal, its Impact Factor, and its readership. Based on the results of its in-house survey, Elsevier states that authors ‘tell us that speed is among their top three considerations for choosing where to publish’.

The surveys do not answer what level of speed matters and to whom, but they indicate that authors generally expect their papers to be dealt with expeditiously without compromising the quality of the peer review process.

The ‘need for speed’ has become a pressing matter for major publishing houses, following the ascent of MDPI, which typically publishes papers in less than 50 days from submission, and the further emergence of preprint servers, pre-publication platforms that can function as substitutes for journals.

This article explores the TAT trends across ten of the largest publishers (Elsevier, Springer Nature, Wiley, MDPI, Taylor & Francis, Frontiers, ACS, Sage, OUP, Wolters Kluwer) that as of 2020 accounted for more than 2/3 of all journal papers (per Scimago). It utilizes the timestamps (date of submission, acceptance, and publication) of articles and reviews that were published in the period 2011/12 and in the period 2019/20. The underlying samples consist of more than 700,000 randomly selected papers that were published in more than 10,000 journals.
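As a minimal sketch of how the three timestamps translate into turnaround times, the snippet below splits one paper's total TAT into its peer review and production stages. The function name and the dates are invented for illustration and are not drawn from the underlying dataset.

```python
from datetime import date

def turnaround_days(submitted: date, accepted: date, published: date) -> dict:
    """Split a paper's total turnaround time into its two stages."""
    review = (accepted - submitted).days        # submission -> acceptance
    production = (published - accepted).days    # acceptance -> publication
    return {"review": review, "production": production, "total": review + production}

# Hypothetical paper; the dates are made up for illustration only.
paper = turnaround_days(date(2020, 1, 10), date(2020, 5, 19), date(2020, 6, 15))
print(paper)  # {'review': 130, 'production': 27, 'total': 157}
```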

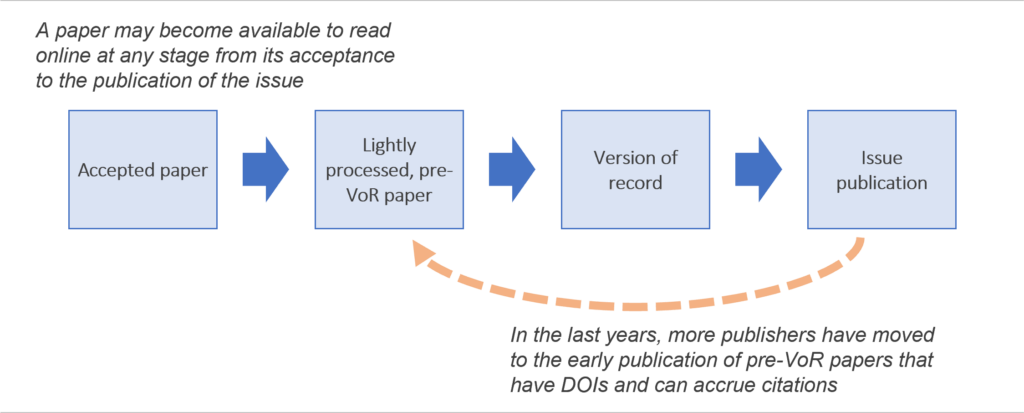

The publication date is defined as the first time that a complete, peer-reviewed article that has a DOI is made available to read by the publisher. As with the other timestamps, it is a case of ‘garbage in, garbage out’, as it relies on the accurate reporting of the timestamps by the publisher.

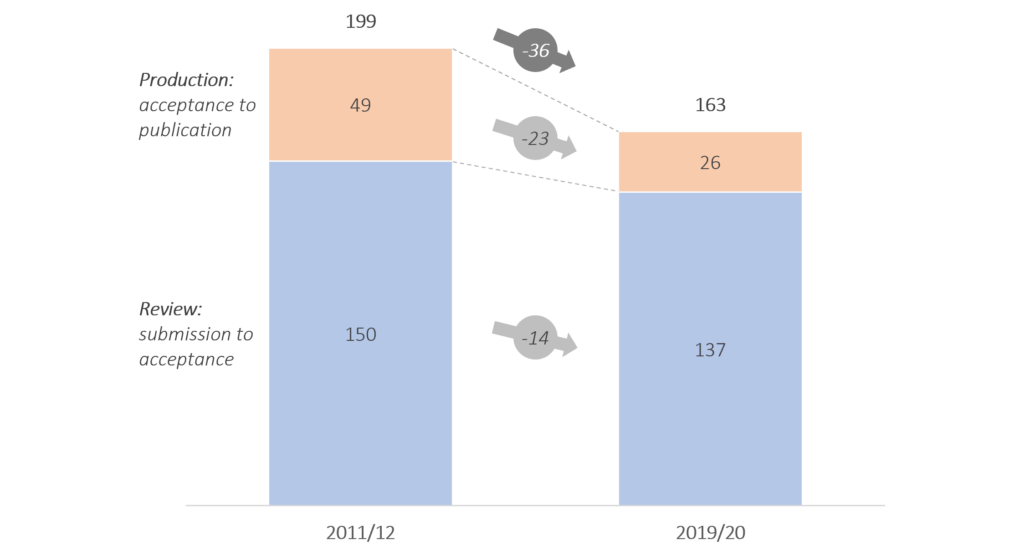

Publishing has become faster

First and foremost, publishing has become faster in the last ten years. It took 199 days on average for papers to get published in 2011/12 and 163 days in 2019/20. The gains are primarily at the production stage (about 23 days faster) and secondarily at peer review (about 14 days faster).

The acceleration from acceptance to publication is due to (a) the transition to continuous online publishing and (b) the early publication of lightly processed pre-VoR (Version of Record) articles. The acceleration at peer review is driven broadly by MDPI and specifically in the space of chemistry by ACS; both publishers stand out for being fast and becoming even faster. Meanwhile most other major publishers have been slower and either remain static or are deteriorating.

Once accepted, papers become available to read faster

In 2011, three of the four large, traditional publishers (Elsevier, Wiley, Springer Nature, and Taylor & Francis) took longer than 50 days on average to publish accepted papers. By 2020, all four of them published accepted papers in less than 40 days.

As noted above, the acceleration is partly due to the transition to continuous online publishing. Papers are now made available to read as soon as the production process is complete, ahead of the completion of their issue.

The acceleration is also partly thanks to the early publication of lightly processed pre-VoR articles. Papers may be published online before copyediting, typesetting, pagination, and proofreading have been completed. Such papers can have a DOI that will be then passed on to the VoR, and as a result they can accrue citations.

Barring MDPI, peer review appears static

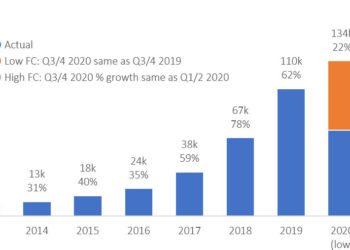

MDPI is a fast-growing and controversial publisher. It grew phenomenally in the period from 2016 to 2021 and has now cemented its position as the fourth largest publisher in terms of paper output in scholarly journals. MDPI has attracted criticism from researchers and administrators who claim that it pursues operational speed at the expense of editorial rigor.

Indeed, MDPI is the fastest large publisher by a wide margin. In 2020, it accepted papers in 36 days and it published them 5 days later. ACS, the second fastest publisher in this report, took twice as long to accept papers (74 days) and published them in 15 days.

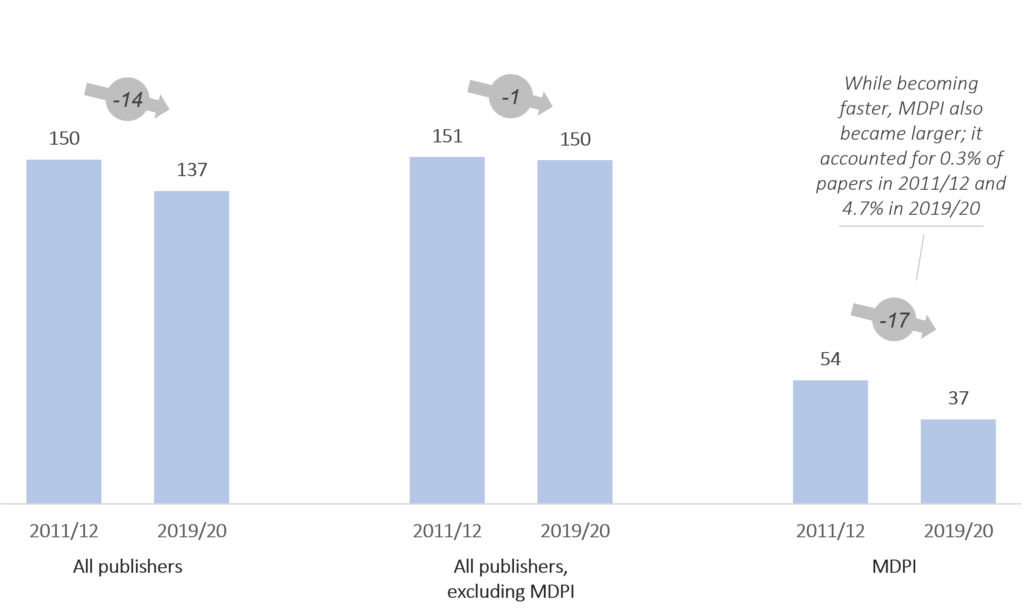

As MDPI became larger, it brought down the overall peer review speed across publishing. Including MDPI, the industry accelerated by 14 days in the period 2019/20 in comparison to the period 2011/12 (from 150 to 137 days). Barring MDPI, review performance did not show much change (from 151 to 150 days).
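The mechanism here is a mix effect: when a fast publisher's share of papers grows, the industry-wide average falls even if no publisher changes its own speed. The sketch below illustrates this with a paper-weighted mean. All figures are invented for illustration and are not the article's data; `pooled_mean` is a hypothetical helper.

```python
def pooled_mean(groups):
    """Paper-weighted mean review time over (n_papers, mean_days) pairs."""
    groups = list(groups)
    total_papers = sum(n for n, _ in groups)
    return sum(n * days for n, days in groups) / total_papers

# Invented figures: (number of papers, mean days to acceptance).
# Each group keeps the same speed; only the fast group's share grows.
early = {"fast": (10, 40.0), "others": (990, 150.0)}
late = {"fast": (150, 40.0), "others": (850, 150.0)}

for label, period in (("early", early), ("late", late)):
    with_fast = pooled_mean(period.values())
    without_fast = pooled_mean([period["others"]])
    print(label, round(with_fast, 1), round(without_fast, 1))
# early 148.9 150.0
# late 133.5 150.0
```

In this toy example the pooled average drops by about 15 days while the average excluding the fast group does not move at all.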

Peer review by category ranges from 94 days (Structural Biology) to 322 days (Organizational Behavior & Human Resources)

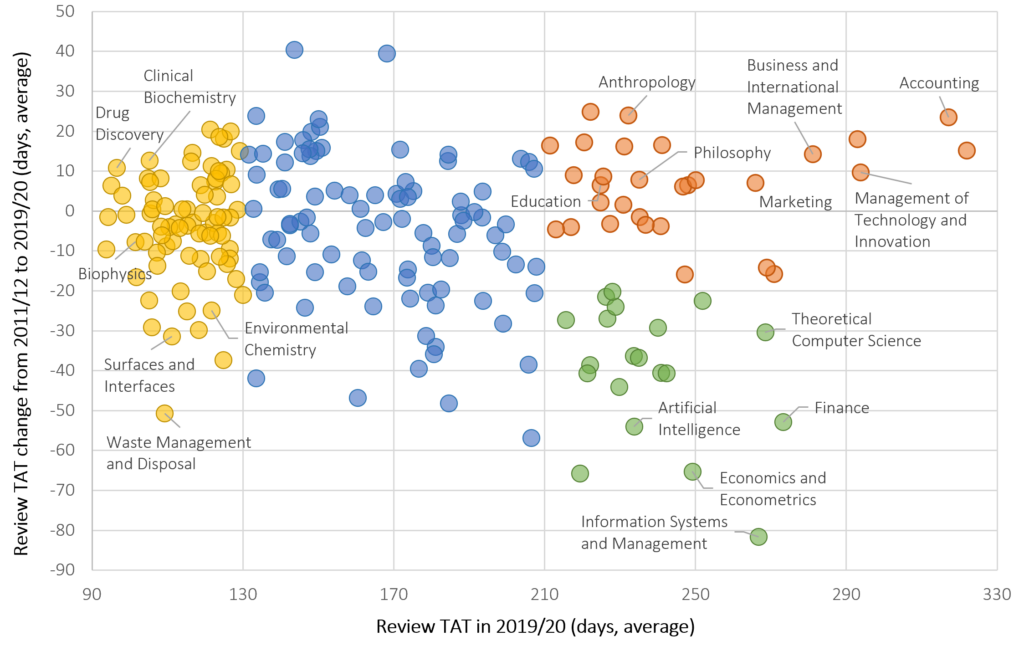

While the speed of peer review appears static in recent years (when excluding MDPI), the assessment of categories reveals a variable landscape. The chart shows the peer review speed and trajectory by category from 2011/12 to 2019/20 (excluding MDPI) for 217 categories of Scimago (Scopus data) that had a large enough sample of journals (>15) and papers (>120) in each period.

About 35% of categories (77) accelerated by 10 or more days. About 20% of categories (44) slowed down by 10 or more days. The remaining 44% of categories (96) were rather static.
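The grouping above can be sketched as a simple threshold rule on the change in average review time. The counts below are the ones reported in the text; the `classify` function is a hypothetical reading of the 10-day cut-off, not necessarily the exact rule used in the analysis.

```python
def classify(change_days: float, threshold: float = 10.0) -> str:
    """Label a category by its change in mean review time (later minus earlier period)."""
    if change_days <= -threshold:
        return "accelerated"
    if change_days >= threshold:
        return "slowed"
    return "static"

# Counts reported in the article for the 217 categories.
counts = {"accelerated": 77, "slowed": 44, "static": 96}
total = sum(counts.values())
shares = {label: round(100 * n / total) for label, n in counts.items()}
print(total, shares)  # 217 {'accelerated': 35, 'slowed': 20, 'static': 44}
```

The rounded shares add up to 99% rather than 100% because each is rounded separately.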

Three blocks stand out. The bottom-right, green block shows categories that are slow but accelerating. It includes several categories in the area of Technology and some in the area of Finance & Economics. The top-right, orange block shows categories that have been slow with no notable acceleration. It includes several categories in Humanities and Social Sciences, with those in the space of Management achieving the slowest performance. The left, yellow block shows the fastest categories, where peer review is conducted in less than 130 days and in some cases in less than 100 days. This block includes categories in the areas of Life Sciences, Biomedicine, and Chemistry. Last, the central, blue block includes mostly categories in the areas of Physical Sciences and Engineering.

Slowing down is not inevitable

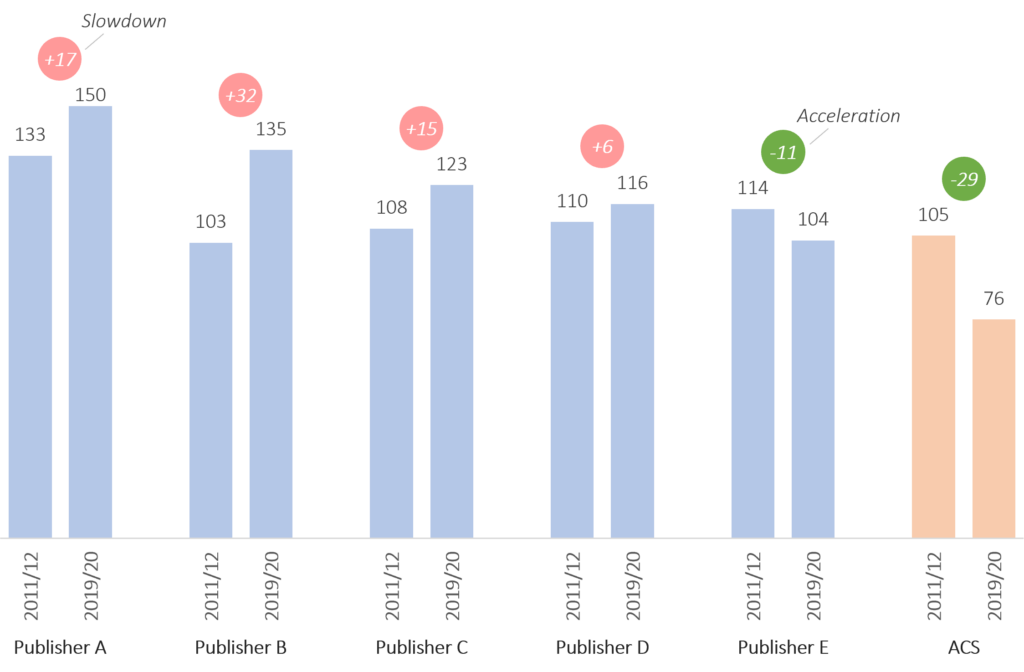

While MDPI’s operational performance can raise questions about the rigor of its peer review process, the same criticism is harder to apply to ACS, a leading publisher in the field of chemistry and home to several top-ranked journals. Notably, 55 of ACS’s 62 journals on Scimago were ranked in the top quartile, and ACS has 5 top-20 journals in the category of ‘Chemistry (miscellaneous)’, a category that includes more than 400 journals.

In addition to being selective, ACS is also fast. Across 12 categories that are related to chemistry, ACS accelerated by 29 days from the period 2011/12 to 2019/20, eventually accepting papers 76 days after submission. Meanwhile, the largest traditional publishers have either maintained their performance or slowed down in the same period, with one publisher slowing down by more than 30 days.

ACS shows that it is possible to have high editorial standards and be fast at the same time. Its performance also suggests that the slow(ing) performance of traditional publishers is not inevitable but the result of operational inefficiencies that persist or worsen over time.

Gaps and coming up next

This article shows the direction of the industry, but it does not address a key question, namely, is there a ‘right’ publishing speed? What TAT achieves the right balance across rapid dissemination, editorial rigor, and a quality outcome? In addition, what TAT do authors consider sensible? And how do perceptions vary across and within demographics, and have they changed as preprints and MDPI set new standards?

I don’t have answers to these questions, but publishers should be able to get some answers by analyzing their in-house data from author surveys, contrasting paper TATs and author satisfaction.

The analysis concludes with 2020 data. It is likely that publishers have improved their performance in response to the rapid growth of MDPI. I do not have data for 2021 and 2022 at this point, but I am in the process of collecting and analyzing it in order to produce a detailed industry report.

TAT information can also be useful to authors, who may wish to take it into account when choosing where to submit their paper. While there are several impact metrics available, publishers are the only source of TAT information, which they provide inconsistently and with gaps. Setting up a platform that provides TAT metrics in the same way that Clarivate’s JCR provides citation metrics would allow authors to make better-informed decisions.

Discussion

22 Thoughts on "Guest Post – Publishing Fast and Slow: A Review of Publishing Speed in the Last Decade"

This is a very interesting analysis of the industry and it confirms what most authors desire and what most editors attempt to deliver–an increase in review and publication speed. Two points that require noting:

1. Measuring speed requires consistent definitions of submission dates. Some editorial offices start the clock with the initial submission date to the editorial office. A revise decision will continue the clock, and multiple revisions just keep the clock running until a final decision has been made. Another office may request a revision but require that the paper be resubmitted de novo, meaning the clock will be reset to zero on that manuscript. While it is impossible to know how the editorial office works for any given paper from the dates printed on each published paper, you noted some huge differences among publishers, which may signal that they are measuring time differently. Investigating publishers that report dramatic changes over your time period may answer whether the review process has indeed changed or whether publishers have simply changed their clock.

2. Analysis of random samples requires a probability statement. You noted that your study is based on a large random sample. Any generalizable statements you wish to make are inferences back to the entire population of papers, journals, and publishers. These inferences need to be qualified with a statement of accuracy, like a confidence interval. As you divide your dataset into smaller and smaller categories (by discipline, by publisher), these statements become even more important. Elapsed time is a continuous variable, but it is both bounded (can’t have negative days) and skewed. This makes bold statements of large changes less reliable, as a few manuscripts that lingered in peer review would skew the results for small categories. If you are not comfortable doing statistical inference, reporting the median change in days would be a better measurement of central tendency.

Sorry if this sounds like a peer review. Decision: Revise and resubmit ;@)

Hello Phil,

Both are fair points, partly not addressed in the article for the sake of brevity. Happy to elaborate here 🙂

Regarding the definition of the submission date, it is true that publishers report it inconsistently. Some report it as the first time that an author presses the ‘submit’ button (IMHO that’s the right definition). Others report it as the time that a paper clears the early editorial checks, which occurs 2-4 days later in journals that do not have performance issues. A small minority of journals report other dates, such as the time that revision is cleared. This is outright wrong, but in my experience it has not been done at scale (now or historically), and it should not affect this analysis. Publishers that slowed down or accelerated did so gradually, not instantly.

Regarding samples, I only report categories that have more than 120 papers in more than 15 journals. I also exclude papers that took longer than 1,000 days to clear peer review, although some of them are legit. This should give some confidence to the reader that the results are accurate enough. Adding confidence intervals would be welcomed by 10% of the readers and alienate the other 90% (I made this up).

Regarding medians, I do use them in longer reports about TATs. But I choose to use averages in shorter reports because you can add them up. The sum of the average time to review and the average time for production is the overall average time to publish.
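A quick sketch of why averages add up while medians generally do not. The per-paper durations below are invented for illustration only.

```python
from statistics import mean, median

# Invented per-paper durations in days (review, production), for illustration only.
papers = [(30, 50), (60, 10), (210, 30)]

review = [r for r, _ in papers]
production = [p for _, p in papers]
total = [r + p for r, p in papers]

# Means are additive: mean(review) + mean(production) equals mean(total).
print(mean(review) + mean(production), mean(total))        # 130 130
# Medians are not: the sum of stage medians differs from the median of totals.
print(median(review) + median(production), median(total))  # 90 80
```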

I think this might mean a rejection 😉

Let’s see. I started in 1970 and then a paper could be turned around in about a year if everything went smoothly. As this old curmudgeon says: Those young nipper snappers will soon want them new fangled motor carriages that go 20 MPH!

For those, curmudgeons or otherwise, who would like to see some historical data, see my data on ‘time taken to publish’ at the Royal Society journals c.1950-2010. I can’t break it down neatly by ‘peer review’ time and ‘production time’, but I will note that a major reason for the long time-taken (over 200 days until the new millennium) seems to have been authors making revisions after review (and maybe not feeling under time pressure to do so…)

But also production/technology reasons in the days before author-generated electronic text, email reviewing and digital typesetting etc.

See https://arts.st-andrews.ac.uk/philosophicaltransactions/time-taken-to-publish/

Fascinating data. Thank you for sharing. Puts modern-day performance into perspective.

“ACS shows that it is possible to have high editorial standards and be fast at the same time.”

I seem to have missed the bit where the author proves that ACS have maintained ‘high editorial standards’ in these fast journals of theirs. Did I miss that bit or is that evidence just not provided?

The author makes reference to the position of ACS journals within the proprietary SCImago journal rankings, but my understanding is that SCImago is a citation-based journal ranking system. High numbers of citations to content published in a journal is not the same thing as evidence of ‘high editorial standards’. Retracted papers for instance are known in some instances to receive a fair amount of citations.

Citations to papers published in a journal are not indicative of ‘high editorial standards’.

More work needed if the author wants to demonstrate that.

No matter how you slice it, ACS has a decent reputation. They don’t regularly have Twitter (or soon, Mastodon) threads about how they are terrible and they don’t receive as frequent coverage on RetractionWatch. No data, just my impression. But I bet if you dove into their rejection rate data you’d see something more sensible than MDPI, which always surprises me when they reject a paper.

Hi Ross, my answer is pretty much the same as that of the anonymous commentator right above (below?). ACS are well-cited, are well-regarded, and do not have a controversial history. I am happy to work with the assumption that they have high editorial standards too.

Hi Christos,

Thanks for confirming that ACS’s fast journals having & maintaining ‘high editorial standards’ was merely an assumption of yours. That is useful.

My personal opinion is that I would like to see more robust testing of assumptions. Rather than just putting an assumption out, as if it is true.

Not to mention the use of the postal system!

Now that is fast peer review: publish in The Scholarly Kitchen, and voila, two reviews in within the same working day.

If it worked like this for papers, ah…

ACS is the premier publisher of chemical literature. In short, they are the gold standard. Their reviewers tend to be leaders in the field. Additionally, one does not send a paper to ACS unless it has been unofficially read by others before it is submitted. They have a very active and growing list of reliable reviewers.

Very interesting data. How much do publishing houses differ in the extent to which they do copyediting? Those that omit this step will obviously take a shorter time to produce the VoR. How much do authors care about copyediting? (I ask as a person who started his career in publishing as a copyeditor.)

Has any similar study been done about time to acceptance of monographs by academic publishers? Since university presses have a more complicated set of procedures than commercial publishers do, there could be significant differences between the two types. How much do authors care about timing with monographs?

Part of the speed is independent of the journal’s managers

The longest step in the process is getting answers from identified reviewers (yes/no) and finally the reviews themselves. Because the number of publications submitted has increased much more than the number of researchers, more decline to review (more time is needed to search for other reviewers), and many take longer to review (and exceed deadlines). Very quick reviews cannot be good and indeed there is suspicion that some are even computer-generated. That’s why normal journals stay around 150 days.

‘Market demand’ one more time has perverse consequences: a premium goes to those who are able to game the system at the cost of scientific quality.

Publons. You may know the Publons services that track, verify, and showcase scholars’ peer review and editorial contributions for academic journals. Have all journal publishers joined Publons now?

This year Professor Sharpless received his second Nobel Prize, for click chemistry. One of his click chemistry papers is https://www.mdpi.com/1420-3049/11/4/212 which was published in Molecules 11 days after submission. Of course, it may take 150 days or more for some papers if the revision takes a long time. On average, the publication time for MDPI journals falls somewhere in between.

The operational speed of MDPI journals is very fast. One of the reasons is that it is professionally operated, meaning that the assistant editors and managers are dedicated 100% to their jobs, quite unlike many of the traditional journals where most, if not all, of the staff are volunteers and receive no compensation for their work.

Let’s be real here. When the journal sends you a review with a deadline of 30 days, would you look at it before the deadline?? No, right. Most of the time, you read it on the last day and scribble down something seemingly intelligent, no? Only when you either love/hate the article, you return your review early, no?

Don’t just look at MDPI. Most of their journals are ok now, and some of them are exceptionally good. Take a look at “Frontiers”, especially their special issues. There you will find the worst articles. In the past, I have tried to post comments on the fatal mistakes of articles on Frontiers’ website, but they banned my account and deleted all my comments shortly after. Frontiers may be the new low now.

Exactly right; most reviewers sit on manuscripts until being reminded of the deadline. Also, the quality of a paper should be judged on its own merits, and just because it has been published by a certain publisher should not disqualify that paper on its own – as it may be a great study. Indeed, many MDPI journals for example are Web of Science indexed with respectable impact factors. In a world where speed is of importance, MDPI does its job well in my opinion.

This is true; giving longer time frames does not help much in getting the reviews back.

One comment: do you really think a journal is ‘respectable’ because it has a ‘respectable’ impact factor?

What if this impact factor has been manufactured in a not-very-respectable manner? MDPI journals and many others have used citation rings, paper mills and many other devices at an unbelievable scale. This is now starting to be exposed but the damage is done; see for example https://forbetterscience.com/2022/08/23/maybe-stop-accepting-submissions-herr-prof-dr-sauer/ and other posts at https://forbetterscience.com and other blogs trying to bring sanity to scientific publishing.

Really interesting analysis, but where did you collect the data and information you analysed in this article? Specifically the publication times for different companies?

I could not find any information about the data pipeline and data sources you used.

I would be interested to see if there is a public repository or dataset that you can look at and use.

I think it is also important to describe and cite sources in order to lend credibility to the interesting analyses you have presented.

Thank you very much,

A.G.

Hi A.G., timestamps are common fields among a paper’s metadata. In order to replicate this analysis, you need to access DOIs in bulk, and then seek their metadata either on databases or on the articles themselves. I won’t be sharing specifics, as I would like to use this data commercially. I understand the trade-off with credibility, and it is a trade-off I am happy to make. If you would like to have a demo about the accuracy of the data, drop me a line at christos@scholarlyintelligence.com.

This is a fascinating analysis, but I wonder how much individual publisher outcomes are related to the type of journals in their portfolio. If OUP has more journals in SSH, for example, wouldn’t that mean that it would have slower overall publication times a priori? I suppose the question is how much the publication times are related to disciplinary needs and norms, and how much to the publisher.

This also may have an impact on the conclusions of the article, since Chemistry is one of the disciplines with the fastest turnaround, implying that speed is of high value. It therefore makes sense that a publisher who specializes in this field, like ACS, would put particular effort into becoming faster.

Fascinating analysis nonetheless, and I realize the conclusions presented may be preliminary!

Hi Dina, this is a fair point. HSS publishers will appear slower because they are operating in slower categories. That said, publisher performance varies within category, as illustrated in the example of chemistry. Even when excluding ACS from the analysis, the gap between Publisher A and Publisher E is a month and a half, not negligible (fun fact, Publisher E is faster and much better cited).

Indeed, the findings are preliminary. I am deep diving into various dimensions (impact, category, publisher, OA status, etc.) to better identify the factors that drive speed. I am using all 2022 articles of the ten publishers for that exercise.