I recently co-authored a paper published in Learned Publishing, “Adapting Peer Review for the Future: Digital Disruptions and Trust in Peer Review”, which addresses the intersection of digital disruptions and trust in peer review. The ongoing discourse on the integration of artificial intelligence (AI) in academic publishing has generated diverse opinions, with individuals expressing both support and opposition to its adoption.

Peer review and research integrity have come under scrutiny both with and without AI in the picture. Is the current inquiry into research integrity during peer review prompted solely by the advent of AI, or has it always been a concern, considering past incidents involving retractions, plagiarism, and other unethical practices?

Harini Calamur, the co-author of the paper, says:

“The research integrity problem has many facets. At the top is the sheer volume of papers to be peer reviewed, and the lack of enough peer reviewers. It is next to impossible to be true to each and every paper, and to ensure that everything is as per requirement. Unless we fix that, we are not going to know which paper has dodgy ethics, and which does not. And therefore, institutional science will always be playing a catch-up game in terms of research integrity. The integration of AI offers potential solutions, but it’s important to recognize that technological advancements alone cannot fully address these structural challenges. Fundamental changes in the peer review process are essential for a proactive stance on research integrity.”

Indeed, research integrity has consistently been a cause of concern. Therefore, the blame lies not with AI but rather with the established processes, particularly as the dynamics of academia undergo transformations, pushing against traditional boundaries and introducing a multitude of new opportunities and challenges.

In the era of digital advancements and the expansive information landscape, it becomes imperative to establish robust mechanisms that uphold research integrity and ensure the trustworthiness of academic output. While this is not a novel concern, adapting to this evolving landscape requires time and resources. Take Google, for example. Although it is a valuable information resource, the sheer volume of data makes it challenging to discern which of the information in its search results is credible. The realm of research increasingly resembles this scenario, with continually growing numbers of academic papers published annually. This expansion of literature has strained the peer review process, burdening experienced reviewers and leading to either hasty evaluations or delays in publication. Addressing the implications of the ever-growing volume of publication is essential for maintaining the integrity of academic publishing. Hence, the question arises: How can we refocus the community on research integrity?

To gain a deeper understanding of the challenges and opportunities within the evolving academic landscape, Cactus Communications (CACTUS – full disclosure, my employer) conducted a comprehensive global survey during Peer Review Week 2022. The survey, encompassing 852 participants from diverse academic backgrounds and career stages, explored their perspectives on research integrity and the efficacy of the peer review process.

Source: Calamur, H. and Ghosh, R. (2024), Adapting peer review for the future: Digital disruptions and trust in peer review. Learned Publishing, 37: 49-54. https://doi.org/10.1002/leap.1594

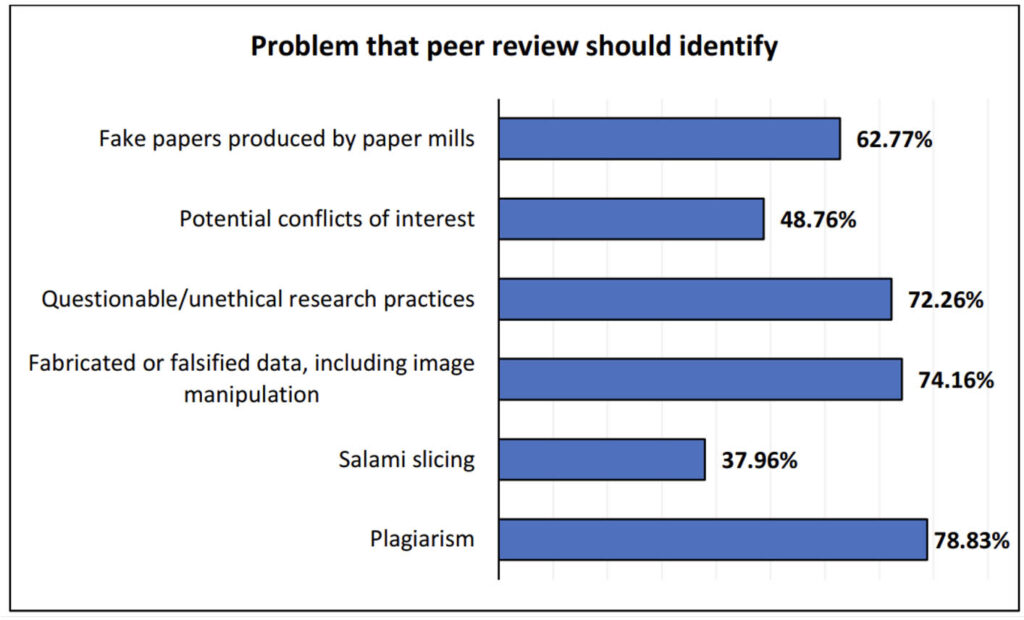

Figure 1 is a visual representation of participant opinions on the issues that peer review is expected to address. Notably, 78.83% of participants expressed the view that peer review should include identifying plagiarism. But does this really fall within the purview of what a peer reviewer is expected to do? These findings set the stage for a more thorough examination of the expectations and challenges associated with upholding research integrity through the peer review process. What is the responsibility of the peer reviewers, and what should instead be handled by the journal’s editors and staff? Given the surge in academic paper production and the cutting-edge plagiarism detection tools available to editorial offices, we need to determine the most efficient allocation of responsibilities.

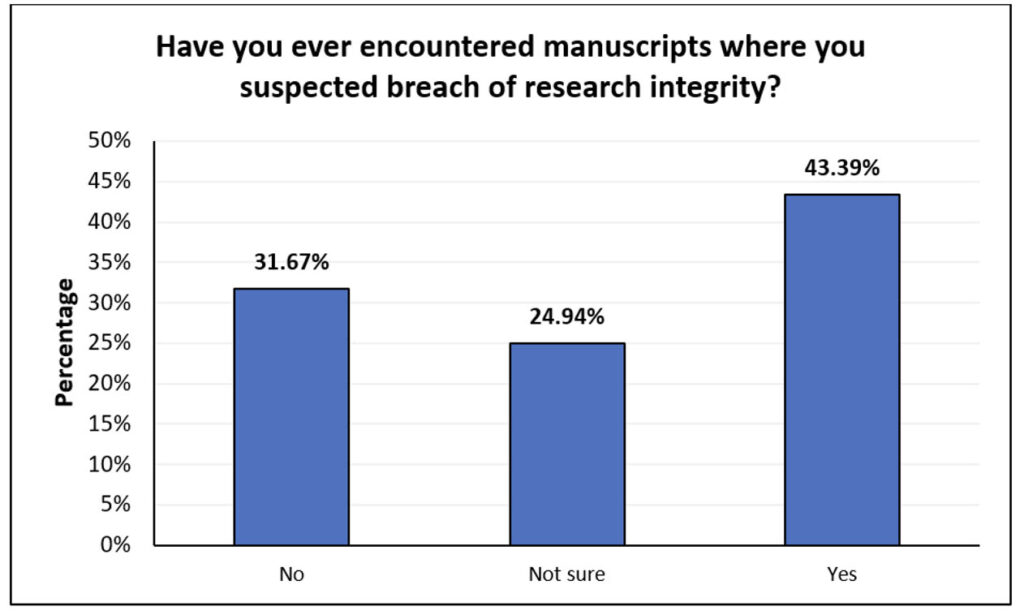

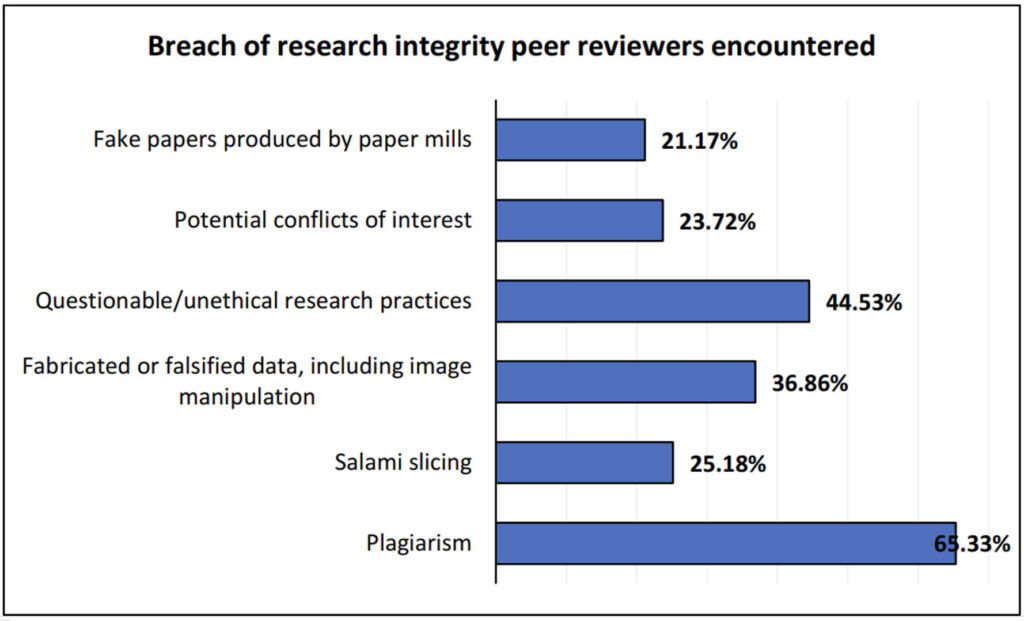

Additionally, the survey investigated the frequency of reviewers observing potential breaches of research integrity. Out of the 852 participants, 401 engaged in peer review, with 43.39% encountering breaches of research integrity. This highlights the gravity of the issue, emphasizing that it should not be trivialized. Given its prevalence, the industry needs to strategize ways to enhance the rigor and efficiency of the review process.

Grasping the nature of these incidents is vital for addressing weaknesses in the existing peer review system and investigating potential avenues for improvement.

The survey revealed that 65.33% of respondents identified cases of plagiarism, and 44.53% detected instances of questionable or unethical research practices.

Does this suggest a misalignment between the feedback given by reviewers and the criteria that peer reviewers are supposed to adhere to when evaluating manuscripts? Are reviewers concentrating on the appropriate aspects? How can the reviews be adjusted to align with the necessary criteria for manuscript acceptance?

Here are possible solutions and considerations:

- Optimizing Reviewer Feedback:

How can we ensure reviewer comments effectively align with the broader goals of peer review to facilitate a more efficient evaluation process? What role do clear expectations regarding the type and depth of comments play in standardizing the peer review system? Is it crucial to focus specifically on aspects influencing research quality to avoid providing unnecessary feedback that does not align with manuscript acceptance criteria? By trying to fix unexpected problems, could reviewers miss important things because they’re too focused on tiny details? Ultimately, how does this contribute to enhancing the overall quality and integrity of scholarly publications? Addressing these questions can help optimize the peer review process, promoting consistency, relevance, and constructive feedback.

- Roles and Responsibilities:

In what ways do clear communication and collaborative efforts play a crucial role in ensuring an efficient and transparent review procedure? How does a mutual comprehension of respective roles uphold the integrity of the research and enhance overall efficiency? What can be done to foster a seamless and effective peer review experience, where each stakeholder contributes to the shared goal of maintaining research integrity and advancing scholarly discourse?

- AI-driven Transformations:

How does the transformative potential of AI-driven systems impact different facets of the peer review process? In what ways does the automation of tasks, such as manuscript screening, reviewer selection, and language enhancement, enhance or detract from the efficiency of the review workflow? Can AI’s contribution extend beyond efficiency to encompass improvements in the overall quality and objectivity of the peer review process? In what ways can AI serve as a positive complement to, rather than a substitute for, human expertise?

- Reviewer Training and Guidelines:

What comprehensive training programs can be implemented to cover both technical and ethical considerations of peer review effectively? In what ways do standardized guidelines contribute to maintaining consistency and fairness during the peer review assessment process? How might we incentivize high-quality and ethical peer review to foster a culture of excellence, possibly through recognition and rewards?

- Data Privacy and AI Integration:

Rigorous measures to protect data privacy become imperative with the implementation of AI in the peer review process. What measures can be implemented to ensure the safeguarding of sensitive information throughout peer review, especially when considering more reliance on AI-driven tools? How do we strike a delicate equilibrium between leveraging the benefits of AI and prioritizing the protection of data privacy? In what ways can the confidentiality and security of sensitive information during the peer review process be maintained?

Optimizing Peer Review Participants – both human and machine

In charting the course forward, it is important to maintain a delicate balance between AI capabilities and human expertise in shaping the peer review system. This balance is the linchpin that fortifies the effectiveness and trustworthiness of the scholarly evaluation process. The continued growth in publication volume makes it essential that peer review time be spent in the most efficient and effective ways possible.

The efforts of peer reviewers can be supported and enhanced through the collaborative integration of AI tools. AI must not be seen as a replacement but as a powerful complement to human judgment, preserving the indispensable nuanced insights provided by human peer reviewers. As AI-driven tools strategically enhance quality control and detect plagiarism, image manipulation, and data falsification, they have the potential to contribute to a more rigorous evaluation of scholarly submissions. However, the ethical considerations surrounding AI, including addressing algorithmic bias and ensuring data privacy, are pivotal.

Establishing comprehensive guidelines and proactive measures becomes the bedrock for the responsible use of AI in peer review. As we embark on this transformative journey, the question remains: How can we further refine and advance this collaborative synergy between AI and human judgment to foster an even more efficient, objective, and ethically robust peer review process?

Discussion

12 Thoughts on "Unveiling Perspectives on Peer Review and Research Integrity: Survey Insights"

Thank you for sharing your questionnaire results Roohi. It is very clear that research integrity screening – and indeed arguably data quality control screening – cannot be delegated to academic referees who lack training and access to relevant tools (not to mention time). The answers in the questionnaire are probably partially down to how the question is asked, as ‘peer review’ in fig. 1 is typically conflated with ‘Journal quality control processes’ – it has to be made clear that peer review is just one component of the process. Similarly, I assume the responses on the integrity issues referees came across (fig 3) were not always identified by them during standard peer review in the first instance.

The wording of the original question (https://cdn.editage.com/insights/editagecom/production/2023-09/CACTUS-Peer-Review-Week-2022-Research-Integrity-Survey-Report.pdf) is revealing. The participants were asked: “In your view, peer review should be able to identify which of these problems?”. The report authors and the commenters here seem to interpret the results in terms of the duties of a peer reviewer, but the reference to opinion and the ambiguous use of the word “should” in the question may have conflated a swathe of related opinions and understandings relating to both how peer review is expected to work and how it actually works in practice.

We know from experience that by reviewing a paper in detail, flagrant research integrity issues sometimes surface. I expect some respondents reported what can be detected (as in “sure, if you review a paper properly, you should be able to detect some integrity issues”), not what we should rely on peer review to detect. From the reported information, I don’t really see that these two possibilities can be parsed, which leads me to question how the results have been interpreted.

I want to highlight that the ambiguity in the question was intentional, as we aimed to capture not only respondents’ perceptions of what peer review should entail but also their understanding of how it actually functions in practice. By examining the dissonance between these two perspectives, we hoped to gain valuable insights into areas where there may be discrepancies or misunderstandings. Your point about the dual roles of respondents as both authors and reviewers is particularly pertinent. The survey results not only shed light on what individuals believe should be the responsibility of reviewers but also offer insights into authors’ expectations and perceptions of the peer review process. This dual perspective underscores the importance of providing adequate training and education to both authors and reviewers to bridge the gap between expectations and reality.

Thank you for your response to my article and for highlighting important considerations regarding the questionnaire results. I’d like to clarify that the survey was designed to capture perspectives from both peer reviewers and authors. Consequently, some of the responses may indeed reflect a sort of merging of “peer review” with “journal quality control processes,” as you noted. This observation underscores a significant aspect of the current landscape: the need for clearer delineation of roles and responsibilities between authors and reviewers. Your point about the confusion surrounding these roles is particularly salient. Authors, who later transition into the role of reviewers, must be adequately educated about the distinctions between peer review and journal quality control processes. By ensuring authors understand these differences, we can promote greater clarity and effectiveness in the peer review process overall.

Am I the only one who feels like the respondents do not fully understand the role of peer review? It is not the role of peer reviewers to uncover fabricated data or image manipulation or conflicts of interest. Certainly they can (and have), and it’s appreciated when they do, but it’s not their primary role. Also, submission systems used include tools that help screen for plagiarism but again, not the main purpose of peer review. The primary purpose of peer review is to assess the research submitted, not to uncover bad actors. Again, it is great when it does, but IMO these are things which fall primarily to the editorial office and/or publishers, not to peer reviewers. Now, if we are considering everything that occurs prior to publication “peer review” that’s a different story. Curious to hear what others think!

Absolutely agree – hence also my earlier comment. Referees cannot be expected to – nor can they – assess research integrity issues systematically. It is appreciated if they alert editors to potential issues of course. The same goes for many academic editors who lack training, experience, tools. It is crucial to distinguish between assessment of the ‘scientific plausibility of data’ (typical peer review in the biosciences), ‘technical peer review of source data’ (still very rare but important) and assessment of integrity.

Agreed, this survey seems like it is probably fairly ambiguous so the respondents were probably a bit confused.

We published a bit more of a systematic piece on this, I would love to know Roohi’s thoughts on this:

Is the future of peer review automated?

Schulz R, Barnett A, Bernard R, Brown NJL, Byrne JA, Eckmann P, Gazda MA, Kilicoglu H, Prager EM, Salholz-Hillel M, Ter Riet G, Vines T, Vorland CJ, Zhuang H, Bandrowski A, Weissgerber TL.

BMC Res Notes. 2022 Jun 11;15(1):203. doi: 10.1186/s13104-022-06080-6.

PMID: 35690782

Of course, during the pandemic the issue was that we were putting a lot of information out without any review, so the bots were the only way to get any review:

Automated screening of COVID-19 preprints: can we help authors to improve transparency and reproducibility?

Weissgerber T, Riedel N, Kilicoglu H, Labbé C, Eckmann P, Ter Riet G, Byrne J, Cabanac G, Capes-Davis A, Favier B, Saladi S, Grabitz P, Bannach-Brown A, Schulz R, McCann S, Bernard R, Bandrowski A.

Nat Med. 2021 Jan;27(1):6-7. doi: 10.1038/s41591-020-01203-7.

PMID: 33432174

I went through the paper and found the insights very interesting. Thank you for sharing!

Exactly! That’s what the survey brought to the fore: that not everyone, especially authors, may understand the role of peer review.

Thank you,

I think that there is a lack of clarity, but also that we really can’t offload work to peer reviewers that they are untrained, unpaid, and largely unwilling to do.

Professionals that work for publishers can do this type of work and have been doing it, though the training may also be an issue because these individuals may not be as knowledgeable about the science being described.

The solution that we proposed was to have trained AI bot(s) that can lend a hand in the peer review process where reviewers and editorial staff are not going to look, e.g., in tasks that are usually too tedious for humans, such as following a checklist or making sure that all catalog numbers are accurate for reagents.

Undoubtedly AI will (is!) dramatically alter(ing) how we quality control and indeed peer review papers, but there is still a clear difference between 1) classical peer review (mostly a high-level plausibility assessment; AI helps assess conceptual advance in this context); 2) technical peer review (requires actual data to analyze, rarely found currently at journals; AI helps with data analysis e.g. stats); 3) Quality control/curation (data structure & deposition, protocols/method reporting etc.; AI assists in checklist compliance); 4) Research integrity screening (data, images; AI tools already assist, currently mostly at the level of duplications). These are all very different activities that require different skillsets and different tools. They should not be conflated. Needless to say, very few journals currently achieve this.