Since its launch earlier this year, Scopus AI has been positively reviewed in several forums, including the Scholarly Kitchen back in February. To learn a bit more about the product, I had an exchange with Maxim Khan about how Scopus AI was developed and how it functions. Max is Senior Vice President of analytics products and data platform at Elsevier, and he leads the team that developed Scopus AI. We discussed the underlying technology behind this new generative AI product, some of the principles behind its development, and how Elsevier envisions it can help researchers with summaries and potential research insights. Here is a transcript of some of that exchange.

First, as an introduction for readers who don’t know Scopus AI: can you tell us a bit about it and what it offers users beyond the existing Scopus service?

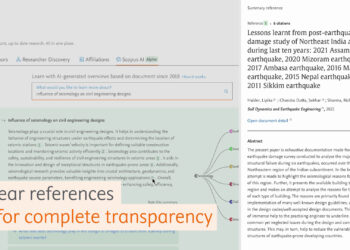

Scopus AI is a new generative artificial intelligence product that Elsevier launched in January. It extends the functionality of Scopus, a comprehensive, multidisciplinary and trusted abstract and citation database. Its development was motivated by two pieces of feedback we kept hearing: researchers wanted help navigating the growing volume of research articles, and help getting to grips with new research areas. This was especially true for those working across disciplines. The emergence of large language models (LLMs) and generative AI allowed us to meet these needs even better and to complement existing functionality on Scopus.

Scopus AI was developed in close collaboration with the research community and helps users in several ways:

- Expanded Summaries: Providing quick overviews of key topics for deeper exploration, sometimes exposing gaps in the literature.

- Foundational and Influential Papers: Helping researchers to quickly identify key publications.

- Academic Expert Search: Identifying leading experts in their fields and clarifying their expertise in relation to the user’s query, saving valuable time.

Scopus AI combines Scopus’ curated content and high-quality linked data with cutting-edge generative AI technology to help researchers.

Can you tell us a bit about the technology that underpins Scopus AI? What is unique about this approach?

Scopus’ trusted content is the foundation of Scopus AI: over 29,200 active serial titles from over 7,000 publishers worldwide, 2.4 billion citations and over 19.6 million author profiles. Content is rigorously vetted by an independent review board of world-renowned scientists, researchers and librarians who represent the major scientific disciplines.

Scopus AI uses a custom retrieval-augmented generation (RAG) architecture, including its own models and prompts, built on the abstracts in Scopus. It consists of a search module, a reranking step and a large language model (LLM) module for text generation.

The search module uses “vector search”: small language models convert the words and sentences of a query into numerical vectors that position them in a shared vector space, so that abstracts can be matched to the query by meaning rather than by exact keywords. The results of the vector search are then fed into our large language model, along with your original query. Prompt engineering gives the LLM clear rules it must follow while generating the Scopus AI response.
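To make the vector-search idea concrete, here is a minimal Python sketch, assuming the query and the abstracts have already been turned into vectors by some embedding model. The three-dimensional vectors, the document IDs and the helper names `cosine_similarity` and `vector_search` are hypothetical illustrations, not part of Scopus AI; real embeddings have hundreds of dimensions and are usually searched with an approximate-nearest-neighbour index rather than a full scan.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # a zero vector has no direction to compare
    return dot / (norm_a * norm_b)


def vector_search(
    query_vec: list[float], doc_vecs: dict[str, list[float]], top_k: int = 3
) -> list[tuple[str, float]]:
    """Return the top_k (doc_id, score) pairs, most similar to the query first."""
    scored = [(doc_id, cosine_similarity(query_vec, vec)) for doc_id, vec in doc_vecs.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


# Hypothetical toy "embeddings" standing in for real model output.
docs = {
    "abstract_A": [0.9, 0.1, 0.0],
    "abstract_B": [0.1, 0.9, 0.0],
    "abstract_C": [0.7, 0.3, 0.1],
}
query = [1.0, 0.0, 0.0]

print(vector_search(query, docs, top_k=2))
```

With the query vector pointing along the first axis, `abstract_A` ranks first and `abstract_C` second, while `abstract_B`, which points mostly along another axis, is left out. Cosine similarity is a common choice for comparing embeddings because it compares direction only and ignores vector length.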

We are using OpenAI’s GPT large language model, the model family behind ChatGPT, hosted on a private cloud provided by Microsoft Azure. We have an agreement in place with Microsoft that ensures all data is stored in a protected and private environment exclusive to us and not shared with other parties. This is an important feature of our implementation, designed to give both data publishers and authors privacy and peace of mind.

You’re using a newer variant of the RAG technique, called RAG Fusion. Since most readers won’t be familiar with these techniques, can you first briefly describe what RAG is and how it works?

Simple RAG combines a retrieval model, which searches large datasets or knowledge bases, with a generation model, such as a large language model (LLM), that produces readable text responses. This improves the relevance of responses by adding context from additional data sources, supplementing the knowledge the LLM acquired during training.
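The retrieve-then-generate loop can be sketched in a few lines of Python, under loud assumptions: the retriever is a toy word-overlap ranker standing in for vector search, the prompt wording is invented, and `llm` is any function that takes a prompt string and returns text (a placeholder for a real model call). None of these names come from Scopus AI.

```python
from typing import Callable


def retrieve(query: str, corpus: dict[str, str], top_k: int = 2) -> list[tuple[str, str]]:
    """Toy retriever (stand-in for vector search): rank passages by shared words."""
    terms = set(query.lower().split())

    def overlap(text: str) -> int:
        return len(terms & set(text.lower().split()))

    # sorted() is stable, so passages with equal overlap keep corpus order.
    ranked = sorted(corpus.items(), key=lambda item: overlap(item[1]), reverse=True)
    return ranked[:top_k]


def build_prompt(query: str, passages: list[tuple[str, str]]) -> str:
    """Augment the query with retrieved passages plus rules for the generator."""
    context = "\n".join(f"[{doc_id}] {text}" for doc_id, text in passages)
    return (
        "Answer using only the sources below and cite them by ID. "
        "If they do not answer the question, say so.\n\n"
        f"Sources:\n{context}\n\nQuestion: {query}"
    )


def rag_answer(query: str, corpus: dict[str, str], llm: Callable[[str], str]) -> str:
    """Retrieve relevant passages, build an augmented prompt, then generate."""
    passages = retrieve(query, corpus)
    return llm(build_prompt(query, passages))


# Hypothetical mini-corpus of abstracts.
corpus = {
    "doc1": "graphene batteries improve energy density",
    "doc2": "coral reef bleaching and ocean temperature",
    "doc3": "solid state batteries for electric vehicles",
}

# An "echo" model returns the prompt unchanged, so we can inspect what the LLM would see.
print(rag_answer("advances in batteries energy density", corpus, llm=lambda prompt: prompt))
```

Passing the echo function as `llm` shows the augmented prompt: it contains `doc1` and `doc3`, the two passages sharing the most words with the query, followed by the question. In a real system the echo would be replaced by a call to a hosted model.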

Scopus AI incorporates Retrieval-Augmented Generation Fusion (RAG Fusion) in its search module. This pioneering search method enhances the depth and perspective of a query by merging results from expanded queries. When a user enters a search query, variations of that query are automatically generated to help improve accuracy. Vector search is then run against each of these queries, the results are merged and ranked using a reciprocal rank fusion algorithm, and the summary is generated from the top-ranked results.

Scopus AI was built in-house, and we have shared this technique with the community. RAG Fusion significantly increases the depth and breadth of perspective brought to a query, allowing the system to identify blind spots and provide a more comprehensive and nuanced answer.
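The merging step of RAG Fusion can be sketched with reciprocal rank fusion (RRF), which scores each document as the sum of 1 / (k + rank) over every ranked list it appears in. This is a minimal Python sketch, not Scopus AI’s code: the three result lists are hypothetical stand-ins for vector-search results on the original query and two generated variations, the LLM steps (generating the variations and writing the summary) are omitted, and `k = 60` is the constant commonly used in the RRF literature rather than a value Elsevier has disclosed.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[tuple[str, float]]:
    """Merge ranked lists: each document scores sum(1 / (k + rank)), with ranks starting at 1."""
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Highest fused score first; ties keep the order in which documents were first seen.
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


# Hypothetical vector-search results for the original query and two generated variations.
rankings = [
    ["A", "B", "C"],  # original query
    ["B", "A", "D"],  # variation 1
    ["B", "C", "E"],  # variation 2
]

fused = reciprocal_rank_fusion(rankings)
print([doc_id for doc_id, _ in fused])
```

`B` comes out on top because all three lists rank it highly, even though it is not first for the original query; rewarding that kind of agreement across query variations is the point of fusing them. `D` and `E` tie, and Python’s stable sort keeps them in the order they were first seen.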

Scopus AI is currently generating results based on the past 11 years of content, from 2013 onward. Why was this break chosen?

We felt that this cutoff balanced relevance, recency and depth of results well. It is a starting point, and we will expand the corpus of content over time. While Scopus AI primarily uses content from 2013 onward to generate responses, it’s important to note that the foundational papers it identifies are drawn from the entire Scopus corpus. There was no technical reason for the cutoff: a RAG system can, in principle, be applied to data at any scale.

Elsevier has adopted a set of principles governing its use of AI systems. First, can you give people a brief overview of those principles?

For more than a decade, Elsevier has been using AI and machine learning technologies in its products. At every step of development, from design through to deployment of machine-driven insights, Elsevier’s Responsible AI Principles are deeply embedded in the process. Customer experience, data privacy and integrity, and responsible AI are at the heart of Elsevier’s product development.

Next, I’d like to dig into a few of those principles and their role vis-à-vis Scopus AI, starting with privacy. To what extent is the system improving based on user interactions?

It’s worth reiterating that we use OpenAI’s GPT large language model technology in a private cloud environment. No data is exchanged with OpenAI, and the cloud provider doesn’t use the data outside of our environment.

Respecting and protecting user data and privacy are key priorities at Elsevier, as maintaining the trust of the people who use our products and services is critically important to us. We are committed to behaving with integrity and responsibility regarding data privacy. Our Privacy Principles guide our approach to personal data, and further information is available here.

Bias is another important element of your principles. How have you sought to address bias in the training of the models you’re using? Obviously, you can’t influence bias in the base model, ChatGPT; I’m asking about the small language model you trained yourselves to support the RAG system.

Bias is indeed a crucial element of our principles. To address bias in the training of our models, particularly our own Small Language Models (SLMs) that support the RAG system, we have implemented several measures:

- Data Source Integrity: We ground our responses in the trusted and vetted Scopus database, ensuring that the content is reliable and peer reviewed.

- Prompt Engineering: Our prompt engineering process is designed to identify and mitigate potential biases by crafting prompts that guide the model to consider diverse perspectives and avoid reinforcing stereotypes.

- Bias Detection and Mitigation Tools: We employ tools to test and detect harmful biases in our AI-generated content.

- Continuous Monitoring and Feedback: We have established an evaluation framework that continuously monitors the model’s outputs for coherence, quality, and bias. This framework draws on a wide range of perspectives and disciplines. By including diverse sources and viewpoints, we aim to minimize the risk of bias in the model’s responses.

- Focus on Harmful Bias: While it is impossible to eliminate all biases, not least because biases can exist in the academic literature itself, we focus extensively on identifying and mitigating harmful biases. Active testing aims to elicit and address harmful responses, so that the model remains as fair and unbiased as possible.

Through these measures, we strive to provide more accurate information with reduced bias.

Earlier this year, I wrote about the need for caution when ceding reading to machines. I’m interested to hear your perspective on the potential pitfalls of this approach. What should users be wary of as they approach these tools? What would you say to assuage users’ fears?

We have developed ethics, bias and toxicity evaluation functions as part of how we evaluate Scopus AI.

Accuracy in AI-generated content is crucial, especially for academic research. Hallucinations (instances of AI generating non-factual or unverifiable information) can significantly undermine the value of AI tools. We have hallucination evaluation checks in place to determine whether a response contains misinformation.

Obviously, you’re doing a lot to improve this system. Can you tell us a bit about your process to engage users? How are you using human experts to improve the system?

Product innovation is an iterative process of testing, learning and refining. We released a very early version of the product, or ‘alpha’, to existing Scopus users who were randomly selected to take part in testing. This helps us obtain feedback from a large cohort through webinars, one-to-one user interviews and focus groups. That live community feedback informs continuous enhancements across all areas:

- User experience validation: Feedback gathered during testing helps us refine the product interface, streamline workflows and enhance user experience and satisfaction.

- Performance testing: Real-world usage scenarios during testing also help assess performance under different conditions, such as varying network speeds, hardware configurations, or usage patterns. This enables us to optimize performance and ensure the stability of the final launched product.

- Feature validation: Testing validates whether the implemented features meet users’ needs and expectations. This feedback helps us prioritize and refine functionality, and eliminate unnecessary or redundant features.

- Quantitative engagement metrics: User satisfaction scores on individual features help drive development and validation.

- Validation of compatibility: Users will be working in different environments with different operating systems, devices, configurations. Testing helps ensure the software functions correctly across various platforms and configurations.

How can users of Scopus get engaged in the process of testing and improving the system?

The needs and insights of the research community are at the heart of product innovation. We continually welcome feedback from customers and individual users. People can register their interest in Scopus AI and get further information at: https://www.elsevier.com/en-gb/products/scopus/scopus-ai

Discussion

3 Thoughts on "A Look Under the Hood of Scopus AI: An Interview with Maxim Khan"

According to our analysis, Scopus is not a reliable and credible abstracting database. Please read our story “Elsevier Unethically Promotes its Journals via Scopus: The Case of Heliyon” here to learn how Scopus is scamming: https://scholarlycritic.com/elsevier-unethically-promotes-its-journals-via-scopus-case-of-heliyon.html

What a strange article. You do realize that the conflict of interest law you cite applies only to US government employees, right? Not privately held companies?

Regardless, if Scopus did allow an Elsevier journal to jump the line for indexing, it wouldn’t be the first time that’s ever happened:

https://scholarlykitchen.sspnet.org/2012/10/22/somethings-rotten-in-bethesda-the-troubling-tale-of-pubmed-central-pubmed-and-elife/

https://scholarlykitchen.sspnet.org/2012/11/12/how-valuable-is-pubmed-centrals-early-publication-of-elife-content/

https://scholarlykitchen.sspnet.org/2013/02/06/answers-finally-how-pubmed-central-came-to-help-launch-and-initially-publish-elife/

https://scholarlykitchen.sspnet.org/2013/10/15/pubmed-central-and-elife-new-documents-reveal-more-evidence-of-impropriety-and-bias/

Hello, thank you for your comment. We cited conflict of interest law as an example, just to make people realize that such practices are unacceptable not only legally but also ethically. Did you know that every author has to submit a conflict of interest statement to academic journals? Most authors are not government employees. Companies should also consider conflict of interest situations, and responsible companies do. A publisher of journals operating an indexing/abstracting database is a clear conflict of interest, as we identified that Scopus earned a hell of a lot of money just by indexing Heliyon in its database without following its own quality criteria. Keeping this instance in mind, we will assume that Scopus doesn’t apply the inclusion criteria it imposes on non-Elsevier journals to journals published by Elsevier (its parent company). In another of our stories, we proved that Heliyon is publishing papers without any credible peer review or quality control practices. For more details, please visit: https://scholarlycritic.com/heliyon-ataei-etal-2024-instance-of-relaxed-nescient-peer-review.html