Editor’s Note: Today’s post is by Lutz Bornmann and Christian Leibel. Lutz and Christian are sociologists of science in the Administrative Headquarters of the Max Planck Society in Munich. Christian is also a doctoral student at the LMU Munich.

Data sonification, the process of translating data into sound, has emerged as a compelling complement to traditional data visualization. By mapping data attributes (e.g., magnitude, frequency, and temporal patterns) to auditory parameters such as pitch, volume, and timbre, sonification leverages the human auditory system’s acute sensitivity to temporal changes and complex patterns. Rooted in early applications such as the Geiger counter, the interdisciplinary field of data sonification integrates acoustics, psychology, and engineering to transform data exploration, monitoring, and communication.
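To make the idea of parameter mapping concrete, here is a minimal Python sketch (standard library only) that turns a list of numbers into a sequence of sine tones, mapping each value's magnitude to both pitch and volume. The frequency range (220 to 880 Hz), the note length, and the function names `normalize`, `sonify`, and `to_wav_bytes` are illustrative assumptions, not part of any established sonification toolkit.

```python
import io
import math
import struct
import wave

SAMPLE_RATE = 44_100  # samples per second

def normalize(value, lo, hi):
    """Map value from [lo, hi] onto [0, 1]; constant data maps to the midpoint."""
    return 0.5 if hi == lo else (value - lo) / (hi - lo)

def sonify(values, note_seconds=0.25, f_min=220.0, f_max=880.0):
    """Return 16-bit PCM samples with one sine tone per data value.

    Hypothetical mapping: larger values give higher pitch and louder tones.
    """
    lo, hi = min(values), max(values)
    frames_per_note = int(SAMPLE_RATE * note_seconds)
    samples = []
    for v in values:
        x = normalize(v, lo, hi)
        freq = f_min * (f_max / f_min) ** x  # magnitude -> pitch (log-frequency scale)
        amp = 0.3 + 0.6 * x                  # magnitude -> volume (0.3 to 0.9 of full scale)
        for t in range(frames_per_note):
            s = amp * math.sin(2 * math.pi * freq * t / SAMPLE_RATE)
            samples.append(int(s * 32767))
    return samples

def to_wav_bytes(samples):
    """Pack the samples into an in-memory mono 16-bit WAV file."""
    buf = io.BytesIO()
    with wave.open(buf, "wb") as w:
        w.setnchannels(1)
        w.setsampwidth(2)  # 2 bytes = 16 bits per sample
        w.setframerate(SAMPLE_RATE)
        w.writeframes(struct.pack(f"<{len(samples)}h", *samples))
    return buf.getvalue()
```

Writing `to_wav_bytes(sonify([3, 1, 4, 1, 5]))` to a file with a `.wav` extension yields a short melody that rises and falls with the data. Mapping values onto a logarithmic frequency scale reflects the fact that equal musical intervals correspond to equal frequency ratios rather than equal differences in hertz; a production tool would also smooth note onsets to avoid audible clicks.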

While visualization dominates the scientific discourse, data sonification offers unique advantages: it enhances accessibility for visually impaired researchers, supports multitasking in environments where visual attention is limited (e.g., operating theaters), and enables intuitive pattern recognition in temporal datasets. Projects like Microbial Bebop – which sonifies oceanic microbial data into jazz – demonstrate the potential of data sonification for public engagement, bridging science and art. However, challenges persist, including the need to train listeners to interpret sonified data and the lack of standardized sonification methods.

Metrics sonification, a specialized application, adapts data sonification to bibliometrics – the quantitative study of scientific output and impact. Lutz Bornmann and Rouven Lazlo Haegner introduced metrics sonification in a first application that translated publication and citation metrics into auditory sequences to honor the late Loet Leydesdorff, a giant in the field of scientometrics. Metrics sonification provides alternative data representations for diverse audiences. By converting abstract numerical trends into perceptible soundscapes, it can foster novel insights, particularly in bibliometrics, a field dominated by visual graphs.

Although bibliometrics has become an essential tool in the evaluation of research performance, bibliometric analyses are sensitive to a range of methodological choices. Seemingly minor choices regarding the database, the indicator variant, or the regression model specification can substantially alter results. Ensuring robustness – meaning that findings hold up under different reasonable scenarios – is therefore critical for credible research evaluation. Of particular importance is the specification of bibliometric indicators. The difficulty of connecting the epistemic properties of research publications with empirical quantities makes finding appropriate indicators challenging. This may lead to situations in which several equally defensible variants of a bibliometric indicator yield incompatible results. We refer to this methodological challenge as “specification uncertainty”.

The problematic consequences of specification uncertainty become particularly apparent when analysts arrive at contradictory answers to the same research question. Such is the case in the recent discussion between Lingfei Wu and coauthors and Alexander Michael Petersen and coauthors. In a highly cited article in Nature, Wu and coauthors used bibliometric metadata to explore how the increasing prevalence of large research teams in science affects research output. Their study marked the first application of the disruption index – a bibliometric measure of scientific innovation. According to Wu and coauthors, there is a clear distinction between the roles of large and small teams in scientific progress: while large teams predominantly focus on established scientific questions, small teams are more likely to initiate new directions in scientific discovery. Contradictory evidence was reported by Petersen and coauthors, who claim to have refuted the hypotheses of Wu and coauthors by using a superior estimation strategy as well as an alternative definition of the disruption index.
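For readers unfamiliar with the disruption index, the sketch below computes its standard formulation for a single focal paper: D = (n_i - n_j) / (n_i + n_j + n_k), where n_i counts later papers that cite the focal paper but none of its references, n_j counts later papers that cite both the focal paper and at least one of its references, and n_k counts later papers that cite at least one of the references but not the focal paper. Values range from -1 (consolidating) to +1 (disruptive). The data layout and the function name are assumptions for illustration; the alternative definitions discussed in this post are not reproduced here.

```python
import math

def disruption_index(focal, references, later_papers):
    """Compute the disruption index of one focal paper.

    focal:        identifier of the focal paper
    references:   set of identifiers cited by the focal paper
    later_papers: dict mapping each paper published after the focal paper
                  to the set of identifiers it cites (filtering by date,
                  e.g. a fixed citation window, is left to the caller)
    """
    n_i = n_j = n_k = 0
    for cited in later_papers.values():
        cites_focal = focal in cited
        cites_refs = not cited.isdisjoint(references)
        if cites_focal and not cites_refs:
            n_i += 1  # builds on the focal paper alone
        elif cites_focal and cites_refs:
            n_j += 1  # builds on the focal paper and its predecessors
        elif cites_refs:
            n_k += 1  # bypasses the focal paper
        # papers citing neither the focal paper nor its references are ignored
    total = n_i + n_j + n_k
    return (n_i - n_j) / total if total else math.nan
```

Because the result depends on which later papers are counted, changing the citation window alone can change a paper's score, which is one reason the exact definition of the index matters.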

In our recent article, we add to the literature on specification uncertainty by proposing that the uncertainty of bibliometric analyses should be made transparent via multiverse analysis. Multiverse analysis is an umbrella term for a set of statistical methods, proposed by researchers in fields such as clinical epidemiology, the social sciences, psychology, and sociology, that share the goal of strengthening the robustness of research outcomes. A multiverse analysis begins by defining a set of model specifications that can be regarded as equally valid. It then estimates every specification and visualizes the resulting estimates to communicate the robustness (or lack thereof) of the findings to readers (and policy makers).
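The core mechanics of a multiverse analysis can be sketched in a few lines of Python: enumerate every combination of defensible analytic choices and collect one estimate per specification. In this minimal sketch, the decision names in `example_choices` and the `estimate` callable are hypothetical placeholders; a real analysis would fit a regression model inside `estimate`. A full grid of combinations is the simplest design, but a multiverse need not include every combination, and this sketch does not claim to reproduce how the 62 models discussed here were chosen.

```python
from itertools import product

def run_multiverse(choices, estimate):
    """Estimate every combination of analytic choices.

    choices:  dict mapping each decision to its list of defensible options
    estimate: callable that takes one specification (a dict) and returns
              an effect size, e.g. a regression coefficient
    Returns a list of (specification, estimate) pairs.
    """
    names = list(choices)
    results = []
    for combo in product(*(choices[name] for name in names)):
        spec = dict(zip(names, combo))
        results.append((spec, estimate(spec)))
    return results

# Hypothetical decision space: 3 x 2 x 2 = 12 specifications.
example_choices = {
    "citation_window_years": [3, 5, 10],
    "index_variant": ["variant_a", "variant_b"],
    "model": ["ols", "negative_binomial"],
}
```

Keeping the specification dict next to each estimate makes it easy to trace any surprising result back to the exact combination of choices that produced it.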

We investigated the divergent results of Wu and coauthors and Petersen and coauthors with a set of 62 regression models that includes several alternative specifications of the disruption index as well as variations in several modeling assumptions. Even though we considered only relatively minor modifications of the disruption index, the multiverse analysis revealed that the results depend on the exact definition of the index (e.g., the time of measurement). Presenting results based on just one, or only a few, variants of the index may therefore not give readers an accurate impression of the possible range of effect sizes. The multiverse analysis revealed team effects ranging from small negative to very small positive, which have contradictory implications for science policy decisions.
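Once all specifications have been estimated, the spread of effect sizes can be summarized as a sorted specification curve plus a few descriptive statistics, such as the range and the shares of negative and positive estimates. This minimal sketch assumes the estimates are plain numbers; the function name and output fields are illustrative choices, and any example values fed into it are hypothetical rather than the results of the study.

```python
from statistics import median

def summarize_multiverse(estimates):
    """Describe the spread of effect sizes across all specifications."""
    if not estimates:
        raise ValueError("need at least one estimate")
    curve = sorted(estimates)  # ascending order, as plotted in a specification curve
    n = len(curve)
    return {
        "n_specifications": n,
        "min": curve[0],
        "max": curve[-1],
        "median": median(curve),
        "share_negative": sum(e < 0 for e in curve) / n,
        "share_positive": sum(e > 0 for e in curve) / n,
        "curve": curve,
    }
```

When both shares are well above zero, as with estimates ranging from small negative to very small positive values, the sign of the effect itself depends on the specification.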

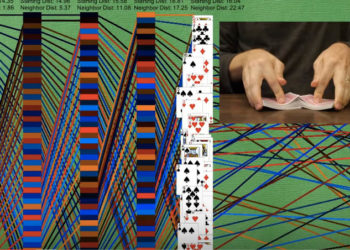

In our article, we included a visualization of the 62 team effect sizes from the multiverse analysis. To demonstrate to the bibliometrics community the potential of metrics sonification as an alternative to visualization, we composed a track that sonifies the team effect sizes from the multiverse analysis. We mapped the effect sizes to the sound parameter “pitch”, with the lowest tone assigned to the model of Wu and coauthors and the highest tone to the model of Petersen and coauthors. The track is composed in C major. To compose it, we used the software Ableton Live in combination with sonification tools from Manifest Audio. The track can be heard on SoundCloud. Like the visualization in our article, the track demonstrates the large differences between the model outcomes. Because the track was composed as a self-contained product, it includes not only the sonified effect sizes but also spoken narration that explains the background of our study. We are grateful to Marc Rossner, a native English speaker, who narrates the background information. We are also grateful to Rouven Lazlo Haegner, who mastered (optimized) the track for listening on different platforms.
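The post does not describe the exact mapping used in Ableton Live, so the following is only a minimal sketch of one way to map effect sizes to pitches restricted to the C major scale: each estimate is scaled linearly between the smallest and largest effect size and then rounded to the nearest step of the scale, so the smallest estimate receives the lowest tone and the largest the highest. The MIDI note range (48 to 84, i.e., C3 to C6 under the convention that MIDI 60 is middle C) and all function names are assumptions.

```python
C_MAJOR_PITCH_CLASSES = {0, 2, 4, 5, 7, 9, 11}  # C D E F G A B
NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]

def c_major_notes(low_midi, high_midi):
    """All MIDI note numbers in [low_midi, high_midi] that belong to C major."""
    return [m for m in range(low_midi, high_midi + 1)
            if m % 12 in C_MAJOR_PITCH_CLASSES]

def effects_to_midi(effects, low_midi=48, high_midi=84):
    """Map each effect size to a C major MIDI note; larger effect -> higher pitch."""
    notes = c_major_notes(low_midi, high_midi)
    lo, hi = min(effects), max(effects)
    midi = []
    for e in effects:
        x = 0.5 if hi == lo else (e - lo) / (hi - lo)    # position in [0, 1]
        midi.append(notes[round(x * (len(notes) - 1))])  # nearest step of the scale
    return midi

def midi_name(m):
    """Note name using the convention that MIDI 60 is C4 (middle C)."""
    return f"{NOTE_NAMES[m % 12]}{m // 12 - 1}"
```

Restricting pitches to one scale keeps the result consonant at the cost of resolution: with the 22 notes available in the default range, estimates that differ only slightly may share a pitch.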

Our metrics sonification demonstrates that the 62 coefficients from the multiverse analysis vary substantially depending on the specification of the disruption index and several modeling assumptions. In terms of practical relevance, it seems appropriate to conclude from the results that small and large teams contribute about equally to scientific progress. Beyond the mechanisms through which research teams generate their research output, our results have methodological implications. As specification uncertainty is almost omnipresent in empirical bibliometric research, multiverse analysis may strengthen the robustness of bibliometric analyses by reducing the risk that research outcomes and their policy implications hinge on (more or less) arbitrary modelling assumptions. Future research could contribute to robust bibliometrics by using multiverse analysis to shed light on the “hidden multiverse of bibliometric indicators”.

Our metrics sonification exemplifies the synergy between scientometrics and auditory design, promising to enrich both research analysis and communication. Metrics sonification balances aesthetic engagement with analytical clarity, ensuring sound remains a conduit for data-driven storytelling.

Discussion

4 Thoughts on "Guest Post – Metrics Sonification of Team Size Effects on Disruptive Research"

Thank you. I wonder to what extent your metrics can take into account the time lag between small teams (who tend to be less political) and large teams. The solitary Gregor Mendel discovered what we now refer to as “genes” in 1865 while his contemporaries gazed in awe at Darwin’s revolutionary “natural selection” comet that had appeared in the skies of evolutionary biology. Mendel's work lay largely unrecognized for 35 years! Check out some Nobel acceptance speeches and you will find more examples.

Thanks for the very interesting comment! In our study, we looked at the relationship between disruptivity and team size. Following your comment, it would actually be interesting to consider another variable: the time between discovery (publication) and recognition. Does research by small teams take longer to be recognized as disruptive research? If that is the case, it would be interesting to see why this is so. For example, are these scientists less networked than scientists in large teams?

As a former professional musician and current digital librarian with an interest in scientometrics, I love the idea of metrics sonification!!

I also have several specific and general questions: Did the publication seek out musical experts for the peer review? If not, why not? If so, how rigorous was the musical peer review? (e.g., Did you get any comments about composing in C major? Were there questions about whether you used synth presets or made your own patches? Did any reviewers propose xenharmonic scales for higher metrics granularity?) When considering interdisciplinary work that involves art-making, I wonder how often the art experts are deferred to in the publishing process.

Thanks for the comment! We have no information on how the peer review process for our blog post was organized. Basically, we are very interested in collaborations, and in the past we (LB) have also worked with musicians on metrics sonification.