
Kent and Phil have done a superb job so far covering various sessions at the Society for Scholarly Publishing meeting today, and I wanted to pitch in as well with some notes from a session they missed, one which asked the question, “How can publishers maximize the value and reach of their content using new technologies?”

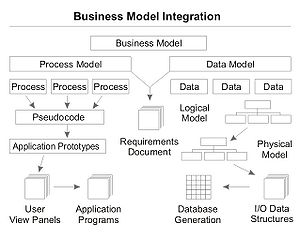

First up was Jim King, Director of Software Engineering for the American Chemical Society (ACS), who discussed their XML-based workflow. The goals were to eliminate all human interaction with the content beyond the intellectually driven work of the editors, to reduce time to publication, and, most importantly, to make the end product “media neutral,” meaning content that can be used and re-used in a wide variety of formats.
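To make “media neutral” a little more concrete, here is a minimal sketch of the idea, not a description of ACS’s actual workflow: one XML source rendered into two different outputs without touching the source. The element names (`article`, `abstract`, `section`, `para`) and the functions `to_html` and `to_plain_text` are hypothetical, chosen only for illustration; real publishing pipelines use much richer schemas and often dedicated tools such as XSLT.

```python
import xml.etree.ElementTree as ET
from html import escape

# Hypothetical, simplified article markup. Real publisher schemas are far richer.
ARTICLE_XML = """
<article>
  <title>Media-Neutral Publishing</title>
  <abstract>One source, many outputs.</abstract>
  <section>
    <heading>Introduction</heading>
    <para>Content is tagged by meaning, not by appearance.</para>
  </section>
</article>
"""


def to_html(root: ET.Element) -> str:
    """Render the article as a minimal HTML fragment (e.g. for the web)."""
    title = escape(root.findtext("title", ""))
    abstract = escape(root.findtext("abstract", ""))
    parts = [f"<h1>{title}</h1>", f'<p class="abstract">{abstract}</p>']
    for section in root.iter("section"):
        parts.append(f"<h2>{escape(section.findtext('heading', ''))}</h2>")
        for para in section.findall("para"):
            parts.append(f"<p>{escape(para.text or '')}</p>")
    return "\n".join(parts)


def to_plain_text(root: ET.Element) -> str:
    """Render the same article as plain text (e.g. for a simple mobile feed)."""
    lines = [root.findtext("title", "").upper(), "", root.findtext("abstract", ""), ""]
    for section in root.iter("section"):
        lines.append(section.findtext("heading", ""))
        lines.extend(para.text or "" for para in section.findall("para"))
    return "\n".join(lines)


# Parse once; both renderers read the same, unmodified source tree.
root = ET.fromstring(ARTICLE_XML.strip())
print(to_html(root))
print()
print(to_plain_text(root))
```

The design point is that the content is tagged by what it is (a title, an abstract, a paragraph) rather than by how it should look, so adding another output format means writing another renderer rather than re-keying the article.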

King suggested that this was more of a business initiative than a technology initiative, and that the toughest part was getting buy-in from the operational staff and changing their mindset to think online before print. He also discussed the ACS’s mobile platform, an iPhone app they developed internally in just a few months. The app sells for $2.99, and they’ve sold around 3,200 copies. Because their internal development was inexpensive, they don’t think of the app as a profit driver in its own right; it only needed to break even. Those sales numbers, though, may be daunting for publishers without the same in-house capabilities: in a later session, it was suggested that mobile apps cost $25,000 to $30,000 to develop.
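As a rough, back-of-the-envelope check on why those numbers might give pause, here is the arithmetic using only the figures quoted in the session. It assumes every copy sold at the full $2.99 and ignores any app store commission or taxes, so real net revenue would be lower:

```latex
\begin{aligned}
3{,}200 \times \$2.99 &= \$9{,}568 \quad \text{(approximate gross sales for ACS)} \\
\frac{\$25{,}000}{\$2.99} \approx 8{,}361
\quad &\text{and} \quad
\frac{\$30{,}000}{\$2.99} \approx 10{,}033 \quad \text{(copies needed to recoup outsourced development)}
\end{aligned}
```

In other words, a publisher paying an outside developer would need to sell roughly three times as many copies as ACS reported just to break even.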

Next up was Keith Wollman, VP of Web Development and Operations at Cell Press, who walked the audience through the latest iteration of their “article of the future” (discussed here as well). It was interesting to see their various prototypes and how different groups, from scientists to interface design experts, contributed their own expertise. Wollman did add a caveat that this sort of experimentation takes a large and talented staff. Given that so many readers of science journals simply download the PDF and print it out to read, it would have been interesting to hear whether the new design and presentation have changed user behavior, but Wollman said it was too early in their analysis to say much yet. Also of note: they have a built-in commenting function, but as in so many other journals, it is rarely used, averaging around eight comments per month across all Cell Press journals.

Ryan Jones of PubGet was the final speaker, describing their system, which he calls “a workflow tool” for use with a library’s existing subscriptions. Their user surveys rank fast delivery as the quality readers request most, and their system takes readers directly to the PDF version of articles, saving time and clicks. They believe this easier access will lead to more usage of a publisher’s content, which should in turn lead to more subscriptions. Again, it would have been nice to see some data here: PubGet presents the PDFs framed on its own website, taking traffic away from the publisher’s site and potentially reducing any ad revenue the publisher generates itself. PubGet sells its own ads next to your content and offers partners a share of that revenue, but it is unclear how that balances against the potential lost ad revenue, or whether increased subscriptions will make up the difference. It was also surprising to hear that their search engine is built around the metadata the papers provide and does not access the full text of the articles. That may make it tough for them to woo users away from other search tools that offer more detailed results.

Discussion

6 Thoughts on "From a Production Industry to a Technology Industry"

The problem (or challenge) with the article of the future, or any new kind of content that takes advantage of digital technology, is that it will be much harder to produce than simply stringing words together. Is this generally recognized, or are people just kidding themselves?

In this regard, I am curious whether the STM new-article community has any contact with the hypermedia people, who tend to focus on computer-based training (CBT). See http://www.salt.org. They have been at this for a long time and have very good authoring systems.

Still, it is hard to imagine asking researchers to submit, or even help build, hypermedia instead of articles, because it is so much more work. On the other hand, there are logical structures within and between articles that it would be useful to exploit.

King did mention that their goal is to get the material into XML as early in the process as possible, and that ideally authors would submit in that form, though that seems unlikely.

The Cell-style article includes a video abstract, which they’ve managed to get authors to create and submit themselves, along with a “visual abstract,” a diagram explaining the paper’s basic concept. For those diagrams, they’ve relied on an in-house art team. They committed to creating these for authors during the first year, hoping to stop after that. At this point, they’re still doing about 60% of those illustrations in-house.

There is no concept of the structure of thought or language here. XML without purpose.

I also think that full-text search makes abstracts obsolete, but that is just me. A different topic.

Bit harsh! You have to walk before you can run: starting with XML is about putting data into the right envelope so that you can easily transform or extend it later, not a shortcut to building some semantically aware future of publishing. 😉

But why believe that XML is the proper input format for the Article of the Future? For example, one of my candidates is the issue tree. Here is a sketch and a brief explanation:

http://www.bydesign.com/powervision/NOx_trees/NOx-08.pdf

http://www.bydesign.com/powervision/mathematics_philosophy_science/How_sentences_fit_together.doc

Issue trees do not use sentences or paragraphs, because they present the structure of the thinking, which has neither. I don’t see how XML fits in here (but then I don’t know that much about it).

My point is that we should be thinking about the science of articles, not the input format to something we do not yet understand.

Just to elaborate on the points made above, the issue tree (which I discovered in 1973) is a useful exercise in thinking about the article of the future. Every article has an underlying issue tree structure, so nothing is lost. Plus, the issue tree shows the logical structure of the thinking, which can be quite useful. In fact, I had my Carnegie Mellon students do issue trees instead of essays until I left academia in 1976. Many preferred diagramming their thinking to crafting sentences.

But how would publishers handle large-format graphs like this? And how would researchers be trained to produce them, which is far from trivial? Learning to do an issue tree is rather laborious, and so is doing one, much like learning and doing algebra.

The point is that if one is looking for a digital alternative to ordinary writing one is looking at a very big change indeed. Nor is this primarily a technological issue. As with any technological revolution, the big cost barrier is in the new use, not in the tool. The article of the future will be no different.

(Anyone interested in issue trees per se please contact me at dwojick@hughes.net)
