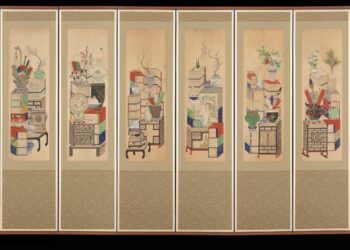

- Image by compscigrad via Flickr

I’m old enough to remember phototypesetting, manual paste-up with waxers and grid paper, bluelines, Linotypes and loops, lead, knives, chemicals, and the rest of the paraphernalia of old-fashioned publishing. It wasn’t long ago that publishers were still in the process of moving to pure digital workflows and getting used to not sending film anymore.

I’m old enough to remember three distinct record speeds (33 1/3, 45, and 78) and to own records at all three.

I also remember driving home with my first CD player during a snowstorm in the early 1980s. I was barely old enough to drive, and in my excitement I took an offramp too fast and my little VW Rabbit skittered down an embankment while somehow staying upright. I landed on the opposite onramp, which was blessedly empty. Nobody was around, and my trusty Rabbit and I recovered, leaving behind nothing more than strange slaloms in the snow and jangled nerves.

My first exposure to digital printing came in the mid-1980s, when my college newspaper bought a Linotronic capable of taking Macintosh files and producing finished pages. Within months, we had stopped driving pages to the printer and had bought a modem.

By this time, the digital revolution had been in full swing for years, but it was only just reaching the consumer music space and was truly new for most publishing professionals.

Efficiencies often hit production systems first. That’s where experts can implement them, where major financial benefits can accrue, and where it makes sense to start upstream, from both a budgetary standpoint (production houses can afford pricey new technologies) and a system standpoint.

For instance, the music industry had been producing digital music for a while by the time CDs and CD players became widely available. During this interlude, numerous LPs were released that were “digitally remastered” and sold at a premium price.

Type and pages went digital first in the production systems. Music went digital in the production house first.

Is the production house still a place that can predict trends?

The most sophisticated production house we encounter today (by my definition, the one still most cloaked in mystery, trade secrets, specialized skills, and “oooh” factor) isn’t Web development. Already, blogging platforms, RSS, and things that sound like a beverage at a bookstore coffee shop (JavaScript), an unfortunate miscue in a fashion magazine (cascading style sheets, or CSS), or a drug experience (Ruby on Rails) have moved Web site development into the mainstream. People can launch working, sophisticated social media or textual sites in minutes these days.

The production system that hasn’t been exposed by technology to standardization, normalization, and digitization is editorial knowledge generation.

People work in this every day. Looking in, it’s almost how I remember the typesetters and layout artists of old — noisy, dirty, detail-oriented work that required great concentration and a lot of judgment and craft. Nobody wanted to be anywhere near it, or its practitioners. They swore, grumbled, and got it done. It was best to leave them alone.

But some new trends in editorial knowledge generation have the potential to make this much different. It could be the next digital breakthrough — more important than social networking, search engines, and the lot.

It could be the end of private editorial knowledge generation.

When music, layout, or type went digital, the translation of various analog elements into digital created a unifying framework that yielded both explicit and implicit standards while delivering enough flexibility to let various practitioners do things their way, if they knew enough and saw fit.

For instance, in layout programs, you could work in picas, inches, centimeters, or points. If someone received the file and needed to work in picas, they would just change a setting, and that measure would be rendered. You could work in CMYK or RGB, and change the output to match the device. Standardization and flexibility.
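To make that concrete, here is a minimal sketch in Python, assuming a layout file stores each measure once in points and converts only for display. The names (`POINTS_PER_UNIT`, `to_points`, `render`) are hypothetical illustrations, not any real layout program’s API; the conversion factors are the usual desktop-publishing ones (12 points to the pica, 72 points to the inch).

```python
# Hypothetical sketch: keep one canonical unit (points) in the file,
# and let each person view the same measure in whatever unit they prefer.
POINTS_PER_UNIT = {
    "points": 1.0,
    "picas": 12.0,                # 1 pica = 12 points
    "inches": 72.0,               # 1 inch = 72 points (desktop-publishing convention)
    "centimeters": 72.0 / 2.54,   # 1 inch = 2.54 centimeters
}


def to_points(value, unit):
    """Convert a measure entered in `unit` to the stored canonical value."""
    return value * POINTS_PER_UNIT[unit]


def render(points, unit):
    """Convert the stored value back to the unit the current user works in."""
    return points / POINTS_PER_UNIT[unit]


# One designer sets a column width in inches...
column_width = to_points(3.5, "inches")

# ...and the next person simply changes a setting to see it in picas.
print(render(column_width, "picas"))
```

The stored value never changes; only the rendering does, which is the standardization-plus-flexibility point in miniature.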

The same thing could happen in the realm of editorial knowledge generation, if semantic technologies are implemented properly. Open standards, vendor sophistication, user sophistication, and enough of a track record of what works and what doesn’t — it all has the potential to combine into what’s pretty close to a toolkit of interfaces, interaction models, user expectations, and core functions.

Blog platforms represent one of the best stabs at making editorial knowledge generation approachable to the masses. Tools like Zemanta, which introduce suggested links to terms, articles, images, and tags, have the kind of smarts and flexibility to bring a lot of disparate content together in surprisingly useful ways. More sophisticated taxonomic approaches are being rolled out all the time — some work well, some are proprietary, some don’t quite cut it yet.

In my mind, the biggest impediments to seeing editorial knowledge generation transform into “human judgment supplemented by well-represented domain expertise, algorithmic expansion, and pan-domain harmonization” are:

- Continued adherence to the one editor:one article framework, which creates levels of disjointed, silo’d information — editors should have to edit inside a common system

- Adherence to the article framework, insofar as print is still viewed as the ultimate destination for information instead of a byproduct of a broader information solution — this limits thinking, layers of information accretion, and functional obligations

- Unfamiliarity with emerging toolsets, including a lack of awareness of them, a lack of chances to see and use them, and inexperience among senior editors — but once they use them, they see how cool these tools are, and how much they help

- An information ecosystem that seems to harmonize information (e.g., Google), slowing the increasing pain of specialists seeking information — until we become more demanding of the information around us, or someone models something better, the Googlepool will continue to suffice

This is a rambling thought, I’ll admit, but looking across the realms of what we do, everything seems to be assisted, expanded, abetted, and improved by living fully among digital toolsets. Editorial knowledge generation (editing, writing, contextualizing, relating, prioritizing, targeting, and promoting) remains locked up in silent decisions. Those decisions aren’t pushed out into the digital world through digital toolsets, whether ones that are currently available or ones being built.

Not yet, anyhow. Someday, we may see articles that reflect all this and more. And when the production system we currently know as the editorial office becomes fully digital, what a different information world we’ll live in then.

Discussion

3 Thoughts on "Look to Production to Predict the Next Digital Breakthrough — Editorial Offices"

Can you clarify what you mean by “editorial knowledge generation”? Do you mean op-ed pieces, or something broader, like writing articles per se?

In either case it is not clear that knowledge is being generated, merely expressed. Presumably we know what we know before we write it. But it is then generated in the reader, if they accept it.

Yes, as I said, this piece was trying to capture a will-o’-wisp of thought, so it’s not as solid as some other things. My intention was to capture the notion that editorial offices parse through content submissions and try to bring forward those things they think add to the collective knowledge base. This action generates — in the sense of transforming and bringing forth — knowledge. So, it’s basically editorial office functions. Leave it to me to use a $5 phrase for a $0.50 concept.

On the contrary, Kent, this is a very important concept and you are right on the money. You are basically talking about how we understand science and how digital tools can help us do that.

This is an emerging new field (where I play). The focus is on semantic analysis, mapping and visualization of science. See for example my little essay on the issue tree structure of science: http://www.osti.gov/ostiblog/home/entry/sharing_results_is_the_engine.

A good editorial example is the Perspectives section found in every issue of Science. The author of a Perspective takes a research article in that issue, which only experts can understand, and explains in less technical terms what it means in the context of the subfield in question. This is certainly new knowledge because the article just appeared. It is also very different from what the original article presents.

The basic issue is how to understand what we understand, but human understanding is a very poorly understood thing. That is why it is so hard to talk about. There is a lot of work going on here, from basic research to visualization and tools development, but we don’t even have our own journal yet. See for example: http://scimaps.org/.

My vision is for a 3D visualization of networked galaxies of scientific understanding that we fly through, as in a starship. With a million new papers every year it is a big universe. It would also be an interesting publication.
