Editor’s Note: Today’s post is by Christos Petrou, founder and Chief Analyst at Scholarly Intelligence. Christos is a former analyst of the Web of Science Group at Clarivate Analytics and the Open Access portfolio at Springer Nature. A geneticist by training, he previously worked in agriculture and as a consultant for A.T. Kearney, and he holds an MBA from INSEAD.

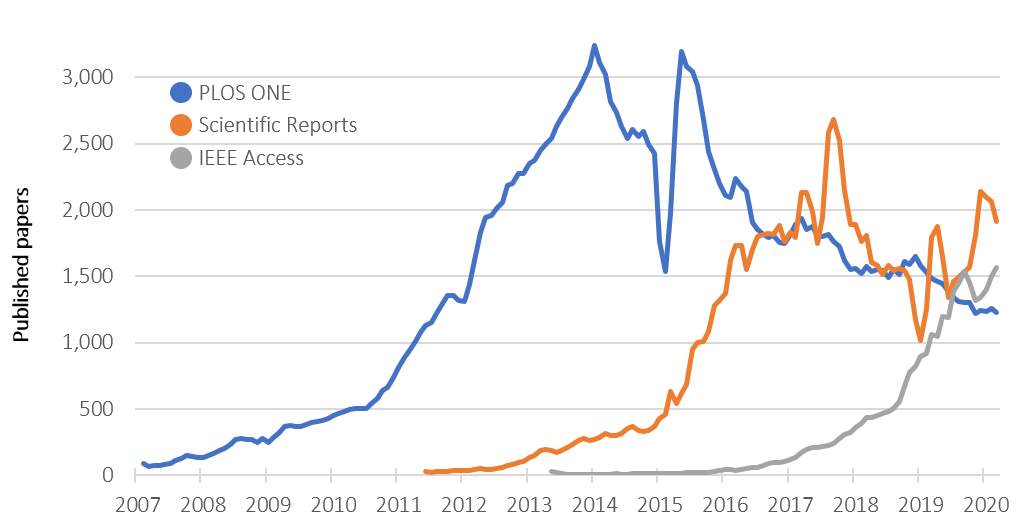

Megajournals have been at the heart of the Open Access (OA) publishing model, spearheading its growth over the last 15 years. Titles such as PLOS ONE and Scientific Reports have been enormously influential and commercially successful. Nonetheless, the commercial success of megajournals is not guaranteed, and their long-term performance has at times been unreliable, introducing uncertainty into an industry that has been particularly attractive to investors for its ability to generate low but sustainable growth.

Megajournals are defined as journals that are ‘designed to be much larger than a traditional journal by exercising low selectivity among accepted articles’, meaning that they do not reject articles for lack of novelty or significance as long as they are original and scientifically sound. In addition, they accept articles from more than one discipline, and they are Open Access, typically charging article processing charges (APCs).

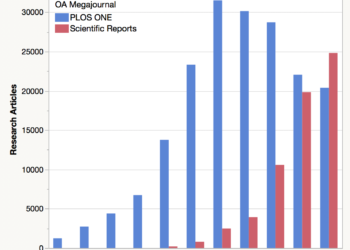

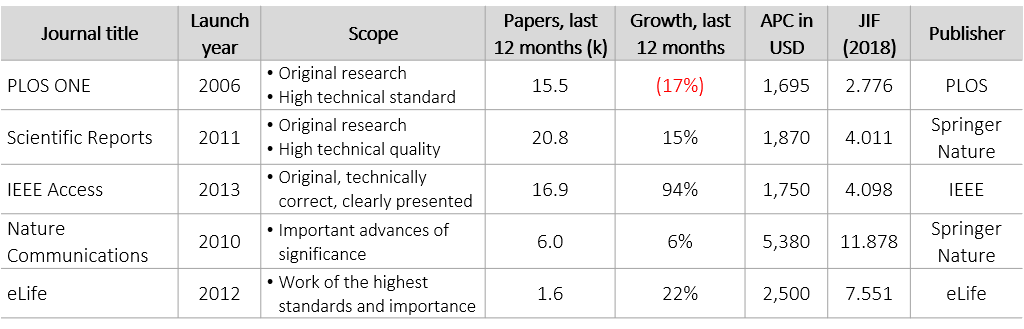

Three journals that meet the definition and have also been commercially successful are PLOS ONE, Scientific Reports, and IEEE Access. Their profiles are shown in Table 1 and Figure 1, alongside the profiles of two selective, large OA journals (Nature Communications and eLife) that have been publishing more impactful research (at least in terms of citation metrics) and serve as a point of contrast in this analysis. Together, the three megajournals publish about 53k papers per annum, accounting for more than 2% of global journal output.

As shown in Figure 1, PLOS ONE and Scientific Reports have similar profiles, with about ⅔ of their content in Biological Sciences and Medical & Health Sciences, so either journal can act as a good substitute for the other. By contrast, they have little overlap, and hence little competition, with IEEE Access, which focuses on engineering-related disciplines.

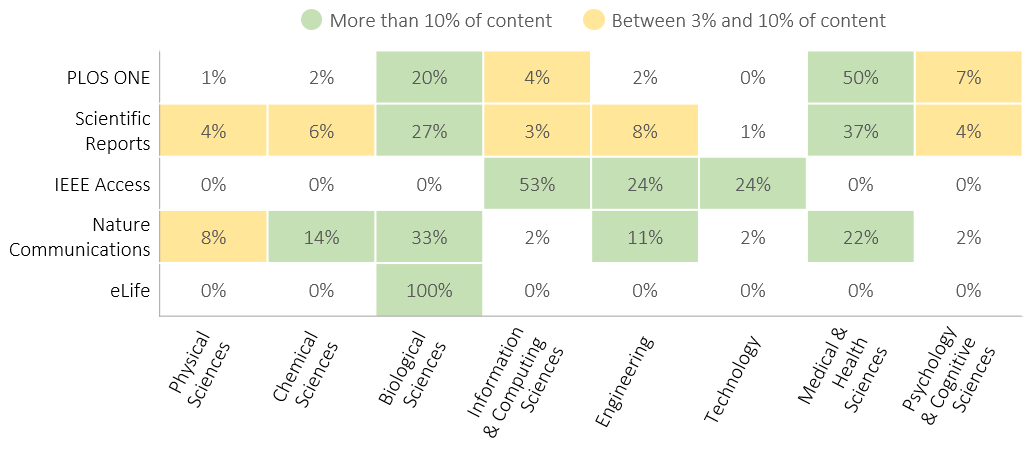

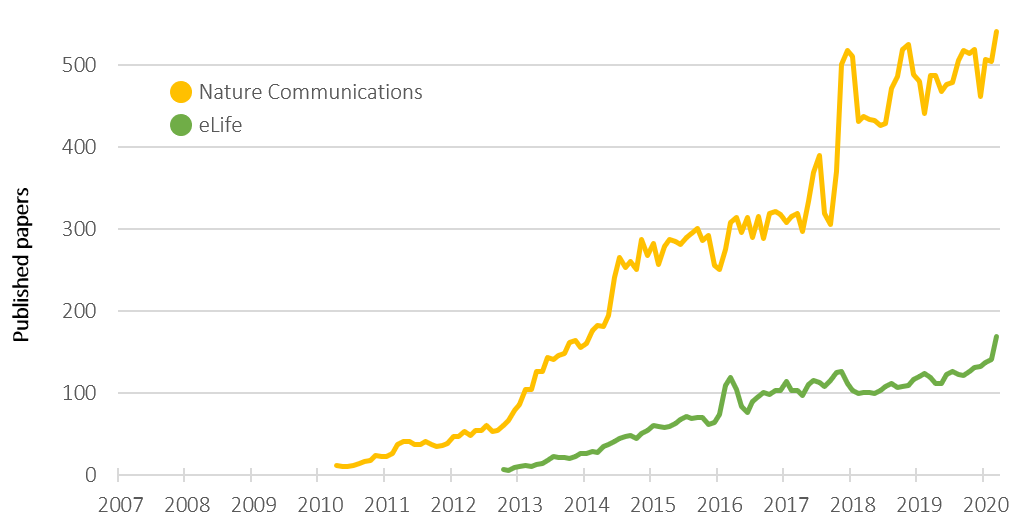

Figures 2 and 3 show the monthly publication output of each journal since launch (Figure 2 for the three megajournals and Figure 3 for the selective journals). Figure 2 indicates that megajournals may face turbulence and even decline after a period of rapid growth. By contrast, the two selective journals have grown less rapidly but steadily, indicating that unpredictable commercial performance is not a universal property of large OA journals.

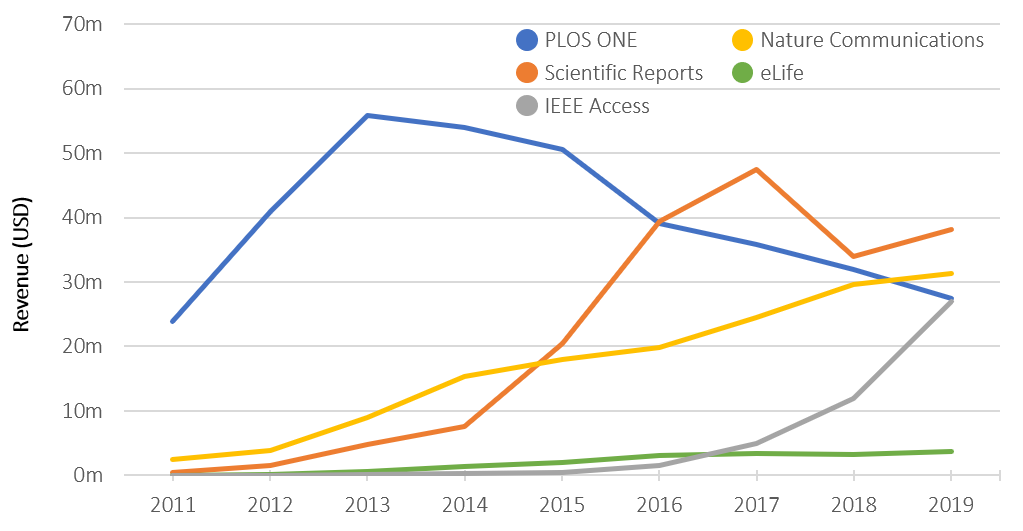

Figure 4 shows annual APC revenue by journal, applying the current APCs and no discounts. Note that Nature Communications did not launch as Open Access, but it flipped to this model starting in 2014; APC revenue calculations prior to flipping are merely theoretical.
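The revenue estimate described here is a simple product of annual article volume and list APC. The sketch below illustrates that calculation under stated assumptions: the helper names, APC value, and article counts are hypothetical placeholders, not the figures behind Figure 4, and years before an OA flip (as with Nature Communications before 2014) are flagged as theoretical.

```python
from dataclasses import dataclass


@dataclass
class JournalYear:
    year: int
    articles: int


def apc_revenue(history, current_apc, oa_since=None):
    """Estimate annual APC revenue as articles x current list APC, no discounts.

    Years before `oa_since` (the year a journal flipped to OA) are marked
    as theoretical, since no APCs were actually charged in those years.
    """
    rows = []
    for jy in history:
        revenue = jy.articles * current_apc
        theoretical = oa_since is not None and jy.year < oa_since
        rows.append((jy.year, revenue, theoretical))
    return rows


# Hypothetical inputs, for illustration only.
history = [JournalYear(2013, 1_000), JournalYear(2014, 1_500)]
for year, revenue, theoretical in apc_revenue(history, current_apc=5_000, oa_since=2014):
    label = " (theoretical)" if theoretical else ""
    print(f"{year}: ${revenue:,}{label}")
```

Because the current APC is applied to every past year, the resulting series tracks article volume rather than actual historical revenue.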

The roller coaster performance of commercially successful megajournals

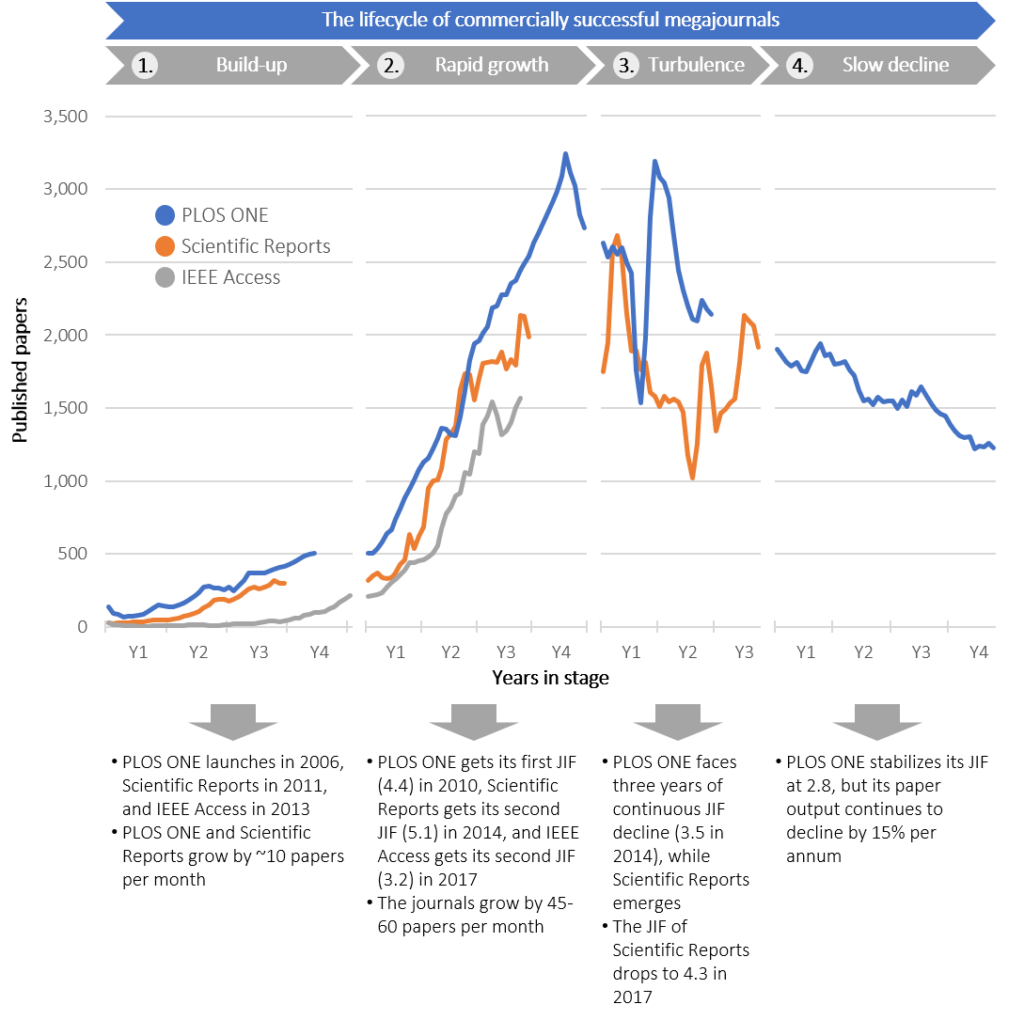

Plotting journals in chronological order, as in Figure 2, is not the most suitable way to compare performance and identify growth patterns. Instead, journals can be plotted from common starting points, such as the launch date or the date they got a high JIF (Journal Impact Factor). This is the approach taken in Figure 5, which shows four distinct growth stages for megajournals: build-up, rapid growth, turbulence, and slow decline. The third stage, turbulence, can be observed for PLOS ONE and Scientific Reports, and the last stage, slow decline, has only been reached by PLOS ONE.
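Aligning journals on a common starting point amounts to replacing calendar dates with an offset from an anchor event. A minimal sketch, assuming monthly article counts keyed by the first day of each month; the function names and data are hypothetical, and the post does not describe its exact procedure.

```python
from datetime import date


def months_between(start: date, end: date) -> int:
    """Whole calendar months from `start` to `end` (negative if `end` is earlier)."""
    return (end.year - start.year) * 12 + (end.month - start.month)


def align_series(monthly_output, anchor):
    """Re-index a {month_start_date: article_count} series so that month 0 is
    the anchor event (e.g. the launch date or the first high-JIF release).
    Months before the anchor get negative offsets."""
    return {months_between(anchor, month): count
            for month, count in sorted(monthly_output.items())}


# Hypothetical monthly counts and anchor date, for illustration only.
series = {date(2016, 5, 1): 100, date(2016, 6, 1): 120, date(2016, 7, 1): 150}
aligned = align_series(series, anchor=date(2016, 6, 1))
print(aligned)  # {-1: 100, 0: 120, 1: 150}
```

Once every journal is re-indexed this way, their series can be overlaid on a shared "months since anchor" axis.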

The first stage, build-up, appears to last 3-4 years. This is the period before the journals get their first noteworthy JIF: 4.4 for PLOS ONE (its first JIF; launched in 2006), 5.1 for Scientific Reports (its second JIF; launched in 2011), and 3.2 for IEEE Access (its second JIF; launched in 2013). During this period, the journals grow well but unremarkably; the monthly output of PLOS ONE and Scientific Reports grew by about 10 articles each month.

The JIF release triggers the second stage, rapid growth. Journals make their mark during this period: they almost quadruple in size, with monthly output growing by 45-60 articles each month, and they temporarily claim the title of the largest academic journal in the world. At its peak, PLOS ONE published more than 30k articles per annum, almost 2% of global journal output. IEEE Access is still in the stage of rapid growth, but PLOS ONE and Scientific Reports have moved past it into the next stage.

The third stage, turbulence, is characterized by uneven performance that makes it hard to infer how well the journals are doing. It began for PLOS ONE after three years of continuous JIF decline from 4.4 to 3.5 in 2013, which coincided with the rise of Scientific Reports. It began for Scientific Reports in summer 2017, when its JIF dropped steeply to 4.3. The subsequent 2-3 years were turbulent for both journals. PLOS ONE declined by about 18% in that period and Scientific Reports by about 7%, but neither decline was linear. Article output was erratic, with monthly peaks of ~3,600 articles for PLOS ONE and ~3,300 for Scientific Reports, and monthly lows of ~700 articles for PLOS ONE and ~500 for Scientific Reports. This may have been the result of operational challenges and ensuing bottlenecks along the publication pipeline.

The fourth stage, slow decline, is where PLOS ONE is right now. PLOS ONE has lost about 15% of its annual article output each year over the last four years, despite stabilizing its JIF at 2.8. Even with the drop in volume, PLOS ONE remains a tremendously innovative and commercially successful journal.
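A decline of about 15% per year compounds: after four such years a journal is at roughly half its starting size. A quick check of that arithmetic, assuming a constant 15% annual decline (the actual year-on-year changes were not uniform); the helper name is hypothetical.

```python
def remaining_share(annual_decline: float, years: int) -> float:
    """Fraction of the starting annual volume left after `years`
    of a constant proportional decline."""
    return (1 - annual_decline) ** years


share = remaining_share(0.15, 4)
print(f"{share:.2f}")  # 0.52
```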

Between a rock and a hard place

Most megajournals will not become as commercially successful as PLOS ONE or Scientific Reports. For example, Elsevier’s Heliyon (2015 launch), Springer Nature’s now-defunct SpringerPlus (2012 launch), and PeerJ (2013 launch) have grown to about 2,000 papers per annum but have not achieved the citability or brand recognition that would make them truly competitive and might propel them into the stage of rapid growth. And even when a journal does reach the stage of rapid growth, slow decline is likely to follow eventually.

If they can achieve a high JIF, megajournals are likely to attract and publish scientifically sound but less citable content by authors who seek to benefit from association with the high JIF. This will lead to JIF decline, which makes the journal less attractive to authors chasing the JIF, and hence to fewer articles and a drop in revenue. If the journals opt for stricter editorial criteria (moving from ‘original’ works to ‘important’ works), they will have to reject a higher volume of submissions, in addition to introducing other changes (e.g., pricing, operations, marketing) that can be confusing for the market. This will lead to fewer published articles and less revenue. Different path, same result.

Of course, there may be a middle way: somehow compensating for the loss of citability through very aggressive marketing. Highly capable marketing teams or specialized marketing tools such as TrendMD might make the difference. Is it worthwhile for publishers to boost marketing, thus giving up margin per article, in an effort to maintain high volume and overall profit/surplus?

What comes next

Will IEEE Access continue growing rapidly, or will it eventually face challenges? Will Scientific Reports follow the path of PLOS ONE, or will it defy the odds and maintain growth? It comes down to the citability of the content the journals have published in the last 2-3 years.

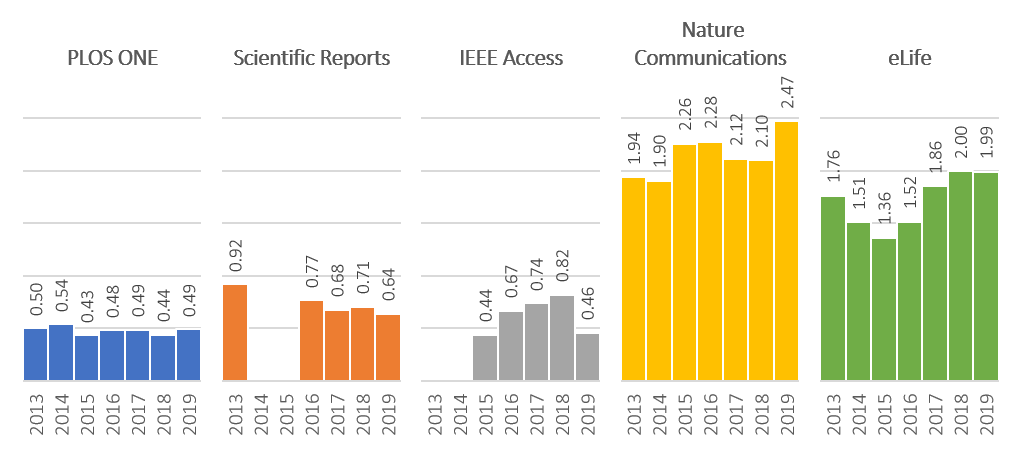

It is hard to accurately predict the future citability of new content. Article access, downloads, and shares can be used as proxies. Another proxy can be the Immediacy Index, which captures citations per article in the same year that the articles are published. For example, it shows that PLOS ONE articles published in 2017 had 0.49 citations on average in the same year, per Dimensions data (Figure 6).
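The Immediacy Index for a given year is the number of citations received in that year by articles published in that year, divided by the number of those articles. A minimal sketch of the calculation; the function name and per-article citation counts are hypothetical, and the post's values come from Dimensions data, which is not queried here.

```python
def immediacy_index(same_year_citations):
    """Immediacy Index for one publication year.

    `same_year_citations` holds, for each article published in year Y,
    the number of citations it received during year Y.
    """
    if not same_year_citations:
        raise ValueError("no articles published in this year")
    return sum(same_year_citations) / len(same_year_citations)


# Hypothetical: four articles receiving 0, 1, 0 and 1 citations in their publication year.
print(immediacy_index([0, 1, 0, 1]))  # 0.5
```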

This approach trades accuracy for immediacy and ease. The Immediacy Index (weighted by annual article volume) correctly predicts the year-on-year direction of the JIF (increase or decline) in 10 of 13 cases across the five journals. It also correctly captures the citability differences between journals. For example, it suggests that Nature Communications is 4x more citable than PLOS ONE, which reflects the actual JIF difference between the two journals. This level of accuracy is not good enough for many applications, but it can be cautiously applied here.
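The weighting and the directional test can be sketched as follows. This assumes that "weighted by annual article volume" means a volume-weighted mean of the annual Immediacy Indexes (equivalently, total same-year citations over total articles), and that a correct prediction means the weighted index moves in the same direction as the corresponding JIF. The function names and numbers are hypothetical, and the post does not spell out its exact procedure.

```python
def weighted_immediacy(indexes, volumes):
    """Volume-weighted mean of annual Immediacy Indexes.

    Equivalent to total same-year citations divided by total articles
    across the years in the window.
    """
    total = sum(volumes)
    if total == 0:
        raise ValueError("window contains no articles")
    return sum(i * n for i, n in zip(indexes, volumes)) / total


def direction_hit_rate(predicted, actual):
    """Share of year-on-year changes where the predicted direction matches
    the actual JIF direction. A non-increase counts as a decline."""
    def rising(values):
        return [b > a for a, b in zip(values, values[1:])]

    pairs = list(zip(rising(predicted), rising(actual)))
    return sum(p == a for p, a in pairs) / len(pairs)


# Hypothetical values, for illustration only.
print(weighted_immediacy([0.5, 0.4], [1_000, 3_000]))  # 0.425
print(direction_hit_rate([0.50, 0.45, 0.48, 0.40], [3.0, 2.9, 3.1, 3.2]))  # 2 of 3 directions match
print(f"{10 / 13:.0%}")  # the post's 10 of 13 cases: 77%
```

Weighting by volume stops a small year from swinging the two-year figure as much as a large year, which mirrors how the JIF pools citable items across its two-year window.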

The weighted Immediacy Index of two consecutive years corresponds to the JIF that will be released in the summer two years after the second of those years. For example, the performance of Nature Communications in 2015 and 2016 would feed into the JIF that is released in 2018. According to the results in Figure 6, eLife is likely to see a JIF increase this summer, following four years of decline. Nature Communications is likely to see a small drop in 2020 and then a larger increase in summer 2021.
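The timing rule follows from how the two-year JIF is built: the JIF for year J counts citations made in J to items published in J-1 and J-2, and it is released in the summer of J+1. A small helper makes the mapping explicit; the function names are illustrative, not part of any tool used in the post.

```python
def jif_release_year(first_pub_year: int) -> int:
    """Summer release year of the JIF covering articles published in
    `first_pub_year` and `first_pub_year + 1`.

    JIF year J counts citations made in J to items from J-2 and J-1,
    and is released in the summer of J+1.
    """
    jif_year = first_pub_year + 2
    return jif_year + 1


def pub_years_for_release(release_year: int) -> tuple[int, int]:
    """Inverse mapping: the two publication years feeding a given summer release."""
    return release_year - 3, release_year - 2


print(jif_release_year(2015))       # 2018, as in the Nature Communications example
print(pub_years_for_release(2020))  # (2017, 2018)
```

The same mapping shows the lag behind the exploitation window discussed at the end of the post: `pub_years_for_release(2023)` returns `(2020, 2021)`, so content published after a summer 2020 JIF release mostly surfaces in the JIF released in summer 2023.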

Regarding the megajournals, the data suggests some loss of citability for Scientific Reports that may eventually be reflected in its JIF. IEEE Access is likely to increase its JIF further in summer 2020, but its JIF is then likely to decline in 2021, possibly marking the start of the ‘turbulence’ and ‘decline’ stages. Whether and when this happens also depends on the availability of journals competing in the same disciplines and JIF range. While the decline of PLOS ONE was accelerated by the rise of Scientific Reports, there may be no similar substitutes for IEEE Access.

A caveat for this analysis is that the 2019 network of citations may be incomplete (or less complete than in previous years). That could explain the loss of citability that is implied for IEEE Access. However, the 2019 data looks in line with expectations for all other journals, making it likely that it is accurate for IEEE Access too.

More broadly, predicting future journal performance is especially challenging at this time. COVID-19 is likely to affect research output in the medium term, as well as researcher budgets for APCs, thus penalizing APC-funded Open Access journals. The same goes for the new Chinese policy for research assessment. Journals with high volumes of authors from China may lose content irrespective of their JIF performance.

Implications for publishers, investors, and research evaluation

PLOS ONE and Scientific Reports have been very successful journals. Any publisher would be thankful to have them in its portfolio. Nonetheless, their unstable performance should also serve as a warning. In the year of its steepest decline, each journal shrank by about 7,000 articles, which can translate to a loss of more than $10m year-on-year. That would reflect poorly on the balance sheet of any publisher.
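The $10m figure is easy to sanity-check: dividing it by the 7,000-article drop gives the average APC at which the two numbers line up. A quick calculation using only the figures in the paragraph above; any average APC above this value puts the loss above $10m.

```python
articles_lost = 7_000
revenue_lost = 10_000_000  # USD, the lower bound quoted above

# Average APC per lost article implied by the two figures.
implied_apc = revenue_lost / articles_lost
print(f"${implied_apc:,.0f}")  # $1,429
```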

The takeaways for publishers are simple:

- Do not get carried away; the revenue of megajournals can be inconsistent, so avoid overselling their success to investors and avoid reckless investments

- Invest heavily in marketing; if the journal is shedding 10% of citability every year, marketing should try to plug this hole as well as possible

- Build around their success; launch affiliated, higher-impact journals that will absorb some of the eventual content loss

- Do not put all your eggs in one basket; pursue a less risky, broad portfolio approach rather than a smaller, focused megajournal approach

Investors in the space of scholarly communications should also evaluate megajournal performance as part of their due diligence. They need to look for large journals that grow rapidly, understand what drives their growth, assess whether their trajectory may be disrupted, and assess the risk for the broader portfolio.

Finally, the erratic behavior of megajournals is not healthy for research or for any of the stakeholders involved in it, including publishers. It is driven by a combination of (a) research evaluations and incentives that are partly reliant on JIFs and (b) the lag between journal performance and the JIF release. If a journal gets a high JIF in summer 2020, this can be ‘exploited’ by the publisher and/or authors for almost three years until any change in the performance (citability) of the journal becomes apparent in the JIF released in summer 2023. The current system of evaluations leaves a window for questionable behavior by publishers and researchers.

Discussion

18 Thoughts on "Guest Post – The Megajournal Lifecycle"

Great article. What impact do competing OA journals in the same field have, e.g., PLOS vs Nature? Are these two journals feeding at the same trough? Additionally, discipline-specific OA journals are now being published; are these publishing articles with high JIFs and thus accounting for the decline in megajournal JIFs? In short, is the multiplicity of OA journals impacting megajournals?

Hi Harvey, I think this is a solid point. There could be flight of high impact articles toward more selective OA journals, thus driving down the citability of megajournals. I am afraid that I did not put much thought into it when writing the article. Wondering how this could be best assessed (other than surveying authors). Also, which titles do you have in mind, if any specific? Some selective OA journals have been out there for a while.

Interesting post, Christos; thanks for sharing these insights. One additional point: have you looked at the geographical effect on the decline in articles published in mega-journals? I believe China has some directives and interesting trends around mega-journals. From what I can see (via Dimensions), IEEE Access has sky-rocketing submissions from China, whereas the other mega-journals seem to be on the decline. From my anecdotal insights, I don’t think this is all down to changes in JIF, but it could account for some of the points you note above. Certainly worth a closer look to build on this excellent study and post.

Hello Adrian, thank you for reading. In my experience, Chinese authors are rather JIF-sensitive, given that they are (used to be?) incentivised to publish in high-JIF journals. I do not have access to country data at the moment, but would be curious to see the proportion of China-based authors in IEEE Access before and after it got its high JIF.

Annual number of publications in IEEE Access according to Scopus, with the share of annual publications having at least one Chinese address:

| Year | Publications | With a Chinese address |
|---|---|---|
| 2011 | 81 | 1% |
| 2012 | 72 | 13% |
| 2013 | 64 | 6% |
| 2014 | 194 | 11% |
| 2015 | 442 | 17% |
| 2016 | 825 | 47% |
| 2017 | 2892 | 55% |
| 2018 | 6127 | 64% |
| 2019 | 13894 | 67% |
| 2020 | 5510 | 65% |

Gabor, is there a typo in either the 2019 or the 2020 number?

I think the numbers look right (thanks for sharing, Gabor!). 2020 is YTD, hence the smaller number; it is up 46% YoY. It seems that submissions from China skyrocketed already in the first year that the journal got its JIF (summer 2016) and have kept going up since. Still, I am surprised that the share is that high. It shows a lot about the responsiveness of authors to incentives and the lack of alternatives.

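I don’t think so. Here are the corresponding numbers from Web of Science:

| Year | Publications | With a Chinese address | Share |
|---|---|---|---|
| 2017 | 2341 | 1325 | 57% |
| 2018 | 6586 | 4247 | 64% |
| 2019 | 15213 | 10481 | 69% |
| 2020 | 4136 | 2729 | 66% |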

There are some differences between the databases (lag of data registration, different publication year definitions, etc.), but the trends are very similar.

Here is the Scopus data for the other 5 journals, if someone is interested:

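PLOS ONE

| Year | Publications | With a Chinese address |
|---|---|---|
| 2006 | 137 | 3% |
| 2007 | 1235 | 3% |
| 2008 | 2717 | 5% |
| 2009 | 4390 | 6% |
| 2010 | 6751 | 7% |
| 2011 | 13799 | 12% |
| 2012 | 23470 | 15% |
| 2013 | 32055 | 18% |
| 2014 | 31983 | 19% |
| 2015 | 29825 | 18% |
| 2016 | 22994 | 16% |
| 2017 | 21185 | 15% |
| 2018 | 18859 | 10% |
| 2019 | 16318 | 9% |
| 2020 | 5020 | 10% |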

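Scientific Reports

| Year | Publications | With a Chinese address |
|---|---|---|
| 2011 | 208 | 9% |
| 2012 | 810 | 19% |
| 2013 | 2566 | 29% |
| 2014 | 3995 | 38% |
| 2015 | 10959 | 39% |
| 2016 | 21049 | 36% |
| 2017 | 25866 | 29% |
| 2018 | 18670 | 20% |
| 2019 | 20423 | 15% |
| 2020 | 7254 | 15% |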

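Nature Communications

| Year | Publications | With a Chinese address |
|---|---|---|
| 2011 | 452 | 10% |
| 2012 | 725 | 11% |
| 2013 | 1677 | 14% |
| 2014 | 2840 | 16% |
| 2015 | 3352 | 16% |
| 2016 | 3676 | 16% |
| 2017 | 4695 | 19% |
| 2018 | 5661 | 21% |
| 2019 | 5835 | 25% |
| 2020 | 2098 | 27% |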

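eLife

| Year | Publications | With a Chinese address |
|---|---|---|
| 2012 | 45 | 4% |
| 2013 | 328 | 4% |
| 2014 | 673 | 3% |
| 2015 | 1042 | 5% |
| 2016 | 1244 | 6% |
| 2017 | 1512 | 6% |
| 2018 | 1411 | 6% |
| 2019 | 1675 | 6% |
| 2020 | 539 | 7% |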

Other 4 journals… The fifth was IEEE Access

Gabor, thanks so much for sharing these figures. I plotted them against the JIF and wrote a short note on my personal blog on LinkedIn (https://www.linkedin.com/pulse/megajournals-jifs-chinese-authors-christos-petrou). I think it reflects that Chinese authors have been over-incentivised to publish in high-JIF journals. They have been quick to publish in the three megajournals when their JIFs go up, and they have been equally quick to move elsewhere when their JIFs decline.

Chris, can you really deduce a generalized, predetermined lifecycle of OA journals (Build-up, Rapid growth, Turbulence, Slow decline) from three titles, one of which has not made it past step one, and only one of which has made it to step 4? What we learn from successful OA models may have little to do with size and everything to do with specific editorial strategies, competition, business models, economics, etc.

Hi Phil, I do think that this trajectory for megajournals is likely, but I don’t think it is inevitable. I also think that the combination of their size and their uncertain performance should matter to publishers who might otherwise be tempted to put all their eggs in one rather shaky basket.

Btw, would you have in mind any sound science, OA, smaller journals (500-1000 articles per annum) that got a sky-high JIF and maintained it and their performance for several years? Might be worth looking into that next.

Hi David, that’s spot on. The three not-so-successful examples in the article (Heliyon, PeerJ, SpringerPlus) only scratch the surface of megajournals that never really got ‘mega’. I would speculate that it takes a very strong brand and some degree of self-selection by authors to get a high JIF and kick off the stage of rapid growth.

Thanks so much, Christos, for this really interesting analysis. Another reminder that the business of scholarly publishing continues to look more and more like commercial publishing, especially with respect to the importance of marketing and branding in the ultimate sustainability of the journal. A small comment: I’m surprised that you did not mention how this long-term trend toward slow decline correlates with the rise of preprint servers. Especially in the biological sciences, the use of preprints for rapid dissemination has at least somewhat neutralized a key competitive advantage that was initially enjoyed by PLOS ONE and Scientific Reports.

Hi Bert, thank you for reading and commenting. Good point about preprints: they can compete for the author segment that seeks rapid dissemination. I am not sure, though, whether PLOS ONE and Scientific Reports were competitively fast in the first place. I don’t know about their historical performance, but I pulled data for 2019 and the processing did not look exceptionally fast.

This piece is definitely fascinating. Based on my necessary condition analyses, I have produced this take on it: https://openresearch.community/users/342784-pablo-markin/posts/the-product-lifecycle-dynamics-of-mega-journal-performance-indicators-in-the-context-of-supply-and-demand-forces.