We’re all familiar with the economic scarcity principle, in which the limited supply of a good, coupled with high demand, upsets the usual balance of supply and demand. Think of the luxury Hermès Birkin bag, the almost mythical and obsessive quest for which is wittily described in Wednesday Martin’s anthropological study of the rich wives of the Upper East Side, Primates of Park Avenue. Its scarcity made Martin want one more than ever – to own a Birkin is to be a member of an exclusive club: “Once you’re in, it makes you feel worthy. It gives you identity.”

For those who care about the future of science, it should be of central concern that a not entirely dissimilar set of incentives and rewards drives decisions about where and how to publish. In the early days of digital, we were led to believe that the economics of scarcity would be repealed by the removal of supply constraints in the digital world. But that hasn’t happened: behavior and reward are still driven by demand for prestige, which in turn has the potential to undermine good science. This stranglehold is clearly taking much longer to break than many had hoped, but alongside initiatives such as DORA and the TOP guidelines, megajournals represent a viable approach to change.

The first megajournal – PLOS ONE – was founded on the principle that properly executed science deserves publication and that work should be judged on the value of its own contribution, rather than the title of the journal in which it is published. (Full disclosure: I am the CEO of PLOS, publisher of PLOS ONE.) For the past decade, new megajournals have continued to launch – some more and some less successfully. Yet they have clearly had a relatively small impact on the grip of elite journals and, at worst, are derided as dumping grounds for mediocre research.

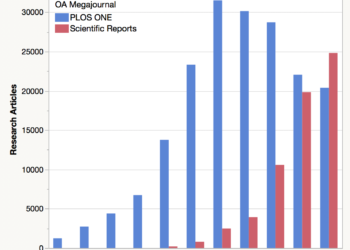

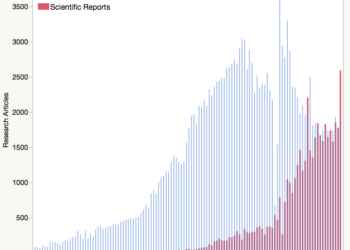

Does this mean that we have hit “peak megajournal” and that the era of the megajournal will be short-lived? First and foremost, the data contradicts this. The number of megajournals continues to grow (with at least 20 now publishing), and their output continues to grow at a rapid rate, as the most recently available data shows even if we add together only the publications of PLOS ONE and Scientific Reports.

But rather than spending time responding to critiques of the megajournal, I want to focus on the contributions that megajournals are making to improving research communication. And I want to move beyond the issue of access, although the open access (OA) model of course underpins the mission to more easily share discoveries with both peers and the public.

In spite of the incredible progress of modern science across many fields, there are core concerns that an “open” agenda seeks to address. In discussing his recent book The Seven Deadly Sins of Psychology: A Manifesto for Reforming the Culture of Scientific Practice, Chris Chambers describes his own science as one that “has now become a contest for short-term prestige and career status, corrupted by biased research practices, bad incentives and occasionally even fraud.” While it may seem pessimistic and even extreme, this growing unease across fields is being taken seriously by key players – especially funders – due to concerns that the current scientific culture is fostering bad practices. While not a panacea, megajournals have demonstrated an ability to make a major contribution to fostering open practices and strong, reproducible science:

- The value of a peer review process that doesn’t rely on subjective assessments of significance. All too frequently dismissed as “peer review lite”, the soundness-only methodology for peer review differs in scope rather than rigor. The technical soundness review itself is rigorous and, for most megajournals, involves a number of internal and external checks and assessments. Frequently coupled with forms of open review, it helps to disperse and make transparent assessments about what is significant to the scientific community as a whole, both before and after publication. While there are heated debates about a perceived move from expert gatekeepers to the wisdom of the crowd, there’s also a strong case to be made for democratizing assessment and challenging reigning disciplinary power structures.

- The importance of outlets for negative studies and replications. The most important part of a study for gaining acceptance into a high-impact journal is all too frequently the results – the one part of the study that should be beyond a scientist’s control. Many scientists have told tales of pressure to make ambiguous results tell a compelling story, fearing that quality studies that produce negative or less conclusive results are unpublishable. Many megajournals not only accept but encourage replications and null results on a large scale, both of which are critical to moving science forward.

- Moving beyond legacy models. This is not to say that only megajournals innovate – this is clearly not the case. But megajournals have been willing to experiment with models that challenge an entrenched status quo. Think of the variants of open review at PeerJ or Collabra, or PeerJ’s leadership in the preprints space. Or PLOS ONE’s requirement to deposit data alongside a paper to support both assessment and replication. Key here has been scale and thus the ability to drive innovation beyond any individual title. Not only does PLOS ONE have more than 80,000 published data availability statements, but this scale has helped to change attitudes and practice more widely.

- The value of an outlet that is broad enough for an era of “big science”. More and more science crosses boundaries both within the natural sciences and beyond – the biggest problems in our world cannot be solved by any single discipline. Megajournals are broad enough to accommodate that breadth and complexity, and to build networks across disciplines and national boundaries. Collaboration and team science are also a tenet of improving reproducibility: more collaborators bring a greater range of theoretical and disciplinary perspectives.

Of course, such features are not all unique to megajournals, but their breadth and openness make them critical players in moving towards an expanded research cycle and a scientific culture that is collaborative, transparent and accessible.

The future of the megajournal

So what of the future? Given demand, it doesn’t seem that the megajournal is going to disappear any time soon. The range of models, which already incorporates more focused journals (such as BMJ Open), arguably extends to include different models beyond STM such as Open Library for the Humanities, and perhaps even the megajournal-that-isn’t, F1000 Research. Growing diversity and evolution of the model is a strength, but none of this means that there aren’t some real challenges:

- The filtering function has always been a critical one for journals, and many have made the case that this is lost or at best watered down in megajournals. I’ve already made the case that screening hurdles and rejection rates demonstrate one form of filter. In terms of search and browse, PLOS ONE added overlays through PLOS Collections and PLOS Channels, through which content can be fed and curated, and other journals have clear content streams and filters. But as for all journals, there’s more work to be done to efficiently guide users to the research results likely to be of greatest value to them.

- In focusing peer review on soundness, megajournals have also sought to supplement that with forms of post-publication and ongoing community review, but, as we know, crowdsourced review has not taken off to date. Various forms of altmetrics show how a paper is cited and discussed, but institutionalized reliance on a single, static review of a work’s qualities has inhibited the development of robust mechanisms of assessment that would give users of the literature an accurate and evolving picture of a published work’s reliability and significance throughout its useful lifetime. Simple, easy-to-use tools, perhaps seeded with formal reviewer evaluation, would also help to address the filtering challenge.

- Another key issue is price. One of the many disadvantages of high selectivity is its cost: the 10% of accepted papers also have to cover the cost of the 90% of rejected papers. A megajournal like PLOS ONE still has a 50% rejection rate and thus still bears significant cost for rejected papers, meaning that the hoped-for steady reduction in the cost of publishing has not materialized. Some combination of AI and machine-learning will likely be able to automate initial screening, but that won’t address the other side of this coin: it’s clear from APC pricing at Scientific Reports, PLOS ONE and PeerJ that scientists aren’t shopping around based on price. Which brings us neatly back to where we started, and the perverse incentives to publish in high status outlets.
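The cost point above can be made concrete with a short sketch. It assumes, purely for illustration, that every submission incurs the same handling cost whether it is accepted or rejected, and that only accepted papers bring in revenue. The function name and the cost figure of 100 are hypothetical and are not drawn from any publisher’s accounts.

```python
def handling_cost_per_accepted_paper(cost_per_submission: float,
                                     acceptance_rate: float) -> float:
    """Handling cost each accepted paper must cover, rejected papers included.

    Assumption (illustrative only): every submission costs the same to handle,
    and only accepted papers generate revenue.
    """
    if not 0 < acceptance_rate <= 1:
        raise ValueError("acceptance_rate must be in the interval (0, 1]")
    return cost_per_submission / acceptance_rate


# Hypothetical unit cost of handling one submission (arbitrary currency units).
UNIT_COST = 100.0

# A highly selective journal accepting 10% of submissions.
selective = handling_cost_per_accepted_paper(UNIT_COST, 0.10)

# A megajournal with a 50% rejection rate, i.e. a 50% acceptance rate.
megajournal = handling_cost_per_accepted_paper(UNIT_COST, 0.50)

print(f"selective:   {selective / UNIT_COST:.0f}x the unit handling cost")
print(f"megajournal: {megajournal / UNIT_COST:.0f}x the unit handling cost")
```

Under these simplified assumptions, an accepted paper at the selective journal carries about ten times the unit handling cost and one at the megajournal about twice, so a 50% rejection rate lowers the overhead from rejected papers but does not remove it.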

Clearly, the kind of broad changes in scientific practice and culture that have been called for require action from many stakeholders over many years. But as the numbers show, megajournals are now a key player in the scholarly publishing landscape. While they may struggle to compete with the über-brands at their own game, they can play a significant role in challenging the status quo and facilitating a transition to an open, transparent system of research communication.

Discussion

10 Thoughts on "Countering the Über-Brands: The Case for the Megajournal"

I think OA and megajournals are all wonderful developments, but where I find myself not in agreement with many advocates is that I simply don’t see why anyone is being asked to choose OA and megajournals over, say, the careful editorial work at JAMA or Science. The traditional journals do something very well, the new (if maturing) OA journals do something very well. Why must someone who likes steak be told that they must not under any circumstances like the fish? To my mind the problem is not OA or traditional models but the discourse that has grown up around them, which is needlessly political in nature. It’s a big world; enjoy it all.

I was struck by similar comments you made on a previous post in SK, Joe, and agree with your basic point. But OA advocates aren’t the only ones guilty of forcing a false choice by dismissing any value in other options. I think that PLOS’s portfolio – and others – demonstrate the value of a portfolio that also includes more selective titles alongside a megajournal.

Well, of course, Alison: there are foolhardy comments (including some on the Kitchen) about the horrors of OA. But let’s not fall into the “talking to stupid people” mistake, where one spends time in a dialogue with someone who isn’t really grasping the issues. Let’s hear it for the megajournals, and let’s hear it for the Uber-journals. Let’s make the discourse around scholarly communications as thoughtful as the content of the publications themselves.

As I hope is clear in my post, my critique is not of elite journals themselves, nor do I dispute that they provide strong service and publish some excellent science. My focus is on the damage done by the pursuit of an elite publication (well documented by scientists themselves) and the ways in which megajournals can contribute to fostering a better culture for science. This isn’t to say that other journals can’t do that too, but I think that some of the unique characteristics of megajournals make them particularly well suited to this task.

A valuable review of the state and challenges facing megajournals, thanks, Alison. But I have some questions:

1) Can the assessment based on methodological soundness work for megajournals in the humanities like OLH and the newly launched megajournal from UCL? A philosopher who comes from the Anglo-American analytic tradition is not going to accept the “soundness” of a methodology that comes from the Continental tradition, and vice versa, just to use one example of how sharp disagreements about methodology are in the humanities.

2) If assessment based on methodological soundness works for journal articles, why not apply it to book publication as well? Would you have accepted this approach for the Luminos monograph series at UC Press?

3) I’m even suspicious of basing publication on methodological soundness in the social sciences. There are still fierce ongoing debates about what methods are sound in the social sciences. I can’t speak as much about STEM, but would physicists enamored of quantum physics consider the methods of assessing work in relativity theory to be sound?

4) The success of AI and machine-learning in assessing soundness presumes that there is unanimity about what counts as sound, does it not?

Sandy, I don’t think that the methodological soundness approach translates directly to the humanities. As those of us who’ve spent time in those fields know, peer review there – whether of journal articles or books – plays a substantively different role (which we discussed here in the Kitchen https://scholarlykitchen.sspnet.org/2016/09/21/peer-review-in-the-humanities-and-social-sciences-if-it-aint-broke-dont-fix-it/). And yes, methodological soundness also varies across the sciences broadly – a post such as this is necessarily somewhat high level, but there are always important differences to be recognized across fields and communities.

A question about the continuing growth of megajournals. How much of the overall growth in the total number of articles published in megajournals can be attributed to annual growth in the total number of articles overall? Does megajournal growth continue to outpace growth in other types of journals, or is it merely keeping pace with the rest of the market?

That’s a good question, and I’m not sure if we yet have data to answer that (the most recent “actuals” I could find were from 2015). I think that the growth in output of megajournals has been driven by (1) overall output growth, as you suggest, and (2) the creation of efficient cascades that move articles from more selective titles into a megajournal in the same portfolio (rather than resubmission to an alternative selective title). Both of these trends seem to be continuing, and the second suggests that megajournal output may continue to outpace growth elsewhere.

Hi both,

It’s a good question. The short answer: best case, around one quarter to one third of the growth in Megajournals can be attributed to underlying growth in scholarly output.

Figures from Delta Think’s OA market analytics suggest that megajournal output grew by 14% and 18% in 2015 and 2016 respectively, compared with around a 5% annual growth in total article output. We don’t yet have reliable data for 2017 output. (If pushed, we would speculate that data will show that MJ output will slow in 2017 given the decline in PLOS One – sorry, Alison! – but that it is unlikely to lag behind the market.)
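One plausible way to reproduce the “one quarter to one third” estimate is to divide the market-wide growth rate by the megajournal growth rate for each year. The sketch below uses only the percentages quoted in this comment; reading the estimate as a simple ratio is an assumption, and the function name is hypothetical.

```python
def share_from_market_growth(market_growth: float,
                             megajournal_growth: float) -> float:
    """Fraction of megajournal growth matched by overall market growth.

    Assumption: the share attributable to underlying growth is simply the
    ratio of the two annual growth rates.
    """
    if megajournal_growth <= 0:
        raise ValueError("megajournal_growth must be positive")
    return market_growth / megajournal_growth


MARKET_GROWTH = 0.05  # roughly 5% annual growth in total article output

# Megajournal output growth figures quoted above for 2015 and 2016.
for year, mj_growth in ((2015, 0.14), (2016, 0.18)):
    share = share_from_market_growth(MARKET_GROWTH, mj_growth)
    print(f"{year}: {share:.0%} of megajournal growth tracks the market")
```

On this reading the share comes out at roughly 36% for 2015 and 28% for 2016, which is consistent with the range given in the short answer.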

As a biologist and author, I have begun to wonder what the point of a megajournal is in a world of preprint servers such as bioRxiv. Why should one pay to publish in a non-selective, non-prestigious journal if one can simply deposit the study in a free preprint archive?