Unsub is the game-changing data analysis service that is helping librarians forecast, explore, and optimize their alternatives to the Big Deal. Unsub (known as Unpaywall Journals until just this week) supports librarians in making independent assessments of the value of their journal subscriptions relative to price paid rather than relying upon publisher-provided data alone. Librarians breaking away from the Big Deal often credit Unsub as a critical component of their strategy. I am grateful to Heather Piwowar and Jason Priem, co-founders of Our Research, a small nonprofit organization with an innocuous-sounding name that is the provider of Unsub, for taking time to answer some questions for the benefit of the readers of The Scholarly Kitchen.

As background, what is the origin story of Our Research? Where are you today with respect to mission, funding, and staffing?

Origin story: We got started in 2012 at a hackathon. We worked all night on the Impactstory Profiles website, and then when we got home we couldn’t stop! Eventually the Sloan Foundation gave us a grant that allowed us to do it full-time. Since then, a number of projects have branched off from Impactstory Profiles.

Mission: Research (as distinct from, say, alchemy) has always been done together with a community, making it less mine and more ours. Our Research works to make research effective by making it more ours. To do that we build scholarly communications tools that make research more open, connected, and reusable — for everyone.

Funding: We have been funded by grants, including from Sloan, NSF, Clarivate, OSF, and Shuttleworth. We have a current grant from Arcadia, a charitable fund of Lisbet Rausing and Peter Baldwin. However, these days most of our funding comes from premium services built on top of our free Unpaywall database.

Staffing: We have a very small staff of three permanent full-timers. We do a lot of contracting out as well; we’ve found that this helps us stay agile and cost-effective.

What is Unsub?

Unsub is a tool that helps librarians analyze and optimize their serials subscriptions; it’s like cost-per-use (CPU) analysis on steroids. Using Unsub, librarians can get better value for their shrinking subscription dollar — often by replacing expensive, leaky Big Deals with smaller, more custom collections of a-la-carte titles.

How’s it work? There are three stages:

1. Gather the data: Libraries (or consortia) upload their COUNTER reports; we take it from there.

For each journal, we collect:

- citation and authorship rates from researchers at the library’s institution,

- costs of different modes of access (e.g., a-la-carte subscription, interlibrary loan (ILL) or document delivery fulfillment), and

- rates of open access and backfile fulfillment.

This last category is where a lot of the value of the analysis comes from; we find that up to half of content requests can be fulfilled via open access, for free.

2. Analyze the data. We process all of this data into a customized forecasting model that predicts a given library’s costs and fulfillment rates for the next five years, for each journal. Libraries can customize all the model’s assumptions, reflecting different levels of risk tolerance and creating worst-case and best-case scenarios.

3. Act on the data. In most cases, the models demonstrate that the Big Deal delivers great coverage, but poor value. By relying on open access, and strategically subscribing to high-value titles, libraries can often deliver around 80% of the fulfillment at 20% of the cost. Armed with this data, librarians can a) negotiate with publishers more successfully and b) support decisions to cancel, should they decide to.
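The forecasting in stage 2 can be illustrated with a minimal sketch of how adjustable assumptions turn into worst-case and best-case projections. This is not Unsub’s model. The `Scenario` fields, the compounding price increase, and the linear growth in open access share are all hypothetical assumptions, chosen only to show the idea.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Scenario:
    """Hypothetical forecast assumptions (not Unsub's actual parameters)."""
    price_increase: float = 0.05  # assumed yearly subscription price inflation
    oa_growth: float = 0.02       # assumed yearly rise in OA share, in absolute points
    years: int = 5                # five-year horizon, as described in stage 2


def forecast(base_price: float, base_oa_share: float, s: Scenario) -> List[Dict[str, float]]:
    """Project one journal's price and OA share for each year of the horizon."""
    rows = []
    for year in range(1, s.years + 1):
        rows.append({
            "year": year,
            # Compound the price increase year over year.
            "price": base_price * (1 + s.price_increase) ** year,
            # Grow the OA share linearly, capped at 100% of requests.
            "oa_share": min(1.0, base_oa_share + s.oa_growth * year),
        })
    return rows


# Worst and best cases are simply different assumption sets.
worst_case = Scenario(price_increase=0.08, oa_growth=0.0)
best_case = Scenario(price_increase=0.03, oa_growth=0.05)
```

Running `forecast` once per scenario for the same journal shows how sensitive the outcome is to each assumption. That sensitivity is what lets a library match the model to its own risk tolerance.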

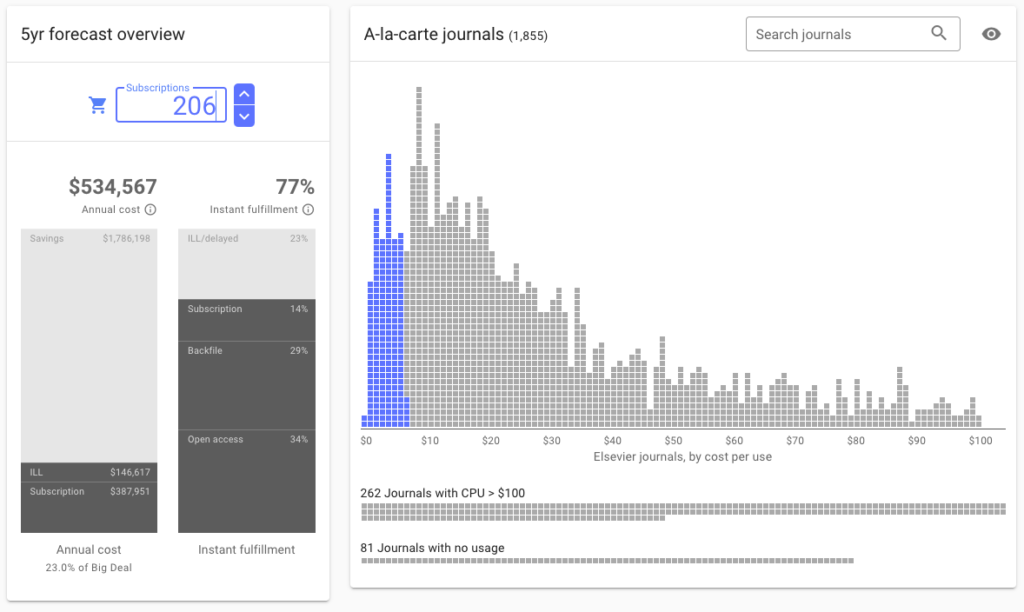

You can explore a free demo of the tool on our website, but here’s a quick summary of what the reporting looks like:

This demo institution uses real data from an anonymous university. On the right we see the journals in their Elsevier Big Deal, arranged by cost-per-use (CPU), accounting for open access.

By subscribing to the 206 most valuable (low CPU) titles, one can see on the left that:

- They’ll spend about $535k per year on subscriptions and ILL/document delivery. That’s only 23% of their previous Big Deal spend.

- They’ll deliver instant access for 77% of content requests, via a combination of a-la-carte subscriptions, perpetual access backfile, and open access. The remaining 23% will be met via ILL/document delivery.
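The selection logic in the demo can be sketched in Python: rank titles by cost-per-use after accounting for open access and backfile, then subscribe to the lowest-CPU titles. This is a hypothetical simplification, not Unsub’s code. The `Journal` fields are assumptions. So is the rule that every request not covered by OA, backfile, or a subscription goes to ILL/document delivery at a flat `ill_cost_per_request`.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Journal:
    """One title in a Big Deal; all fields are hypothetical inputs."""
    title: str
    price: float           # annual a-la-carte subscription price (USD)
    requests: int          # annual content requests, e.g. from COUNTER reports
    oa_share: float        # fraction of requests fulfillable via open access
    backfile_share: float  # fraction fulfillable via perpetual-access backfile

    @property
    def paid_requests(self) -> float:
        """Requests that only a current subscription (or ILL) can satisfy."""
        return self.requests * max(0.0, 1.0 - self.oa_share - self.backfile_share)

    @property
    def adjusted_cpu(self) -> float:
        """Cost per use, counting only requests that OA and backfile cannot meet."""
        paid = self.paid_requests
        return self.price / paid if paid > 0 else float("inf")


def evaluate(journals: List[Journal], n_subscribe: int,
             ill_cost_per_request: float) -> Tuple[float, float]:
    """Subscribe to the n lowest-CPU titles; return (annual spend, instant-access share).

    Simplifying assumption: every request not met by OA, backfile, or a
    subscription is filled by ILL/document delivery at a flat per-request cost.
    """
    ranked = sorted(journals, key=lambda j: j.adjusted_cpu)
    subscribed, dropped = ranked[:n_subscribe], ranked[n_subscribe:]

    total = sum(j.requests for j in journals)
    free = sum(j.requests - j.paid_requests for j in journals)  # OA + backfile
    instant = free + sum(j.paid_requests for j in subscribed)

    spend = (sum(j.price for j in subscribed)
             + ill_cost_per_request * sum(j.paid_requests for j in dropped))
    return spend, (instant / total if total else 0.0)
```

Raising `n_subscribe` trades higher spend for a higher instant-access share. Sweeping it from zero to the full title list traces the kind of cost-versus-coverage trade-off summarized above.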

It is up to the library, of course, how it uses the data from Unsub. But, regardless of what a library does, it will do so better informed.

We’re sustaining the service by charging $1,000 annually per institution (consortia pay $800 per member institution). We’ve set that price as low as possible; for us, this service is about fulfilling our nonprofit mission, not maximizing revenue. We think it’s a pretty solid value.

How are libraries using Unsub? Are there uses besides driving the cancellation of journal subscriptions?

We are really focusing on “driving cancellation of journal subscriptions” for now. We are hearing from our users (about 300 libraries) that this service is a game-changer for them, and that seems especially true in these challenging times.

Most institutions will be making cuts. That’s unfortunate. But in most cases, it’s not as bad as librarians or faculty fear. Open access provides a lot of headroom that didn’t exist even five years ago. Librarians can limit the impact of cuts, while hitting budget goals, if they have the data they need.

We think it’s a bit like the early days of “Moneyball” (true story and later a Brad Pitt movie), when the Oakland A’s realized that they didn’t have the budget to sign the big-name baseball stars. Their solution? They started bringing better data and more advanced statistics into evaluating players, focusing on signing players who were good value for money rather than big names. And it worked. Data-driven decision-making turned the A’s into a winning franchise. A lot of libraries are about to go from New York Yankees budgets to Oakland A’s budgets. We think Unsub can help them flourish, even as they cut costs, by bringing more and better data to their decision-making.

We’re happy to report an early success story (many thanks to SUNY Library Senior Strategist Mark McBride for permission to reproduce this quote):

“Unsub was instrumental in SUNY’s decision-making process that led to us cancelling the ‘big deal’ with Elsevier. Their efforts resulted in us saving our campuses nearly $7 million.”

How are publishers responding to Unsub? Have any used it to analyze the titles they publish?

We’ve received off-the-record feedback from several publishers amounting to this: “Unsub is going to kill our subscription model…thanks! We’ve wanted to flip, but haven’t had the courage yet.”

We were surprised at that, but pleased.

We haven’t heard much other feedback from publishers. But we are looking forward to working with them. Unsub provides a new, more complete picture of the value publishers provide. We reckon that’s great news for publishers: If you’re delivering solid value right now, fantastic! You’ve got a new way to prove it. If you’re delivering less value now, you’ve got a great opportunity to make some changes.

You also have a project called Unpaywall, which is a free database of open access scholarly articles. What is Unpaywall and how are Unsub and Unpaywall related?

The question covered it pretty well: Unpaywall is a free and open index of all the world’s open access articles. We use the data in the Unpaywall database to assess open access prevalence by journal, which makes it a very important data source for the Unsub tool.

Until recently, I would have said your most well-known project was Impactstory. How is that project progressing with the current attention on Unsub?

Unfortunately Impactstory is in a bit of a holding pattern for now. The plan was always to move from grant funding to charging users for sustainability. We did that, but the user demand wasn’t sufficient to cover our costs, so we’ve had to put that plan on hold.

That said, we deem the project a big success, because it helped further the conversation around alternative means of evaluating researchers — and it led directly to the creation of Unpaywall, which was originally just a feature designed to enrich Impactstory profiles.

How do you get your ideas for projects and do you have any new/upcoming projects that you can tell us about?

We’ve got a big long list of project ideas, and 99% of them never amount to anything. But sometimes all the forces align: folks have a real problem, we can fix it, it aligns with our mission, and (this is key!) it looks like fun. Then we start talking to people about it. If it still looks good after that, we try to launch a prototype ASAP, to get more feedback, and then we iterate from there. That’s probably from our hackathon roots…all our projects are Skunkworks projects.

Right now we are very focused on Unsub. It’s growing very quickly, and so we’re pretty full-on keeping up with demand.

How are you evaluating your success as a nonprofit?

Well, there was an era when “did we die this month” was our main evaluation criterion! Like many nonprofits in this space, we’ve struggled to make ends meet at times. Our stance on Open Everything (we insist on all open source code, all open data, etc.) closes some revenue doors, which adds to the challenge.

But we’re happy to say that we’ve been doing very well on that particular criterion — ability to stay operational — for many years now! And the future is looking quite bright as well. It feels nice to be able to say that! And of course, we are still Open Everything.

We also measure various smaller KPIs like everyone else does. For instance, we track the number of API requests fulfilled by Unpaywall (about 2 million/day). For Unsub, we’d like to see 1000 library subscribers by the end of the year, which we’re on track to hit. It’s hard to make short-term goals for subscription savings (since libraries’ contracts with publishers are often multi-year), but we expect to see Unsub collectively save libraries more than $10 million by year’s end.

Is there anything I haven’t asked you yet that you hoped to be able to tell me about?

Nope! Other than that we’re delighted and honored to be interviewed in the Kitchen — thanks very much! Oh, and also we’re super excited to hear feedback about Unsub, good and bad, from anyone. It’s still early days and we know we have a lot to learn.

Discussion

50 Thoughts on "Taking a Big Bite Out of the Big Deal"

My concern with Unsub is that it essentially rewards bad behavior by publishers and punishes progressive actions by publishers looking to drive an open agenda. It enables libraries to cancel subscriptions from publishers that have responded to community and library demands and made content as openly available as possible, while preserving and concentrating funds on publishers who have ignored the community and kept all content under strict lockdown. The logical conclusions are going to be demands for longer Green OA embargoes (we can finally put to rest the nonsensical notion that short embargoes have no impact on subscriptions), and a sharp decrease in so-called “Bronze” OA, as publishers realize that libraries are going to punish them for voluntarily making content publicly available. The financial hit of canceled subscriptions may be devastating for smaller publishers, resulting in them either partnering with, or selling their journals off to the larger publishers, particularly those with more closed programs that will continue to thrive under Unsub, meaning more market consolidation toward the least progressive companies.

Unsub also makes clear the reality of library budgets and the pragmatism they require, rather than the idealism and lofty goals many libraries espouse. Using this tool to cut spending on journals that have taken an open approach to access sends a very clear message that this is something libraries wish to discourage. The market will take heed and respond accordingly.

So far zero of our customers have expressed an interest in canceling journals from small publishers — all of them are interested in reassessing the value of their Big Deals from the big publishers.

“So far” being an important qualifier, particularly with major budget cuts on the post-COVID horizon. I suspect that most libraries will be happy to save money any way they can.

Regardless, even if only applied to big entities, a tool that preserves investment in the most conservative and locked-down publishers while promoting the financial punishment of progressive and open publishers seems counter-productive to me.

The tool will not hurt the most progressive and open publishers — those who publish Gold or Platinum OA journals.

The days of making money from restricting access to scholarship are numbered. Those wheels are already in motion.

But it will harm those who are trying to get there, much more so than those who refuse progress at all. The shift to fully OA has created an enormous number of financial hurdles for most publishers, and reducing revenues during that transition (while fully funding those who are not making the transition) will slow progress and cause backward movement.

I wouldn’t characterize not providing bronze or green OA options as “bad behavior by a publisher,” personally. It’s a business decision. (I realize other librarians may not share my perspective on this.)

Unsub allows libraries to make informed decisions. We can’t spend money we don’t have and we are going to have a lot less of it going forward.

We should remember, however, that libraries do spend money on content they don’t have to: libraries funding the Open Library of the Humanities, or adding Annual Reviews under Subscribe to Open, are clear examples. What Unsub also allows libraries to do is base their decisions on far more than OA data alone: usage data, cost of ILL provision, etc. Personally, I do not expect libraries to rely solely on OA data.

To me, small publishers have little to fear from Unsub. They didn’t have access to the Big Deal with libraries to begin with so there’s no Big Deal to pull out of with them. If anything, this could free monies currently locked up in a Big Deal, paying for low value titles, to be spent on smaller publishers providing higher value content.

I think Unsub draws a clear line between the pragmatic nature of managing a library budget and the aspirational idealism that many librarians espouse. Also, assuming that funds saved from cutting Big Deals will remain in the library should not be a given.

Definitely not a given. Of course, right now it’s more likely that the budget has been cut already. We just have to figure out where to take it from. My vote is that libraries make that choice as informed as we can. Unsub is a component of that.

Does Elsevier have any power to do anything about its costs? Or are they a mindless black-box monolith that cannot operate other than the way they do? If they won’t change without a fight, we should fight them; if that hurts in the end, it was as much (or more) Elsevier’s fault, right? How else would we hold these companies accountable? Begging? Wishing on a star?

I can’t agree with you, David. Why should libraries pay for publishers’ poor decisions? You use the word “progressive” for publishers where I would use “foolish.” There continues to be this notion that anyone speaks for Science or The Community. No one does; there is no angel in the heavens assigned to science, knowledge, or publishing. People responsible for an organization should be working on behalf of their organization, not an abstraction. Libraries should do the same, and they are, as predicted by yours truly many years ago. Priem and Piwowar are simply exploiting publishers’ weaknesses, like a boxer who waits for an opponent to drop the left hand before swinging with the right. Green, not Gold, OA was the great blunder that cannot be undone.

Ouch.

Well, one man’s mission-driven activities to improve scholarship and the spread of knowledge are another man’s poor business decision I suppose. As has long been a subject of discussion, many publishing organizations act not primarily to drive profits but as part of a larger program to improve the research process and benefit the community (https://scholarlykitchen.sspnet.org/2018/10/01/societies-mission-and-publishing-why-one-size-does-not-fit-all/). Though they aren’t the end goal, revenues are needed to accomplish those missions. I think there’s also an assumption of good faith from our community partners in these goals, and tools like Unsub, as you note, exploit that assumption. Green OA may indeed have been a mistake, or at least believing the repeated promises that it wouldn’t influence library subscription decisions was the mistake. Libraries are not necessarily acting in bad faith here, but due to chronic underfunding (http://deltathink.com/news-views-library-spending-and-the-serials-crisis/) have little choice. The tool is going to cause publishers to become more conservative and slow their willingness to make materials publicly accessible, which to me is a bad thing for the research community and world at large.

“The tool is going to cause publishers to become more conservative and slow their willingness to make materials publicly accessible, which to me is a bad thing for the research community and world at large.” Wait … are you suggesting that publishers are going to act in their self-interest and that is a bad thing for research and the world at large? How dare they!

I think Heather has ably covered our angle on this, but just wanted to add my 2¢. David, if I understand you correctly, you’re saying that not all toll-access publishers are the same: some are good guys (more open), and some are bad guys (less open), and Unsub hurts the good guys while rewarding bad guys. There’s the sense that this puts the cold logic of budgets ahead of the idealistic goal of libraries (and publishers) to support scholarly communication and make the world a wiser, better place.

I love that you see things this way, as about ideals and not just revenues. I couldn’t agree more! This is why we built Unsub, in fact: we think that toll-access publishing does not serve the ideals of scholarly communication as well as Open Access does, and we want to help move all journals to an OA model.

Currently the “good guys” are in a middle ground between toll-access and OA. Unsub will make it very hard to hold this middle ground. Publishers will have to choose.

One group of publishers will double down on closed. They’ll lock down Green OA by forbidding authors from self-archiving their own work. And they’ll end Bronze OA, which will decrease the visibility of authors’ work. The market will decide the wisdom of these choices. But keep in mind that the ultimate customer in this market is the taxpayer (who is footing most of the bill), and they have shown decreasing interest in supporting this approach (with good reason, imho).

The other group of publishers will follow their ideals. They’ll flip to an Open Access model (Gold or Platinum), moving from partly open to fully open. This model has decades of proven viability for thousands of successful journals. It’s financially sustainable. And it supports the ideals we all care about, ideals like “communism” (in the Mertonian sense of “common ownership,” https://en.wikipedia.org/wiki/Mertonian_norms).

At the end of the day, we’re optimists. We believe most publishers are in this second group; we believe they’ll follow their ideals, not money. Unsub helps give them a nudge by making it harder to ride the fence. As for the publishers who care more for money than ideals…I think their days of 30% profit margins are over, and few will lament their passing.

I think you’re being a bit glib as far as the ease with which a publishing program can flip to OA. Many are committed to this, but need time to evolve to a point where it is possible. If your tool drastically reduces the funds those publishers need to survive this transition, it will be hampered. As noted in previous comments, the likely outcomes are a reduction in Green and Bronze OA as publishers try to stem the tide of losses in order to survive long enough to flip, or they will end up partnering with or selling off to the publishers in your locked-down group with 30% margins, because they will be able to offer financial stability. You offer a nice idea, but I expect there will be a significant number of unexpected consequences that will result.

Also, I think this statement is inaccurate: “…the ultimate customer in this market is the taxpayer (who is footing most of the bill)…” Can you point to any data showing the majority of subscription budgets (at least in the US) are paid by public funds? My understanding is that it varies enormously from institution to institution (private and public), and library budgets come from a combination of tuition, student fees, and in some cases, grant overheads. Would love to see some data if you have it.

All their tool does is help librarians do analysis that they could do themselves, especially with the new COUNTER 5 data that breaks out OA much better than COUNTER 4 did, so I don’t think it’s fair to say “your tool drastically reduces the funds…” – it’s the librarians reducing the funds as they make decisions they need to make with the best possible information. It sounds like you’re arguing in favor of a publisher kind of “security by obscurity” where it’s somehow unfair to smaller publishers to give fully accurate information to librarians to make their painful but necessary budget decisions. I reject philosophically any argument which opposes providing decision makers with maximum relevant and accurate data.

I do believe this tool goes a bit further in finding freely available versions of articles than COUNTER does (correct me if I’m wrong).

And I’m not making any “should” arguments, just noting the end results here, which is that publishers that make lots of stuff free are going to see their subscription revenues diminish as opposed to those who lock things down. Libraries are in an untenable position, I get that, but it saddens me that those who have worked hard to drive progress are going to take the hit here, rather than those who have fought against it. No good deed goes unpunished after all and this is another reminder that asking libraries to voluntarily spend money on things they can get for free is a non-starter (see https://scholarlykitchen.sspnet.org/2019/10/09/roadblocks-to-better-open-access-models/ for more).

I do a little bit of this kind of analysis myself with our COUNTER reports, but one big problem I run into that I’m interested in knowing how Unsub deals with is that title level COUNTER reports don’t distinguish titles in one package from another. For example, we have an Elsevier Big Deal, but we also have several titles we pay for outside that deal, and they are among the most heavily used titles on ScienceDirect, so their usage data is far from trivial for cost-per-use calculation. But from the COUNTER title reports, you’d never know those titles aren’t part of the same price-package as the rest. Do you allow your customers to also upload for you their titles and individual pricing on the journals from the same platform that are outside the Big Deal so you can exclude them from the analysis?

Yes we do!

This may be because there aren’t many small publishers left who are independent of big publisher contracts, hence Big Deals. Those that are left usually have tentpole journals, but the recent announcement of JBC (ASBMB) contracting with Elsevier shows that even this defense is eroding.

Most Big Deals are bundles of smaller journals, especially with more society publishers being driven into the arms of big publishers because of OA and a difficult subscription marketplace. Because Unsub’s proxy measures deal with CPU — which will inevitably be higher for low-usage titles, meaning those in smaller fields, because the fixed costs of running a journal are spread over a smaller audience — “reassessing the value” of Big Deals will inevitably lead to the incidental effect of hurting smaller journals in smaller fields, given your use of proxy measures.

Unsub seemed positioned to accidentally wipe out journals from smaller disciplines simply because its financial assumptions don’t factor in how hard it is to spread fixed costs when the audience is limited.
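The fixed-cost argument can be made explicit with a simple model. Assume, hypothetically, that a journal’s price covers a fixed annual cost $F$ plus a marginal cost $c$ per use, and that the journal receives $U$ uses per year:

$$\mathrm{CPU}(U) = \frac{F + cU}{U} = \frac{F}{U} + c$$

Under this assumption the $F/U$ term grows as $U$ shrinks. A title in a small field therefore shows a higher cost-per-use even if it is run just as efficiently as a title in a large field.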

I’m unfamiliar with the specific terms of the JBC contract. Does that deal keep the title out of the Big Deal packages like the Cell Press and Clinics journals are? If so, then Unsub is no threat to JBC/ASBMB revenue as per my earlier question and Heather’s response about how they handle subscriptions on the same platform outside of the Big Deal. Of course, all journals that are outside Big Deals are under constant threat of cancellation, as we all know, but having a major publisher providing the elite user services that only a well-developed platform can provide (e.g., EZproxy and OpenAthens support, “My research” personalized accounts, COUNTER-compliant usage data, MARC records, KBART reports to openurl resolver companies, etc.) will probably help, not hurt, the publication’s subscriptions.

> Unsub seemed positioned to accidentally wipe out journals from smaller disciplines

If that’s smaller journals at the big five publishers, I call that a feature! More incentives for their editorial boards to flip the journals to a fully open access publisher.

The big deal creates unnatural incentives. Depending on how you look at it, you may say that the big titles are used to subsidise the poor smaller disciplines, or that publishers multiply quantity above quality to make the dollar-per-download figures look better. In either case, there’s nothing rational about it, and it’s not a free market.

It seems to me that librarians and library leadership are the most astute when it comes to recognizing that CPU does not singularly equal value to their stakeholders. With Unsub, they simply have a tool. The ones I know are pretty sharp and would argue for the journals that are valuable for “smaller disciplines.” It’s a tool, not a mandate. So it’s not Unsub causing the damage, and the library leaders I know understand nuance well enough to use it as a tool.

I think David’s assessment is spot on. Unsub is a tool that can be valuable for libraries to assess packages beyond traditional library ROI in the form of CPA, and I like that we are finally branching out of using one metric to assess library value. However, Unsub is defined by the notion that content will continue to grow in favor of OA, which at this point (especially given the COVID pandemic) I don’t think is easy to predict. At the very least, OA growth will slow tremendously given the current pandemic. Not to mention that the transition to OA will most likely put small publishers out of business, which Unsub has continuously not addressed. With Unsub, they advocate that libraries can get 77% of content through either perpetual access or OA. So what happens when OA slows or when perpetual access is no longer relevant in a few years? Curious about long-term effects of such a tool.

On another note, while I think this tool is great to help libraries to expand their thinking about value, it does have a sort of reductionist effect on the role of the library itself. I wish that this tool was more about how to demonstrate library value on college campuses – the main reason why we are seeing reductions in library budgets.

Also – has anyone thought about the student/researcher experience in all this? Curious to hear about how this could impact discovery, particularly for populations that are not up on the best ways to discover peer-reviewed literature.

Sorry you haven’t heard us address it! Unsub will have a large impact on large publishers, but little or no impact on small publishers. Most libraries have already made severe cuts in offerings from small publishers — it is unbundling their Big Deals from Big publishers where they are now looking for savings.

Is there anything to prevent a library from entering their COUNTER data and doing the same sorts of analyses on their holdings with small publishers? If you’re a library forced to cut costs, wouldn’t you want to look at everything? Assuming this is only ever going to be used against Elsevier seems short-sighted to me.

Yes, of course. Heather is simply wrong here. There will be more consolidation, not less, in the months (and years?) ahead. This trend may be separate from the tool she provides, but there is no way Elsevier and Wiley will be reduced to make room for smaller publishers. Libraries had that option for 20 years and never exercised it. If the big guys are reduced, the provost will pocket the money.

I agree that further consolidation is likely. I’ve been talking about the tool: I think that trend will be separate from the tool we provide.

We are adding support for publishers one by one, with the order based on customer requests (modeling and QA has to be done for each journal, so we can’t just turn on All Journals at once). No small publishers are yet supported, and we’ve received no requests for any. We do plan to support all publishers eventually, but we are a small team and are prioritizing based on customer request.

Libraries already easily analyze individual titles – though of course a dashboard product improves the ability to do so. Let’s remember too that Elsevier is not the only publisher offering a Big Deal nor the only publisher that libraries are breaking from. I understand why publishers would bemoan losing the information asymmetry and having libraries come to the table as more powerful negotiating partners but the notion that employing good business practices (evidence-based decision-making) is short-sighted … well, that seems rather thou-doth-protest-too-much to me.

Do the libraries have access to clear reports on the availability of non-subscription content from those individual titles, and the quantities of their campus usage that falls under that category when doing their analysis? That’s very different from just looking at usage or cost-per-download.

But I agree with you that libraries are acting rationally here, and in their own self-interest rather than the interest of the community or scholarship in general. They are under an incredible amount of financial pressure and need to optimize everything they do. That may mean acting against open access progress in general in favor of supporting their own patrons.

And as Joe notes, the information asymmetry allowed for an era of Green OA that may now be at an end.

Some libraries do and some do not. Really, give libraries some credit. We aren’t just looking at CPU alone nor do we look at it in a non-contextual way. Also, I reject the dichotomy that library self-interest is at odds with the interest of the community or scholarship in general. Just as I reject it when people say that publisher self-interest is necessarily at odds with the interest of the community or scholarship in general. It isn’t at all clear either that breaking the Big Deal acts against open access progress in general … some think that disincentivizing Green OA is a mechanism for making progress to full OA and is not an impediment.

Many small publishers don’t provide COUNTER compliant usage data. Some (cough, Longwoods) don’t provide any usage data at all, leaving us to try to use our ugly raw proxy servers to figure out if their journals are even being used at all.

David, if library budgets continue to be cut for many years running, then yes, eventually libraries would be using tools like Unsub to evaluate smaller and smaller packages.

(To be clear, the following numbers are all completely made up – it’s the value proposition I’m trying to illustrate here).

If a large academic library needed to cut $0.5M from its materials budget between now and when serials invoices are issued, less than six months from now (and many shops will need to cut far more than that this fall), is it better served spending X hours per package analyzing 10 smaller packages that might save $10,000 each (so 10X hours to save $100,000, only a fifth of its dollar target), or spending half as much total time (5X) analyzing one bigger package that might save $500,000, the entire target it needs to cut?
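Mel’s made-up numbers can be compared directly as dollars saved per unit of analysis time. A minimal Python sketch using only the hypothetical figures above; X is set to 1, so the results read as “dollars per X hours” and the comparison does not depend on X’s actual value.

```python
# Figures from the hypothetical example above (explicitly made-up numbers).
TARGET = 500_000  # dollars that must be cut
X = 1.0           # hours to analyze one package; X cancels out of the comparison


def strategy(n_packages, savings_each, hours_total):
    """Summarize one approach: total saved, share of target, savings per X hours."""
    saved = n_packages * savings_each
    return {
        "saved": saved,
        "share_of_target": saved / TARGET,
        "saved_per_X_hours": saved / hours_total,
    }


small = strategy(n_packages=10, savings_each=10_000, hours_total=10 * X)
big = strategy(n_packages=1, savings_each=500_000, hours_total=5 * X)
print("10 small packages:", small)
print("1 big package:    ", big)
```

Under these assumptions the single big package saves ten times as much per hour of analysis and meets the whole target, while the ten small packages reach only 20% of it.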

In an ideal world, every library would be able to take the time to analyze every package it has every year it has to make a cut, and would make changes to (or completely break out of) whichever combination of packages and individual subscriptions provides the worst value, in order to free up the money needed to meet its cancellation target. But doing that kind of “analyze everything” analysis in large libraries every year a library had to make _any_ sort of cut to its materials budget would take many hundreds of person-hours EACH TIME, hours that librarians and staff in those libraries would otherwise be spending supporting the research and teaching missions of their institutions.

In years when libraries receive a small budget cut, many never look at their large packages, preferring instead to trim individual titles or small packages in order to meet a particular cancellation target. But in years when those libraries receive a big cut, or when smaller cuts for many years in a row have led them to already trim out most of the small stuff that provides the least value to their users, then those big package deals become pretty obvious targets.

Thanks Mel, that’s a useful angle to consider. I think you’re right that all the low-hanging fruit has already been trimmed from most budgets. But I wonder if the effort needed to cut a bunch of remaining individual journals or some small packages in their entirety might still be less than dismantling parts of a big package of thousands of journals, with the subsequent renegotiations for the remaining hundreds or thousands of journals that are being kept. My understanding is that big package negotiations can be lengthy and drawn out processes, so it still might be easier to aim for the smaller targets.

David, you are absolutely right that the low-hanging fruit is long gone. For smaller libraries like mine, so are almost all of the individual journal subscriptions except a very small number of absolutely critical titles like Science and Nature. I can say for sure that we’re at the point where, if the CAD doesn’t get much better against the USD between now and December, even cancelling every single individual journal subscription we have left, including Science and Nature, still wouldn’t cover our budget shortfall unless we get additional funds this year (we don’t have our budget for the year yet). Every institution is different in this respect, of course, but that gives you a sense of the scale of what we small libs are dealing with. Re: Mel’s hypothetical about staff time to analyze more, smaller packages, that’s exactly why having our publishers provide COUNTER-compliant usage data is so important. With the new COUNTER 5 data and the ability to harvest many of those reports automatically, doing that analysis on dozens of packages would not take hundreds of staff hours, just minutes for each.
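As an illustration of why machine-readable COUNTER 5 data saves time, here is a minimal sketch that totals one metric per title from a COUNTER R5 journal report (such as TR_J1) in its JSON form. It assumes the report has already been harvested from a vendor’s COUNTER_SUSHI endpoint (credentials and URLs vary by vendor and are not shown), and the sample titles and counts are made up.

```python
from collections import defaultdict


def totals_by_title(report, metric="Unique_Item_Requests"):
    """Sum one metric per journal title across all periods of a COUNTER R5 JSON report."""
    totals = defaultdict(int)
    for item in report.get("Report_Items", []):
        for perf in item.get("Performance", []):
            for inst in perf.get("Instance", []):
                if inst.get("Metric_Type") == metric:
                    totals[item["Title"]] += inst.get("Count", 0)
    return dict(totals)


# Made-up report fragment following the R5 JSON item/performance/instance nesting.
sample_report = {
    "Report_Items": [
        {"Title": "Journal A", "Performance": [
            {"Period": {"Begin_Date": "2019-01-01", "End_Date": "2019-01-31"},
             "Instance": [{"Metric_Type": "Total_Item_Requests", "Count": 12},
                          {"Metric_Type": "Unique_Item_Requests", "Count": 9}]},
            {"Period": {"Begin_Date": "2019-02-01", "End_Date": "2019-02-28"},
             "Instance": [{"Metric_Type": "Unique_Item_Requests", "Count": 4}]},
        ]},
        {"Title": "Journal B", "Performance": [
            {"Period": {"Begin_Date": "2019-01-01", "End_Date": "2019-01-31"},
             "Instance": [{"Metric_Type": "Unique_Item_Requests", "Count": 1}]},
        ]},
    ]
}
print(totals_by_title(sample_report))
```

Because every compliant vendor returns the same structure, the same function can be run over each package’s report, which is where the “minutes per package” saving comes from.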

My question was less about the time needed to analyze the packages, rather the time needed to re-negotiate the larger packages from the larger publishers.

Unless a library is going to just accept and pay whatever price increase the publisher wants for the new deal, the library is entering negotiations for the Big Deal. For what it’s worth, while the timeline is long for many of those who have broken the Big Deal, my understanding is that the actual hours of work in negotiations aren’t that many. Either side may be looking to lengthen or shorten the overall timeframe of the process. But it takes fewer hours than we might think when we see 1-2 years of negotiations.

David, to address your question: yes, it DOES take a long time to work through big package negotiations, although in some cases the process stretches over a long period with huge gaps between the two bargaining entities’ conversations. We just redid our Elsevier deal this past year, and my wife was on the UW negotiating team, so she gave me a pretty good idea of the time and effort they put into that process.

To Melissa and David, the numbers I was talking about previously referred to all the time that went into ALL facets of a serials cancellation project, not just gathering and crunching the usage data. When we go through a serials review and cancellation, we put together a data package that includes every subscribed title, publisher, ISSN, package name (if it’s part of one), any cancellation restrictions (e.g., if a title is part of a package and we’re in the middle of a multi-year deal, that title is off the table for cancellation consideration), what we paid for each title or package over the last 3-5 years, metrics (like JIF), and more. That data is then shared with over 70 subject librarians across three campuses and our health sciences operation, who in turn each spend umpteen hours communicating with their liaison areas throughout the “serials review” process. Then, once cancellation decisions are made, our serials staff spend dozens of hours contacting publishers to let them know we’re cancelling a title or package completely, cancelling a package and picking up a subset instead, cancelling a package but picking individual titles back up, etc. So the time spent pulling together the usage data is only a fraction of the total time that gets invested in our serials review and cancellation process. And in terms of total time (serials review and cancellation vs. negotiating a big package deal), it’s no contest. We had four librarians on the Elsevier negotiating team, plus four faculty. While they met many times over the course of the entire negotiation process, that time investment didn’t come close to the amount of time we would collectively spend on a full serials review and cancellation.
Obviously, for smaller institutions/libraries you might be talking about the same number of people and amount of time involved in a big package negotiation, but less time spent by librarians and staff in the serials review and cancellation process, simply because there are fewer librarians to involve and fewer faculty/departments/centers/schools/colleges to talk with. But at a place our size, a full serials review and cancellation takes far more time from start to finish than a big package negotiation does.
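The per-title data package described above can be sketched as a simple record plus a filter that drops titles locked into a multi-year deal. This is a hypothetical structure for illustration only (the field names, the `locked_until` convention, and the placeholder ISSNs are all assumptions), not the schema any particular library uses.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Title:
    name: str
    publisher: str
    issn: str
    package: Optional[str]        # None if subscribed individually
    locked_until: Optional[int]   # hypothetical: last year of a multi-year deal, if any
    costs: dict = field(default_factory=dict)  # year -> amount paid


def reviewable(titles, review_year):
    """Titles that can actually be considered for cancellation in review_year."""
    return [t for t in titles
            if t.locked_until is None or t.locked_until < review_year]


# Placeholder data; ISSNs are not real.
titles = [
    Title("Journal A", "Publisher X", "0000-0001", "Big Package", 2022, {2019: 900.0, 2020: 950.0}),
    Title("Journal B", "Publisher Y", "0000-0002", None, None, {2019: 400.0, 2020: 420.0}),
    Title("Journal C", "Publisher Z", "0000-0003", "Small Package", 2020, {2019: 300.0, 2020: 310.0}),
]
print([t.name for t in reviewable(titles, 2021)])
```

Filtering out locked titles first keeps subject librarians from spending consultation time on titles that cannot be cancelled that year anyway.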

Wow, what a different world a big institution is! At my library, I’d just pull up 3 years of COUNTER data, summarize it in a short table with the cost and cost per FT use, and present it to our 6(!) librarians and University Librarian (head of the library), and we’d talk it over together and make a decision. That’s it. We don’t consult faculty because there’s no point: they’re only going to complain that they just can’t live without such-and-such titles, regardless of what the usage data says they are actually using. It’s our responsibility to balance the library budget, so it must be our authority to make budget decisions. What’s the point of talking to them when the usage data tells us everything about what they actually use? We do check to make sure we don’t violate any program accreditation requirements, but we don’t need a complex consultation with a committee of faculty to determine that.
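The “short table with the cost and cost per FT use” described above can be produced with a few lines of Python. A minimal sketch with made-up titles and figures; it sums three years of cost and full-text use per title and sorts by cost per use, worst value first, treating zero-use titles as having infinite cost per use.

```python
def cost_per_use_table(costs, uses):
    """Return (title, total cost, total uses, cost per use) rows, worst value first.

    costs: title -> list of annual costs; uses: title -> list of annual full-text uses.
    """
    rows = []
    for title, annual_costs in costs.items():
        total_cost = sum(annual_costs)
        total_uses = sum(uses.get(title, []))
        cpu = total_cost / total_uses if total_uses else float("inf")
        rows.append((title, total_cost, total_uses, cpu))
    return sorted(rows, key=lambda row: row[3], reverse=True)


# Made-up three-year figures.
costs = {"Journal A": [1000, 1050, 1100], "Journal B": [500, 520, 540], "Journal C": [800, 800, 800]}
uses = {"Journal A": [300, 250, 200], "Journal B": [5, 3, 2], "Journal C": [0, 0, 0]}

for title, cost, n, cpu in cost_per_use_table(costs, uses):
    cpu_text = "no use" if n == 0 else f"${cpu:,.2f}/use"
    print(f"{title:<10} ${cost:>6,}  {n:>4} uses  {cpu_text}")
```

Summing across three years smooths out a single unusual year, which is one reason to pull several years of COUNTER data rather than just the latest.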

Yes, Melissa, the size of the institution/library system and the approach to a serials review/cancellation have a huge impact on the time investment required to follow that approach at that scale. As for faculty consultation, it does indeed often result in just what you described, pretty much everybody saying they need everything. But we want our faculty to feel invested in the library and what the library brings to the university’s mission, and one way we do that is to be as consultative as possible on many things. We believe it has helped us gain faculty support on a number of issues, including lobbying the Provost for stronger monetary support (which is actually their own self-interest coming through, since more money for the library means more access for them) and, perhaps most recently, support voiced to the library and to campus administration for a faculty campus open access policy.

As for the cancellation process, yes, the final decision is in the hands of the library. But as I mentioned above, many faculty appreciate being asked, even if they sometimes don’t have a lot to contribute to the decision-making process. Involving faculty in specific subject areas is most often useful when you’re at the point of needing to cancel either X or Y to meet a cancellation target and both have comparable costs and usage. When it’s that close to a toss-up, why not let the people most impacted weigh in? Then take that situation and multiply it by a couple dozen disciplines across the entire institution (a perhaps conservative estimate in our case), and when the dust settles you find that yes, librarians have made 90-95% of the cancellation decisions, but that, by definition, means the faculty have helped make the other 5-10%.

It is not necessary to apologize; this is the nature of scholarly discussion. I think your messaging about the effect of Unsub on large publishers versus small publishers is slightly overreaching, in that it only addresses the direct effect of breaking down larger deals (whether it’s just Elsevier or eventually all of the large publishers). What I still don’t see addressed are three things that I mentioned in my first post (which, ironically, were not addressed yet again):

1. Much of Unsub’s mission is in support of OA; the estimated value of subscription packages is predicated on continued growth of OA, and you work to provide OA content. What is not addressed is how you will support the OA transition for small publishers, because they are the ones who will suffer most in this transition. Unsub has the power to affect small publishers in this way because there is no guarantee that the library budgets you help free up by reducing the cost of the Big Deal will then be spent on either smaller publishers’ subscriptions or APCs. Much of the research on the OA transition discusses the danger that, in the end, only the large publishers could be left standing. In that case Unsub would not really have been that effective, because customers would eventually have to re-sign deals with the big publishers, having lost any historical relationship pricing benefits when your models told them to cut packages.

2. What is the effect now on the researcher-as-customer experience in terms of finding resources? What does this customer journey look like? You have to address this point if you are going to suggest chopping up subscription deals; you cannot just hang libraries out to dry.

3. My last point from above, which I think was overlooked: Unsub does not come off as the humanitarian product it claims to be. Yes, you are helping libraries with a terrible situation in the interim, and it’s great that you are a non-profit. But just as large publishers make money off the work of others, you too are gaining traction in a field where something really terrible is happening: the decreasing value of the library. If the library stops spending (partly because it has to, having no money, and partly because your data has shown it how to chop up its publishing deals), then what role does the library play in providing resources that are now free for everyone? It seems like you are in turn contributing to the cause (that libraries have low value on campus) rather than helping them get out of that rut. That is really off-putting.

That said, the analytical component of Unsub is great, and I will continue to advocate for it. It would be amazing if you could take your analytical skills and develop tools to support libraries in their increasingly business-centered role as negotiators for budget and value. Libraries need to be able to speak to how they support university faculty recruitment and student retention, and at this point part of those initiatives are still centered around library resources (OA or not).

Thanks for your questions, Kris!

1. Agreed! It’s vital to help publishers (both large and small) who want to flip journals to Open Access. Many journals have successfully flipped already, so it’s possible–but it’s also scary, especially for resource-constrained societies. Thankfully there are some great resources out there to help idealistic publishers and societies flip to OA, and stay sustainable. The SPARC website has a good list of links to these [1].

Again, I’d like to emphasize that this is not wishful thinking! This is cold, hard, market reality. Dozens of journals have already made this flip. Thousands more journals (including very small journals) have been OA since their beginnings. This can be done, it has been done, and it is being done. We are just providing a nudge in the right direction.

That being said, I don’t think all is roses. The long-term industry trend toward consolidation (driven by economies of scale in publishing) indeed poses problems for smaller publishers. That’s been true for twenty years, toll-access and Open Access alike. I don’t think Unsub will make this any worse, but time will tell. What I can say is that I have talked to dozens and dozens of Unsub users, and they all say the same thing: we’re not too worried about cutting the little guys–it’s the Big Deals that need to go. The tool is designed to meet that need.

2. Agreed, OA is not much use if it’s not part of the researcher workflow. Our Unpaywall database is designed to meet that need! It’s a free and open index of all the world’s OA content, and it can be easily integrated into library discovery and fulfillment platforms at no cost. For instance, we serve about 2 million API requests every day from thousands of libraries, helping them deliver free OA articles via their link resolvers.

3. “Accelerate the transition to universal Open Access” is (part of) our nonprofit’s mission. Maybe that’s a good mission, maybe not, but it’s ours.

“Show that libraries are valuable” is a good mission, too! But it’s not ours. Lots of other folks are doing a great job at this–particularly librarians themselves! We try to support them.

We are hearing from our librarian users that Unsub is making their lives easier. And we’re hearing from library administrators that Unsub frees up money that can be used in support of their own imaginative and far-reaching goals. There’s so much energy crackling out there around reimagining libraries for a post-subscription future, and we’re excited to be helping out with that in our own way.

[1] https://sparcopen.org/our-work/transitioning-your-journal/
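For readers wondering what the link-resolver integration in point 2 involves, here is a minimal Python sketch of a single Unpaywall lookup. It uses the public v2 REST endpoint (`https://api.unpaywall.org/v2/{doi}?email=...`) and the `is_oa` and `best_oa_location` fields of the returned record; the DOI, email, and sample records are placeholders, and how a given link resolver wires this in is not shown and will differ by platform.

```python
import json
from urllib.parse import quote, urlencode
from urllib.request import urlopen

API_BASE = "https://api.unpaywall.org/v2/"


def unpaywall_url(doi, email):
    """Build a lookup URL; Unpaywall asks callers to identify themselves via an email parameter."""
    return API_BASE + quote(doi, safe="/") + "?" + urlencode({"email": email})


def best_oa_link(record):
    """Return the best free-to-read URL from an Unpaywall DOI record, or None if not OA."""
    if not record.get("is_oa"):
        return None
    location = record.get("best_oa_location") or {}
    return location.get("url")


def lookup(doi, email):
    """Fetch a record over the network (not called in the offline example below)."""
    with urlopen(unpaywall_url(doi, email), timeout=10) as resp:
        return json.load(resp)


# Offline examples with placeholder records shaped like Unpaywall responses.
open_record = {"doi": "10.1234/example.5678", "is_oa": True,
               "best_oa_location": {"url": "https://repository.example.edu/paper.pdf"}}
closed_record = {"doi": "10.1234/example.9999", "is_oa": False, "best_oa_location": None}
print(unpaywall_url("10.1234/example.5678", "you@example.org"))
print(best_oa_link(open_record), best_oa_link(closed_record))
```

Unpaywall’s `url` field points to a PDF when one is known and otherwise to a landing page, so a resolver can offer it as a free full-text option alongside subscribed copies.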

If small specialist journals that have high real-world impact but relatively low use (and so a high CPU) are lost, how will people in those fields communicate knowledge? Will we see, e.g., hand surgery articles in PLOS One, or somewhere similar, which will then curate their content and have channels for each specialty? Overlay journals? Back-to-the-future portals, maybe?

Fortunately, libraries look at more than CPU. Nonetheless, if certain journals cease, I have complete faith that the scholars in those fields will find a way to communicate knowledge. First will be … by creating new journals!

Small specialist journals can probably find a better home with a publisher which can treat them for what they are, rather than a mere number to inflate downloads from customers who don’t even know they’re buying those titles.

DOAJ can help find such a publisher to flip a journal to; it currently has 6 matches for “hand surgery”, including a CC-BY journal with no APC that seems to need more submissions (I didn’t check the content, though). Or, yes, just use an open archive with open peer review and be happy, if your incentives aren’t distorted by factors external to science.

https://doaj.org/search?ref=homepage-box&source=%7B%22query%22%3A%7B%22filtered%22%3A%7B%22filter%22%3A%7B%22bool%22%3A%7B%22must%22%3A%5B%7B%22term%22%3A%7B%22_type%22%3A%22journal%22%7D%7D%2C%7B%22term%22%3A%7B%22index.license.exact%22%3A%22CC%20BY%22%7D%7D%5D%7D%7D%2C%22query%22%3A%7B%22query_string%22%3A%7B%22query%22%3A%22%5C%22hand%20surgery%5C%22%22%2C%22default_operator%22%3A%22AND%22%7D%7D%7D%7D%7D

Hi Heather – thanks for the update on Unsub. Yesterday you may have seen that new Plan S price transparency requirements were published by cOAlition S (https://www.coalition-s.org/coalition-s-announces-price-transparency-requirements/). You and Jason are clearly busy and so may not yet have had time to digest these, but when you do, it would be interesting to learn your thoughts on whether and how these new data might be useful in the Unsub service. Thanks kindly and with best wishes,

Alicia

We have just begun to look at Unsub, and I do not understand how they reach their conclusions. We give them our JR1 reports, and somehow they come up with how much usage would be covered by open access and how much by backfiles. This is without JR1 GOA or JR5 or the C5 equivalents.

I don’t think it is realistic for anyone to ask libraries *not* to use the tools available to them to further the interests of their organization. It’s reasonable to debate the quality of those tools, and it’s reasonable to propose the use of other tools, but it is unreasonable to insist that libraries view the world as publishers do. At the same time, publishers who seek to view the world as librarians do are in for trouble. Publishers should be able to understand those perspectives in building their own outlook, but there is no requirement that they adopt them. In fact, what I am describing here is precisely how the world works: why would anyone try to deny this? Why would anyone want to pretend that we are being good guys when what we mean, whether we are publishers or librarians, is that we are responsible for some things and not others?