Editor’s Note: Today’s post is by Michele Avissar-Whiting. Michele is the Editor in Chief at Research Square, a multidisciplinary preprint platform that launched at the end of 2018.

At the end of 2021, I enjoyed the rare perk of a sabbatical offered by Research Square to its decade-long tenured employees. I took the opportunity to focus for a month on a single question that I care about: “What happens to a preprint when its published version gets retracted?”

Of course, given my role, I already had some sense of the answer, and it wasn’t good news. In many cases, I knew that the answer would be that sometimes nothing at all happens to the preprint when its associated paper is retracted, and I saw this as a chance to explore the scale of the issue more deeply, while also calling attention to this topic as a relatively unexplored area in the science of scientific publishing.

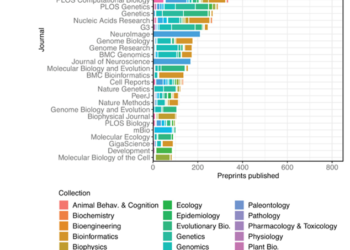

At the tail end of a period where so much critical research was shared so quickly, checking in with our own vigilance in closing the loop on faulty research just seemed like the responsible thing to do. Preprints in biology and medicine have experienced a gradual awakening in the last five years — and a real boom since the start of the pandemic. As of today, EuropePMC clocks the number of preprints posted since 2018 at nearly 430,000, with roughly 75% of those being published since the start of 2020. Given the volume and speed of this growth, there is bound to be a proportion that is later found to be fraudulent, ethically compromised, or just wrong. Like preprinting, the practice of retraction is much more common now than it was a decade ago. Rising concern about unethical or incorrect publications languishing in the literature (probably facilitated by public discourse around problematic research on the internet) has placed pressure on publishers to act on these cases and correct the record.

To get at the question of how often preprinted research is later retracted by a journal, I focused on the three servers that house the lion’s share of life science preprints: Research Square, bioRxiv, and medRxiv. Importantly, these servers are among the few with automated mechanisms in place to link preprints to their downstream journal publications. I compared the list of preprints associated with published journal articles against the entire Retraction Watch database, a clearinghouse of nearly 32,000 retracted journal articles. Knowing that retractions are still quite rare and that these preprint platforms haven’t been around all that long, I didn’t expect a long list. And I didn’t get one: only 30 preprints were associated with downstream retractions. This represents 0.03% of all preprints with linked journal articles on these servers. This proportion is not unlike that of total retractions in the literature, cited by one source as 4 out of 10,000 (0.04%). My own “back of napkin” calculation using Retraction Watch records puts the figure at just over 0.06%.

Once I’d identified the retracted papers, I explored the reasons for the retractions. Twenty of the 30 cases (or 67%) could be broadly categorized as misconduct (plagiarism, falsification, and fabrication of data, or mistreatment of research subjects). And in 18 of the 30, it was clear that the conclusions of the study could no longer be trusted. Next, I took a look at whether and how we (Research Square) and the other servers mark the preprints in light of that important downstream event. In only 11 cases was the retraction noted on the preprint. And in five of those instances, the preprint itself had been marked as withdrawn.

The lack of acknowledgment of a downstream retraction is concerning, because readers can easily land on these preprints as part of a routine literature search and have no sense that something went wrong somewhere down the line. They may even unknowingly cite the preprint in their own papers. There is already a serious problem with the persistent citation of retracted research, and it would be unfortunate if preprints further exacerbated this phenomenon. Therefore, to the extent that a preprint server like ours is able to automatically link preprints to journal articles, we should also have mechanisms in place to discover retractions and update our preprints appropriately. Ideally, this process should be automated, since retractions are indexed by Crossref and the preprint-to-version-of-record connection lives in the Crossref metadata of the preprint.

It is worth noting that none of the 30 retracted journal articles I analyzed indicated the existence of an associated preprint. This is not surprising considering only 6% of journals claim to have such a mechanism; and follow-through, at least based on my anecdotal assessment, seems to be rarer than that. In a world where posting a preprint has become much more commonplace – upward of 80% of journals support the practice – I would have expected to see better acknowledgment of preprints associated with journal articles. Among other benefits, this would certainly facilitate the transmission of information (like the occurrence of a retraction or editorial expression of concern) backward to the preprint. Another interesting finding was that all 30 of the papers had been published in open access journals and the time from publication to retraction was considerably lower among this set relative to the average of 33 months previously reported.

To control for the possibility that this observation was due to a general speeding up of the retraction process in recent years, I looked at the average time to retraction since 2014, when the first bioRxiv preprints were posted. The average for this set, 22 months, was still much higher than the average of those found in my analysis. Future studies might ask whether problematic articles that were shared as preprints are retracted more quickly due, perhaps, to a longer period of exposure to scrutiny from the scientific community. My worries about missing context in the scientific record extend well beyond the failure to update known retractions of journal articles linked to preprints, which was the focus of this particular analysis.

The fact is, there are several related known unknowns. First, given that the mechanisms for matching up preprints to articles are imperfect, we don’t know how often we (and other servers with such mechanisms) fail to establish these links and thus lose the potential to capture an important downstream event. Plus, given that there are now nearly 70 preprint servers, and many of them do not have automated linking systems, we don’t know how many preprints never get linked to their related publications. We also know that journal articles are sometimes marked with editorial expressions of concern, designed to give readers pause before using or citing those papers as they undergo (often protracted) investigations. Like retractions, these notes are becoming increasingly common, and currently, we have no sense of how many preprints are associated with expressions of concern.

The conclusion of my analysis is, essentially, that we all need to do better. In the global supply chain of scholarly communications, we have a shared responsibility to maintain and distribute accurate metadata that represents the publication lifecycle. Preprint servers should do their best to connect their preprints with related versions. Authors who take advantage of preprints should follow up to ensure linking occurs once the paper is published. Journals need to start consistently acknowledging the existence of previous versions of the work they publish; and aggregators and indexers of research outputs should focus on improving the mechanisms by which these relationships are established.

Needless to say, I strongly support the practice of preprinting and believe its growing adoption is a ‘net good’ for science and society. But there is no question that the proliferation of preprints within servers with disparate budgets, policies, and features, as well as the lack of consistency among journals in the treatment of preprints, are contributing to disorganization in the scholarly record. This disorganization prevents readers from seeing the full picture of a paper’s progression — from its origin to its publication and potential retraction. Everyone would benefit from improving the systems that establish provenance and progression in the scholarly record.

For more details about this analysis, view the full article in PLOS One.

Discussion

7 Thoughts on "Guest Post — Building Stronger Chains Together: Keeping Preprints Connected to the Scholarly Record"

What a fascinating and rewarding way to spend your Sabbatical, Michele! Thanks for these interesting findings. I was particularly interested in your findings on the speed of those retractions and the potential connection to those journals being OA. I wonder whether any publishers have examined that relationship and the potential factors. There’s potential there for a further example of benefits to authors via the OA publication route.

Thank you, Mithu! Indeed, this was one of the most interesting findings. Presumably, you’d expect at least 50% of the articles to be in subscription journals. But it may just be that authors who post preprints are much more likely to publish OA (both because of the compatible philosophies and also because OA journals are more likely to be preprint friendly). There was a paper a few years back following up on the destination of bioRxiv preprints but I can’t remember if OA/subs was a specific focus and I’m not finding it just now.

Project numbers should be assigned by the institution to track each author’s research work (project), and so that all outputs can be associated with that project.

Can Google Scholar help flag such papers? Just an icon next to such articles/pre-prints displaying a warning to the reader, perhaps?

By the way, SK should have a Guest Post from someone at Google Scholar.

+1 for an article from someone at Google Scholar.

What of other research artifacts in the progression? Posters, conference presentations? Visiting lectures? Especially as these become more regularly part of the record?

Right. Ideally, any medium with a doi that is related to/derived from the article would be linked to it. It’s theoretically possible; it just needs attention and intention, since automating that would be even trickier than automating preprint-journal article links. But imagine would an enriched experience this would be for the consumer…