With Peer Review Week fast approaching, I’m hoping the event will spur conversations that don’t just scratch the surface, but rather dig deeply into understanding the role of peer review in today’s digital age and address the core issues peer review is facing. To me, one of the main issues is that AI-generated content has been discovered in prominent journals. A key question is, should all of the blame for these transgressions fall on the peer review process?

To answer this question, I would like to ask a few more in response. Are you aware of the sheer volume of papers published each year? Do you know the number of peer reviewers available in comparison? Do you understand the actual role of peer review? And are you aware that this is an altruistic effort?

The peer review system is overworked! Millions of papers are submitted each year, and the number of available peer reviewers is not growing at the same pace. One reason is that peer reviewing is a voluntary, time-consuming task which reviewers take on in addition to their other full-time academic and teaching responsibilities. Another reason is the lack of diversity in the reviewer pool. The system is truly stretched to its limits.

Of course, this doesn’t mean that reviews are sub-par. But the fundamental question remains: “Should the role of peer review be to catch AI-generated text?” Peer reviewers are expected to contribute to the science: to identify gaps in the research itself, to spot structural and logical flaws, and to leverage their expertise to make the science stronger. A peer reviewer’s focus will be diverted if, instead of concentrating on the science, they are asked to hunt down AI-generated content; in my opinion, this dilutes their expertise and shifts a burden onto them that was never intended.

Ironically, AI should be seen as a tool to ease the workload of peer reviewers, NOT to add to it. How can we ensure that, across the board, AI is being used to complement human expertise, making tasks easier rather than adding to the burden? And how can the conversation shift from a blame game to carefully evaluating how we can use AI to address the gaps that it is itself creating? In short, how can the problem become the solution?

The aim of AI is to ensure that there is more time for innovation by freeing our time from routine tasks. It is a disservice if we end up having to spend more time on routine checks just to identify the misuse of AI! It entirely defeats the purpose of AI tools and if that’s how we are setting up processes, then we are setting ourselves up for failure.

Workflows are ideally set up in as streamlined a manner as possible. If something is creating stress and friction, it is probably the process that is at fault and needs to be changed.

Can journals invest in more sophisticated AI tools to flag potential issues before papers even reach peer reviewers? This would allow reviewers to concentrate on the content alone. There are tools available to identify AI-generated content. They may not be perfect (or even all that effective as of yet), but they exist and continue to improve. How can these tools be integrated into the journal evaluation process?

Is this an editorial function or something peer reviewers should be concerned with? Should journals provide targeted training on how to use AI tools effectively, so they complement human judgment rather than replace it? Can we create standardized industry-wide peer reviewer training programs or guidelines that clarify what falls under the scope of what a peer reviewer is supposed to evaluate and what doesn’t?

Perhaps developing a more collaborative approach to peer review, where multiple reviewers can discuss and share insights, will help in spotting issues that one individual could miss.

Shifting the Conversation

Instead of casting blame, we must ask ourselves the critical questions: Are we equipping our peer reviewers with the right tools to succeed in an increasingly complex landscape? How can AI be harnessed not as a burden, but as a true ally in maintaining the integrity of research? Are we prepared to re-think the roles within peer review? Can we stop viewing AI as a threat or as a problem and instead embrace it as a partner—one that enhances human judgment rather than complicates it?

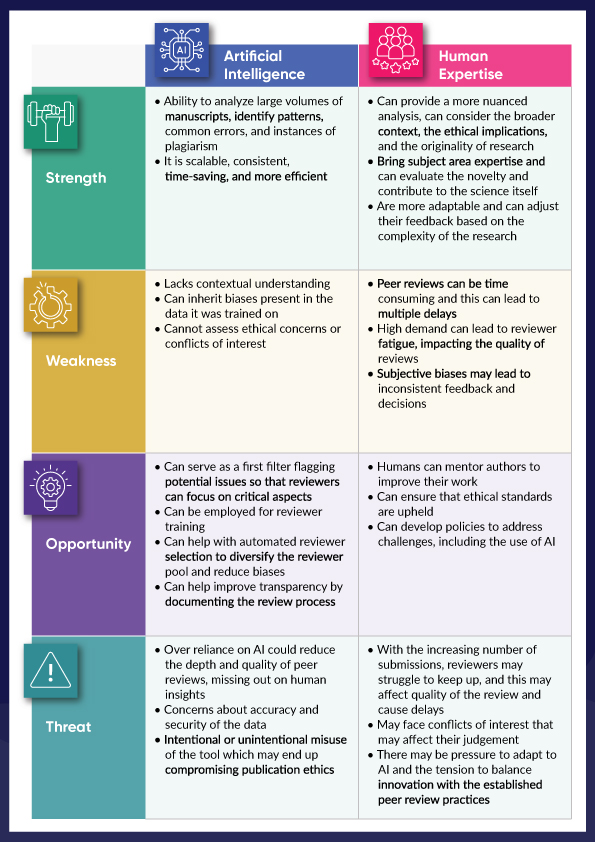

Let’s take a step back and look at this SWOT analysis for AI versus human expertise in peer review.

The challenge presented before us is much more than just catching AI-generated content; it’s about reimagining the future of peer review.

It’s time for the conversation to shift. It’s time for the blame game to stop. It’s time to recognize the strengths, the weaknesses, the opportunities and the threats of both humans and AI.

Discussion

7 Thoughts on "Strengths, Weaknesses, Opportunities, and Threats: A Comprehensive SWOT Analysis of AI and Human Expertise in Peer Review"

AI-generated *text* isn’t necessarily a problem. I would focus on AI-altered data and images. Does a reviewer’s skill in detecting misuse of AI correlate with the quality of their scientific review? Because I don’t assume that an author’s writing skills correlate with scientific merit. Translation, editing, and writing assistance are legitimate uses of GenAI. I think we want reviewers to focus on the authenticity and legitimacy of ideas more than language.

Some publishers have invested in AI tools to flag AI-written content in papers. I think these tools are deployed before papers are sent for peer review. One such example is Springer Nature. More at https://group.springernature.com/gp/group/media/press-releases/new-research-integrity-tools-using-ai/27200740

The Springer Nature press release you linked to says that their AI-detection tool called Geppetto works by checking for consistency across different sections of a manuscript. If it detects a certain level of inconsistency, then the text is flagged as potentially AI-generated content, “a classic indication of paper mill activity.” This is tricky because it’s not uncommon for coauthors to write different sections of a manuscript. The writing style can be noticeably different across sections because different humans with different writing skills wrote them. As a manuscript editor, I’ve detected that plenty of times. So it would be interesting to hear more about how this works.

In my experience, AI is totally incapable of producing sensible comments on scientific questions. As you’d expect of an auto-complete machine, it merely reproduces common misapprehensions. The fact that it does so in good English gives its verdicts a plausibility that they don’t deserve. Even its use to improve English is suspect, unless the person using it has good enough English to check the accuracy of the results.

AI will indeed prove to be very useful, for criminals.

The ethical use of AI is beneficial for expediting everyday tasks such as transcription, paraphrasing, proofreading, and structuring research papers. AI can assist in analyzing data and interpreting the results, provided that it is given enough details. However, it is crucial to be able to identify when the AI is incorrect.

People are unable to discern whether the output provided by AI is accurate or not. Therefore, it is essential for us to learn how to ethically utilize AI. Unfortunately, AI is sometimes misused as a shortcut by lazy writers.

These are some of the reasons why reviewers must verify whether the work has been written with the assistance of AI. I would advise academic writers to rework the AI-generated output in their own words to avoid any misinterpretations and false claims.

Furthermore, it is important to acknowledge the use of AI in your work for transparency with reviewers. Failure to do so might create the impression that AI knowledge has been utilized without giving credit.

Overall, AI should be viewed as a tool for positive change in writing. However, users of AI must adhere to ethical practices when using it.

Thank you! 😊

Roohi, can you please explain how that SWOT analysis was conducted, by you, or by AI? How was the figure generated?

“time-consuming task which reviewers take on in addition to their other full-time academic and teaching responsibilities”

– or, in many cases, they are otherwise overworked and exploited adjuncts or independent scholars who don’t have full-time jobs and are expected to do the thing for free

(In case it comes up – depressingly – no, the only folk qualified to peer review are NOT tenured academics, and journal editors certainly don’t hesitate to flood adjuncts’ university inboxes with such requests.)

This is relevant to the AI question because, amongst academics jaded by the exploitative pressures of peer review, I would imagine some don’t feel any kind of moral responsibility for the review. They’ll check it’s as right as can be, but I doubt they’ll feel they should do even more than what’s expected.

IMO, the responsibility for dealing with this AI problem must sit with those paid to do their part of the process.