Each spring, editors and publishers eagerly await the release of the latest edition of ISI’s Journal Citation Reports, which details how often their journals have been cited. At its heart is a single measure, the impact factor, which for years has been the yardstick by which journals are ranked against their competitors.

Traditional citation measures give every citation the same weight: a citation from a journal like Science counts as much as a citation from the Journal of Underwater Basket Weaving. In reality, some citations are clearly more valuable than others.

Eigenfactor.org, a free tool from Carl Bergstrom’s lab at the University of Washington, attempts to do for the impact factor what Google did for web search: weight citations according to their importance. They call this measure the Eigenfactor.

The concept is not new. In 1976, Pinski and Narin developed an iterative algorithm for calculating influence weights for citing journals based on the number of times those journals have themselves been cited. Brin and Page later applied the same notion of weighting to hyperlinks in PageRank, their algorithm for calculating the importance of web pages.
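The iterative idea shared by Pinski and Narin’s influence weights and PageRank can be sketched in a few lines: each journal’s weight is the sum of the weights of the journals citing it, divided according to how each citing journal spreads its citations, recomputed until the weights stop changing. This is a minimal, simplified PageRank-style power iteration, not the exact Eigenfactor computation, which adds refinements of its own. The function name `influence_weights`, the damping factor of 0.85, and the three-journal citation matrix are illustrative assumptions, not data from the study.

```python
def influence_weights(citations, damping=0.85, tol=1e-10, max_iter=1000):
    """Simplified PageRank-style weighting of journals (illustrative sketch).

    citations[i][j] is the number of times journal i cites journal j.
    Returns one weight per journal; the weights sum to 1.
    """
    n = len(citations)
    out_totals = [sum(row) for row in citations]  # citations each journal gives out
    weights = [1.0 / n] * n  # start with every journal equally important

    for _ in range(max_iter):
        # Baseline share every journal receives regardless of citations.
        new = [(1.0 - damping) / n] * n
        for i in range(n):
            if out_totals[i] == 0:
                # A journal that cites nothing spreads its weight evenly.
                for j in range(n):
                    new[j] += damping * weights[i] / n
            else:
                # A citation passes on a share of the *citing* journal's weight,
                # so citations from highly weighted journals count for more.
                for j in range(n):
                    new[j] += damping * weights[i] * citations[i][j] / out_totals[i]
        change = sum(abs(a - b) for a, b in zip(new, weights))
        weights = new
        if change < tol:
            break
    return weights


# Hypothetical citation counts among three journals A, B and C.
citations = [
    [0, 5, 1],   # A cites B five times and C once
    [10, 0, 1],  # B cites A ten times and C once
    [10, 1, 0],  # C cites A ten times and B once
]
weights = influence_weights(citations)
```

In this toy example journal A, which collects most of the citations, ends up with the largest weight, and B, which is cited heavily by A, outranks C.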

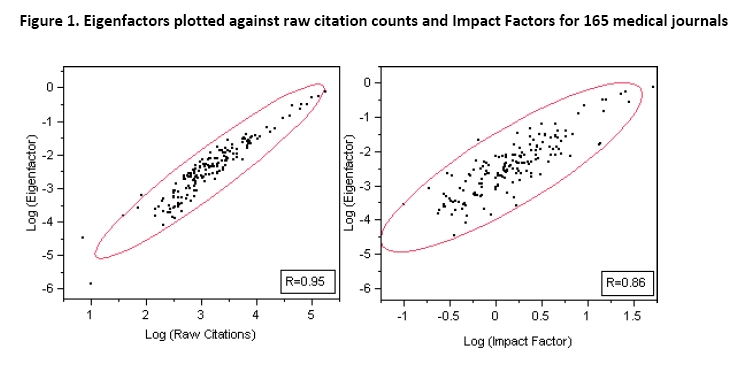

This leads us to the central question: Does the Eigenfactor provide a different view of the publishing landscape than raw citations or the impact factor?

Being a graduate student, I decided to find out. The full paper is available on arXiv; for now, I’ll just give you the skinny version.

Eigenfactors and total citations to journals correlate very strongly (R=0.95), meaning that journals that receive many citations also tend to receive high Eigenfactors. The relationship is weaker for the impact factor (R=0.86), but it is still strong. Correlating the journals’ rankings rather than their raw scores gives similar results.
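For readers who want to run this kind of comparison on their own journal lists, here is a minimal sketch. It assumes that R is Pearson’s correlation coefficient and that “correlating the rankings” means a rank correlation such as Spearman’s (Pearson’s R computed on ranks, with tied values given their average rank); the function names and the four-journal numbers are hypothetical and are not taken from the study.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sd_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    sd_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (sd_x * sd_y)


def ranks(values):
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        average_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = average_rank
        i = j + 1
    return result


def spearman(x, y):
    """Spearman rank correlation: Pearson's R computed on the ranks."""
    return pearson(ranks(x), ranks(y))


# Hypothetical scores for four journals (not data from the study).
total_cites = [120, 45, 300, 80]
eigenfactors = [0.012, 0.004, 0.035, 0.006]
r_values = pearson(total_cites, eigenfactors)
r_ranks = spearman(total_cites, eigenfactors)
```

Pearson’s R rewards a straight-line relationship between the raw scores, while the rank version asks only whether the two measures put journals in the same order, which is closer to how rankings are actually used.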

So what does this mean?

At least for medical journals, it does not appear that iterative weighting of journals based on citation counts results in rankings that are significantly different from raw citation counts. To put this in terms of human citation behavior, those journals that are cited very heavily are also the journals that are considered very important by authors. Or stated another way, the concepts of popularity (as measured by total citation counts) and prestige (as measured by a weighting mechanism) appear to provide very similar information.

That said, Eigenfactor is a free site, whereas subscribers have to pay big bucks for ISI’s Journal Citation Reports, and the developers of Eigenfactor have put a lot of work into merging citation data with subscription prices and developing tools for visualizing the data. I don’t want to seem to be looking a gift horse in the mouth. On the other hand, we need to question whether new tools for evaluating the importance of journals, articles and authors are providing us with any more information than we already have. Lastly, we need to be careful about the validity of viewing citations only as a means of conveying merit.

References:

Pinski, G., & Narin, F. (1976). Citation influence for journal aggregates of scientific publications: Theory, with application to the literature of physics. Information Processing and Management, 12(5), 297-312.

Brin, S., & Page, L. (1998, April). The anatomy of a large-scale hypertextual web search engine. Paper presented at the Seventh International World Wide Web Conference, Brisbane, Australia. Retrieved from http://dbpubs.stanford.edu/pub/1998-8

Davis, P. M. (2008). Eigenfactor: Does the principle of repeated improvement result in better journal impact estimates than raw citation counts? Journal of the American Society for Information Science and Technology, 59(12), 2186-2188. http://arXiv.org/abs/0807.2678

Discussion


My comments just got wiped out. That makes me mad. Your wee ditty above also makes me mad. Please read the literature on IFs from Garfield and all the rest before pontificating; read the literature to see how to analyse data and describe analyses (try Stringer et al. in PLoS ONE for a start); do a brief web search and find out there are plenty more than just 2 tools out there. Cripes.

Phil is right that the main point here is that free alternatives are emerging. As a recent paper stated (http://www.mathunion.org/fileadmin/IMU/Report/CitationStatistics.pdf), getting the numbers to match isn’t surprising at all: “This correlation is unremarkable, since all these variables are functions of the same basic phenomenon — publications.” If we’re all describing the same elephant, the real issue is which tool does it faster, more reliably, and less expensively. These new online tools seem to offer some distinct advantages.

Your query about whether we need new tools for evaluating journals touches on the key issue here: not a given journal metric but journal metrics themselves. The Impact Factor is flawed, and we are right to decry its tyranny. New metrics will just produce other tyrants, however, with their own flaws (I can think of plenty in the case of a metric that counts citations in one periodical as being more important than citations in another). The real problem is our over-reliance on journal impact factors when assessing individuals and their papers. Those ranking candidates for tenure, etc. would do better to examine the merits of the papers themselves rather than cut corners by guesstimating this on the basis of a generalized journal metric – and then quibbling about which one is best.
