Developing countries with free access to scientific information experienced a six-fold increase in article output since 2002, a study by Research4Life reports.

Research4Life is a public-private partnership between publishers, the WHO, FAO, UNEP, Cornell and Yale Universities, and the International Association of Scientific, Technical, and Medical Publishers. Research4Life is the collective name of three separate programs that focus on providing free access to literature in the health sciences (HINARI), agriculture (AGORA), and environmental research (OARE).

The analysis, conducted by Andrew Plume at Elsevier, compared article output between 1996 and 2008 for countries participating in the program with the rest of the world. Article counts per country were taken from ISI’s Web of Science.

According to the report, article output grew by 194% for countries with a gross national income (GNI) of US$1,251-3,500, compared with only 67% for countries not eligible for the Research4Life program.

The language of the report implies a causal relationship between access to the literature (as an input) and article publication (as an output). If you get through the promotional language, you will find the analyst, Andrew Plume, to be much more hesitant:

The massive and sustained growth in scholarly output from the Research4Life countries, over and above the growth for the rest of the world, is probably the result of many related factors such as scientific policy, government and private research funding, and other global developments. However, such a dramatic increase in research output also reflects a clear correlation with the launch of the Research4Life programmes. These statistics point to Research4Life’s profound impact on institutions and individual researchers’ ability to publish.

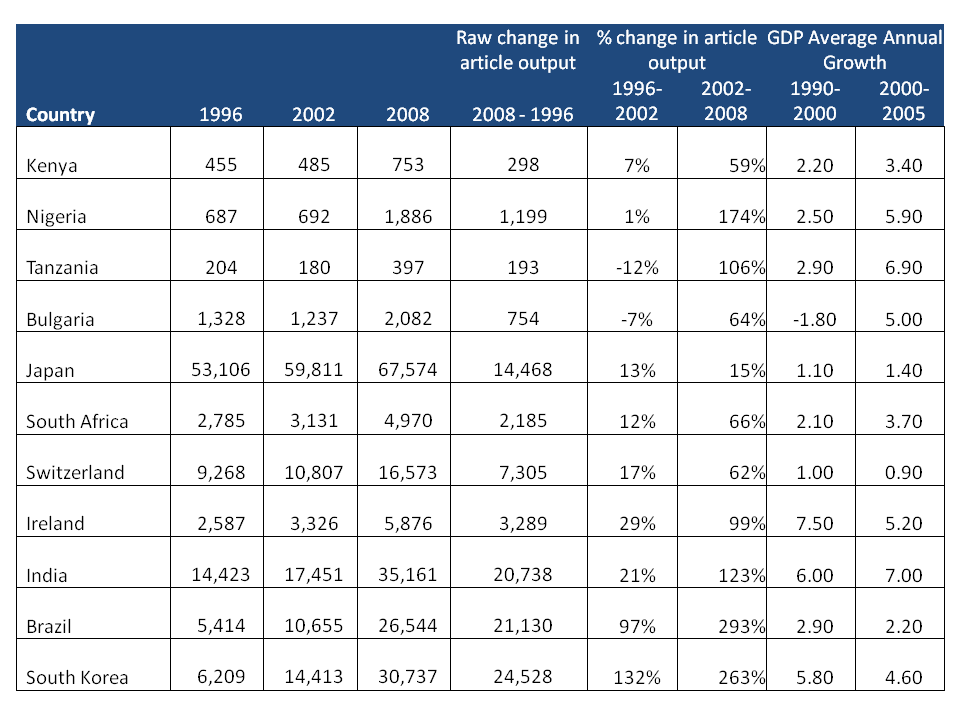

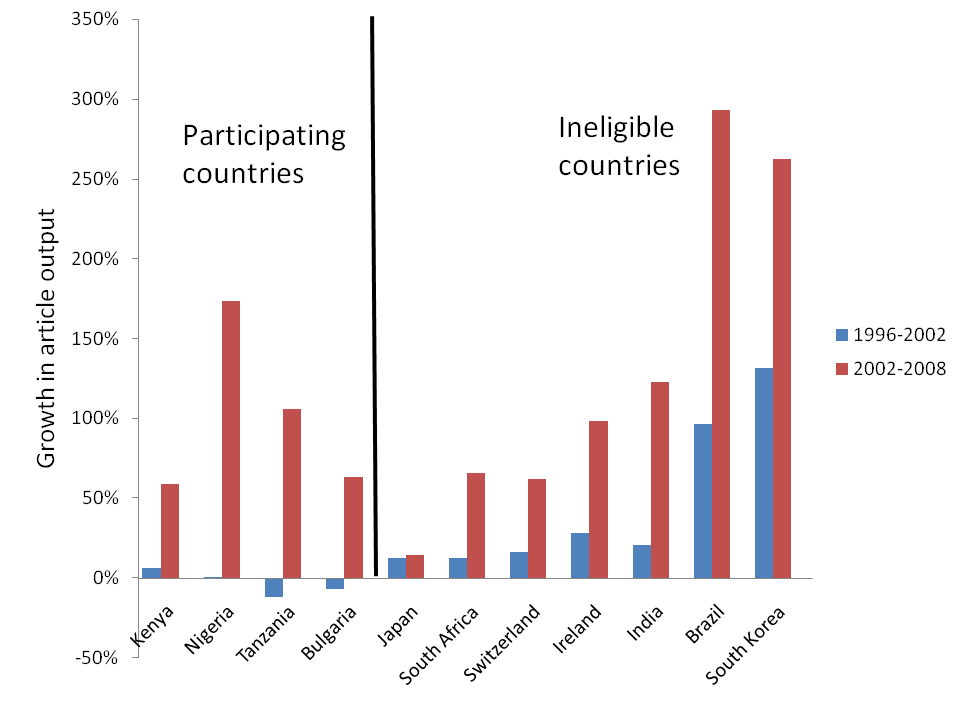

Plume plots the output of four participating countries (Kenya, Nigeria, Tanzania, and Bulgaria) against Japan. While we are privy to the percent change, we do not see the raw data behind the graphs. If you go to the source of the data (ISI’s Web of Science and the World Bank, as I report below), you find that Tanzania published only 180 ISI-indexed articles in 2002, rising to 397 in 2008: an increase of 217 articles (or about 121%). In comparison, Japan’s output increased by nearly 8,000 articles, yet this represents growth of only 15%.

Surprising? It shouldn’t be. Developed countries cannot sustain triple-digit growth like developing countries. A focus on percent change, rather than absolute growth, obscures what is really going on in the data.
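To make the contrast concrete, here is a minimal sketch of the arithmetic using the Tanzania counts quoted above (180 and 397 articles, which works out to roughly 121%). Japan’s baseline is not given in this post, so it is back-computed from the stated increase (nearly 8,000 articles) and growth rate (15%). Treat that baseline, and the helper name `growth`, as assumptions made purely for illustration.

```python
def growth(before: float, after: float) -> tuple[float, float]:
    """Return (absolute change, percent change) between two article counts."""
    absolute = after - before
    percent = 100.0 * absolute / before
    return absolute, percent


# Tanzania: ISI-indexed article counts for 2002 and 2008, as quoted in the post.
tz_abs, tz_pct = growth(180, 397)

# Japan: the baseline is NOT in the post. It is back-computed here from the
# stated "nearly 8,000 articles" and "15%", so it is an assumption.
japan_increase = 8000
japan_pct_stated = 15.0
japan_before = japan_increase / (japan_pct_stated / 100.0)
jp_abs, jp_pct = growth(japan_before, japan_before + japan_increase)

print(f"Tanzania: +{tz_abs:.0f} articles ({tz_pct:.0f}%)")
print(f"Japan:    +{jp_abs:.0f} articles ({jp_pct:.0f}%)")
print(f"Japan added roughly {jp_abs / tz_abs:.0f} times as many articles as Tanzania")
```

The same two numbers tell opposite stories depending on which one is reported: the percent change favors the country with the small baseline, while the absolute change favors the country with the large one.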

If you compare article output from other growing economies not eligible for the free literature programs (as I plot below), you will find examples of massive increases in their article output as well: India (123%), Brazil (293%), and South Korea (263%). All three of these countries have also seen strong economic growth and significant investment in research and development.

What’s more, scientists in these growing economies face increasing pressure to submit their work to ISI-indexed journals with impact factors, so their articles are now being counted where in previous years they were ignored.

In sum, the present analysis simply cannot adequately evaluate the effect of these free literature programs on research output. A more rigorous analysis would have:

- Counted the frequency of citations to the list of journals included in the Research4Life programs instead of tracking total article output.

- Paired Research4Life eligible countries with similar (but not eligible) countries to control for bias.

- Controlled statistically for other variables that are strongly predictive of article output (like R&D funding and number of professional scientists).

- Focused on usage rather than article output.

There is no doubt that providing free access to the scientific literature to those in developing countries is a good thing. These programs, and those who made them happen, should be extolled for delivering scientific knowledge to those institutions that could previously afford only a tiny portion of the world’s published literature. But making causal claims on such rudimentary analysis should have been more tentative.

The fundamental problem with this report is that there is no report. It’s a four-page press release dressed up with elements of a proper analytical study. In that sense, Research4Life got things backwards, issuing the press release and calling the journalists before a report was ever written.

Addendum (16 October, 2009)

A recent study, as reported in The Scientist, indicates that developing countries have greatly increased their spending on science:

Developing countries more than doubled their annual spending on research and development between 2002 and 2007, from $135 billion to $274 billion. That spending accounted for 24% of the world’s total R&D budget in 2007, an increase of 7% from 2002. They also increased their global share of researchers, from 30.3% (1.8 million) to 38.4% (2.7 million).

This may explain why article output increased as well.

Discussion

8 Thoughts on "Research4Life’s Dubious Claims and Missing Report"

Thank you for the opportunity to explain further the research I did on the impact of Research4Life (R4L) in eligible countries. Let me say first that we are in agreement that the three constituent R4L programs do a lot of good, and that they should be applauded for continuing to provide low-cost or no-cost access to the scholarly literature in the health, agricultural and environmental sciences to inform both research and public policy in the world’s least developed economies. This research was done on a pro bono basis using existing resources, and was never aimed at proving a causal relationship between the provision of R4L in eligible countries and the growth in research outputs. Indeed, it may still be too early to see the real extent of the effect, since the oldest of the programs, HINARI, was launched in 2002, but AGORA and OARE date only from 2003 and 2006 respectively.

It is interesting that you choose to say that reporting percentage changes “obscures what is really going on in the data” and then go on to selectively highlight the article counts from WoS (which is actually not the source of my dataset, but an aggregated extraction thereof produced by Thomson’s Research Services Group) of the country with the lowest absolute counts. I am not at liberty to publish my raw data owing to the terms of my license agreement (and thus no ‘report’ could be produced, as you lament), but my counts are in line with your own analysis. I would be interested to know if you are also able to recreate the second chart from the press release showing a clear upswing in article output coinciding with the launch and uptake of HINARI around 2002 for these selected R4L-eligible countries, but with no comparable effect in the non-R4L country Japan. I accept your arguments about sample sizes and the confounders that we acknowledged, but how do we attempt to explain their simultaneous upswing after 2002 that is not also seen in non-R4L countries? In my data I see no such trend for India, Brazil or South Korea, whose outputs grow constantly across the period 1996-2008 in a way similar to that of Japan. The change from around 2002 onward also fits well with the qualitative data available from testimonials of R4L beneficiaries, including that from the Nigerian librarian quoted in the press release.

I am delighted with your suggestions for improvements to the methodology for future studies. The point on studying cited and citing behaviour is already in hand, with a study currently in preparation by Thomson. I am unclear on how the pairing you suggest would control for bias, since the socio-political, economic, education and innovation systems of each country will differ markedly – how would ‘similarity’ be measured?

Dr Andrew Plume, Associate Director – Scientometrics & Market Analysis, Elsevier

Thank you for your detailed response. An inability to share your data, even for verification purposes, makes this a non-starter. A scientific study which cannot be validated is highly problematic.

Making general categorical comparisons between countries participating in R4L and those that do not is difficult because the two groups are not equal. Pairing is a common method in the social sciences and economics for getting around this problem: if the eligibility guideline (based on the country’s GNI) is arbitrary, then selecting one country just below the cut-off and one just above the cut-off may work to reduce (but not completely eliminate) systematic bias in the analysis.
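A minimal sketch of that pairing idea follows. Every record in it is hypothetical and invented for illustration (the country labels, GNI values, and growth figures are not real data), the bandwidth is an arbitrary choice, and the US$3,500 cut-off is simply the upper bound of the GNI band mentioned in the post, treated here as the eligibility threshold.

```python
from statistics import mean

GNI_CUTOFF = 3500  # US$; upper bound of the band quoted in the post (assumed threshold)
BANDWIDTH = 500    # hypothetical: how far either side of the cut-off counts as "similar"

# HYPOTHETICAL records: (label, GNI in US$, percent growth in article output)
countries = [
    ("A", 1400, 210.0),
    ("B", 3100, 95.0),
    ("C", 3400, 88.0),
    ("D", 3600, 80.0),
    ("E", 3900, 84.0),
    ("F", 21000, 15.0),
]


def near_cutoff(records, cutoff, bandwidth):
    """Split records within `bandwidth` of the cut-off into (just below, just above)."""
    below = [r for r in records if cutoff - bandwidth <= r[1] <= cutoff]
    above = [r for r in records if cutoff < r[1] <= cutoff + bandwidth]
    return below, above


# Comparison restricted to similar countries on either side of the threshold.
below, above = near_cutoff(countries, GNI_CUTOFF, BANDWIDTH)
gap_near_cutoff = mean(r[2] for r in below) - mean(r[2] for r in above)

# Comparison across all eligible vs. all ineligible countries, for contrast.
eligible_all = [r[2] for r in countries if r[1] <= GNI_CUTOFF]
ineligible_all = [r[2] for r in countries if r[1] > GNI_CUTOFF]
gap_all = mean(eligible_all) - mean(ineligible_all)

print(f"Gap between groups, all countries:   {gap_all:.1f} points")
print(f"Gap between groups, near cut-off:    {gap_near_cutoff:.1f} points")
```

In this made-up data the gap between the groups shrinks sharply once the comparison is limited to countries near the threshold, which is the kind of check that would show how much of a headline difference comes from the two groups being dissimilar to begin with.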

An analytical model in which other predictors of article production were included (number of scientists, R&D grants and funding, etc.) would enable you to estimate the effect of R4L independently of other potential causal variables.

Even if access to R4L programs does not appear to have an effect on research output, these programs have many other benefits for teaching, medical practice, agriculture, and government policy making, among others. It is not necessary to promote a causal claim between R4L and article production on such rudimentary analysis for everyone to understand the benefits of these free and subsidized literature programs.

Your reaction to this article is quite good, but let me remind you of something very important. For many years, we struggled to get access to those journals. As researchers from poor countries, nobody listened to us.

Now that those programmes are there, I can assure you that our problem of access to science is solved. There is no doubt about the benefit of R4L programmes.

For the first time we have a real programme that can help us. You register, you get a password, then you have access to more than 7000 scientific journals. Let me not talk about the cost of those journals and our financial capacity!!!

Education, scientific research, and transfer of knowledge and technology to developing countries are a very complicated equation. Your reaction shows clearly your ignorance of our reality as researchers from the developing world.

Instead of attacking Dr. Plume I advise you to go and look for something else that can help developing countries if you are really willing to help.

Good Luck for !!!.

Juma

Accra, Ghana

==================================

Response from Phil Davis:

Dear Juma,

You are misunderstanding my argument. I am not attacking Dr. Plume nor the merits of the R4L programs. I am merely pointing out that the argument that R4L is responsible for a large and “profound impact” on increasing article output from participating countries is based on very rudimentary and methodologically weak evidence. As I wrote:

There is no doubt that providing free access to the scientific literature to those in developing countries is a good thing. These programs, and those who made them happen, should be extolled for delivering scientific knowledge to those institutions that could previously afford only a tiny portion of the world’s published literature. But making causal claims on such rudimentary analysis should have been more tentative.

The great puzzle to me is why so many advocates of open content, free culture, open access, and, I suppose, open marriage continue to be so sloppy with the numbers. There are many important reasons for material to be open access, but there is no reason to exaggerate or falsify the data. When you have captured a bishop or a knight, there is no reason to claim it is the queen.

Let’s put science back into scientific publishing.

I think another factor that might be responsible for the growth in publication rate is the fact that the developed/developing world is increasingly paying more attention to the problems and issues in the undeveloped countries. In the 21st century, issues are being looked at from a global perspective: suddenly the world is more interested in the tiger population in Tanzania, traffic congestion in Nigeria, etc., and articles with such firsthand information and data are usually generated by researchers in those countries.

Management decisions are often based on figures that would not survive serious scientific scrutiny, simply because these are the best figures available. Development organizations are particularly keen on monitoring and evaluation, and especially on quantitative evidence. So if these figures serve their purpose, that is fine. A real analysis of the impact of Research4Life would better focus on other things, such as uptake of scientific publications that are meant to have an impact locally.
