- Image by dullhunk via Flickr

The study Phil Davis covered above — published in PLoSONE — reminded me of what I think is a sad story, one that hasn’t been told outside of private discussions, at least as far as I know. It’s the story of an opportunity sacrificed at the altar of open access, of a radicalism blunted into tradition, of audacity channeled down the path of least resistance.

It’s the story of the Public Library of Science.

The Public Library of Science had an opportunity a few years ago, which it now seems destined to squander completely. When PLoS first appeared on most publishers’ radar screens, we held our collective breath — here was a well-funded, radical, energized group coming into scholarly publishing right as the digital age was showing its full promise. It seemed possible that PLoS might be the group to reimagine scholarly communication — from peer-review to publication practices to form and function. Advocates claimed that their aspirations extended beyond merely creating an alternative economic model for publishing.

Fiery rhetoric, impatient academic leadership, the kind of arrogance possibly concealing a grand idea — all were present at PLoS’ inception. It was an entrance ripe with portent and peril. Traditional publishers were a bit nervous and certainly watchful.

Then, very quickly, PLoS underwhelmed — it went old school, publishing a good traditional journal initially and then worrying about traditional publisher concerns like marketing, impact factor, author relations, and, of course, the bottom line. PLoS fell so quickly into the traditional journal traps, from getting a provisional impact factor in order to attract better papers to shipping free print copies during its introductory period to dealing with staff turmoil, it soon looked less radical than many traditional publishers did at the time.

Within a few years, PLoS had become just another publisher.

Throughout, PLoS has been fundamentally invested in the open access movement, so its concerns about the bottom line (which emerged as grant funding started to taper off) led it to build business models around what’s possible under the author-pays model. And there are only so many things you can do around an author-pays model, just as there’s only so much you can do with a reader-pays model.

One of the easiest ways to maximize revenues with an author-pays model is to publish as many papers as possible. This is the path of least resistance for author-pays publishing. And thus, starting in late 2006, PLoSONE became its financial salvation, a salvation achieved via bulk publishing.

Now, because of its reliance on PLoSONE and revenues from bulk publishing, PLoS is teetering on the edge of becoming viewed as a low-quality, high-volume publisher — a far cry from the promise the initiative once held.

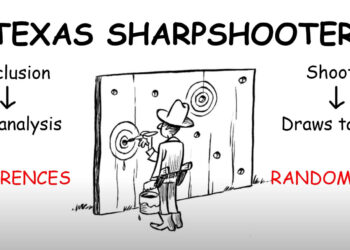

The study critiqued above talks about “bias” in publishing stemming from the pressures to be published (“publish or perish”), and potentially how scientists, institutions, and others encourage this bias for “positive” results (the author of the paper in question uses quote marks as a “weak” rhetorical technique, I should point out).

But is there a larger problem in scholarly publishing, a bigger “bias” toward alleviating the “publish or perish” pressures? And does PLoS, through PLoSONE, feed into this?

Does scholarly publishing itself need a path of least resistance?

Scientific publishing is a domain separated from others by things like peer-review, editorial selection, quality controls of various kinds, disclosures, and a strong preference for signal over noise. In this realm, it’s reasonable to assert that one of the biggest problems the “publish or perish” culture has created is a predatory set of high-volume, author-pays journals that provide venues for weak studies with trumped-up positive findings.

Ultimately, these “journals” trade off the trust other journals and the journal culture itself have created.

I’m not anti-PLoS or anti-open access. Both can work, and other publishers have appropriated bulk-publishing, author-pays techniques as open access has shifted funding sources toward author-pays. However, author-pays and open access journals need to be high-quality, which usually means low-volume. So, yes, I am anti-noise and pro-signal, anti-chaff and pro-wheat, anti-pollution and pro-filtration.

Noise, chaff, and pollution in science should be controlled upstream by scholarly publishers. Journals are well-positioned to make a difference here, but the way PLoSONE and similar publishers are bulk-processing manuscripts into journal dress could possibly devalue the journal form at its roots.

If being published in a journal no longer immediately carries the imprimatur of having cleared a high bar of scrutiny, then the form itself is at risk. Journals could become merely directories of research reports. And publishers who are truly setting standards should take notice of the risk the drift toward directories poses.

Does the “publish or perish” pressure in academia create a penchant for positive results? Not any more than the penchant for interesting science drives the search for positive results. Positive results are inherently more interesting and worth writing up than flawed hypotheses or badly run experiments. Positive results reflect good science that people can build upon.

More troubling is that “publish or perish” pressures have created publishing businesses ready to exploit academics and authors.

The PLoSONE study Phil covered, which shoves a sloppy hypothesis into a forced positive finding, proves its point in its own perverse way. And it also proves that the journal publishing ecosystem is far too susceptible to noise pollution thanks to a combination of “publish or perish” pressures, predatory, high-volume publishers, and the ability of new entrants to conflate their practices with those of more rigorous, reader-focused journals and publishers.

Traditional reader-pays journals derive their revenues from the trust their readers place in them. If these journals lose this trust, they lose subscriptions, authors stop submitting their best papers, and a vicious cycle can begin. Reader-pays publishers and editors worry constantly about this. It’s a backstop to their actions and choices. Advertisers pay more to be in these venues of trust, reinforcing the need to preserve the power of the venue through the creation of advertising/editorial firewalls and the like.

Author-pays journals make money when authors publish. Some, like PLoSONE and Bentham Science, work to get as many papers as possible published in order to bolster their bottom lines. As of this writing, the PLoSONE site lists 122 articles published in the past week. At $1,350 per article, that’s ~$165,000 last week in publication charges, equating to ~$8.5 million per year. This doesn’t factor in the institutional charges that can offset some of these.
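The fee arithmetic above can be checked in a few lines. This is a back-of-envelope sketch, assuming a flat $1,350 fee on every article, no institutional discounts or waivers, and that the single week’s count of 122 articles holds for all 52 weeks of the year.

```python
# Back-of-envelope check of the publication-fee arithmetic in the post.
# Assumptions: 122 articles in the sampled week, a flat $1,350 fee per
# article, 52 identical weeks per year, and no discounts or waivers,
# so these are gross upper-bound figures.

ARTICLES_PER_WEEK = 122
FEE_PER_ARTICLE_USD = 1_350
WEEKS_PER_YEAR = 52


def weekly_fees(articles: int, fee: int) -> int:
    """Gross publication charges for one week."""
    return articles * fee


def annual_fees(articles_per_week: int, fee: int, weeks: int = WEEKS_PER_YEAR) -> int:
    """Gross charges if the weekly rate held constant all year."""
    return weekly_fees(articles_per_week, fee) * weeks


if __name__ == "__main__":
    week = weekly_fees(ARTICLES_PER_WEEK, FEE_PER_ARTICLE_USD)
    year = annual_fees(ARTICLES_PER_WEEK, FEE_PER_ARTICLE_USD)
    print(f"Weekly: ${week:,}")  # Weekly: $164,700
    print(f"Annual: ${year:,}")  # Annual: $8,564,400
    print(f"Articles/year at this rate: {ARTICLES_PER_WEEK * WEEKS_PER_YEAR:,}")  # 6,344
```

Extrapolating one week in this way gives about 6,300 articles a year, more than the 5,400+ annual figure cited in the next paragraph, so a single week may overstate the yearly rate and the resulting revenue should be read as a rough upper bound.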

Clearly, there’s a lot of money on the table, and PLoSONE is getting that money by publishing 5,400+ articles per year. According to Wikipedia, PLoSONE publishes 70% of all submissions. That’s not a tight filter.

There seems to be a conflict of interest with author-pays at the journal level, at the business model level. The author-pays publisher’s interest isn’t in the readers or the results, but in the number of papers processed and published. Shouldn’t there be disclosure of author-pays as a conflict of interest element? That is, a statement like, “The authors paid the publisher $1,350 upon acceptance of this manuscript”?

What would readers think of such a statement?

In the PLoS family of journals, two other articles appeared in April dealing with publication “bias” — one in biology and the other in medicine. In a normal journal family, you might think that the editors coordinated the publication events and are somehow slightly obsessed with the topic. But given that authors paid to be published in these journals, perhaps the editors aren’t obsessed with the topic. Maybe this is where papers about this ill-defined and paranoid topic end up.

With business interests focused on fees from authors, it’s hard to know why particular papers end up in high-volume, author-pays journals. And that’s a problem.

PLoSONE believes that its “innovative” post-publication peer-review approach alleviates some of the obligations of publishing a scholarly journal. This innovation is essentially a form of commenting. But as a just-released report on peer-review’s role in academia states in discussing PLoSONE:

While submitted papers undergo a form of internal pre-publication peer-review, all “technically sound” papers are published. (A scan of articles suggests that reader comments are, in fact, rare.) We suspect that most competitive scholars will continue to submit their most important work to more prestigious, traditionally peer-reviewed outlets.

Luckily, other outlets like ArsTechnica covered these studies, including the one Phil discussed above, and provided reader-friendly critiques. As John Timmer writes in ArsTechnica:

[W]e could propose an alternate hypothesis: researchers in competitive environments are better at presenting their results in a way that’s likely to get them published. The data presented here is consistent with that hypothesis as well. . . . The authors themselves raise some significant questions about their interpretations without even going into some of the basic issues with the data—the corresponding author may not always reside at the site where much of the work was performed, and a US state is generally not a fine-grained measure of a research environment.

But should material like this have gotten this far? Is PLoSONE just a directory service for science, with paid listings?

Editors at a reader-focused journal would have very likely forced the authors of these papers to confront some of the problems ArsTechnica and ScienceBlogs found with them, or the paper would have been rejected as part of the 80-95% of papers rejected by these journals. Instead, it found its way into a journal that gets paid when it publishes, and therefore has a 70% acceptance rate.

In its early days, PLoS had an opportunity to break free from the herd. Instead, it joined the herd. That’s poignant enough. Now, through PLoSONE, it is potentially carrying a business model that could make people doubt the quality or safety of the whole herd, PLoS included and well beyond it, even if many members are in fine shape.

Credibility isn’t just a problem for PLoS or PLoSONE. Credibility is a problem for journals everywhere.

Wouldn’t PLoS, and scholarly journals in general, be better off without author-pays, high-volume publishing practices?

Discussion

100 Thoughts on "PLoS’ Squandered Opportunity — Their Problems with the Path of Least Resistance"

SYMPTOMS OF PREMATURE GOLD OA — AND THEIR CURE

“Gold” Open Access (OA) journals (especially high-quality, highly selective ones like PLOS Biology) were a useful proof of principle, but now there are far too many of them, and they are mostly not journals of high quality.

The reason is that new Gold OA journals are premature at this time. What is needed is more access to existing journals, not more journals. Everything already gets published somewhere in the existing journal quality hierarchy. The recent proliferation of lower-standard Gold OA journals arose out of the drive and rush to publish-or-perish, and pay-to-publish was an irresistible lure, both to authors and to publishers.

Meanwhile, authors have been sluggish about availing themselves of a cost-free way of providing OA for their published journal articles: Green OA self-archiving.

The simple and natural remedy for the sluggishness — as well as the premature, low-standard Gold OA — is now on the horizon: Green OA self-archiving mandates from authors’ institutions and funders. Once Green OA prevails, we will have the much-needed access to existing journals for all would-be users, not just those whose institutions can afford to subscribe. That will remove all pretensions that the motivation for paying-to-publish in a Gold OA journal is to provide OA (rather than just to get published), since Green OA can be provided by authors by publishing in established journals, with their known track records for quality, and without having to pay extra — while subscriptions continue to pay the costs of publishing.

If and when universal Green OA should eventually make subscriptions unsustainable — because institutions cancel their subscriptions — the established journals, with their known track records, can convert to the Gold OA cost-recovery model, downsizing to the provision of peer review alone (since access-provision and archiving will be done by the global network of Green OA Institutional Repositories), with the costs of peer review alone covered out of a fraction of the institutional subscription cancellation savings.

What will prevent pay-to-publish from causing quality standards to plummet under these conditions? It will not be pay-to-publish! It will be no-fault pay-to-be-peer-reviewed, regardless of whether the outcome is accept, revise, or reject. Authors will pay for each round of refereeing. And journals will (as now) form a (known) quality hierarchy, based on their track-record for peer-review standards and hence selectivity.

I’m preparing a paper on this now, provisionally entitled “No-Fault Refereeing Fees: The Price of Selectivity Need Not Be Access Denied or Delayed.”

Stevan/Kent – an interesting response to an interesting post. The key to me is, as Stevan says, that pay-to-publish becomes irresistible in a publish-or-perish climate.

Two related questions: 1) what is the evidence that ‘everything’ eventually got published somewhere in the old journal hierarchy? 2) Has the appearance of author pays journals increased the overall number of articles published (beyond the rate it was increasing in subscription journals)?

If more papers are indeed published, in a commercial author-pays environment this could well mean ‘publishing’ ends up costing the academic community more than before. This would be ironic given that ‘cost’ is frequently one of the arguments used by proponents of OA who favor author-pays models (rather than the self-archiving approach Stevan advocates).

(1) I have no recent evidence that everything gets published somewhere in the old journal hierarchy (Stephen Lock made the same observation in his 1985 book, “A Difficult Balance”). Perhaps others have collected systematic data. My conclusion comes from two facts: (i) There are an awful lot of junk journals (and junk papers) at the bottom of the journal quality hierarchy, and it’s hard to imagine even worse papers that did not manage to get published at all. (ii) Most editors (and referees) have had the experience of seeing the same paper (sometimes unaltered) that they had already reviewed and rejected, appearing in another (usually less selective) journal.

(2) There are so many articles published that it would be difficult to show statistically that the still relatively small number of author-pays journals has significantly increased the number, if it has. It’s possible. Then again, maybe bottom-rung subscription journals are just getting a slightly lower submission rate.

Yes, cost reduction is frequently one of the arguments used by [some] proponents of OA, but it’s not a compelling argument (and for today’s overpriced and unnecessary pre-emptive pay-to-publish Gold OA, the argument is probably false). My argument is usage/impact enhancement.

Richard (and Stevan),

There have been several studies on the fate of rejected manuscripts. While most of these manuscripts eventually find a publisher, the success rate is far from 100%. For example:

75% — Epidemiology [1]

66% — British Journal of Surgery [2]

56% — American Journal of Neuroradiology [3]

50% — American Journal of Ophthalmology [4]

47% — Cardiovascular Research [4]

Naturally there are methodological limitations with discovering whether a rejected manuscript was eventually published, but the evidence shows that the rejection process keeps many articles from finding the light of day.

Cameron Neylon http://bit.ly/9R6m4t has provided further useful references to estimates of the percentage of rejected papers that eventually get published in another journal (50% – 95% depending on field) — and of course there’s always the fallback of unrefereed book chapters!

Lest there be any misunderstanding, my own remarks about refereeing standards and pay-to-publish journals were generic, not specific to PLOS journals (in which I myself publish!).

See: Beall, Jeffrey (2010). “‘Predatory’ Open-Access Scholarly Publishers.” The Charleston Advisor 11(4): pp. 10-17. (Alas not OA!) http://bit.ly/9dKvk8

Kent – I agree with a lot of the individual points you make in this post but I’m having a hard time seeing why PLoS One tarnishes what PLoS have done with their top shelf OA titles from the perspective of an author or reader (as opposed to a publisher).

> becoming viewed as a low-quality, high-volume publisher

Yes, but by who? Other publishers? Surely authors usually care about journals over publishers (you submit to Cell, not to Elsevier) so maybe it doesn’t matter.

If you ask a researcher what they think of PLoS Biology they say “high impact open access journal” not “run by a company that also publishes any old tat in a sister journal”.

> If being published in a journal no longer immediately carries the imprimatur of having cleared a high bar of scrutiny, then the form itself is at risk. Journals could become merely directories of research reports.

I’m not sure that this is necessarily a bad thing. Why couldn’t this model *co-exist* with the more traditional editorially moderated one?

It could be a good fit for niche subjects that have a close community of determined researchers who’re willing to go in and spend time reading and discussing each other’s papers. Think of what synthetic biologists did with OpenWetWare or the tight-knit groups that used to be centred around sites like WormBase. Collectively these researchers are faster and better at detecting flaws in papers than the traditional system; furthermore, negative results or incremental improvements that wouldn’t necessarily warrant publication could be very relevant. In these cases it’s more important for publishers to disseminate material quickly than to spend time vetting it too closely.

The post publication peer review stuff hasn’t panned out for PLoS One to date but that’s not to say that the concept is without merit – maybe there just hasn’t been enough time for people to get used to the idea, maybe their approach needs more thought or most likely the journal is just too broad in scope to build a community of engaged users (leaving a comment on a paper doesn’t benefit me as a scientist – I don’t even know if anybody else will ever see it).

Brand problems are hard to detect, often until it’s too late. As more people (mathematically) publish in PLoSONE, more reputation will drift that way for the PLoS brand, and soon the other journals will have a problem. On a broader scale, it also devalues the journal model overall.

The simple question is, Would PLoS be a stronger brand without PLoSONE? The clear answer is, Yes. The same goes for journals overall. Are journals overall more likely to be perceived well without high-volume, author-pays practices in their midst? The clear answer is, Yes.

The post-publication peer-review stuff isn’t new. I tried similar things at other publishers over a decade ago, BMJ has done it for years, etc. It works infrequently, and mostly in the UK, but a lot of it is drifting out into Twitter and Facebook and blogs, where it comes more naturally and provides clear separation from the journal audience.

I don’t think you can ask that simple question, because it raises another: Is PLoS financially sustainable without PLoSONE?

I think it is safe to say that, without continued charitable donations, the answer is “No”.

The other big question in high-volume, low-overhead science publishing is how far it will scale: will the market support more than a few journals taking this approach? PLoS One is facing some stiff new competition from Nature Rejects, er, sorry, Nature Communications. Either there’s an unlimited supply of these low-level papers, and more outlets won’t affect the number PLoS One publishes (keeping its profits intact to continue funding the other PLoS journals), or there’s a limit to how many papers of this type appear each month, which means PLoS One will see a drop-off in submissions as competition grows.

If the Nature name continues to carry the weight it does, this may be problematic. Nature does charge a lot more to publish OA in Communications (there’s also a non-OA option), but if your funding agency or institution is paying, many authors may choose the glamor name, all things being equal.

Disclaimer:

I volunteer time as Academic Editor for PLoS One.

You write:

> So, yes, I am anti-noise and pro-signal, anti-chaff and pro-wheat, anti-pollution and pro-filtration.

> Noise, chaff, and pollution in science should be controlled upstream by scholarly publishers.

You’re kidding, right? You say this with reference to journal selectivity? In other words, some ex-scientist choosing according to his/her own whim what he/she thinks deserves to be spoon-fed to scientists? On what planet does this actually work? Definitely not on the one where I live.

Some examples from the area I know best, my own field:

In my personal ranking of my own 15 peer-reviewed experimental papers, this one

http://www.plosone.org/doi/pone.0000443

ranks substantially higher than this one:

http://www.sciencemag.org/cgi/content/full/296/5573/1706

Obviously, my ranking of my own work is different from how other people see it. That’s definitely not what I want from my filter. That’s like asking a stranger to pick for me from the menu even though I already know what I would like to eat.

In fact, the two of my papers which have enlightened my personal understanding of my own field the most, have made it into one of the ‘highly selective’ journals, but not into the ones which published work by me which I consider less important/novel. Thus, the people you think we should outsource the filtering or selecting to, can’t even do this the way I want for my own stuff! Why would I outsource such an important task to someone obviously not capable of accomplishing the task the way I need it?

But it gets worse. Because the sorting algorithm of professional editors doesn’t necessarily correspond to that of professional scientists, stuff lands on my desk that I would rather not see. According to my experience and judgment, in my field of Drosophila behavioral neurogenetics, this paper is arguably the worst I’ve come across in the last few years:

http://www.nature.com/nature/journal/v454/n7205/full/nature07090.html

See here why:

http://bjoern.brembs.net/comment-n519.html

What kind of filter lets this sort of ‘pollution’ through? ‘Pollution’ BTW, is your wording. I would never use that sort of word on any of my colleagues’ work, no matter how bad I personally may think it is (because I know I may be dead wrong).

Anyway, with gaping holes big enough for airplanes, that’s not much of a filter, is it?

> If being published in a journal no longer immediately carries the imprimatur of having cleared a high bar of scrutiny, then the form itself is at risk. Journals could become merely directories of research reports. And publishers who are truly setting standards should take notice of the risk the drift toward directories poses.

ROFLMAO! Only someone who has never worked as a scientist could make such a naive statement. It’s about as close to reality as the idea of politicians paying themselves a seriously high salary, with the argument that it keeps them from taking bribes.

The filter you are talking about is non-existent and only serves to maintain the status quo, nothing else. I personally know two colleagues (one male, one female) who regularly take ‘filter-units’ (read: editors) out to expensive dinners to explain to them why their work should pass through the filter.

Add to that the fact that your filter of ex- (or non-)scientists proudly proclaims they don’t even care when professional scientists tell them how to do their job:

http://www.nature.com/nature/journal/v463/n7283/full/463850a.html

e.g.: “there were several occasions last year when all the referees were underwhelmed by a paper, yet we published it on the basis of our own estimation of its worth.”

Research repositories, or directories if you will, are exactly the future of scientific publishing, combined with a clever filtering, sorting and evaluation system that of course still includes human judgment but doesn’t rely on it exclusively, and certainly not for the publication process itself.

Pre-publication filtering is a fossil from Henry Oldenburg’s time, and its adherents would do well to have a look at the calendar (and I don’t mean the weekday, lol).

I guess that it’s an ironic coincidence that my comment on a Nature opinion article on how flawed journal rank is for evaluating science will be published in Nature this coming Thursday (according to Nature sub-editor Janet Wright):

http://bjoern.brembs.net/comment-n606.html

> Traditional reader-pays journals derive their revenues from the trust their readers place in them.

Where do you get all these naive ideas from? There isn’t a single library on this planet that covers all the journals in which I have ever wanted or needed to read a paper. Our library isn’t even close to covering my needs (but has plenty of subscriptions of no use at all to me). Publishers shove journal packages down libraries’ throats to make sure that any such effect, if it ever existed, doesn’t hurt their bottom line.

What would work, though, is a future subscription service that relies on the expertise and judgment of today’s journal editors for some portion of my post-publication filtering, where, for a subscription fee, I get to choose the editors whose selections come closest to my needs. True competition for the best editors serving the scientific community!

In contrast to the way things are now, this would be a kind of personal filtering that actually could work as you envisage it.

> Clearly, there’s a lot of money on the table, and PLoSONE is getting that money by publishing 5,400+ articles per year.

I can’t believe you’re seriously mentioning money without quoting the record 700m dollar *adjusted profit* of Elsevier in 2008 alone (I seem to recall the rest of the economy tanked that year) and how they make their money: slide #32 here:

http://www.slideshare.net/brembs/whats-wrong-with-scholarly-publishing-today-ii

I find it highly suspicious if you talk about a few thousand dollars for a non-profit organization, without mentioning private multinational corporations making record profits in the billions every year in taxpayer money off of the status quo.

> There seems to be a conflict of interest with author-pays at the journal level, at the business model level.

Which, I would tend to hope, is precisely why PLoS is not a corporate, for-profit publisher like almost everybody else in the business.

> Editors at a reader-focused journal would have very likely forced the authors of these papers to confront some of the problems ArsTechnica and ScienceBlogs found with them, or the paper would have been rejected as part of the 80-95% of papers rejected by these journals.

Why? Because professional editors are somehow gods, or at least better than professional scientists at predicting the future? Does every journal editor now have an astrologer at his/her disposal? What reason is there to believe that these ex- (or non-)scientists should be any better than Einstein at evaluating science? Einstein famously opposed quantum mechanics all his life. Are you seriously trying to tell us that a bunch of editors and publishers know their areas of science better than Einstein knew his physics?

I don’t know what scholarly publishing system you are describing in this post, but if it ever existed on this planet, it was a dinosaur long before I got into it 15 years ago.

We, the scientists, are producing more valid, important, potentially revolutionary results than anyone could ever read, sort, filter or comment on. Nobody knows if one day our society will depend on a discovery now opposed by the Einsteins of today. Then, it will be a boon to have a single entry point to the open access scientific literature (yes, hopefully a directory!!) and send text- and data-mining robots to find the results on which to build the next generation of scientific explanations.

Because we produce more (and more important) discoveries than ever before, the old system of pre-publication filtering is the most epic failure among many in our current scholarly publishing system. Granted, we are still in the process of building a better, more suitable one. But you’re not helping by putting your head in the sand and pretending everything is a-ok. So get on with the program and support publishing reform with the scientists and the taxpaying public in mind instead of defending corporate publishing profits!

There is not a single rational reason for having 2 places where scientific research is published, let alone 24,000.

It also strikes me as odd that you make creating a brand name sound like a bad thing:

> Then, very quickly, PLoS underwhelmed — it went old school, publishing a good traditional journal initially and then worrying about traditional publisher concerns like marketing, impact factor, author relations, and, of course, the bottom line. PLoS fell so quickly into the traditional journal traps, from getting a provisional impact factor in order to attract better papers to shipping free print copies during its introductory period to dealing with staff turmoil, it soon looked less radical than many traditional publishers did at the time.

It is the very system you defend in this post which forces anybody trying to modernize a fossilized organization to start by creating a brand name with the help of a little artificial scarcity. It worked for potatoes in France (see link above) and it worked for high-ranking journals such as Nature, Science or PLoS Biology. Once a brand name and reputation were established using the old system, only then was it possible to start a new, truly transformative publishing model. This was a very clever strategy! What else could PLoS have done and still succeeded? It was the only way anyone could hope to get a foothold in a 400-year-old market dominated by three or four billion-dollar corporations.

Finally, it needs to be emphasized that the above is not in any way intended to belittle, bad-mouth or denigrate professional journal editors. In my own personal experience, they are very bright, dedicated, knowledgeable, hard-working science enthusiasts. I have the impression that they are passionate about science and believe in their ability to sort the ‘wheat from the chaff’. It’s just that the task you seem to intend for them is not humanly possible to carry out. The current system is woefully inadequate and has professional scientists and professional editors needlessly pitted against each other, rather than having them work together for the advancement of science. In our ancient science communication system, animosities between the two camps are inevitable, but highly counterproductive. Most people have realized this by now, which is only one of the reasons why PLoS One is such a striking success.

The planet where this works is Earth. If you seriously believe that a directory of all the inherently amazing, revolutionary things you’re discovering is sufficient, then you are arguing that journals no longer need to exist, editors have no role, and technical filters and personal judgment will somehow suffice. If that’s the case, why pay PLoSONE money for a hand-waving peer-review process? Why be a part of it? Aren’t you posing as one of the ineffective, unnecessary elements yourself?

Filters are important. You seem conflicted on this point, but I’m not. Scientific publishing is being swamped by high-volume, author-pays publishers who pass off sniff-test review as peer-review, have acceptance rates of 70% or more, etc. The reader-pays, traditional peer-review system isn’t perfect, but it’s at least a noble effort to strive toward a goal of rigor, reason, and reader-service. Publishing seven of every ten papers you receive and getting paid for each one sets up a dynamic that I find hard to swallow as an information consumer.

Trust is absolutely critical to journals. Why cite, read, receive, look up, access, care about, etc., articles in a journal if that journal does little more than list what arrived via email over the past few weeks? Don’t you respect the time of scientists more than that?

you wrote:

> If you seriously believe that a directory of all the inherently amazing, revolutionary things you’re discovering is sufficient, then you are arguing that journals no longer need to exist, editors have no role,

I do argue that journals don’t need to exist; what benefit do they provide?

I briefly alluded to what role today’s editors could play in a modern publishing system. More detail below.

> technical filters and personal judgment will somehow suffice.

It’s slightly more sophisticated than that, of course. If I were king for a day and were allowed to decide how scholarly publishing should work, here’s what I’d want to have:

Three different sets of filters: one for new publications, one for my literature searches and one for evaluations.

1. New publications (within the current week).

This is a layered set of filters. The first layer is unfiltered: I must read every single paper published in the field(s) I define by keywords and tags. This is my immediate field and I cannot afford to miss anything in it, no matter what. In my case, these fields could be Drosophila behavioral neurogenetics and operant learning in any model system, both further constrained by some additional tags/keywords. The second layer would include all of invertebrate neuroscience, but only those papers that have been highlighted/bookmarked by colleagues (or editors, or bloggers, etc.) in my own field. This layer would include social filter technology such as that implemented in FriendFeed, Mendeley, citeUlike, etc. (all of which I use). The third layer would include all of neuroscience. There, I would set a relatively high threshold, requiring many of my selected colleagues/editors/bloggers (in neuroscience) to tag or flag a paper, and it would include some form of discovery/suggestion tool such as the one in citeUlike. For all of biology, I would only like to see reviews or press releases of reports that have been marked as groundbreaking by colleagues/editors (in biology). For the rest of science, I wouldn’t understand either the papers or the reviews, so press releases or popular science articles would be what I’d like to see, maybe two or three per week.

This filter would deliver a ranked list of brand-new scientific publications to my desk every week. Icing on the cake would be a learning algorithm that remembers how I re-ordered the items to improve the ranking next week.

2. Literature searches

Here, I’d like to have a single front end, not three as there are now (PubMed, WoK and Google Scholar). In the search mask, I can define which words should appear where in the full text of the article. If my searches turn up too many results, I want the option to sort by any or all of at least these criteria: date of publication, citations, bookmarks, reviews, media attention, downloads.

3. Evaluations

Today, whenever people or grants need to be evaluated for whatever reason, in most cases it is physically impossible to read even just the most relevant literature; that’s the cost of our growth. So in order to *supplement* human judgment, scientifically sophisticated metrics should be used to pre-screen whatever it is that needs to be evaluated according to the needs of the evaluator. Multiple metrics are needed, and I’m not talking about single digits. The metrics include all the filter systems I’ve enumerated above, obviously.

Actually, come to think of it, I may want to have a fourth set of filters:

4. Serendipity

I want to set a filter for anything scientific that is being talked about in the scientific community, no matter the field. If the discussion around it passes a certain (empirically determined) threshold, I want to know about it (think FriendFeed technology).

The above system of filters and ranks would be possible with the technology available today. It would be the best integration of human and machine-based filtering systems we can currently come up with, and it would easily be capable of doing a much better job than today’s actual system, even if it might not be perfect initially. I would never see anything that is irrelevant to me, and if the system isn’t quite where I want it to be yet, it’s flexible enough to tweak until it works to my satisfaction. What it requires: something like ORCID, a reputation system and a full-text, open-access scientific literature standard, none of which poses any technical challenge. Thus, the only thing standing between me and the system described above is the status quo.

Yet I still have to browse the eToCs of journals in which 99% of the content may be great science but is of absolutely zero interest to me, use multiple search engines to find what I’m looking for, and use FriendFeed and citeUlike and Mendeley to take care of my references, only to discover, when I finally have the exciting paper, that nothing happens when I click on “experiments were performed as previously described” to find out what they actually did!

For years this has been steadily driving me up the wall! Factor in that nowadays it takes one to two years to get your research published after you have all the data (simply from having to reformat and resubmit it down the ladder), and the current publishing system is hands down the single most enraging, frustrating, anachronistic and absurdly insane component of my job.

And then someone comes along and exclaims that the only thing on the horizon that at least shows some potential for relief from this plague doesn’t quite fit into an antiquated world-view, which is the single factor responsible for this whole mess to begin with! Please excuse me if I forget to be dispassionate for a minute and let off some steam 🙂

I am afraid you forgot how the whole publishing thing started in the first place. A few hundred years ago, alchemists, chemists, anatomists, etc. started sending letters to each other. Sometimes this would be just to describe how to produce sulphuric acid efficiently or how to work with glass to make a particular piece of lab equipment.

It is no coincidence that a lot of journals have the word “letters” in the title.

A bit of history helps a lot.

Imagine Lavoisier trying to publish his oxygen theory today in, let’s say, Nature. Do you believe he would have succeeded when the likely reviewers are heavily invested in phlogiston theory? Perhaps, yes, but not likely.

As far as I am concerned, the PLoS model does not go far enough.

Let’s have more blog-like publications with contributions from the interested scientific community. It is the ultimate peer-review process, and it will not stop scientific communication, which in bulk may not be that interesting, but there would be rough diamonds in it.

Having said all of that, there is a definite place for high quality conventional journals. I cannot imagine my life without PNAS, Science, Cell, Nature, Annual Reviews and a lot of other journals. But the need for open-access and even open-source scientific publications for me is obvious.

So let’s all go back to the caveman diet? To leeching and amputation? You could also be arguing that PLoS is taking an oddly anachronistic approach.

I do think Lavoisier would have been published in Nature or Science. You should look him up. He might have owned one of them, if given the choice.

Don’t put words in my mouth. This is not very scientific.

The point (which you apparently missed) is that the role of publications is to foster the exchange of ideas. And the exchange of ideas is always free, or it becomes something else.

As far as Lavoisier is concerned, perhaps he could have owned Nature. The point is how hard he had to fight to get the scientific community to accept the facts. Perhaps you should look up Lomonosov’s experiment (1753, I believe).

And yes, if you exert sufficient pressure on Nature/Science, etc., you will get published. How is this a good thing? You are making an argument against yourself.

I didn’t put words in your mouth, and this isn’t science. It’s argument. I used rhetoric (reductio ad absurdum), and then gave a different interpretation of your comparison (PLoS is anachronistic). In the pre-abundance information age, there were natural brakes on information flow — paper was expensive, distribution was hard to achieve, etc., whether your ideas were revolutionary or standard-issue. Now, filtering is a function of self-discipline. PLoSONE is using the age of abundance to drive a business model — publish more papers, make more money. Wikipedia doesn’t charge anybody anything. If PLoS people are true idealists, why not do that?

My joke about Lavoisier is that he was a nobleman, a bank owner, and pretty influential and insulated. The orthodoxy of the time was less pliable because information was scarce, thus more susceptible to control. That doesn’t mean that the counter — information published with a low filter to just “get ideas out there” — is the answer. It makes me wary when someone makes more money by publishing more articles. Do you really think Lavoisier’s ideas would have been as influential if they’d been shoveled out into the waves like just more PLoSONE water?

Two other points — authors may be fans of this type of publishing, but readers, with scant time, will soon tire of it. Also, I’m not defending profits, but talking about practices. Both PLoSONE and traditional publishers are good at returning money to their owners, but how PLoSONE and other high-volume, author-pays publishers make their money — what they exploit — I think is fundamentally untenable in some important ways.

I think you are confusing several arguments here. The ‘professional versus academic editors’ is one much discussed by both aggrieved authors and editors but it is not relevant here.

The majority of subscription journals are edited by academics – it is they who do the selecting (I think that’s what Kent actually means when he says scholarly publishers).

This argument is about whether to select – not who should do the selecting.

As for Elsevier/NPG and their profits, again I sympathize with your arguments but am not sure they are relevant here. If Nature Communications or a BMC-like OA journal were to dominate the author-pays space and make vast profits off authors, would you be such an enthusiastic advocate of this approach?

> If Nature Communications or a BMC-like OA journal were to dominate the author-pays space and make vast profits off authors, would you be such an enthusiastic advocate of this approach?

Of course not! In the end, I really don’t care by what strategy single-entry-point, full-text OA is achieved: institutional subscriptions, author pays, or something else. I might even be persuaded to give in and allow some profits off of taxpayer money, given a good enough cause. But the insane billions every year, even in years when entire nation-states face bankruptcy, are just corporate greed with a big middle finger right in our faces.

Do you have any objections to PLoS making massive profits from overcharging PLoS One authors in order to fund a bunch of what you call “fossilized” journals?

Good point! Indeed, if PLoS One succeeded in significantly altering scholarly publishing, there would be no need for brand-generating journals. In that case, if there were no plans to merge the journals into One, I would definitely have objections! At the moment, I see it as a necessary evil for the greater good. That may change.

“Overcharging” is a pretty steep accusation (especially for someone who works at CSHL Press… http://cshprotocols.cshlp.org/subscriptions/cost.dtl). Care to back it up?

Bill, overcharging seems more an obvious observation to me than a serious charge. PLoS is profiting heavily from PLoS One. They’re using those profits to pay for their unsustainable higher overhead journals. The high profits generated have inspired others, such as Nature, to start their own journals to take advantage of the great amounts of money to be made. Essentially, PLoS One authors are being required to pay more than it costs to publish their articles in order to subsidize other publishing models. Can you dispute this in any way?

And if you want to compare apples to apples, I run a journal that not only has no charges whatsoever to authors, we actually pay authors a royalty as part of a revenue sharing plan based on the usage of their articles.

I take “overcharging” to mean “charging an unfairly and indefensibly high price”. Since page and colour charges at TA journals average not much less than PLoS ONE’s author-side fee, and since that fee subsidizes not the profit margin of some multinational but (a) the fee waiver scheme and (b) the other PLoS journals — no, I don’t call that overcharging. If “overcharging” simply means “charging more than the break-even costs of publishing”, then nearly every publisher is guilty!

Regarding Nature — I understand that you are referring to Communications (and agree with your comment above, about this being NPG’s attempt to bring the Glamor Mag model to OA). I do think it odd, though, that PLoS is singled out for criticism of this “self-subsidy” model, when it’s the same thing that NPG has been doing for years, with their flagship Nature and a stable of 40 or so “second tier” (their term!) journals. Other publishers have been at the same game even longer.

Apples to apples would require comparing subscription income for CSHP with author-side-fee income for P.1, no? Is CSHP financially self-sustaining? Does it take a subsidy from the larger Press, or does it provide a subsidy to other Press activities?

Bill, you raise a good point. PLoS, and OA journals in general, are often spoken of as having a higher moral standing than the usual closed access journals. CSHL is a not-for-profit research institute. CSHL Press does make a profit, but every single penny of that profit goes into funding scientific research at the laboratory. Essentially, just as PLoS does, we charge grant funding more than it costs us to produce our journals, and we put the extra money left over toward doing things that we think are important for science. Most society journals work exactly the same way: they charge more than it costs to produce the journal, and the extra money is used to fund activities beneficial to the members of that society. If you want to put us all on the same moral standing, then I have no problem with that, and it’s a refreshing change from the usual rhetoric.

I think PLoS One points to the future of academic publishing. The future may be sunny or overcast (we will know when we get there), but we will see much more activity like PLoS One going forward. I would think that making it work better (with competition) is a better course than trying to overturn it. An analogy: PLoS One is like Craigslist, which is tying the NY Times in knots.

There’s an important difference — Craigslist is a directory service competing with other directory services, and winning because it scales better than any of the old directory services. PLoSONE and the like are looking more and more like directory services, but they’re competing with peer-reviewed journals. If all scholarly communication is going to be is a set of “Read me! Read me!” want ads for articles, in effect, then we’ll just end up reinventing the peer-review system as a meta-filter on top of it, as Bjoern Brembs is suggesting. And how efficient is that?

See, Kent, you do get what Bjorn is suggesting. As to the efficiency of it, well, what if Google and other search engines took a human-powered editorial approach to what they indexed? To provide a similar level of service, how many people would they have to hire? Has their brand been tarnished by their acceptance of every web page in existence?

Efficiency is exactly the debate scientists, authors, and publishers need to be having. I don’t know what efficiency looks like, but putting pressure on authors to submit to high-ranking generalist journals when their stuff would be better suited to a niche outlet isn’t efficient for scientists or publishers. Forcing libraries to subscribe to Big Deals for a bunch of stuff they don’t want is neither efficient nor good reader service. In fact, patting yourself on the back for your selectivity is hypocritical when you also force the library to carry the other journals that the rejected manuscripts end up in.

There’s a fundamental shift happening, which I think you’re doomed to fail to realize, and that’s the shift from browsing to searching. From an oligarchic pre-filtering to a democratic post-filter. The squandered opportunity is in missing out on this. The failure to scale lies in missing out on this.

Google’s brand has failed to extend into realms of knowledge, e.g., Knols. It is a brand that is about knitting things together, indexing. It’s not a brand about knowledge generation or knowledge validity. So the comparison doesn’t work.

I completely understand the shift from browsing to searching. That’s nothing new. I’ve stopped talking about it when giving speeches because it’s such old news. But, in fact, it makes my point all the more important. If everything is unfiltered and searching is the only way to find things, then Google is the authority of the Web, and expertise has no role except as awkwardly captured in search algorithms that are essentially popularity, not discernment, engines.

Efficiency for users and readers involves filtering. Getting published may be frustrating, rejection may hurt, etc., but efficiency is a two-sided topic. Filtering at the source makes for a more efficient information environment, just as filtering water makes for more efficient drinking. Leaving people to filter, boil, or otherwise purify their own water makes things easier for the water company, but not for the populace.

The “Big Deal” is a separate deal. That’s a sales tactic that also smacks of bulk-publishing. So there you have it.

Reply to Kent, in case the threading gets messed up.

No, you don’t quite get it yet, because what makes Google work is precisely the lack of top-down authority or editorial filters. Google does fail in many ways, but no one can claim that the top links aren’t authoritative sources for most queries. AltaVista (remember them?) got destroyed by Google and their algorithmic (but ultimately human-powered) approach.

I’m not joining Bjorn and calling for the overnight abolition of journals, but since you mentioned scaling, I have to say it’s editorial control which doesn’t scale.

How many people really believe that the reviewers of their submission to Cell/Nature/Science do as good a job as the reviewers of submissions to niche journals? There’s just too much stuff! While PLoSONE is dealing with this issue head-on, other publishers continue to pretend it doesn’t exist. I don’t care how selective you are, there’s too much stuff out there and the method doesn’t scale.

So, yes, expertise as captured by human inputs but not direct editorial control (which is corruptible) is the direction things have to go if current publishing orgs are to remain relevant.

Actually, I have to reply that I think you don’t get it. The top links often aren’t authoritative, but user apathy leads us to accept them. Often, more authoritative links are below. For instance, try searching on “bisphosphonates.” You get Wikipedia, a college course, WebMD, and a commercial site as the first four listings. The FDA comes fifth, then a dentist. You don’t get to journals until a couple of pages in. And this is just a spontaneous example. Scott Karp had a great talk on this when the “autism – vaccine” tie was still believed.

And how do you accept “authoritative”? Branding. If the URL is a good brand, then it’s more authoritative. Google’s algorithm works sometimes, not others. Do brands work better than Google? It’s likely they do.

Editorial control can scale. It takes a few people to manage the flow for a few hundred papers. That’s pretty decent scale.

Disclaimers:

1) I’m one of ~1000 academic editors (AEs) in PLoS ONE. I also have four papers published in PLoS ONE, three of which were accepted or published before I joined the editorial board in 2008.

2) I can see two or three totally different topics in this post, sometimes contradictory:

(a) Author-pays versus reader-pays models

(b) PLoS ONE versus other selective journals, including other PLoS journals

What really seems to be common between (a) and (b) is

(c) PLoS versus all other publishers

So, I will mostly focus on the PLoS ONE model, which seems to be the major target of this post’s criticism; but I may also address the specific attack on PLoS versus others (which somehow reminds me of the attack on priests’ versus everybody else’s misconduct, or Tiger Woods versus every other athlete. Since PLoS seems to present themselves as a “holy” alternative, they should not engage in “lay” publishers’ filth; this is what I read between the lines.)

I agree with you on two things, actually three:

1) PLoS initially took a pragmatic approach to making its name known, which was to play the traditional publishers’ game. We can argue whether this pragmatism is justified or not; however, it was a proof-of-concept that open access journals can compete with “top-flight” journals in quality, and in attracting authors and readers. I agree with you that such pragmatism is contradictory to the radical discourse in the PLoS core principles. But I’m surprised that you later use this argument to attack PLoS ONE rather than the non-radical PLoS journals. If there is any radical change made by PLoS, it’s PLoS ONE!

2) I agree too that PLoS ONE post-publication peer review/commenting/etc. is not working well, and I claim that this is mostly because of technical reasons. Once the IT team manages to create a blog-like dynamic commenting system, allowing RSS feeds and comment notifications, things may change quickly (as in arXiv and the science blogosphere).

3) I also agree with you that authors won’t change their culture soon. Authors (of whom I am one) are similar to investors in the stock market, and it’s a chicken-or-egg situation: publish in a “high-risk” journal and be a pioneer or wait till a journal establishes its reputation. However, many authors (some of them among the top scientists around) have willingly agreed to rock the boat.

**********

Now, let me go to where we disagree.

1) “And thus, starting in late 2006, PLoSONE became their financial salvation, a salvation achieved via bulk publishing.”

Claiming that PLoS ONE was adopted as an option for financial salvation is an attractive hypothesis, but, like many hypotheses, it is far from reality. PLoS ONE was the initial vision of the PLoS founders but seemed far-fetched in the beginning. As a matter of fact, PLoS ONE’s quick success surprised everybody, including the PLoS ONE team itself, and the expected volume of submissions would overwhelm anyone working at PLoS ONE, which is why the editorial board has almost 1000 members. This doesn’t happen with other “predatory” publishers, who would publish this post as an opinion article for $450 or less! Many online journals that charge less have published almost nothing in two years! So, in summary, I am saying that if PLoS is to be proud of anything, it should be proud of PLoS ONE. And I’m also saying that PLoS ONE did not aim at or plan for bulk publishing. This happened as authors, frustrated with the current slow and subjective system, gained confidence that work published in PLoS ONE does not go down the drain.

2) Since we’re talking about science, or at least scientific communications, resolving any dispute should be based on data not on rhetoric; on objective not subjective statements (including those by Björn, rating his own papers). So, please provide some data to support your claims. Do you have any numbers to justify that PLoS ONE papers have less quality than others? Do you have statistics showing how many papers are submitted to PLoS ONE directly, before being submitted to other journals? Do you have data correlating an article’s quality to the selectivity of its journal?

If you provide these, we can scientifically discuss your claims. Otherwise, I can easily make a claim that among the > 10,000 papers published in PLoS ONE, 5,000 are the best papers published in the history of science. Can you rebut this statement objectively?

More specifically, I can challenge you (or anybody else) to go through the PLoS ONE articles I have handled as an AE and claim they are scientifically less rigorous than any other paper in the fields of microbiology and immunology, microbial genomics or bioinformatics.

3) Claiming that PLoS ONE is no different from all current publications is unfair and wrong. There are different classes of publications and journals, and PLoS ONE is different from most of them. First of all, PLoS ONE is not a magazine like Science and Nature, whose editors pick interesting scientific communications to publish. Unlike Björn, I have no big problem with an ex-scientist doing the picking as long as the publication is called a “magazine,” not to be confused with a scholarly article, which is not the case. However, I am concerned about the range of expertise of professional/in-house editors. For example, while most biological entities on planet Earth are microbes, most biological editors in most biology journals (including Nature and PLoS Biology) have studied the biology of eukaryotes, a minor group of organisms to which we humans happen to belong and which we have therefore unjustifiably overstudied.

So, PLoS ONE is not really in competition with Science, Nature, Cell, or the like. PLoS ONE publishes no review articles, and yet has received > 33,000 citations in the past three years. Quite impressive! If you exclude reviews and editorials from Nature, Science, the number of citations these two magazines receive will be substantially lower than the current prestige-driving numbers. PLoS ONE publishes papers in some “unpopular” fields as well, which other journals reject to artificially keep their misused impact factors high, or at least to keep the journal “interesting to the specific readership.” PLoS ONE has no specific readership that it targets. Publishing science only because it “interests” the readers is against the advancement of science.

PLoS ONE doesn’t write an editorial/perspective article about almost every article it publishes. Very few journals publish only primary research, and in PLoS ONE more than 99.9% of the articles are primary research.

4) I also share your concern for sorting the wheat from the chaff, and this is the main responsibility of the hundreds of AEs in PLoS ONE, whom you have almost described as incompetent in your post. You depicted PLoS ONE as a sink of low-quality papers, and implied that the job of the hundreds of AEs, who freely volunteer their time, is just to “sniff” the papers for scientific values. I would like to see some records that justify your claims. Have you had access to the peer review records, which are all documented by the way, before making such an assumption?

So, the job of AEs is to sort the wheat from the chaff, and I am not saying that they all do it equally well, but there is also no reason to believe that they do the sorting worse than other editors. However, sorting the wheat from the chaff is different from sorting the “sexy” wheat from the “fat” wheat, or the wheat produced in the USA and UK (which alone contribute 67% of papers in top-flight journals) from that produced in a “third world” country. What could be a better way of sorting good from bad science than making full-text papers available to the whole scientific community the first day they are published (not 3, 6, or 12 months later)? This will allow all human-based or computational text-mining tools to find whatever they define as wheat, or even “gold”, in the primary literature (not what a few people have defined for them). Moreover, compared to other publishers, PLoS journals allow immediate commenting. Comments are not moderated before publication; they are sometimes removed after publication if they break the commenting protocol. No other journal on planet Earth offers a quicker way of commenting or posting notes and corrections. Just think about how long it takes to send and publish a letter to the editor in some “classical” journals to refute an obvious mistake or a blatant fallacy.

I am not really blindly defending PLoS ONE (and I have no interest at all in doing so) nor am I claiming that I will submit all my future papers in it. Actually, I have no problem in showing you 3-4 REALLY bad papers in my field that were published in PLoS ONE in the past three years. But, you know what, I can also show you 5-6 HORRIBLE papers (very well written but completely misleading) published during the same period of time in PNAS (via all three tracks). Some “highly ranked” authors can intimidate any editor in a selective journal.

5) Author-pay is certainly a problem. But, as far as I know and I have experienced, PLoS is among few author-pay publishers who never reject a waiver request.

You seem to be implying that every paper published in PLoS ONE has been paid for. How can you tell? I received an immediate 40% discount on the article-processing fees of the last paper I published in PLoS ONE for example, and this is exactly what I asked for. I paid what I could afford (actually my collaborators did pay). Waivers are automatically granted on request, and no one judging the articles has access to this information. I know several authors who are not funded and who never pay to publish their papers whether in Elsevier or PLoS.

Again, I am not blindly defending PLoS or PLoS ONE. But, if you want to challenge the PLoS ONE model, “show me the data,” as the editors of JEM once said (http://jem.rupress.org/content/204/13/3052.full)

ONE vs. all– Part 2

This is a continuation of my long comment, and I address here some specific points in your post.

“However, author-pays and open access journals need to be high-quality, which usually means low-volume.”

This is an interesting hypothesis that remains to be proven. So far, it is baseless. And even if it is true and scientifically sound, who decides on the “right” or “optimal” rejection ratio? Why is 90% rejection better than 30% in your opinion? If neither is good, could you let me know what a good percentage is? How about 66%? Is it the same for all disciplines? It has been well said that: “if, according to conventional wisdom, the quality of a journal parallels its rejection rate, then the best journal would by logical extension be the one that accepted nothing at all!”

http://www.clinchem.org/cgi/issue_pdf/backmatter_pdf/27/4.pdf

—-

“One of the easiest ways to maximize revenues with an author-pays model is to publish as many papers as possible.”

How many is too many? And what is the proof that PLoS ONE’s aim is to publish as many papers as possible rather than “as many good papers as possible”? Is there anything wrong with the latter, especially since there are no page limits any more with online publications?

If some laboratory conducted a 10-year research program on a new chemical entity and, at the end, proved with high statistical confidence that it has no effect on 15 diseases, would this be publishable in your opinion? Would a paper claiming that a chemical entity tested on mice has some preliminary effect on reducing cancer have more value than the one with 15 confirmed negative results? Should one be published in a “journal” and the other in a “blog”? Why?

“There seems to be a conflict of interest with author-pays at the journal level, at the business model level.”

This is true only if the journal’s in-house editors, whose salaries are paid by the journal’s publisher, are the ones making the decision. However, AEs have no interest at all in accepting more papers. I would argue that an AE’s job would be far easier if she or he rejected every paper right away, sparing herself or himself the headache of sending the article out for peer review, dealing with reviewers’ busyness, and handling authors’ frustration or unwillingness to take “no” for an answer. It is also much easier and less risky to reject a paper right away than to accept one without in-depth review, which may cause more trouble in the future.

Finally, I think the lack of “selectivity” and the attempt to minimize “subjective criteria” are why PLoS ONE can actually improve scientific reporting. Authors will no longer have to make odd, non-scientific statements to catch the attention of professional editors and less specialized readers. Statements that start with “interestingly” or “importantly” will hopefully fade. Others, such as “this is the first report of…” or “this paper is a major milestone towards uncovering bla bla bla,” will hopefully disappear from the literature. I have sometimes returned papers to authors so that they would tone down these kinds of statements. In PLoS ONE, (ideally) only scientific methodology and reasoning get a scientist published, not the packaging of three experiments in four pages of discussion!

Contrary to what many people think, PLoS ONE is not the place to “pass” weak research. In PLoS ONE, you cannot hide a bad study for a long time. One day (even years after) some competitor will come and trash it with comments and notes. On the other hand, an author can easily “smuggle” a paper in a journal hidden behind closed access bars.

Well, PLoSONE people have proven one thing — they can’t write short.

All I can say is, Read the post. Most of the answers are there. I’m not going to restate them in a response.

Yes, the posting is long, but well worth the read. Sometimes byte-sized chunks simply aren’t enough. (He says, writing a short piece :-)

I think there’s a final point that didn’t really get emphasized enough: it’s not that I think editorial filtering is too tight. On the contrary, the sort of filtering that Kent suggests has been shown time and time again to be useless (i.e., rejecting important science and publishing unimportant, fraudulent or ‘bad’ science).

Thus, I’ve not been arguing against filter or quality control, I’m saying that the old, fossilized system that Kent is defending here is not filtering and sorting nearly to my standards. The filter size is not nearly small enough! The current system is what is vastly sub-standard, for my workflow. What I read in the so-called “highly selective journals” is not even close to selective enough for me: Maybe once a month, probably less, I actually read a full paper in one of these journals, but I have to read all the ToCs nevertheless, just to find them! That is what’s frustrating me: the standards of selection are way too *low* for me, not too high!

In contrast to Kent, I don’t claim I know what’s best for other colleagues, Einstein’s error taught me this. In contrast to Kent, I don’t want to impose my filter and sorting on them. In contrast to Kent, I would like everyone to be able to set the filters and sorting to where they need it for their work.

So your standards are much higher than anybody’s, yet you spend your time defending a “journal” that accepts 70% of what it sees?

I still don’t see how your world views square.

I think what Bjoern is saying is that journals should have low bars and leave the filtering up to other systems that may or may not be more capable (which seems to be the crux of the disagreement).

I agree with your interpretation, but then why work for PLoSONE filtering the literature, even 30% of it? That’s what I don’t get. He should stop that and begin working on the kind of filter he’s advocating. He’s part of the problem he’s supposedly fighting.

Even today, peer-review is a threshold which is useful for several reasons.

1) Make sure the manuscript is intelligible (think Sokal Hoax).

2) Make sure the statements in the text are backed up by the data.

3) Try to spot general mistakes (none of the authors’ friends will read it as thoroughly as a reviewer).

All this serves to establish some minimal criteria which have to be met for the publication to get the stamp ‘scientific’. It is a set of criteria which may be defined as the lowest common denominator on what constitutes a scientific publication. Everything else is rather subjective and varies from field to field and person to person (or at least more than the minimal criteria). Why impose one field’s/person’s view on all others?

Interesting cases are ‘borderline’ manuscripts where it’s difficult to say for sure whether even the minimal criteria are met. These are rare, but one example I have come across recently is an interesting discovery where practical issues prevent the authors from pursuing the research further until all the criteria are fully met. Publishing such papers would help other scientists pick up potentially important discoveries and keep them from falling into obscurity. I wish PLoS One had a section for such papers.

In a similar vein, I’d love to have a “rejected by Nature/Science” stamp on every paper that describes a truly groundbreaking discovery but wasn’t recognized as such initially.

Today, Nature and Science editors define what an important discovery is. More often than not, their assessment is at odds with my own and with many of my colleagues’. Not a month goes by without some student in Journal Club asking: “How could this get published in ‘Science’??” The older folks then usually explain that where something is published doesn’t really have much to do with what is being published.

Kent, interesting post, there is some more commentary on some of the issues raised over at friendfeed which might be of interest.

Hi all. Having defended my paper against what seemed unfair (but always welcome) criticisms, I wanted to share my experience with PLoS ONE.

I have three papers in it.

I chose it precisely because, as Ramy K. Aziz suggested, it is very fast and efficient (it allowed me to publish three papers in less than two years from the start of a completely new project!), and because it had built a reputation for publishing studies on bias and science policy issues. (I wasn’t really sure who else would be interested in this stuff.)

Having very little funding available, I asked to have the publication fees reduced to less than a third in all three cases. And in all three cases, no objection was raised.

I asked this honestly and in good faith, and in my grant applications I now always budget the amount I will need to publish with PLoS, whose philosophy I entirely support.

As for the peer review, I noticed no particular difference from other journals (I am, or rather used to be, an evolutionary biologist).

If anything, the peer review was better, because it focused much more on the methodological aspects of the paper (I was invariably sent to an expert in the statistics I used), and less on “importance” or “interest to the journal’s readership” as criteria for selection.

I think that any selection criterion other than a paper’s scientific quality is an obstacle to intellectual progress, an obstacle that initiatives like PLoS ONE, if properly developed, have the potential to remove.

Thank you for adding your comments. It’s noteworthy to me that part of the attraction for you is ease of publication. This is not a reader-focused but researcher-focused benefit. As for payments, it’s good that you only had to pay $1,000 each time to be published. At least the convenience of not having to hassle with multiple submission efforts was given a discount as well. As for the peer-review process and editorial process, your experience that “it focused much more on the methodological aspects of the paper” raises a concern, given that the major criticisms expressed here and elsewhere were about the methodology. Focusing on statistics alone isn’t going to deal with the broader methodological problems, for instance.

In any event, thank you for commenting. I agree that a paper’s scientific quality should be the focus, but I don’t agree that authors paying for low-bar, low-friction publication is the way forward.

“I asked to have the publication fees reduced to less than a third in all three cases” vs. “it’s good that you only had to pay $1,000 each time to be published”

These two statements don’t match.

Less than a third of PLoS ONE’s $1,350 charge is under $450.

Now, I’ve been following this discussion for the past three days and have read your post and the comments multiple times.

We have shown you that current technology would provide us with a vastly superior filter and rank system than the one you defended in your post, if only we could get rid of the old one.

Yet, instead of arguing why the traditional system is better than the currently possible but blocked one, you keep repeating theoretical ideas of quality control which have been shown to fail real-world tests time and again.

So please enlighten us, what, other than “that’s how we’ve always done it” is your argument to not improve our filter and rank services? Why slow progress? Why continue to block improvements? Why not take advantage of the technology non-scientists are already enjoying? Why this refusal to let modernity enter into the dusty halls of petrified scholarly publishing?

It’s not an either-or situation. I think systems that allow community filtering or individual filtering are fine. But I think those systems are overburdened and not helped by having a loose filter upstream from “journals” like PLoSONE and others. Author-pays, bulk-publishing journals relinquish responsibility to filters and users, which I think is basically a cop-out in order to generate as much author-pays revenue as possible.

What??? It’s not an either-or situation? Of course it is! There isn’t a standard with which one could get access to the full text of all the literature! That should be obvious to anybody with the slightest familiarity with how these filters work.

What??? You’re worried the modern information technologies I cited above may be ‘overburdened’ with papers? That’s like saying the 1000hp tractor may be overburdened by the weight a single horse could pull!

Are you sure you know enough about the technology I’m suggesting?

As long as you keep defending the horse carriage when there are cars outside with the key in the ignition, the system you defend just keeps looking more and more like this to me:

P.S.: If what you are trying to say is that one could force all publishers to publish their papers according to such a standard, which would allow us to implement the system scientists like me are demanding even with the current system still in place (only with all of it as full-text OA), then you are correct!

However, once that modern system is in place, where something is published will matter even less than it does now, and your system will slowly wane and die. Thus, it’s either the old system or a modern system, even if you force-implement a modern system, as you may be suggesting. In the latter case, the old system will die as a consequence of having the modern system. Which is precisely why the corporate publishers have every reason to fear current technology.

I think I know enough about content filtering to answer this. How this relates to a mode of transportation (horse-carriage vs. car) can only be found in your mind, I’m afraid. You’re just grasping at a tired old analogy to defend what you think is radical and what I think is sloppy. At the same time, you’re also participating in filtering while claiming it’s unnecessary or even counter to the goals you espouse. Again, I don’t think I’m the one who’s confused.

We disagree. I think you’re defending a sloppy system that’s bound to fail. You think I’m defending an elitist system that has already failed. I’m going to leave it at that.

Kent,

I did not mean “ease” of publication, but speed. Being online-only and not having a page-proof phase, PLoS ONE is simply more efficient.

And open access means that I can reach virtually any reader that might be interested.

$1,000 is the actual publication fee. I paid just $300. And I could have asked not to pay at all, but didn’t want to abuse the system.

In all three papers, I had to undergo two rounds of peer review, and in all three cases I was forced to basically redo the analyses.

You talk about a “low bar.” But note that I could have sent it to some mediocre journal that, happy to have a controversial paper to publish, wouldn’t even have cared about the soundness of the methods, which was my primary concern. And non-mediocre journals would probably have done the same.

At least, with PLoS we save paper!

But swamp the search engines. Even if you revised it twice, they still accepted it, and there are major problems with it. So, say what you will, my mind isn’t changed that PLoSONE is a high-volume, author-pays “journal” that is closer to a directory service, one that floods the market with papers. Major journals can publish just as quickly, so speed isn’t the issue.