With the growing support for DORA and policies like that of HEFCE, which forbids the use of the Impact Factor in assessing researchers, one would think that the power of the Impact Factor would be on the wane. But search and readership data suggests otherwise, even for a journal where it should matter the least.

One of the more interesting parts of running things behind the scenes at The Scholarly Kitchen for the last month or so has been the unfettered access to all the data the blog generates. While it is easy to over-interpret small sample sizes, some long-term trends jump out as obvious. For The Scholarly Kitchen, one such trend has been the incredible readership and resilience of any article dealing with PLOS ONE and its Impact Factor.

Since it was published on June 20, Phil Davis’ post on The Rise and Fall of PLOS ONE’s Impact Factor has been either the most-read or the second most-read post each day on The Scholarly Kitchen. In a little over one month, it has become the most-read post for the entire past year (over 39,000 views as of this writing). The second and third most popular posts over that period? PLOS ONE’s 2010 Impact Factor and PLOS ONE: Is a High Impact Factor a Blessing or a Curse?

Both PLOS ONE and the Impact Factor are hot-button topics. Both have their detractors and, at least in the case of PLOS ONE, there’s a passionate cadre of supporters. So clearly a good amount of the interest and readership stems from the controversy.

But a look at The Scholarly Kitchen’s Search Engine Terms suggests there’s something else at work here. This data shows the terms that were typed into search engines that then led the reader to this blog. The most used search terms are remarkably consistent over the last week, month, quarter, year and the entire life of the blog. Here are the top ten for the last year:

- plos one impact factor

- scholarly kitchen

- plos one impact factor 2011

- plos one impact factor 2012

- impact factor plos one

- plos one impact factor 2013

- elife impact factor

- the scholarly kitchen

- plos one impact

- plos impact factor

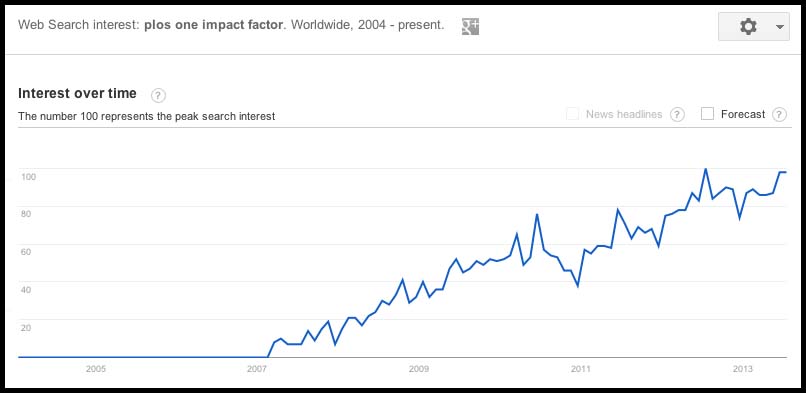

Clearly there are a lot of people out there who want to find out PLOS ONE’s Impact Factor. This goes beyond The Scholarly Kitchen, though. A look at Google Trends shows that the number of searches for terms like “plos one impact factor” continues to rise. The geographical breakdown of that interest, shown on the same page, is interesting as well.

While not a direct indication of causation, that high level of interest does seem well in line with Phil’s suggestion that the Impact Factor is a significant driver of submissions to PLOS ONE (as it is for all journals, at least according to Ithaka’s most recent faculty survey, see page 59).

As Phil’s analysis notes, PLOS ONE is a very different sort of beast from traditional journals. Because of its design, the Impact Factor does a poor job of measuring how well it is accomplishing its goals. While many of the commenters on Phil’s post suggested much better reasons for choosing PLOS ONE than the Impact Factor, the power that the metric still seems to hold over the research community suggests that these commenters may either be out of touch with the mainstream researcher, or perhaps a few steps ahead of their colleagues and jumping the gun.

At every editorial board meeting I attend for the journals I manage, we go through the same ritual. The subject of the journal’s Impact Factor comes up, which is followed by a gripe session about how much we all dislike it as a metric, and how badly it’s misused in academia. This is quickly followed by a lengthy session discussing strategies for improving the journal’s Impact Factor because we simply cannot ignore how important it remains for our authors and readers.

While many of us would be happy to be able to leave these conversations behind, we are, at heart, a service industry and must respond to the needs of the community we serve. The growing level of interest shown by search and readership data indicates that, at least for now, Impact Factor remains one of those needs. And even new types of journals, designed to thrive in a post-Impact Factor world, are still somewhat under its sway.

Discussion

17 Thoughts on "The Persistent Lure of the Impact Factor–Even for PLOS ONE"

“At every editorial board meeting I attend for the journals I manage, we go through the same ritual. The subject of the journal’s Impact Factor comes up, which is followed by a gripe session about how much we all dislike it as a metric, and how badly it’s misused in academia. This is quickly followed by a lengthy session discussing strategies for improving the journal’s Impact Factor because we simply cannot ignore how important it remains for our authors and readers.”

Ditto, David. It’s really depressing. What is worse to me is the complete and utter lack of professionalism at Thomson Reuters when you have issues or questions related to the Impact Factor. Given how much we depend on this data, it is frustrating that TR couldn’t care less about actually responding to email.

I am reminded of the IQ test and its importance in the 1950s. Do they still give those tests?

The interest in PLoS One’s IF seems obvious, albeit ironic. It is an experimental format which claims that importance is not important. So people want to see how important it is. How funny is that?

ImpF really is the Emperor’s Clothes. The numerate sciences know that trying to deploy a ‘metric’ structured like ImpF within a scientific article would be bombed by the referees (“Methods Section: The highly skewed, non-normal distribution of our statistic will be represented by its arithmetic mean.”). Yet the same journals and their often academically excellent editors, as David Crotty writes, agonise about their own set of Emperor’s Clothes in the crazy fashion world of ImpF. Worse, the self-same scientists and academic clinicians who, as referees, would decry the ‘metric’ do use it in hire-and-fire decisions, staff quality assessment, and key decisions about funding and research strategy. Bosses might publicly claim (as they must) that their assessment of publication quality is ImpF-independent, yet a cursory glance at the ‘approved journals’ lists at every Research Dean’s elbow reveals congruence with ImpF ‘data’.

Publishers could claim, with some justification, that ImpF is part of their ‘real world’, even though they profess to hate it. If it is, then we academics are to blame for having ever given it any credence. It really is up to the academic community to acknowledge the stark b*ll*ck nakedness of ImpF being any sort of a ‘metric’. The scandalous ‘justification’ that ImpF is ‘rough and ready’, ‘the best we have’ etc is grotesque. Do better – read the papers not the journal title.

I think this is an important thing to realize in discussing the IF (and moving beyond it). Too often publishers get targeted as being somehow behind the support and continued use of the IF. In reality, publishers are in a reactive position here. We ask the research community what it needs and do our best to supply it. Publishers care about the IF because academia cares about it so much. If academia moves away from it, publishers will immediately do so as well.

That said, it’s not as easy as just “read the papers not the journal title”. The world of research has grown so large that there need to be some shortcuts, otherwise no one would have any time to do any actual research. The NIH alone is receiving 80,000 grant proposals each year (http://ascb.org/activationenergy/index.php/the-perils-of-reviewing-peer-review/). If everyone sitting on a study section had to read every relevant paper for every application, it would take years, if not decades to make funding decisions. Similarly, a colleague recently went through the job market for the first time and reports that for every tenure track position, there were at least 400-500 qualified applicants, and somewhere between 25 and 50 “superstar” applicants. Again, it’s not possible for a faculty search committee to read all of the papers of 500 applicants in order to start narrowing things down to the 5 or 6 they’re going to interview.

So while I completely agree that IF is deeply flawed, replacing it with a deep literature review for every single candidate/applicant is simply not feasible.

I was not aware that the IF was deeply flawed, at least not as a measure of what it actually measures.

There are a lot of problems with the IF, beyond just its misuse for purposes for which it wasn’t designed. One issue is the time window involved: it looks only at citations that occurred in year X to papers published in years X-1 and X-2. Different fields have different citation half-lives and patterns, so a fixed two-year window may penalize journals whose citations accrue over a longer (or shorter) period than the window captures. Another flaw is the variability in what counts toward the denominator, which is not uniform across all journals. It’s also problematic to me that it is reported to so many significant digits. Is a journal with an IF of 4.723 really a better journal than one with an IF of 4.722? It is somewhat game-able, through journals writing editorials that cite their own eligible papers or demanding that authors cite other papers in the journal. Finally, it varies a great deal from field to field. What’s a great IF for a paleontology journal is very different from what’s a great IF for a neuroscience journal, yet in many cases the numbers are compared directly, with no allowance for category.
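The two-year window described in this comment boils down to a simple ratio. The sketch below is a minimal illustration, assuming the commonly stated definition (citations made in year X to items published in X-1 and X-2, divided by the citable items published in those two years). The function name and every number are hypothetical, and deciding what counts as a “citable item” is exactly the judgment call criticized above.

```python
def two_year_impact_factor(citations_in_x, citable_items, x):
    """Sketch of a two-year Impact Factor for year x.

    citations_in_x: dict mapping publication year -> citations made during
                    year x to the journal's items published in that year.
    citable_items:  dict mapping publication year -> number of items counted
                    in the denominator (what counts varies by journal).
    """
    window = (x - 1, x - 2)  # only the two preceding publication years count
    numerator = sum(citations_in_x.get(year, 0) for year in window)
    denominator = sum(citable_items[year] for year in window)
    if denominator == 0:
        raise ValueError("no citable items in the two-year window")
    return numerator / denominator


# Hypothetical journal, evaluated for 2013.
citations_in_2013 = {2010: 400, 2011: 300, 2012: 250}  # 2010 is outside the window
items = {2010: 90, 2011: 100, 2012: 120}

print(two_year_impact_factor(citations_in_2013, items, 2013))  # 2.5
```

The 400 hypothetical citations to 2010 papers contribute nothing to the result, which is the citation half-life problem in miniature.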

You seem to have missed my point. None of your objections relate to what the IF actually measures, which, so far as I can tell, it measures accurately. You seem to be arguing for a better measure, as though there actually were a simple scalar function properly called impact, which there is not. In other words, you are arguing for an undefined altmetric to measure an undefinable quantity called impact. That systemic confusion is the problem, not the IF. The IF is what it is, and it does that well.

I didn’t miss your point at all. The Impact Factor purports to be “a systematic, objective means to critically evaluate the world’s leading journals”. All of my complaints are relative to what it purports to actually measure.

If its goal is to provide an imperfect measurement that does not take citation half lives into account, that varies from journal to journal due to human interpretation of what counts as an “article”, that pretends to be more accurate than it is, that is game-able and that doesn’t provide any accuracy when comparing between fields of study, then yes, it is completely perfect and flawless.

If it is meant to critically evaluate the world’s leading journals, then it suffers from some flaws.

I do not see that any of your complaints (actually suggested improvements) show that the IF is not “a systematic, objective means to critically evaluate the world’s leading journals”. Note that they do not claim that it is the only such means, just that it is one, and it is, which is why it is so used. I am not defending the IF from valid criticism but claiming it is wrong is wrong.

Now you’re moving the goalposts. I never said the IF wasn’t a measure for critically evaluating journals. Just that it was a flawed one. I never said it was completely without merit or “wrong”, just imperfect.

If you know a field well, you can easily look through its JCR category and spot some anomalies in the rankings.

Here’s a good example. Acta Crystallographica Section A had a 2008 IF of 2.051. Then, in 2009, because of one (1) highly cited article, its IF jumped to 49.926. That same article still counted in 2010, and the IF was 54.333. Then along came 2011, that article stopped counting, and the journal’s IF dropped back to 2.076. Would you suggest that the journal as a whole was publishing articles that were 25X higher quality in 2009 and 2010 than it was in 2008 and 2011? Or is this just evidence that this metric, which does a decent job of ranking most journals, is not perfect?
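The arithmetic behind a jump like this is easy to reproduce. The sketch below uses invented numbers, not Acta Crystallographica’s actual citation data: a hypothetical journal whose 200 citable items are each cited twice, plus one hypothetical paper cited 10,000 times.

```python
def impact_factor(citation_counts):
    """IF-style figure: total citations divided by number of citable items."""
    return sum(citation_counts) / len(citation_counts)


# Hypothetical journal: 200 citable items in the window, each cited twice.
baseline = [2] * 200
print(round(impact_factor(baseline), 3))  # 2.0

# The same journal plus one hypothetical paper cited 10,000 times.
with_outlier = baseline + [10_000]
print(round(impact_factor(with_outlier), 3))  # 51.741
```

Nothing about the other 200 papers changes; once the outlier ages out of the two-year window, the figure falls straight back to the baseline.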

“… what the IF actually measures…so far as I can tell it does accurately”.

Impact Factor [sic] is technically unacceptable as any sort of statistical reporting measure. For almost any journal in the relevant year (papers published in years X-1 and X-2, as David Crotty describes), most of the papers are cited very little if at all, some are cited a few times, and a very few might be very highly cited. However, ImpF is just a crass ‘average’: the total citations that year to the journal’s papers from those two years, divided by the number of citable articles it published in those years. Even in its own terms, this figure is statistically invalid as a way of representing the citation pattern for the journal.

You could compare ImpF with one reporting ‘average wins or places’ of a population of racehorses. Clearly, some horses win or place often, some just a few times, most very rarely. An ‘average’ figure tells you less than nothing about the qualities of this population of horses. I say ‘less than nothing’ because, in such circumstances, an average is grossly misleading (as I hope is obvious). That’s why I remarked that such a measure would certainly not be acceptable to the referees if offered as an ‘index’ of anything in a paper in any scientific journal. What ImpF purports to measure is thus *not* captured, or even approximated, by the numbers it reports.
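The point about averages can be checked with a few lines of code. The citation counts below are hypothetical, chosen only to have the shape described above (most papers rarely cited, a few cited heavily); they are not data from any real journal.

```python
from statistics import mean, median

# Hypothetical two-year citation counts for 100 papers in one journal:
# most barely cited, a handful cited heavily.
counts = [0] * 40 + [1] * 25 + [2] * 15 + [3] * 10 + [5] * 6 + [20] * 3 + [150]

avg = mean(counts)    # what an IF-style figure reports
mid = median(counts)  # a robust summary of the "typical" paper
share_below = sum(c < avg for c in counts) / len(counts)

print(f"mean={avg:.2f} median={mid} share below mean={share_below:.0%}")
# mean=3.25 median=1.0 share below mean=90%
```

In this toy population the mean is more than three times the median, and nine papers in ten fall below the ‘average’ that an IF-style figure would report.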

This confusion affecting ImpF is compounded by factors such as:

1) How many workers are there in this field or specialism? More workers will mean more citations, but that alone is no index of quality, merely of how ‘busy’ the topic is at the moment.

2) Are papers still being cited several years later, i.e. long after X-2 (as with the long-lived works in some areas of biology and medicine), whilst others are not (as in shorter-lived fields)? Many would consider a long citation life more relevant than a short one to notions of ‘impact’.

3) Notoriously, some papers are widely cited because of errors or even retraction, but those citations all count toward ImpF.

4) Some disciplines publish very brief papers and others principally publish longer, more ‘considered’ works.

5) ‘Self-citation’ can be enough to distort the figures.

In the end, the quality of a given scientific work and the authors involved is only very loosely related to the ‘performance’ of the journal itself. Good work by good people can, and does, appear almost anywhere. This reality cannot be captured by any simplistic ‘statistic’ that looks at the journals.

So, ImpF fails in its own terms. Furthermore, even if ImpF *were* a reliable index of the citation pattern for a given journal after 2 years, it would still fail on many grounds to provide an index of anything relevant to assessing the quality, interest level, community acceptance, longevity or any other qualitative feature of the papers involved.

While many publishers complain about the IF, someone should perhaps tell the marketing folks who beat the drum every year when the IF is released. I just went through some of my old mail and counted 14 different publishers in the past two months praising the fact that their IF has improved. It is a big deal to many researchers as well. Flawed or not, it is used in our community, and publishers’ marketing folks make a very big deal of it.

Reblogged this on The Citation Culture and commented:

An interesting contribution to the debate about the Journal Impact Factor.

At least the search engine queries about PLOS One’s impact factor lead readers to your blog... My institution rates researchers using the impact factors of the journals they have published in. You are awarded the full impact factor if you are 1st or last author, half if you are second or second-to-last author, and a pittance if you are positioned anywhere else... So many problems stem from this. BTW, thanks for the pingback to my new blog.
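The scoring rule described in this comment can be sketched as follows. This is a hypothetical reconstruction: the comment does not say how much the ‘pittance’ is, so `other_weight` is a placeholder parameter, and the function names and example papers are invented for illustration. It assumes 1-based author positions and gives a sole author (who is both first and last) the full weight.

```python
def position_weight(position, n_authors, other_weight):
    """Weight for an author at 1-based `position` among `n_authors`.

    First or last author: full weight. Second or second-to-last: half.
    Anyone else: `other_weight`, a placeholder for the unspecified 'pittance'.
    """
    if not 1 <= position <= n_authors:
        raise ValueError("position must be between 1 and n_authors")
    if position in (1, n_authors):
        return 1.0
    if position in (2, n_authors - 1):
        return 0.5
    return other_weight


def researcher_score(papers, other_weight):
    """Sum of journal IF x position weight over (journal_if, position, n_authors) tuples."""
    return sum(jif * position_weight(pos, n, other_weight) for jif, pos, n in papers)


# Hypothetical publication record: (journal IF, author position, author count).
papers = [(4.0, 1, 5), (3.0, 2, 6), (10.0, 4, 8)]
print(researcher_score(papers, other_weight=0.1))  # 6.5
```

Even in this toy version, being a middle author on a paper in a 10.0 journal counts for a quarter as much as first authorship in a 4.0 journal, whatever either paper actually contributed.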