On Wednesday afternoon (at 1:10 pm EDT, to be precise), Thomson Reuters released its 2012 Journal Citation Report (JCR), an annual collection of citation metrics on the performance of scholarly journals. While the JCR contains many statistics, most publishers and editors await a single metric: the Journal Impact Factor.

The Journal Impact Factor (JIF) is a single statistic that summarizes the average citation performance of the articles a journal published in the two preceding years. For example, the 2012 JIF for journal X is the sum of all 2012 citations to articles published in 2010 and 2011, divided by the total number of articles published in 2010 and 2011. This is the simple explanation. Readers interested in how Thomson Reuters identifies and classifies what goes into the numerator and denominator of this equation are encouraged to read Hubbard and McVeigh (2011) and McVeigh and Mann (2009).
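The sketch below works through the arithmetic of the simple two-year calculation described above. It leaves out the rules Thomson Reuters applies to classify citable items. The function name `impact_factor` and all the figures for journal X are hypothetical.

```python
def impact_factor(jif_year, citations_in_jif_year, citable_items):
    """Simple two-year JIF: citations received during `jif_year` by items
    published in the two preceding years, divided by the number of items
    published in those years.

    citations_in_jif_year: {publication year: citations received during jif_year}
    citable_items:         {publication year: number of articles published}
    """
    window = (jif_year - 1, jif_year - 2)
    numerator = sum(citations_in_jif_year[y] for y in window)
    denominator = sum(citable_items[y] for y in window)
    return numerator / denominator


# Hypothetical journal X: all numbers are invented for illustration.
cites_2012 = {2010: 500, 2011: 400}  # citations made in 2012, by cited year
items = {2010: 100, 2011: 150}       # articles published, by year
jif_2012 = impact_factor(2012, cites_2012, items)
print(round(jif_2012, 3))
```

Here, 900 citations in 2012 to 250 articles published in 2010 and 2011 give a JIF of 3.6.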

I’m not going to touch on the weaknesses of the JIF or its misuse by academia. For some editors, especially those whose journals perform poorly on citation metrics or who have little to lose, criticizing the JIF has become a regular pastime. Every month, dozens of editorials are written on this topic, and the high season for critique comes just after the Journal Citation Report is published in mid-June.

While many scholars and editors eschew the notion of attributing the success of individual articles to the prominence of the journal, scientific authors continue to place great importance on the Journal Impact Factor when deciding where to submit their manuscripts. Open access authors are no different. Many institutions around the world have adopted a direct compensation model that rewards authors based on the Impact Factor of the journal in which they publish, an incentive that locks many of the world’s authors into this unidimensional measure of journal performance.

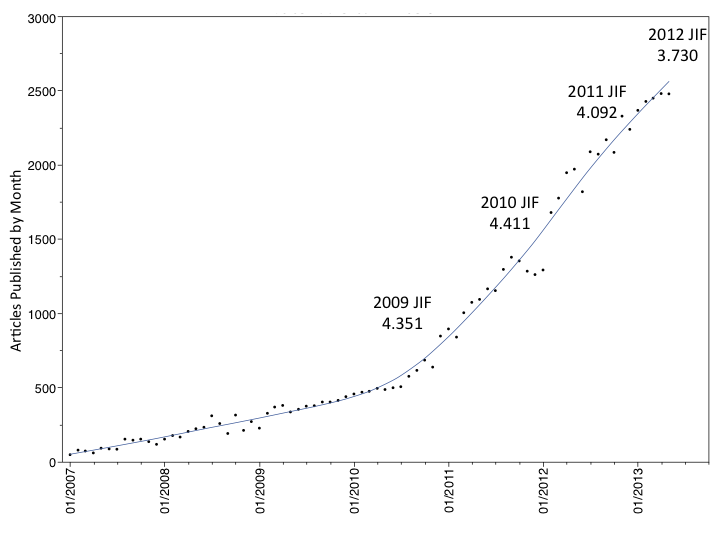

Despite its founders’ seeming disdain for the JIF, when PLOS ONE received its first JIF for 2009 (4.351), authors around the world responded by flooding the journal with manuscript submissions. Several months later, the effect appears in the number of articles published in PLOS ONE (see figure). In 2012, PLOS ONE published 23,464 articles, making it the largest journal the world has ever seen. Editors of biomedical journals with comparable JIFs could feel the gravity of PLOS ONE dragging down their own flow of manuscript submissions. The following year, PLOS ONE received its second JIF (4.411). We have witnessed its rise; now prepare for its fall.

The fall of PLOS ONE’s JIF is to be expected, given how the Journal Impact Factor is calculated and given the structure and editorial policy of the journal. The JIF is a backward-looking metric: it is based on the performance of articles in their second and third years of publication and then reported six months later. As a result, changes in submission patterns take several years to show up in the JIF.

For example, when PLOS ONE’s 2009 JIF was reported in June 2010, it reflected the performance of articles published in 2007 and 2008. At that time the journal was still young and small, had the backing of prominent scientists, and, more importantly, had no JIF. The effect of the flood of manuscripts submitted in the third quarter of 2010 would not be felt for another two years.
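The reporting lag can be laid out year by year. This sketch makes three assumptions:

* Each JCR appears in June of the following year (the post says mid-June).
* 2011 is the first full calendar year of post-JIF publication, because PLOS ONE's first JIF was released in June 2010.
* Submission-to-publication delays can be ignored.

`jif_window` is a hypothetical helper.

```python
# Assumption: PLOS ONE's first JIF (for 2009) was released in June 2010,
# so 2011 is the first full calendar year of post-JIF publication.
FIRST_JIF_RELEASE_YEAR = 2010


def jif_window(jif_year):
    """Return (cited publication years, approximate release, full post-JIF years)."""
    cited_years = (jif_year - 2, jif_year - 1)
    release = f"June {jif_year + 1}"  # assumed mid-year release
    full_post_jif_years = [y for y in cited_years if y > FIRST_JIF_RELEASE_YEAR]
    return cited_years, release, full_post_jif_years


for year in range(2009, 2014):
    cited, release, post = jif_window(year)
    print(f"{year} JIF (released {release}): articles from {cited[0]} and {cited[1]}; "
          f"full post-JIF years: {post or 'none'}")
```

Running it shows that no JIF before the 2012 edition covers a full post-JIF year. Articles from 2010 reflect the submission wave only partly.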

Similarly, when the 2010 JIF was reported in June 2011, it reflected the performance of articles published in the pre-JIF era (2008 and 2009). The wave of post-JIF submissions was first detected only last June, in the 2011 JIF, which covered articles published in 2009 and 2010. Partly as a result, the 2011 JIF dropped 7% from the previous year. (Note: journals that are growing quickly tend to have depressed JIFs.) The 2012 JIF is the first citation metric to encompass a full year of articles published in the post-JIF era. Based on the performance of articles published in 2012, next year’s (2013) JIF will likely decline further.
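A toy model can check the note about fast-growing journals. The sketch assumes, hypothetically, that every article receives 2 citations in the calendar year after publication and 5 in the year after that, whenever it was published. Only publication volume changes between the two scenarios.

```python
# Hypothetical per-article citation curve, identical for every cohort, so any
# change in the JIF below comes only from changes in publication volume.
CITES_FIRST_YEAR_AFTER = 2.0   # citations in the calendar year after publication
CITES_SECOND_YEAR_AFTER = 5.0  # citations in the calendar year after that


def jif_from_volume(items_prev_year, items_two_years_back):
    """JIF when every article follows the same hypothetical citation curve."""
    cites = (items_prev_year * CITES_FIRST_YEAR_AFTER
             + items_two_years_back * CITES_SECOND_YEAR_AFTER)
    return cites / (items_prev_year + items_two_years_back)


steady = jif_from_volume(1000, 1000)    # output flat from year to year
doubling = jif_from_volume(2000, 1000)  # output doubled in the most recent year
print(steady, doubling)
```

With flat output, the JIF is 3.5. Doubling output in the most recent year lowers it to 3.0, even though no individual article performs worse. The younger, less-cited cohort now makes up a larger share of the denominator.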

The reporting delay of the Journal Citation Reports is partly responsible for the boom and bust we are witnessing in PLOS ONE’s JIF. The other factor fueling the decline in this particular metric is the editorial policy of the journal.

In smaller journals that base acceptance partly on novelty and significance, editors can halt a downward spiral through concerted efforts. They can attract high-impact articles and reviews and keep perceived low-impact articles from being accepted. Because no one can predict perfectly how any article will perform, the unpredictability of individual article performance can also push a journal out of a downward spiral.

For very large journals that publish thousands (or tens of thousands) of articles per year, any one high-performing article has almost zero influence on the JIF. These journals are playing a large numbers game that makes them insensitive to the performance of individual star articles: they operate entirely on the bulk performance of the article market. When PLOS ONE was still young and small, submissions by influential researchers had a real effect on the performance of the journal. Some of these researchers may have defected to other journals promising revolutionary new forms of high-impact open access scientific publishing, like eLife. If they are still publishing with PLOS ONE, their effect is drowned out by the new author population that followed them there.
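A megajournal's insensitivity to individual star articles follows from simple averaging. Adding one article shifts the mean by (star citations − mean) / (n + 1). The figures for the star paper and the field average below are hypothetical.

```python
def jif_shift_from_star(n_items, mean_cites, star_cites):
    """Change in a journal's JIF when one extra article with `star_cites`
    citations joins `n_items` articles averaging `mean_cites` citations."""
    with_star = (n_items * mean_cites + star_cites) / (n_items + 1)
    return with_star - mean_cites


# Hypothetical figures: a star paper with 200 citations in a field averaging 3.
small = jif_shift_from_star(100, 3.0, 200)     # about 100 citable items in the window
large = jif_shift_from_star(20_000, 3.0, 200)  # about 20,000 citable items in the window
print(f"small journal: +{small:.3f}, megajournal: +{large:.4f}")
```

Under these assumptions, the star paper raises the small journal's JIF by nearly two points. It moves the megajournal's JIF by less than a hundredth of a point.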

The second reason why PLOS ONE’s editorial policy will not be able to correct their declining JIF is based on the differential citation patterns of the biomedical literature coupled with the preference of authors to be published in a journal that meets (or exceeds) the citation potential of their own articles. Journals in certain biomedical fields (e.g. cancer research) tend to have much higher JIFs than other fields (e.g. plant taxonomy). In this example, plant taxonomists would be better off publishing in PLOS ONE–as the JIF would likely be higher than any plant taxonomy journal–and cancer researchers would be better off publishing in a disciplinary journal, where the JIF would not be depressed by the publication of other fields of biology. When operating in a journal performance market (rather than an article performance market), multidisciplinary journals reward the underperformers and punish the overachievers.
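The field-mixing argument can be illustrated by treating a multidisciplinary journal's JIF as total citations divided by total items across all its fields. The citation rates below are invented for illustration and are not actual oncology or plant taxonomy figures. `pooled_jif` is a hypothetical helper.

```python
def pooled_jif(fields):
    """JIF of a journal that mixes fields: total citations over total items.

    fields: {field name: (citable items, citations to those items)}
    """
    total_items = sum(items for items, _ in fields.values())
    total_cites = sum(cites for _, cites in fields.values())
    return total_cites / total_items


# Hypothetical field-level volumes and citation counts (not real data).
mix = {"oncology": (1000, 6000), "plant taxonomy": (1000, 1000)}
journal = pooled_jif(mix)
for field, (items, cites) in mix.items():
    own = cites / items
    verdict = "gains" if journal > own else "loses"
    print(f"{field}: field rate {own:.1f}, journal JIF {journal:.1f} -> {verdict}")
```

With equal volumes, the pooled JIF of 3.5 sits above the plant taxonomy rate and below the oncology rate. In this sense, such a journal rewards the underperformers and punishes the overachievers.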

Other multidisciplinary journals (e.g. Science, Nature, PNAS) run into the same problem, where the citation dynamics of one field (e.g. planetary science) drag down the performance of others (e.g. oncology). However, these journals have strict editorial guidelines that select manuscripts from the best of each field and reject the rest. These journals also have Editors in Chief who, like captains of their own ships, are entrusted to develop the focus and direction of their journals. If a journal is publishing too much in one field, or not enough in another, the editorial policy can be changed. PLOS ONE has no Editor in Chief. As far as I can tell from its editorial and publishing policies, it sets no targets or limits on how many articles are published in any one field. If disciplinary journals are like small cruisers with captains on deck, PLOS ONE is, by design, more like a barge with no captain and no engine room, left to the direction of the currents.

This deliberate design, along with the vision and dedication of its founders, has served PLOS ONE well, allowing it to be wildly successful. Yet the journal was built for scale and efficiency, and under these conditions its founders have very little control over the direction of their ship.

The open access landscape of 2013 is radically different from that of 2007. Not only do other multidisciplinary open access journals in the biomedical sciences now compete with PLOS ONE, but many specialized titles do as well. They offer fast publication, methods-based review, and an array of post-publication metrics. Journals like F1000Research even publish before review. PLOS ONE now competes with for-profit publishers whose journals are not required to generate surpluses and may run indefinitely, even at a loss.

The shape of the PLOS ONE publications curve suggests that the journal may be reaching its asymptote, its maximum publication output given the ability of authors to generate manuscripts. However, this forecast assumes some equilibrium in the market. Unless PLOS ONE has cultivated a strong and loyal group of authors, a decline in their 2012 Impact Factor will likely signal the year when authors (at least those for whom the JIF is an important factor) turn away from the megajournal. They may return (as some hope) to a discipline-based model of publishing.

Discussion

63 Thoughts on "The Rise and Fall of PLOS ONE's Impact Factor (2012 = 3.730)"

Generally, articles receive fewer citations in the first year after publication than in the second. PLOS ONE more than doubled in size, from 6,722 articles in 2010 to 13,781 in 2011. Given that, I would expect the Impact Factor to fall regardless of any change in the longer-run citation rates of the articles published. The monthly growth figures compound this effect. Output grew massively throughout 2011, so the bulk of 2011 articles were published in the latter half of the year. That gave them less time to accrue citations for the 2012 Impact Factor.

Although you mention this effect briefly, I would be interested to know how much of the decline you think can be explained by the growth and how much relates to the other factors you mention.
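The point about within-year growth can be sketched by estimating how long, on average, a year's articles have been available by the end of the following (JIF) year. This is only a rough proxy for citation opportunity. The mid-month publication assumption and the monthly counts below are hypothetical, not PLOS ONE data.

```python
def mean_months_of_exposure(monthly_counts):
    """Average months between publication and the end of the *next* calendar
    year (the JIF citation year), weighted by monthly output.
    Assumes each article appears in the middle of its month."""
    total = sum(monthly_counts)
    weighted = sum(count * (24.5 - month)
                   for month, count in enumerate(monthly_counts, start=1))
    return weighted / total


flat = [100] * 12                                  # same output every month
ramping = [100 * month for month in range(1, 13)]  # output rising steadily all year
flat_exposure = mean_months_of_exposure(flat)
ramping_exposure = mean_months_of_exposure(ramping)
print(flat_exposure, round(ramping_exposure, 2))
```

Steady output gives an average of 18 months. Output that rises through the year lowers the average to about 16 months, so more of the cohort is young when its citations are counted.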

This is a really good question! To answer it, one would need article-level citation data for several years. In a regression model, you could control for the date of publication and see if there is a residual longitudinal trend. As a caveat, Thomson Reuters derives the Journal Impact Factor through a different process from the one that produces the article-level Web of Science (WoS) data. As we all know, that makes it difficult to derive a journal’s Impact Factor from the WoS data with precision.
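As a rough illustration of the regression idea, the sketch below fits ordinary least squares with two regressors: months of exposure (time since publication) and publication cohort. After controlling for exposure, a nonzero cohort coefficient would be the "residual longitudinal trend."

The data are synthetic and noise-free, generated from made-up coefficients, so the fit simply recovers them. Real article-level citation counts are noisy and highly skewed. A count model such as Poisson or negative binomial regression would usually be more appropriate. All names and values here are hypothetical.

```python
def ols(rows, y):
    """Ordinary least squares via the normal equations (X^T X) b = X^T y.
    `rows` holds each article's regressors; an intercept column is prepended."""
    X = [[1.0, *row] for row in rows]
    k = len(X[0])
    # Augmented matrix [X^T X | X^T y]
    A = [[sum(x[i] * x[j] for x in X) for j in range(k)]
         + [sum(x[i] * yi for x, yi in zip(X, y))]
         for i in range(k)]
    # Gauss-Jordan elimination with partial pivoting
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        for r in range(k):
            if r != col:
                factor = A[r][col] / A[col][col]
                A[r] = [a - factor * b for a, b in zip(A[r], A[col])]
    return [A[i][k] / A[i][i] for i in range(k)]


# Synthetic, noise-free data from a hypothetical model:
# citations = 1.0 + 0.5 * months_of_exposure - 0.8 * cohort
TRUE_COEFS = (1.0, 0.5, -0.8)
rows, y = [], []
for cohort in range(3):                          # three hypothetical publication years
    for month in range(1, 13):
        exposure = 36.5 - (12 * cohort + month)  # months until a fixed census date
        rows.append((exposure, cohort))
        y.append(TRUE_COEFS[0] + TRUE_COEFS[1] * exposure + TRUE_COEFS[2] * cohort)

intercept, per_month, cohort_trend = ols(rows, y)
print(round(per_month, 3), round(cohort_trend, 3))
```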

Despite what you portend, I envisage continued growth of PLoS ONE. It seems most authors submitting to this journal are less bothered by impact factors. What is most appealing is PLoS ONE’s editorial policy and its fast yet rigorous peer review (on average, one gets reviewers’ comments within 4 weeks).

Indeed, even before PLoS articles are cited, you can clearly see their impact in post-publication article metrics, particularly PDF downloads and sharing on social media.

While citations are important, I think they can sometimes mislead. I have heard of ground-breaking work that took a long time to get cited (or even published), yet many retracted “Nature” and “Science” articles continue to be cited. The history of science humbles me greatly: what seems worthless now (i.e. not cited) may be recognized as a masterpiece in the future (even centuries from now). That is where the PLoS model makes sense.

The question no one seems to ask is what it is reasonable to expect the JIF of a journal like PLoS ONE to be. Suppose it aims to publish a representative slice of the valid scientific literature without selecting for ‘importance’ or subject area. Then PLoS ONE should have a JIF around the average of the entire scientific literature. I’m not sure what that is. It is also worth looking at Scientific Reports, a journal with editorial criteria similar to PLoS ONE’s, which has just received its first-ever JIF of 2.927. Scientific Reports’ JIF is the 1,561st highest of the 8,000-plus journals listed. That puts PLoS ONE and Scientific Reports well above the median value, indeed inside the top 20%. If JIF is any measure, these anti-selective journals are outperforming the majority of scientific journals. They will continue to do so unless their JIFs ‘fall’ by dramatically more than the few tenths of a point that we are seeing here.

Also, I would be wary of identifying a plateau based on at most 2 data points in a graph as historically noisy as PLoS ONE’s monthly publication numbers.
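The percentile claim is simple arithmetic. This check uses 8,000 as a lower bound on the number of journals listed (the comment says "8 thousand plus"), so the resulting share is an upper bound. `top_share` is a hypothetical helper.

```python
def top_share(rank, n_journals):
    """Fraction of ranked journals at or above `rank` (rank 1 = highest JIF)."""
    return rank / n_journals


# Figures from the comment: rank 1,561 among at least 8,000 journals.
share = top_share(1561, 8000)
print(f"top {share:.1%}")
```

Rank 1,561 of at least 8,000 puts Scientific Reports within roughly the top 19.5%. PLoS ONE has a higher JIF (3.730 versus 2.927), so it ranks above that.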

This seems mathematically pre-determined — that is, if you increase the denominator in the IF calculation, you decrease the IF. What’s interesting is that the IF hasn’t gone down as much as you might expect given the rapid increase in PLOS ONE’s article counts.

I feel compelled to point out how misleading the graph is. Not only are the IF numbers placed as if they are data points correlated with the underlying line, but the timing is off — that is, the 2012 IF actually should be a year in arrears, because it only covers the prior two years. In fact, each IF number should be pushed to the left by a year at least (you could argue each should be pushed back to the January of the final year of each two-year cycle, so an 18-month pushback would probably be even clearer), and a separate y-axis for IF with a line tracking the four data points would have made the two major data sets clear at a glance. As it is, this graph is a great example of how data can be plotted in a confusing manner.

To me, the data points are more akin to these (I did not take the time to redraw a line for the IF data, which would be inversely related to article counts, another big problem with the chart, but I did move the last data label in a meaningful direction):

Kent, I’m sorry that you are so confused by the graph. The point was not to explain how the JIF was a function of the publication rate, but how the JIF is a strong signal to potential authors, who respond with submissions to the journal. You’re trying to imply the reverse causal connection.

It’s a confusing graph. If that was your intent — to show that the IF signal affected submissions — it’s ineffective. You need more zoned detail to show that, and peering at the data, I’m not sure the data are there to support that idea. So, bad graph — timing elements are placed against a line with no clear correlation, the IF items are placed as if they relate to the graphed data points, there’s no axis for the IF so you’re moving them vertically along a line of submissions, which creates a visual message that has no meaning. If you wanted to make that point, you should have put the IFs along the bottom, with no dynamic relationship with submissions, and created a zone of submissions following each announced IF, probably adding the average time for PLOS ONE from submission to publication to adjust for latency in the system.

Information graphics (good ones) are hard to do right. This one doesn’t accomplish its intended purpose, and sends inadvertent signals that have no real meaning or context.

Kent is right: with such a growth curve, not to mention authors’ changing perceptions of the journal’s quality, it will take years to reach any kind of (quasi-)steady-state JIF. I also favor Chris’s analysis of PLOS ONE eventually approaching an average JIF value, though I expect it to be in the second quartile.

To further show how this graphic could be clearer (and I think better showing your point), try this zoned model with some assumptions (at least clearly stated, if not correct — feel free to correct them).

This shows the relationship I think you’re trying to cover with less chance for confusion or bad signal.

This is a far more confusing and less relevant graph. The original graph showed the IF and the resulting submissions. This is not a confusing message. It does not show, or try to show, the articles that contributed to the IF.

The point of the graph is that PLOS obtained an IF, and then submissions rose. Therefore people care about the IF enough for it to be a (possibly the deciding) factor in submission.

Submission of an article to a journal is a very human event. Do I submit to a journal with no IF that may never be read (even if I believe strongly in OA), or do I submit to a higher-IF journal that may not be OA but that I know has an audience? What is the long-term impact on my career of each?

That PLOS became a journal that could offer both (to a degree) is likely the reason why submissions rose. The minimal editorial influence on the journal which helped raise the submissions is also now contributing to the lowering of the IF. It will be interesting to see the results of this.

I apologize, I misinterpreted what the graph was trying to show and you can ignore my first point.

I disagree. The graph I’m proposing factors in exactly what you’re talking about, makes it clear which IF related to which submission period (or attempts to do so, and makes its assumptions clear), and illustrates that we can’t yet really determine anything about the new IF, which the other graph doesn’t show at all. The latency in the signal to the response is really important in Phil’s hypothesis, but his graph doesn’t visually capture this at all.

I’m curious about this paragraph from your post:

“The second reason why PLOS ONE’s editorial policy will not be able to correct their declining JIF is based on the differential citation patterns of the biomedical literature coupled with the preference of authors to be published in a journal that meets (or exceeds) the citation potential of their own articles. Journals in certain biomedical fields (e.g. cancer research) tend to have much higher JIFs than other fields (e.g. plant taxonomy). In this example, plant taxonomists would be better off publishing in PLOS ONE–as the JIF would likely be higher than any plant taxonomy journal–and cancer researchers would be better off publishing in a disciplinary journal, where the JIF would not be depressed by the publication of other fields of biology. When operating in a journal performance market (rather than an article performance market), multidisciplinary journals reward the underperformers and punish the overachievers.”

First, can you cite any studies that show that authors have a preference to be published in journals with high journal impact factors? Second, (how) do you think authors calculate the citation potential of their own articles? Any literature on that?

Speaking as an author, and someone who does like to see his work cited, I can honestly say that having my work cited more times is not the only consideration that factors into my decision of where to try to publish an article. But even if it were, I don’t think I’d consider the JIF in the way you seem to indicate would be rational or prudent (for the plant taxonomist and the cancer researcher). For a researcher from any field, if what they want is to receive the highest number of citations for their individual articles, it doesn’t follow that they should automatically seek to publish those articles in a journal with a higher impact factor. Instead, they should seek to publish their articles wherever their audience is more likely to read them. Figuring out one’s audience is difficult, of course. But it cannot be done simply by looking at the JIFs of various journals and aiming for the one with the highest number.

First, can you cite any studies that show that authors have a preference to be published in journals with high journal impact factors?

It’s a pretty common finding when studies are performed. The study linked in the post above is here:

http://arxiv.org/abs/1101.5260

A couple of others can be found here:

http://www.sr.ithaka.org/research-publications/us-faculty-survey-2012

and here:

http://www.sr.ithaka.org/research-publications/ithaka-sr-jisc-rluk-uk-survey-academics-2012

Awesome, thank you! But this makes the question of the rationality of this preference loom even larger. And that, in turn, calls into question the ethics of using such metrics as the JIF.

It seems perfectly rational to me from the researcher’s point of view–if career advancement and funding decisions are based on the JIF, then it makes sense to pursue it. The irrationality lies with those choosing the JIF as the determining factor in making those decisions, not the researchers who are merely following the most pragmatic path to moving up the ladder.

Interesting. I agree with you that there is an irrationality in choosing the JIF as the determining factor for career advancement (say, on the level of promotion and tenure criteria). But I disagree that it is rational to pursue career advancement according to irrational standards.

I think it’s rare for anyone’s career advancement to be tied *only* to the JIF of the journals in which they publish. This is why in my original comment, I brought up the supposed connection between citations of one’s articles and the JIF of the journals in which they are published. My question was whether it’s rational for a researcher in pursuit of the maximum number of citations for her individual article to pursue publication in the highest JIF journals.

I don’t think it is. What’s rational is to pursue publication in the journal that will reach a maximum number of members of your audience — people who will be impacted by and more likely to cite your work. That may or may not be the readers of a journal with a high JIF.

Given the level of competition in academia these days, I think it’s rational to pursue advancement in any ethical manner possible. You’re right that JIF isn’t the only determining factor in advancement or funding, but it remains a factor.

I met with a colleague who recently went through the job market grind (successfully, I’m happy to say) and her experience was that for every job she applied to, there were at least 400-500 qualified applicants and around 30-40 outstanding applicants. The search committees had to narrow those applications down to 5 candidates to interview. While we’d all like to think that the best way to judge a researcher’s work and potential involves a deep understanding of what they’ve done and what they plan to do, it’s simply not practical to go that far into depth for 500 job applicants. So there have to be some shortcuts.

I do agree with you that citation counts for individual articles are in many ways a much better metric than JIF. But they are also a much slower metric. If I’m a postdoc ready to hit the job market and I publish an article covering my current work, I don’t want to wait 2-3 years for citations to accumulate; I want to start my own lab now. I can point to an article in a top journal, one that has been reviewed by a panel of my peers and judged by them to meet the standards required by that journal. I think that remains a helpful, if not completely definitive, pointer toward the quality of my work.

Yes, I agree — the slowness of citation counts is an important limiting factor on their value. That’s one reason I really like altmetrics as a complement to other article level metrics. Altmetrics are fast! I also suspect that the degree to which JIF correlates with the reputation of a journal varies by field. In the humanities, I’d guess JIF matters less. That’s perhaps why the survey of British researchers you pointed me to earlier (thanks again for those links!) frames their question in terms of “High impact factor or excellent academic reputation.” At first, I thought this could be read as equating the two (and I suppose it could be). But it also opens the door to separating JIF and academic reputation.

I’m still waiting to see more from altmetrics that offers indications of quality, rather than indications of interest or popularity (both useful things to measure, but not necessarily correlative with quality). That would be the goal, or perhaps at least moving to better existing metrics to replace the JIF (or maybe using a panel of metrics rather than just one).

I do agree that most researchers have in their heads a hierarchy of journals in their field that may or may not correspond exactly to the JIF. I do think there’s a lot of reaction along the lines of “oh, you published a paper in Journal X, that’s impressive,” rather than, “oh you published an article in a journal with Impact Factor X, that’s impressive.”

Which is why there is DORA (the San Francisco Declaration on Research Assessment).

The hypothesis that the takeoff was due to the high IF is plausible but remains to be tested. The curve looks a lot like what my team found for emerging fields, so it might just reflect the emerging PLoS business model, independent of the IF. We will have to see what the curve does over the next two years. If it falls, the IF hypothesis looks good. If not, not so good.

I did not mean that my team discovered this pattern; Price did that in the 1950s. We just explored the causality and the possible role of communication, using a contagion model. One scenario here is that the high IF certified PLoS One as real, allowing the takeoff, but that a declining IF will now have little effect.

Reblogged this on jbrittholbrook and commented:

This should be read along with Paul Wouters’ post (below). There is a lot of confusion surrounding the Journal Impact Factor, I think.

An interesting column, but I take issue with the central premise: that authors are submitting to PLOS ONE largely or entirely because of its JIF. You state:

“Despite its founders seeming disdain for the JIF, when PLOS ONE received its first JIF for 2009 (4.351), authors of the world responded by flooding the journal with manuscript submissions.”

Submissions to PLOS ONE have grown inexorably year on year — an astonishing 23,000 last year — while the JIF has remained essentially flat over the past 4 years. (The new JIF — 3.73 — is a 15% dip from the inaugural JIF.) Is there any evidence to support the notion that this rise was sparked by that debut JIF, rather than the many other purported attributes of PLOS ONE — the unique tenor of the review process, rapid publication, reduced frustration with meddling reviewers/editors, and support of the open access movement in general?

You close with this:

“A decline in their 2012 Impact Factor will likely signal the year when authors (at least for whom the JIF is an important factor) turn away from the megajournal and return (as some hope) to a discipline-based model of publishing.”

I would not be surprised if the PLOS ONE JIF slides in future years, but I’d expect that based on the ever growing long tail of published articles. It’s hard for me to believe that authors en masse are going to select their chosen journal based on a modest dip in JIF. If they do, they really have their priorities backwards.

“Is there any evidence to support the notion that this rise was sparked by that debut JIF, rather than the many other purported attributes of PLOS ONE — the unique tenor of the review process, rapid publication, reduced frustration with meddling reviewers/editors, and support of the open access movement in general?”

Unless the journal changed to include these attributes at the same time as the JIF appeared, it seems unlikely that they precipitated the rise in submissions.

Now, it is possible that people saw that the journal had a JIF and considered it a more “credible” journal purely because it had one, rather than the actual number itself. But this seems unlikely.

I did an analytical exercise. I looked at the 2012 JIFs of the journals where I published my last 11 papers (over the last 2 years). The JIFs of 4 of them went up, while those of 7 went down.

The distribution is as follows (from largest increase to largest drop): +11%, +3%, +0.3%, +0.3%, -2%, -6%, -11%, -13%, -16%, -23%, -23%.

So on average my impact dropped 7.2%. Coincidentally the JIF of PLOS ONE dropped 8.8%.

Before concluding that this proves PLOS ONE is the average of the field, note that my field of research does not intersect that of PLOS ONE (i.e. I cannot publish my work there). But it does suggest that JIFs may have generally dropped this year after peaking last year (true for most of my journals). PLOS ONE is well within my range of deviation, so there is nothing to be alarmed at.
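The 7.2% figure above is an unweighted mean of the eleven percentage changes. A quick check:

```python
# Percentage changes in 2012 JIF for the eleven journals listed in the comment.
changes = [11, 3, 0.3, 0.3, -2, -6, -11, -13, -16, -23, -23]
mean_change = sum(changes) / len(changes)  # unweighted mean, as in the comment
print(f"mean change: {mean_change:.1f}%")
```

The changes sum to −79.4 percentage points, which gives a mean of about −7.2%.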

When I go to my society’s conferences, our publisher arms me with a list of the top-cited authors in our field. I make sure to court these people and encourage them to submit manuscripts to our journal. Why? Because, as this article points out, a couple of stars can make a difference to a small journal.

The “editor” of PLOS ONE (if there were one) would have less interest in courting these stars, because the effort required would make little difference in the JIF. Here’s a case where the little guy can compete on better terms with the big guy!

This is a “Gordian knot” discussion. PLoS ONE is receiving an “ecological” impact factor. Remember: it publishes no reviews, just scientific reports, and the journal does no selection of eligible documents. With this number of papers touching almost everything in biomedicine, PONE’s IF reflects the average impact of the biomedical sciences. So PLoS ONE is measuring life sciences impact. It is relatively easy to predict that when the number of papers reaches a plateau (it is still increasing year after year), its IF will stabilize too.

Maybe I shouldn’t say anything, since I have nothing positive to say, but it is hard to resist. So many words expended on so little! It may be true that some authors think that JIF is an important consideration. But there is now a good deal of evidence on the unreliability of JIF and its rather weak correlation with the number of citations of articles in the journal (for example http://www.frontiersin.org/human_neuroscience/10.3389/fnhum.2013.00291/abstract). Research assessment is also shifting toward citation-based measures at the individual article and researcher level, and there is the DORA declaration, etc.

I really can’t understand the point of the article unless it is an attempt at schadenfreude (one that misses the mark). Perhaps the author is one of the “some” who hope for a return to the good old days when authors knew their place. My opinion is that change is unstoppable and that publishers have been disintermediated, not before time. How about discussing how legacy publishers can adapt and actually add value to scholarly publishing, since it is so unclear to many of us that they can? That would be worth writing about.

As correctly pointed out, if the denominator rises at a proportionally FASTER rate than the numerator (say, t^3 vs. t^2, where t is the time lapse), the ratio falls. That is one of the weakest Achilles’ heels of the JIF. In contrast to the ancient hero, the JIF has many such heels, making it a true centipede ;-). Anyway, I think the citation index (the h-factor, to be more precise, with self-citations excluded) is a much fairer parameter, although it also has its own heels (let’s say it is an insect 😉).

Igor Katkov
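For readers unfamiliar with the h-index (the "h-factor" mentioned above), here is a minimal sketch. The h-index is the largest h such that h papers each have at least h citations. The optional self-citation filter assumes each paper's self-citation count is already known. The function names and example counts are hypothetical.

```python
def h_index(citation_counts):
    """Largest h such that h papers each have at least h citations."""
    counts = sorted(citation_counts, reverse=True)
    h = 0
    for rank, cites in enumerate(counts, start=1):
        if cites < rank:
            break
        h = rank
    return h


def h_index_without_self_citations(papers):
    """papers: list of (total citations, self-citations) pairs."""
    return h_index(total - self_cites for total, self_cites in papers)


print(h_index([10, 8, 5, 4, 3]))
print(h_index_without_self_citations([(10, 2), (8, 1), (5, 2), (4, 0), (3, 3)]))
```

For citation counts of 10, 8, 5, 4, and 3, the h-index is 4. Removing the hypothetical self-citations in the second example lowers it to 3.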

Well, there are a few more factors behind this so-called “decline” in the JIF of megajournals like PLoS ONE. Unlike traditional, stream-oriented journals, PLOS ONE has no monthly publication limit. It publishes all papers from different streams that genuinely deserve to be published and meet its publication criteria. Traditional journals, by contrast, work hard to control the number of papers they publish per month or year. This factor plays a major role in the calculation of the JIF.

For a random article, the probability of receiving no citations or very few is much higher than the probability of being well cited. By restricting the number of publications, these traditional journals maintain their impact factors cleverly and artificially. In fact, these are the people who run this business and promote the importance of the JIF. This has created a kind of scientific nexus and lobbying that keeps at bay the true reach of innovative research from labs all around the world. Consider total citations and readership instead. I am sure both would be much higher for journals like PLoS than for traditional journals that desperately manage their publications per month to manage their JIF, an entirely artificial performance meter.

Personally, working in an area of interdisciplinary science, I feel that publishing in broad-scope journals like PLoS and BMC is more fruitful. Journals like PLoS ONE are important for a democratic system of scientific research; without any doubt, the quality of their publications and review system has been extremely democratic, unbiased and top class, much better than that of several traditional journals from reputed publishers with higher JIFs.

In the meantime, the PLOS ONE team also needs to adopt a more aggressive presentation and publication style, in which its main page is divided into various subsections/categories, i.e. a mega-journal with several daughter journals. The published articles could be sorted by category and presented in a more systematic manner. Let’s hope the spirit of journals like PLOS never gets bogged down by parameters like the JIF and its worshippers.

Added to this, I would like PLoS ONE to post a comparative chart on its page showing the percentage of manuscripts received from each nation, and the percentage accepted and published. I am sure this chart alone would reveal a big truth about the global acceptance of several high-JIF journals: one would find publications from all over the world in PLoS ONE, instead of publications restricted to certain labs and certain countries.

Ravi, I don’t fully understand your argument. First you suggest that journals being selective by subject area are not the way forward, then you suggest that PLOS launch additional journals based on subject areas. Isn’t that contradictory? Surely any issue PLOS has is with the structure of its website and search, rather than a need for new publications.

You also imply that the only benefit of traditional journals is a falsely inflated impact factor. Yet one issue I consistently encounter among scientists is that there is too much literature and it is too hard to know what to read. So one benefit of selectivity by subject is that it lets me easily see what is important in my field. Selectivity based on quality (e.g. Nature) ensures I read the most important breaking science, regardless of field. PLOS also has its place, ensuring the masses are published. Each has its purpose and position within the publishing ecosystem.

The question is whether the JIF has an impact on submissions to PLOS, and if so, how large a drop in JIF would affect submissions and how that would change the balance I mention above.

Hi Thomas!

I understand that this article projects a possible fall in PLOS ONE’s IF, given its already lower current IF and its continuous publication of articles from all fields instead of “selective” trimming. From the plot, a major reason for the fall in PLOS ONE’s IF is the publication of a large volume of articles from all fields, which this article also discusses, and it is indeed the major reason. You ask whether this fall in impact will influence the rate of submissions and, in turn, its IF. I feel it will not matter much. What you see as a contradiction in my statement is actually a way I suggested for PLOS ONE to tackle this situation, a situation you also described when you said you want to focus on your type of article instead of sorting through volumes of articles. This is something that troubles all readers of PLOS ONE. To reach the articles they are interested in, they have to dig a lot on the PONE page. Despite the many good articles published there, the desired reach is not achieved simply because of the poor presentation of articles on the PONE page. The beauty of PONE is its wide range, but that is also its weak point where readers are concerned. And I suggested a way to manage it. I never advocated selective trimming, which comes purely from the compulsion of traditional journals to limit the number of papers published per month to keep their IF intact. Obviously, the best and only acceptable trimming is the review system. Then why does one need hobnobbing to forcibly maintain a false image of respectability? Let it be settled between the scientist and an authentic review system concerned only with the quality of the science being presented!

Using keywords, PONE could take an innovative approach to presenting work from various fields, which would resolve both your complaint and mine, as well as improve its readership, reach and IF (which matters to many, especially those from the Jurassic world of paper publication, when print was the only medium and accessing articles was a snail-mail matter). A little code could classify the papers by keyword and break PONE into sub-journals within the main journal, so that readers could go directly to their area of interest. Similarly, the layout of each published article is important. Ask authors to furnish highlights and a main figure to display before the article on the journal page; that catches the eye immediately. When the volume is this high, no reader wants to waste time digging endlessly to find the kind of work they need. You then need to make a serious effort on presenting articles in batches, and what I suggested above solves this. There are several articles of interest to me that PONE publishes which I don’t even know about. Even my own articles published there get buried under the volume and nobody knows about them. But these things have nothing to do with the quality of the articles and of the review system there, which are without any doubt better than those of several higher-impact journals. Print journals can argue that since they publish in print, they cannot print as many articles as online journals like PONE. Then I would suggest to them that this is where they can apply selective screening, by deciding which articles appear in print and which appear online, since all journals today have online subscriptions as well. Why not do it in such a positive way?

There are several reasons why people prefer to send articles to PONE. Here are mine:

1) Open Access

2) High respectability among the fraternity

3) Just USD 500 for authors from developing nations, and unlike BMC they even give a full waiver on request. I have been able to publish all my articles without any cost, and at our institution we hardly ever get dedicated funds to publish papers. I deeply admire their generosity, which has enabled many people from developing nations to present their work at a global level; PONE has hardly ever let money be an obstacle to publication.

4) An impeccable review system, where I have witnessed a process that ensures justice to authors and several instances where reviewer abuse was greatly curtailed. The challenge system works far better there than at any other journal. All this makes it a democratic system of publication, unlike other journals, where reviewer abuse and the high-handedness of handling editors mostly go without any scrutiny.

5) Comparatively better speed of publication.

6) I get limitless space to describe my work, instead of getting bogged down by fathers of English asking me to show off my English-writing wizardry in a 4,000-word composition on my research, diluting the main objective of presenting the work: its details!

So if you ask me what I will do if the IF of this journal falls, I would tell you that this is a journal I respect to my core, with good reason, and I will keep sending my articles there. It is a journal with a great loyalty coefficient and fan following, which is rare among other journals. Obviously, I too would like readership for my articles, and I have suggested a way PONE could address that better.

I have sprayed enough words and views this time to make my stand clear, taking full advantage of the free, open-access and limitless writing space on this portal!

Ravi: being selective may indeed keep a journal’s IF high but journals have been selective for far longer than the IF has existed. They see selectivity as their mission. Ironically the problem you highlight with PLoS One, namely missing important stuff, is precisely why journals are selective.

But basically PLoS One and the selective journals are doing two different jobs. Think of it as the difference between getting into a football stadium and getting into a beauty contest, only in this case the beauty is that of important truth. I personally think that everything that is credible should be published and the mega-journals are doing that job. But ranking articles by importance is also useful and the selective journal system is doing that job.

Sir, you are correct that ranking sometimes matters. But do you think the existing JIF formula does justice in evaluating the true ranking? The irony is that such an irrational estimate of rank is used by a community that is supposed to be led by rationality and logic, namely scientists... and we blindly follow this tradition even though it is obsolete and in urgent need of modification for the current scientific world. Its formula is very tentative and half-hearted; factors like visibility, reading time, discussion, etc. should be included when calculating it. And it certainly needs to be normalized to cancel out the artificial management publishers do by restricting the total number of papers they publish. Neutralizing this single factor alone would give a better JIF evaluation. With the existing JIF formula, a journal publishing thousands of papers per month can never be fairly compared with a journal publishing just 10-20 articles per month. It is an absolutely irrelevant formula for sure. If one wants rankings, then please devise a pragmatic and correct JIF formula.

I am referring to the ranking of articles by their acceptance in a specific journal, not the ranking of journals via JIF. What the standard system of journals does that PLoS One does not do is sort and rank articles by the process of acceptance and rejection.

How would the “Nature” folks have ranked Einstein’s first two relativity papers now? When something truly innovative is published, who will judge its importance, if not the future? It is like a mowing machine that cuts a lot of weeds at the lowest level but can also cut down a future sequoia because it doesn’t fit any definition of a bush ;-)) Very often, truly innovative, revolutionary papers have been published first in small, “obscure” journals, just to avoid the idea being stolen by reviewers who reject the manuscript. Are you telling me that doesn’t happen at “Nature”? It happens in my field all the time!! Therefore, specialized journals are not well designed for discovering truly revolutionary work, as they rely heavily on a fixed list of reviewers, a “pack” so to speak, who in 99% of cases would reject a “black sheep” or “a white crow”. DARPA, for example, can do it much better.

DARPA is a funder not a publisher but they also use peer review, as do all the federal funders. Any human system based on judgement will have a level of error but that is no argument against it unless a better system is being argued for. Cars are less than 40% efficient but still very useful. Dumping 1.8 million papers into a big unsorted, unranked pile does not seem to be a good solution to the problem of misranking. Beyond that you are making a lot of empirical claims for which I doubt you have any data. Revolutionary papers are few but I imagine they often find their way into major journals. Scientists are not as stupid as you seem to think.

As for Einstein I was not aware that his papers were published in obscure journals. Can you document this?

Nice. According to this review article just out today, the lower the IF, the more reliable the science.

http://www.frontiersin.org/Human_Neuroscience/10.3389/fnhum.2013.00291/full

In that case, the science published in PLoS One just got more reliable over the last few years.

So the Impact Factor can make an article that is already written more or less reliable after the fact? Wow, that is one magical metric.

Magical, of course! What else is new about the IF? 🙂

The art of science is to avoid extremes and a digital “good guys – bad guys” mentality. The truth usually lies in the middle; practically nothing in real science has “1-0” options, and everything has its own pros and cons. Relativism? Maybe. But at least this notion is logical and doesn’t lead to absurdity. The article in “Frontiers” looks like quite the opposite, and if we follow it, we can really arrive at the Absolute Absurd ;-)) The JIF is not perfect, and I honestly prefer the h-factor, but nobody has proven a long-term NEGATIVE correlation between them. Thus, saying that all people (or the majority of those) who cite good, professional papers are stupid is a bit of a stretch of the imagination.

In the years considered for calculation of the 2012 JIF (2010 and 2011), PLOS ONE published 20,503 articles. Collectively these articles accrued more citations (76,475) than:

Nature (1,703 articles, 65,731 citations)

Science (1,733 articles, 53,769 citations), and

PNAS (7,378 articles, 71,842 citations).
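Because the JIF is a per-article average rather than a total, one quick way to set these totals beside the impact factors is to divide citations by articles. The Python sketch below uses only the counts quoted above; it treats every article as a citable item, so it approximates rather than reproduces the official JIF calculation, whose denominator counts citable items only. The dictionary and function names are illustrative.

```python
# Counts quoted above: (articles published 2010-2011, citations to those articles).
COUNTS = {
    "PLOS ONE": (20503, 76475),
    "Nature": (1703, 65731),
    "Science": (1733, 53769),
    "PNAS": (7378, 71842),
}


def citations_per_article(articles: int, citations: int) -> float:
    """Average citations per article: a rough, JIF-like ratio."""
    return citations / articles


for journal, (articles, citations) in COUNTS.items():
    ratio = citations_per_article(articles, citations)
    print(f"{journal:<9} total citations={citations:>6,}  per article={ratio:5.2f}")
```

Run on these numbers, the ordering flips: PLOS ONE leads on total citations but averages roughly 3.7 citations per article, versus roughly 38.6 for Nature, 31.0 for Science and 9.7 for PNAS.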

Unlike Nature, Science and PNAS, all those PLOS ONE articles were made free at the time of publication.

Google Scholar’s journal ranking lists PLOS ONE among the top 100 journals in the world.

arXiv doesn’t have an impact factor, and nobody denies its importance to the scientific community.

I’m sure that these are the numbers that really matter to many scientists when they choose to submit to PLOS ONE.

I have published in PLoS One twice, and I am proud of it. J. Biol. Chem. has a gang of editors who act like a fraternity club. If you are not a frat boy, you cannot publish there, especially if you are competing with one of the frat boys. PLoS has the widest array of possible editors and reviewers, and they are not exclusively from the USA (which gives journals like JBC a strong bias).

I can cite a dozen Nature papers that turned out to be total trash but were published in Nature because powerful institutions were behind them, and the manuscripts were prepared to be popular rather than to be quality research.

The reviews my manuscripts got at PLoS One were as good as, and even better than, the reviews at J. Biol. Chem., J. Mol. Biol. or ACS Biochemistry. At each of those three journals I could guess the type of the reviewers’ bias.

JBC, J. Mol. Biol., PNAS and Biochemistry artificially elevate their impact factors by making themselves an exclusive toy for those in power. The quality of science in PLoS One is as good as in J. Biol. Chem., J. Mol. Biol. or Biochemistry.

Thank God we have PLoS One, so I do not have to write papers in fear that some geezer in power will vent his frustrations on my back.

“JIF is based on citations per article not total citations so I do not see your point”

Exactly, David! I don’t see any cause for concern so far either; it’s statistics plus arithmetic, within a range of variation. Nobody and nothing grows indefinitely (maybe some nasty bacteria do ;-). Scientists shouldn’t behave like stockbrokers and panic at every 10% fluctuation; otherwise even the best things can collapse! It would be interesting to see the average h-factors of PLONE vs. others. I think PLONE is a unique combination of scientific quality and professional diversity with, at the same time, a lack of elitism: a balance that is very difficult to keep.

There is a reason why PLoS One gets more total citations than Nature or Science. Science always progresses in small steps; it is not a beauty pageant. Looking over a 30-year period, more good science will come from 30 years of PLoS One than from 30 years of Nature.

In 2000, a group of people not trained in medicinal chemistry published a paper in Nature reporting that NSAIDs can be used to treat Alzheimer’s disease. It looked fabulous: you just have to take ibuprofen or indometacin, and you will not get Alzheimer’s disease!

What the beauty-pageant reviewers were not able to see is that the authors used concentrations above 10 micromolar to observe the effects, concentrations at which the drugs were not known to have specific interactions. Now, if I want to publish in Nature that a previous Nature paper was trash, Nature will not give me that opportunity. That is why I have PLoS One, a shelter from those who try to make science look like a fashion show: a place where I can publish science as science, without worrying about who will spoil my review process.

Journals typically do not publish articles that simply attack other articles. They are not blogs. I fear you are simply ranting, not contributing to the discussion. Science as a social process is in fact analogous to a beauty contest because truth is beauty and deep truth is what science is after. Human judgement may often be wrong but it is central to the process of science. Results which are not used are useless. There is no alternative.

Zejco and David.

I agree with you both, partially with each of you. I think journals do, and MUST, publish “rebuttal” papers if those papers are supported by their own experimental data and/or mathematical calculations (or even common sense). It is deeply embedded in American and (nowadays) Western culture in general to avoid harsh critique, as it is often considered a personal attack. It is different in other civilizations, though (I use Huntington’s concept on that matter). In that respect, my culture is rather “confrontational”. However, it is closer to the Math and Physics environment: people can bloody each other’s noses at an international symposium and then go to the closest watering hole and continue to be best friends as if nothing happened. In a dispute, we obtain the truth. I hardly believe that can happen in the USA in the medical/biological world; “dispute” has far more legal connotations, and people stop shaking each other’s hands at the slightest public criticism.

And again, science is actually both small steps and quantum leaps, both hard work and a beauty pageant. Ask the spirit of Sir Isaac Newton about his presentations to the kings if you don’t believe me, and the spirit of Professor Leibniz on the subject of personal attacks. We may stand on the shoulders of those giants and see their greatness now, but at the time it was business as usual: all those “worker bees” brought small pieces of the puzzle so that the giants (otherwise very ordinary people) could assemble a larger picture.

To Zeiko:

Are you sure that, had PLONE existed in 1949, it would have rejected lobotomy? I am not. And you are stepping on very thin ice by bringing modern ethical standards and politics into this conversation: many people in the world consider experiments on laboratory animals or abortion so barbaric that they would go so far as to kill doctors and smash labs for their beliefs! I won’t be surprised if in 200 years humankind considers any “-tomy” barbaric!! My point is that PLONE would probably have gotten that lobotomy paper published even faster, as it is less conservative; many things at PLONE depend on a particular reviewer’s mindset, while at “Nature” there is a more or less unified set of standards. And as I said before, each approach has its pros and cons. It depends on what your goal is and what your real means are. I, for example, don’t have many “well established” full Harvard professors behind me, so I will start with PLONE. That might change in the future, though. I know one very well established professor who publishes in both, depending on the topic and the novelty of the data. PLONE, in his own words, is “more innovative” but at the same time “much more omnivorous” quality-wise.

Predicting the fall of PLoS presupposes that the majority of authors who publish there care much about the specific impact factor. However, the success may simply be driven by having an impact factor at all, combined with a low burden for publication. Take China as an example: its incentives don’t differ at all between a JIF of 1 and a JIF of 3, and the drop-off in cash payout between the 1-3 category and the <1 category is fairly low.