Peer review, journal reputation, and fast publication were selected by Canadian researchers as the top three factors in deciding where to submit their manuscripts, trumping open access, article-level metrics, and mobile access, a recent study reports.

The report, “Canadian Researchers’ Publishing Attitudes and Behaviours,” was prepared by the Phase 5 Consulting Group for Canadian Science Publishing, a non-profit organization that oversees the NRC Research Press.

The survey, conducted in February 2014, was sent to more than 6,000 Canadian authors publishing in a broad spectrum of scientific and technical fields, and resulted in 540 completed responses, a 9% response rate typical of similar surveys.

The report confirmed what larger, international surveys of researchers have reported previously: that peer review is an essential step prior to publication, and that many strongly held beliefs of researchers regarding free access to the scientific literature do not coincide with their own behaviors.

The survey suggests, however, that there is a disconnect between researchers’ apparent agreement with the principle of open access (i.e., that research should be freely available to everyone) and their publishing decision criteria. Although the vast majority of researchers (83%) agree with the principle of open access, the availability of open access as a publishing option was not an important decision criterion when selecting a journal in which to publish. In this regard, availability of open access ranked 6th out of 18 possible criteria. It was eight times less important than impact factor and thirteen times less important than journal reputation when selecting a journal.

To me, this disconnect does not underscore widespread hypocrisy in the scientific community, as even scientists who have familiarity with open access rate it much lower than other factors (viz. SOAP survey). Scientists have many identities, and the fundamental desire of the scientist-as-reader (access to everyone else’s work) does not always coincide with the fundamental desire of the scientist-as-author (recognition by one’s peers).

Moreover, openness is a central ethos of science (see Merton’s Normative Structure of Science), so it is not surprising that the phrase “open access” receives so much popular support. The structure of science (unlike, say, the financial industry) requires that findings be public in order to claim credit. And the more public, the better, which is why scientists do such a good job using peer-to-peer and informal networks for disseminating their work.

Consider page 11 of the Canadian study. While 97% of respondents relied on their institution’s subscription to access journal articles, the next most frequent response was contacting the author(s) directly for a copy (91%), followed by institutional repositories (76%), ignoring the article (75%), and social networking sites (50%). Pay per view took last spot at 27%.

Discovering that scientists support openness and then using that finding to advocate for overhauling national funding policies is like discovering that undergraduates love beer and then using that finding to advocate for underage drinking. To me, it is much more constructive to ask which features of the publication system scientists value most and work toward improving them. On this point, the Canadian survey does an excellent job.

Not surprisingly, the peer review process was overwhelmingly supported (97% of respondents agreed), followed by a journal’s ability to reach the intended audience (93%), and discoverability through major indexes and search engines (92%). Copy editing (77%) and layout and formatting (71%) still received majority support, as did advocating on behalf of authors when their work was being misused or when their rights were violated (71%).

As for the factors scientific authors considered when selecting a journal for manuscript submission, journal reputation scored highest (42.9 out of 100 possible points) followed by Journal Impact Factor (26.8), although in my mind journal reputation is a construct that incorporates its citation standing, among other attributes. Third place went to speed of publication (9.6), which, given the value scientists put on priority claims, is not surprising. “No page charges or submission fees” received 4.4 importance points–an issue very familiar to American society publishers.

Immediate Open Access (OA) publishing via the publisher’s website received just 3.3 out of 100 possible importance points, and OA publishing after an embargo period received just 2.2 points. As mentioned above, these results confirm prior author surveys, even those focused on authors with a history of publishing in OA journals.

The rest of the list includes a long tail of issues advocated by authors, librarians and advocacy groups, such as author-held copyright (1.9), article reuse policy (1.2), and third-party archiving (e.g. Portico and CLOCKSS, 0.39). I’m a little puzzled how authors can express the need for retaining their copyright while simultaneously wanting publishers to defend their work against misuse, but if a researcher doesn’t really understand what copyright entails, keeping it may sound like a better option than giving it away; and having an organization defend your rights sounds a lot better than defending them yourself.

What was surprising, given all of the attention received at conferences and in press reports, is that article-level metrics received just 0.73 points, putting it in 11th place (out of 18). It could be that few authors have prior experience with journals that offer tools detailing the dissemination of one’s article to the broader community.

Equally surprising was that “Accessibility on mobile devices” ranked dead last, receiving just 0.04 importance points, even below the option to include video abstracts and multimedia files (0.14 points). I don’t know of any other author survey that includes a mobile device question, so I have no basis for comparison. Journal editors and publishers would be right to remain skeptical of marketers’ claims for mobile and app-based products until they see better data.

When I travel to publishing conferences, I spend a lot of time checking out nearby hotels, selecting one in my price range with a gym and pool. When I arrive, I’m often disappointed that the “gym” is no more than a small room with a few machines, poor lighting and bad ventilation. The pool is often even more of a disappointment–too small and too warm to do laps. In the end, it doesn’t matter, because I hardly ever use these facilities, especially after a late night out drinking with colleagues. If I even make it to these amenities, I’m often alone. I think the hotels know that the desires of their customers frequently contradict their actual behaviors and plan accordingly. This doesn’t mean that hotel customers are hypocrites; it does mean that hotels (like publishers) may be in the unfortunate position of supporting costly services that serve more of a marketing function than addressing real needs.

“Build it and they will come” may still be relevant. Just don’t expect many to use it.

Discussion

36 Thoughts on "What Researchers Value from Publishers, Canadian Survey"

… third-party archiving (e.g. Portico and CLOCKSS, 0.39)

To me, this is the most astonishing finding of all. What it says is that authors either (A) don’t care whether their work will still be accessible in 10, 20 or 50 years, or (B) don’t have any understanding of what it takes to ensure that it is.

That’s truly disturbing.

(Possible corollary: the typical author may not have given much thought at all to what parts of the publishers’ service are actually of value, and may instead just be following what he’s always done, or what his advisor always did.)

As Kane has pointed out, welcome to the league for academic competition. Speed is needed to acknowledge the claims, journal reputation shows that the game is being played in the majors, and peer review is the required certification that the journal needs. The informal community already knows the content. In the world of science, it is now old, historic information and the web never forgets. Back on the road for the next round in the publish/perish/tenure standings.

A medical research team chose a ranked journal over the faster OA option because one of the team was up for tenure, and playing a minor-league game, regardless of the value of the work, was out of the question.

The fact that transport, tickets, beer and brats and chips cost well over X to attend and that those without the money are stuck in a bar watching the telly is not in the equation.

In the social learning cycle of Boisot, this is now public knowledge. What it costs the public is of no more concern than the audience at a sports match, unless there are scouts in the boxes with funding contracts.

Let me amend the rather flippant comments by pointing out, again, that Ann Schaffner’s well-documented and well-constructed article points out much of what the Canadian survey and previous research have shown.

1) the rejection rate for the sciences and engineering is much lower than other disciplines

2) even Kepler noted that he wrote for whomever, even if only for posterity

3) the actual publishing is a vetting process often for promotion and grant seeking

4) much of the innovation flows well before the journal article comes out. In fact, if one follows an interesting history, the prestigious Phys Rev needed a faster rate of pub and created Phys Rev Let, which eventually became the path for more articles seeking early cite. Many publishers also follow the option of early cite of articles accepted for publication.

5) in the past, there was more interest in the publishing than in the fact that it was widely read. Today, the IF may be another way to add to the value for both the publisher and the authors.

I am currently in Africa, which has its own list of African published journals. Access to research, whether published or otherwise, is difficult at best and expensive at worst. The resources to act on this knowledge are limited by the economics of the research facilities and universities, for the most part. Open Access and many of the efforts to bring knowledge to the community are important. But even with such access, the lack of the invisible or informal community shows. Similar situations exist outside of North America, Europe and parts of Asia.

The sports metaphor, while somewhat flippant, is fair. The number one question I get from faculty and students is how to get to the US or EU. Africans follow “football” and some hope; similarly academics hope. Watching the problems with the World Cup in Brazil makes one wonder about the wise use of fiscal resources to play in what Harvey Kane calls “competitive academics”.

Why are there “x” thousands of “academic journals,” the equivalent of sandlot to majors in baseball?

Why in an era of big data are there still archaic forms of articles when most can be condensed into a data table and a short note? Or why is a project divided into many parts instead of one substantive piece of work that will take two years or more to get significant results?

Phil’s article is on target.

When journals came in, it took about 150 years to get to the current form. When movies first came in, they were made by shooting action on stage. Now that “big data” has entered the world of journals, as I have suggested elsewhere, there are changes coming. I have not seen one “delphi”, one “implications wheel” or other foresight analysis that informs the publishing industry as to what is on the horizon.

Why in an era of big data are there still archaic forms of articles when most can be condensed into a data table and a short note?

Why must it be an either/or situation? Why not make both available? Unless it’s research that is directly related to what I’m doing, it’s unlikely that I want to take the time to dig through your (potentially) enormous set of data to understand what you’ve done and what can be learned from the results. I want a condensed version, essentially the “story” of your experiments. What did you do, what does it mean. My time is valuable and limited. This provides an efficient manner for me to get a handle on your work and decide if I want to dig deeper.

Data availability is indeed the new frontier. But if our experience with supplemental data is indicative, then very few researchers are terribly interested in poring through the datasets of other researchers. This varies from field to field, depending largely on the nature of the data and how readily it can be re-used. But given time constraints, most want access to a summary of the work, and that’s what the paper provides.

But there’s no reason we can’t do both.

In fact if one follows an interesting history, the prestigious Phys Rev needed a faster rate of pub and created Phys Rev Let which eventually became the path for more articles seeking early cite.

It seems to be a stretch to claim that PRL was born of necessity. It was started by Goudsmit as an experiment – one that included largely dispensing with peer review.

It might also be that the language ‘third-party archiving, eg Portico CLOCKSS’ speaks to publishers and publisher-nerds (sorry Mike) but is not everyday researcher language. Especially if this is way down the list of options for a respondent who is already hastening to the end and so not really giving it the attention the surveyors think it merits.

Not sure about 10 or 20 years, since that is still within a career lifetime. However, whether a specific paper is available or not 50 years down the road is just not very important to anybody except a historian or archeologist. If the findings of that paper have stood the test of time, the main points will form part of “established knowledge” summarized in textbooks. There are very very few scientists who have ever needed access to the primary literature published five decades ago…

This is very field specific. In many of the natural sciences – conservation biology, taxonomy, paleontology, geology, etc. – meaningful citation of decades-old papers is very much the norm. I regularly cite one particular work from 1907, which still stands as an authoritative work on horned dinosaur anatomy. The case is very different in some areas of medicine, etc., but nonetheless mileage varies greatly.

I fear I have to disagree. Microbiologists are always seeking the formulas for various agars, and these are in very old journals. It was this very problem that was the impetus for the Handbook of Microbiological Media, a book that is constantly revised because both old and new formulas are found.

Just because a journal goes belly up does not mean that the articles disappear. Journals are archived.

Actually, authors are concerned that their research will be available. There is no assurance that OA journals will be around! At least those with high impact, backed by reputable publishers, and both in print and online have a history of being around.

My experience shows that authors give great thought, as the survey points out, to the services and value of the publisher.

Most Americans assert support for the Bill of Rights yet many of them will advocate practices that directly contravene the principles contained in those first ten amendments to the US Constitution. Psychologists tell us that compartmentalization, as they call it, is a common human trait.

Thus, the dichotomy of scientist as reader versus scientist as author is perfectly sustainable and quite natural.

In the scientist as author compartment, attention is focused on whatever promises to enhance the chances for advancement, most commonly indexed as promotion, tenure and attractiveness to funders. Pavlovian, really.

Change the way that academic work is assessed and academic behaviors will change and adapt to that. The behavior of the author/scientists doesn’t validate the system, it only illuminates it.

I fear you have lost me. Just what does that have to do with the Bill of Rights?

I’m just finishing one of the several conferences I attend each year for my society. Besides letting me solicit good articles, these conferences give me valuable feedback from our authors. So, none of the survey results are surprising. Reputation seems the key thing authors value, followed by a journal’s relevance to their paper’s topic.

Even at this most recent conference, on the high-tech and highly visual subject of Geographic Information Systems, authors are remarkably indifferent to publication format. Once again, nobody pressed me to move forward with whiz-bang interactive features or anything of that sort. They are very happy with the staid old PDF!

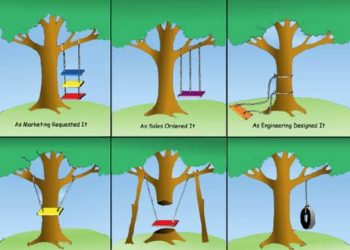

Has there been a study that asks researchers to identify their own lists of what is most important when selecting a publication outlet? It would be interesting to see what stands out enough in researchers’ minds to make it on the list, as many of these items probably would not be considered without being presented first.

I agree. It would also resolve potential issues with acquiescence bias, which may play heavily into the responses to questions about open access, copyright and reuse policies.

Rose and Phil, editors ask these kinds of questions all the time. We live in a competitive world and innovation is what keeps one in the game.

In doing this sort of research, if you don’t use a controlled vocabulary, wouldn’t you introduce other potential issues? If different people respond with answers that are ambiguous, and the person doing the survey has to interpret which category to count their responses in, doesn’t that introduce other biases? If I say that “broad readership” is important to me, does that mean circulation, subject coverage or access?

There is a statistical technique used in perception research called Multidimensional Scaling. It isn’t easy to use in survey form, as the best approach is to have the same people participate at two distinct times, but it could be different participants. Participants would first generate a list, and ambiguous terms would likely be omitted from a final list in favor of frequently employed terms (e.g. discoverable; open-access). Once that final list is created, participants match pairs of terms together based on how (dis)similar they find them to be. Dimensions of perception are then determined. (Think of tasting different sodas: common dimensions that appear are “diet,” “cola,” “non-diet”; that is what is most salient to tasters.)

Why do such surveys only ask about authors’ choice of publishers for journal articles, not books? I realize that, for scientists, articles count much more than do books, but it would be really interesting to ask these kinds of questions of scholars in the HSS fields and ask them about both books and journals.

While limited to books, not books & journals, you might want to have a look at this report from the Lever Initiative, if you haven’t already:

Looked at the study and it is interesting, especially the 82% who said they would be interested in publishing an OA book. I wonder how interested they would be once they saw the cost of doing so.

I think it might be a difference between principle and practice; a lack of awareness of how much publishing a book in *any* format, especially electronic ones, actually costs; a vote of confidence in something they’d like somebody to do (even if they’re not the ones doing it themselves); or some combination of all the above, along with other factors and a dash of “wouldn’t it be nice if…”

What I find most interesting is the divide between what academics want as authors (who have careers and reputations to advance) and what they want as readers–or even (especially?) the divide between authors for whom the status quo has worked out just fine (those with positions at 80 of the most prestigious liberal arts colleges in the US), but might be in a better position to publish something without worrying what a tenure committee will think of it, and those outside the Oberlin Group who, I’m guessing, are more likely to be trying to advance their careers. Now I’m wondering how things might break down if you studied the opinions of tenured/tenure track/adjunct faculty on what they would like to see from publishers, both as authors and as audience.

When it comes to books, I have on principle made it a practice to avoid anyone who is not tenured. The book can be a career killer. Regardless of discipline, schools want articles in prestigious journals. Once tenured, a prof can look at a book project if that is a desire. That is the hard part about book publishing. One has to sell the idea to the prospective author, and then the author has to produce. In short, an acquiring editor has to sign 30-40 books per year to have 20-25 published in any given year.

Not my experience in university press publishing, Harvey. Only in the sciences, where acquiring editors often have to persuade authors to write books (because they don’t carry much weight in P&T decisions), is there much dropoff in completed books after signing. Very seldom does this happen in the HSS, where authors have much more motivation to publish books, especially in some fields. And advance contracts are not used there nearly as much as they are in the sciences. Consider, after all, that many first books are revised dissertations, so much of the writing has already been done earlier.

Sandy:

That is so in HSS. I should have limited my audience.

Yes, I read it. Some librarians have been surprised by what faculty said.

The classical example of disruptive innovation is the US automobile industry that basically wrote off the upstart Japanese car makers. Looking at this thread, the question for the publishing industry is whether or not there are disruptive forces or only small problems that will not severely impact the future of academic publishing/publishers.

Rereading the responses to Phil’s summary of the Canadian study, one senses that the industry is stable, needing only minor adjustments for rough edges.

Thoughts?