There are so many types and uses of data that it’s hard to pin down what is most important. What one organization might consider the bar of entry in data collection and curation might be beyond the capabilities of another organization. Like any type of development (strategy, product, process, technology, user interface, etc.), developing a data strategy is dependent on use cases. The bottom line is always: What are we trying to accomplish and what insights would help us meet our objectives?

When we pose a question to the Chefs, we intentionally leave it broad in an attempt to identify different perspectives. This month’s question is no exception:

What is the most important data for a publisher to capture and why?

Joe Esposito: Although the phrase “the most important data” is likely to make most people think about various kinds of usage metrics (which are, of course, important), my experience suggests something more basic. Many of the organizations I have worked with over the years simply lack a grasp of the basic operations of their business. It is not unusual for a society publisher, for example, not to be able to tell you whether or not it is making money. This is not a made-up example. There are organizations that put their financial statements together by customer type instead of the products they deliver (making it impossible to track the outcome of an investment), and organizations for which the allocation of overhead is as complicated as the Manhattan Project. I should not leave out the librarian who was touting her institutional library, who boasted that she had spent two hours teaching a faculty member how to deposit a green copy of a paper. “How many papers can you handle like this in a year?” I asked. She didn’t know, but she was confident that she was leading the fight to take down Elsevier.

We all want to work on the new and interesting problems — of course. But before you think outside the box, you have to be able to think inside it. Many publishers (and this includes some of the largest) falter because of poorly understood business practices. Perhaps it is time for us all to take a class on Publishing 101.

Kent Anderson: I think some important and obvious data sources and data points get overlooked because we sometimes think of data as what our digital systems produce. There are rich data in our systems, for sure, but if we don’t use broader data to develop context, we can’t derive much strategic meaning. For example, I may see that usage is going up in the UK, but if I don’t integrate the larger data points of how the pound is performing against the dollar, how science funding in the UK and EU is projected to change after Brexit, and so forth, that usage data may mislead me. I may assume usage will continue to go up, whereas with the macroeconomic data, I may instead think I’m at a peak UK usage and it will likely go down. The same could be said for the importance of understanding hiring trends in a discipline, changes in training program requirements, and so forth. I think publishers should be looking to capture leading indicators about broader changes to inform strategy. Data from systems play a role in this, but tend to be lagging indicators that level-set. Strategic, leading indicators about the world writ large are the data I think strategists and leadership teams should focus on first and find a way to capture and maintain — not the click-through rate on ads, the conversion rate of marketing campaigns, etc. These are important, but without the broader, longer-term look-ahead, they are tactical, not strategic.

Lettie Conrad: This is a great question, because it encourages us to think rationally about the data we care about and why we invest in its storage and use (or not). Given all the big-data hype, it’s easy to assume that more is better, but thoughtful data architecture is worth the time. There are a few key types of data for publishers to strategically consider: Content data; Customer data; Performance data; and Operational data. That first category holds the most important area for a publisher’s data strategy: publication / product metadata, aligned with our supply-chain standards. Time-tested protocols such as Dublin Core or MARC are good representations of core publication metadata. While numerous downstream organizations will ingest and make use of our bibliographic / product data, the originating publisher should take pride in ownership, acting as stewards of the most accurate and authoritative versions of metadata records. Once quality assurance, systems integration, and data governance foundations are in place, then we can optimize our content datasets with advancements, such as ORCIDs and license indicators — as well as move on to plan our approaches to other data categories.

Karin Wulf: When I read about the kinds of data that are available to, and useful for, my colleagues in much larger publishing operations, I’m fascinated by both the potential for information and the divergence between what small organizations like mine can expect and digest and what much larger organizations are able to demand and process. I suspect these may be matters of scale, but I am interested in when they are matters of type, too.

For a small society publisher in the humanities there are three kinds of data we deem essential: reader behavior, author behavior, and internal process. These are interrelated. We don’t require sophisticated tools to produce this information, but it does take plenty of people in the room working it over to think through how to get it, and how to use it.

For reader behavior, we’re combining data from all the platforms on which our publications appear. We look at our content aggregators (JSTOR and Project MUSE), as well as direct online and app access, and we try to put all that in context to understand when, why, and what readers are doing. For example, it’s clear that for us back content is very active (for more than three decades). Making that content discoverable and accessible is important, as is finding new ways to leverage that content in combination with new and related content.

Author behavior is frankly harder, and we’re not dealing with anywhere near the numbers that our colleagues in STEM fields do (for a single journal but also for any individual author). We look to internal processes to help us a bit with understanding and predicting author behavior, clarifying workflow for ourselves and our authors to create efficiencies but also to make more transparent the value of our slow process.

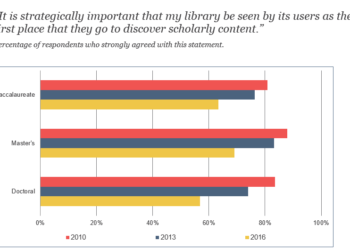

But we’re also always looking at available data on larger trends in special collections and archives, humanities funders, university libraries and publishing because these institutions often drive the systemic changes that we need to be aware of and navigate. We look to institutional sources such as Ithaka, but we’re also always reading for the data collection and sorting methodologies that may — or often may not — reflect and relate to our on the ground experience.

Judy Luther: Data could be metadata possibly represented by an identifier such as an ORCID or DOI. Data could also be metrics measuring some form of usage or attention as an indicator of value. What could be most valuable is a combination of the two.

ORCID has continued to develop and the author identifier is linked to a potentially robust profile that includes education, employment, funding and ‘works’. The latter can be a publication, conference, intellectual property or ‘other’ such as a data set, invention, lecture, research technique, spin-off company, standards and policy, technical standard or other. Users can designate elements of their profile as private, available to trusted parties or open to everyone. Software developers can use the ORCID API to connect to the registry and retrieve a machine-readable version of the user’s public ORCID record. This enables other organizations such as research management information systems at universities to utilize this information in grant applications.
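As a rough illustration of what retrieving a machine-readable public record might involve, here is a minimal Python sketch. It checks an ORCID iD's check digit (ISO 7064 MOD 11-2, as described in ORCID's own documentation) and prepares, but does not send, a request to the public API. The `v3.0` version segment in the URL and the helper names (`orcid_check_digit`, `is_valid_orcid`, `build_record_request`) are assumptions made for this example, not anything specified in the discussion above.

```python
import re
import urllib.request

# ORCID iDs are 16 characters in four hyphenated groups; the last may be "X".
ORCID_PATTERN = re.compile(r"^[0-9]{4}-[0-9]{4}-[0-9]{4}-[0-9]{3}[0-9X]$")


def orcid_check_digit(base_digits: str) -> str:
    """Compute the ISO 7064 MOD 11-2 check character for the first 15 digits."""
    total = 0
    for ch in base_digits:
        total = (total + int(ch)) * 2
    result = (12 - total % 11) % 11
    return "X" if result == 10 else str(result)


def is_valid_orcid(orcid: str) -> bool:
    """Return True if the iD is well formed and its check character matches."""
    if not ORCID_PATTERN.match(orcid):
        return False
    digits = orcid.replace("-", "")
    return orcid_check_digit(digits[:15]) == digits[15]


def build_record_request(orcid: str) -> urllib.request.Request:
    """Prepare (but do not send) a request for a public ORCID record as JSON.

    Assumption: the public API lives at pub.orcid.org and "v3.0" is a
    currently served version; check ORCID's documentation before relying on it.
    """
    if not is_valid_orcid(orcid):
        raise ValueError(f"Not a valid ORCID iD: {orcid!r}")
    url = f"https://pub.orcid.org/v3.0/{orcid}/record"
    return urllib.request.Request(url, headers={"Accept": "application/json"})


if __name__ == "__main__":
    # 0000-0002-1825-0097 is the sample iD ORCID uses in its documentation.
    request = build_record_request("0000-0002-1825-0097")
    print(request.full_url)
```

Passing the prepared request to `urllib.request.urlopen` would perform the actual lookup; only the parts of a profile that the researcher has marked as public would come back.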

Now imagine using this data with a range of metrics and other indicators of value. Funding could be more directly linked to research discoveries and the combined works of an author. The one missing link currently is an organization identifier and, fortunately, ORCID, DataCite and Crossref are leading an initiative to create an open, non-profit, independent organization identifier registry to clarify researcher affiliation. With this last piece in place it will be possible to construct new views of the research environment, both relationships within it and the outputs from it. Hyperbolic browsers were introduced years ago, and were more recently used by the now-defunct Microsoft Academic Search to present an interactive display of the relationship of authors and co-authors. Imagine now displaying the connections between funders, authors, publishers and institutions.

Angela Cochran: There is so much data available to publishers that it’s hard to pick the most important. Whether the data has value is dependent on the goals of the publication. Publishers using competent submission systems and production tracking systems are already capturing loads and loads of data. Whether they use this data to analyze or make improvements is up to them. In my editorial group, we rely on this data not only to paint the picture of the health of a journal but also to change editor behaviors and justify staffing requests. This kind of reporting has single-handedly led to vast improvements in our program.

On the business side, I think there is critical data that we are NOT capturing and that is user data. So many of our users are accessing content via IP authentication from their institutional access. If the individual is not registered on the platform, we are missing key pieces of information. This hinders product or service offerings. For example, if we knew which content (specifically) was being accessed by undergrad students, we could develop collections or apply tags for identifying the content most useful to that audience. This might assist other students looking for content or even faculty in creating course packs.

Of course, there are privacy issues and as much as I don’t want my general internet usage patterns to be sold to third parties, I know that what we collect and what we do with that information is sensitive. At ASCE, we have always chosen to ask for the bare minimum in the registration process because we don’t want to put in any barriers to the tools available with registration. What we lose in that is the ability to tailor content to the specific needs of our users.

Alice Meadows: We live in an increasingly data-driven world, which is not always a good thing. How many of us really like those creepy ads on Facebook and the like where we are constantly being reminded that we once viewed a pair of shoes or a vacation that we know we can’t afford? Or being targeted — or seeing others targeted — by unscrupulous lobbying organizations seeking to influence how we vote or what news (fake or otherwise) we are exposed to? Or — in our world — having our hiring, promotion, and tenure committee pay more attention to the Impact Factor of a paper than the actual impact of our work?

However, that’s not to say that data can’t — and doesn’t — also play a positive role in scholarly communications. From my perspective the data that’s included in scholarly metadata is especially important. The information that we connect as metadata to any kind of record — for a person, place, or thing (paper, book, dataset, etc) — is what makes those records valuable. It enables us to understand the provenance — who contributed, what role(s) did they play, when and where — as well as other key information like publisher and publication date, changes (including retractions), and more. And metadata that includes persistent identifiers (DOIs, ORCID iDs, organization identifiers, etc) and adheres to agreed standards is especially valuable for ensuring consistency, reliability, and longevity of that metadata.

Done properly, good metadata builds trust in individual contributions and, ultimately, in scholarly communications overall. So it’s not just which data publishers collect that’s important but how and why they do so.

Ann Michael: My first inclination when faced with a broad and complex question is to try and categorize it in some productive way. Several of the Chefs offered some “buckets” for us to consider.

Joe spoke of usage, operational, and financial data. Kent spoke of macroeconomic data that could put our own collected data into context. He also highlighted leading versus lagging indicators. Lettie put some of the categories Joe offered into a broader context: Content data; Customer data; Performance data; and Operational data. Karin mentioned reader, author, and internal process data. Judy and Alice focused on scholarly metadata.

Perhaps a good way to categorize types of data in a publishing environment might be:

- Content data (to aid in production, distribution, discovery, etc.)

- Customer data (including usage data, reader and author data, demographic data, etc.)

- Performance data (including some aggregate usage data, financial data, potentially

- Operational data (process data, allocation and productivity, etc.)

- Environmental data (macroeconomic, scholarly metadata, external data sources that give data context and enable comparisons, enable identification of trends, etc.)

Are we missing any major buckets? Maybe the next question we should ask ourselves is, given our strategic objectives, what specific data might support us in each of these categories?

Of course, this month’s question may also lead us to other questions regarding how we secure the data we need, the skills we need to structure, manage, analyze, and report on data as well as how we will make use of insights resulting from data.

Now it’s your turn. What do you think is the most important data for a publisher to capture and why? How do you feel different types of data could be enhancing scholarly publishing?

Discussion

15 Thoughts on "Ask The Chefs: What Is The Most Important Data For A Publisher To Capture?"

Article impact (magnitude and duration) as a way to assess selection, presentation and dissemination. Also perhaps the consumption and generation of related artefacts (data sets, for example).

Before asking what kind of data publishers need, we need to ask why we need the data. Big data, its appeal, and its (mis)understandings are all well known. If we can pinpoint the purpose (say, the inferences we want to draw about certain behavior, in whatever bucket), we will know the scope, scale and type of data we need. Editorial planning may need a different sort of data than sales/licensing planning. Metadata services are very good, but currently limited to increasing visibility and usage. When we have a pinpointed purpose rather than just collecting whatever data we can, it is easier to leverage (sorry for using this infamous word) ancillary metadata and usage patterns.

Disclaimer: all opinions/comments are my own and do not necessarily represent my employer’s position.

Well done! This type of discussion is exactly where publishers need to be. While everything in this article is essential to running a business, there are a few key statements that deserve to be highlighted, and that are most often ignored or undervalued, particularly at smaller presses. True, there are bandwidth issues, and true, there are mission-serving principles that must be factored in, but these three points deserve wall space in every director’s office and in every conference room in the press.

The bottom line is always: What are we trying to accomplish and what insights would help us meet our objectives?

We all want to work on the new and interesting problems — of course. But before you think outside the box, you have to be able to think inside it. Many publishers (and this includes some of the largest) falter because of poorly understood business practices.

There are three kinds of data we deem essential: READER BEHAVIOR, author behavior, and internal process. These are interrelated. We don’t require sophisticated tools to produce this information, but it does take plenty of people in the room working it over to think through how to get it, and how to use it.

I’ve called out reader behavior because too few presses are working on solving a core problem with the business. If your reader (or institutional customer) is not going to support the business, it’s time for a new model. There’s only so much you can do to advocate for the value a press provides. When the rubber meets the road, it’s time to reevaluate what the press offers that is valued and what it costs to deliver the service and product.

Would love to see more on business decision making. Sometimes asking a different question and examining a new set of data points can lead to a new path.

Business practices and models, regardless of mission or commercial goals, need to be understood.

As a vendor providing solutions in the Open Access space our product pulls data, industry wide standards, and other information directly from the publisher workflow. We use the data in “real time” to drive publisher business rules and pricing. The data is captured in an onboard reporting tool that provides meaningful business insight to various stakeholders in the OA space.

The secret sauce is standard APIs which pull these otherwise disparate metadata nuggets together in a meaningful way. In summary, it is necessary to harness the data to drive the business.

In the professional society environment, it’s critical to understand how the scarcest resource — the time and effort of volunteers and staff — is being used. Even if a well-derived P&L looks healthy, a publication program (or other society endeavor) may cost too much time and energy relative to its value for members and the broader profession. Of course this involves slippery numbers rather than neat data points. It’s hard enough to allocate staff time over a presumed 40-hour work week, and it’s even harder to assess the capacity of the typical volunteer who’s doing a lot of society work during evenings and weekends.

All true and hence the real challenge. Suggestions for incorporating this into current decision-making?

I wish I had a magic formula here, but I don’t. Here’s what I would try: First, ask everyone involved in a project — volunteers and staff — how much time they think it will take, in the planning process (if it’s a new project) and in implementation. Then go back in a year or so, show them their estimates, and ask them to re-estimate. Over time, the organization will build some expertise in forecasting and evaluating human resource expenditures and will gradually learn how to make wiser decisions. (This isn’t a bad approach for any estimating issue, e.g., sales forecasts for new books.)

Long before computers came on the scene, Princeton University Press was systematically collecting sales data for each field in which it published in a fine-grained way so that, for example, one knew what the average sale for a monograph in a field would be after one year, three years, and five years. These data were used in making decisions about the print runs for new titles and were crucial for inventory control.

In my mind most data is rather useless because what was published is not what is going to be published. Further, we publish what others write and have no say on that except to say we will not publish it, but then again we are publishers so publishing it is what we do.

Many years’ experience using historical sales data to predict sales of future books in the same fields shows that it can be quite reliable in aiding predictions. Monograph sales show significant patterns by field and can be quite useful in guiding decisions about how to shape a list. I don’t know whether such patterns exist also in areas like fiction, poetry, general trade books, and the like.

Depends on the field, of course. Once upon a time (pre-2008 or so), sales of backlist clinical titles were pretty predictable. Around the time of the Great Recession, but probably not directly related, backlist sales started to deteriorate significantly. So, as in the old Gorbachev line — “trust, but verify.” Your old reliable patterns are reliable only until they’re not.

Apologies for the shameless plug, but in case anyone is interested, there’s an SSP webinar on Building a Common Infrastructure: The Challenges of Modern Metadata on April 11. Organized by Patricia Feeney of Crossref, speakers are Christina Hopperman (Springer Nature), Maryann Martone (Hypothesis) and me. More info here: https://www.sspnet.org/community/news/explore-the-challenges-of-modern-metadata-with-next-weeks-webinar/

Excellent discussion. Regarding Ann’s five proposed buckets, I feel that a bucket for your “market” is missing. It would sit between 2/customer data and 5/environmental data. Deciding where you want to play will shape all downstream decisions. It defines not only your audience, but also your competitors and the macro-economic factors at play. No market is free from change, so deciding what indicators you use to understand your market(s) is essential.