Editor’s Note: Today’s post is by Jessica Polka, Executive Director of ASAPbio, a researcher-driven nonprofit working to promote innovation and transparency in life sciences publishing. Jessica leads initiatives related to peer review and oversees the organization’s general administrative and strategic needs.

The design of critical infrastructure determines what its users can do, and when. For example, the New York City subway system carries 1.7 billion passengers annually, shapes centers of residential and commercial activity, and enables a vibrant culture with its late night service.

Incredibly, it does this with a signaling system that predates World War II and forces trains to be spaced far apart from one another, limiting capacity and causing delays. Upgrading the signaling system is necessary to meet current demands, but it is estimated to cost tens of billions of dollars and would require closing stations on nights and weekends, harming New Yorkers who depend on these services. Thus, the radical (but ultimately necessary) upgrade has been delayed in favor of putting out more urgent fires, such as track damage caused by Hurricane Sandy.

Similarly, journal management systems and publishing platforms act as essential infrastructure for scholarly communication. While more nimble than a metropolitan transport network, they nevertheless face challenges in balancing needs for both urgent fixes and aspirational developments. Over the long term, their supported features can shape the nature of scholarly communication, restricting or inspiring innovation.

Peer review innovation

Interest is mounting in modernizing peer review. In just the last year, a variety of new platforms and initiatives have launched: BioMed Central’s In Review, a Wiley, ScholarOne, and Publons collaboration, and independent peer review services linked from both Europe PMC (see the “External Links” tab of these results) and bioRxiv (see the section on “Preprint discussion sites” in this example).

At a meeting organized by ASAPbio, the Howard Hughes Medical Institute, and the Wellcome Trust in early 2018, a group of approximately 90 researchers, funders, editors, and publishers discussed the merits of making peer review more transparent. Attendees expressed interest in three major areas: publishing peer reviews, credit for co-reviewers, and peer review portability. They also expressed concerns about the technological feasibility of implementing them.

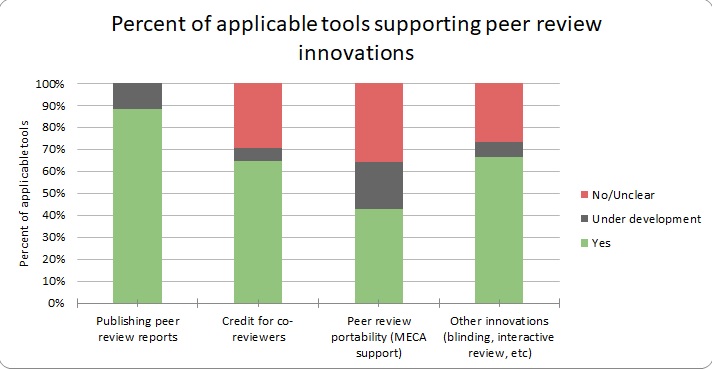

To better understand the state of the art in technological support for these innovations, we’ve collected information from 18 journal management and publishing platforms about support for the suggested changes above, as well as other miscellaneous changes to peer review workflow. You can view all responses in this table.

The responses cover four major areas, explored in detail below.

Publishing peer reviews

Most researchers and editors agree that peer review is essential to knowledge creation, and many see it as a form of scholarship in its own right. Given its importance and potential value in helping readers interpret the context of a manuscript and the rigor of the editorial process, attendees at the meeting agreed that it makes little sense to keep the content of reviews private, with or without referee names. Meeting organizers summarized our thinking in an article accompanying the release of an open letter, now signed by more than 300 journals, expressing their commitment to making it possible to publish the contents of their peer review process. While many signatories have been publishing this content for over a decade, the last year has seen the launch of new collaborations (for example, between Wiley, ScholarOne, and Publons) and platforms (such as In Review) that enable more dynamic and immediate display of this content.

However, having the intention to publish review reports is not enough. Journals need to consider whether their content management system will support not only technical needs (ideally, the best practices worked out at a recent workshop, which include treating peer review reports as standalone objects) but also the necessary workflow changes (which can include obtaining additional permissions from reviewers, or enabling editors to remove potentially sensitive information before publishing a review).

Development status: All respondents to our survey of tools included some information about the publication of peer review reports, with the majority of applicable platforms already supporting this and the remainder noting that features are under development. Of course, the devil is in the details, especially for journals that rely on interoperation of multiple different tools in a publishing chain. Future efforts could provide more granular information on the combinations of tools for which support is provided, and the details on how reports can be made available.

Credit for co-reviewers

COPE authorship guidelines dictate that manuscript co-authors who have made significant intellectual contributions to the project be recognized for their work. Strangely, a similar norm does not exist when the scholarly output is a review, rather than the manuscript itself. For example, a survey by eLife found that many early-career researchers are contributing to peer review, yet preliminary data from the TRANSPOSE project indicates that few journals provide a way to acknowledge the contributions of co-reviewers (individuals, often grad students or postdocs, who help an invited referee complete their report).

Giving proper credit to co-reviewers benefits them directly: they can feel more confident sharing their experience on CVs, job applications, or even green card applications in the US. Surfacing their contributions is also helpful to editors since it can broaden the pool of peer reviewers. There are several questions that need to be addressed in order to make this feasible:

- Can a journal be made aware of their contributions, or does a restrictive confidentiality policy discourage reviewers from disclosing this kind of assistance?

- Is there a dedicated space in the review form where their names can be easily added?

- Can these individuals receive credit for their contributions via ORCID or Publons?

- Finally, can the co-reviewers be easily integrated into the reviewer database?

Development status: Facilities to help identify and credit co-reviewers are not well-developed; this area had more blank fields and short responses than those pertaining to publishing reports. Perhaps this is because co-reviewing is a seldom-discussed topic, at least compared to some others on this list. As awareness grows, demand for this support may increase.

Peer review portability

Researchers and editors spend an estimated 15 million hours annually conducting and managing peer review for papers that are rejected. In an ideal world, this peer review could be transferred to other journals in order to help authors publish their work in a suitable journal.

As discussed previously in The Scholarly Kitchen, MECA, the Manuscript Exchange Common Approach, is being developed as a standard way for journals to transfer papers to one another. MECA defines a container format that will encapsulate all elements of a manuscript, its metadata, and associated process files. While the standard is still under development, eLife has deployed a MECA-compliant module that represents its first live use. However, to enable MECA’s primary purpose of submission transfer between publishers, more journals need to be able to implement it. This will require manuscript transfer systems to export and import material shared in this format.
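
To make the idea of a container format more concrete, here is a minimal sketch in Python of what bundling a manuscript, its metadata, and its review reports into a single transferable archive might look like. This is not the MECA format itself: the file names, the JSON manifest, and the functions `build_transfer_package` and `read_manifest` are all hypothetical and chosen only for illustration.

```python
from __future__ import annotations

import io
import json
import zipfile


def build_transfer_package(manuscript: bytes, metadata: dict, reviews: list[str]) -> bytes:
    """Bundle a manuscript, its metadata, and review reports into one zip archive.

    Hypothetical layout for illustration only; not the MECA specification.
    """
    buffer = io.BytesIO()
    manifest = {"items": []}

    with zipfile.ZipFile(buffer, "w", zipfile.ZIP_DEFLATED) as archive:
        # The manuscript file itself.
        archive.writestr("manuscript.pdf", manuscript)
        manifest["items"].append({"path": "manuscript.pdf", "type": "manuscript"})

        # Descriptive metadata (title, authors, and so on).
        archive.writestr("metadata.json", json.dumps(metadata))
        manifest["items"].append({"path": "metadata.json", "type": "metadata"})

        # Each review report is stored as its own file.
        for index, text in enumerate(reviews, start=1):
            path = f"reviews/review-{index}.txt"
            archive.writestr(path, text)
            manifest["items"].append({"path": path, "type": "review"})

        # The manifest lists everything in the package so a receiving
        # system knows what to expect before unpacking.
        archive.writestr("manifest.json", json.dumps(manifest, indent=2))

    return buffer.getvalue()


def read_manifest(package: bytes) -> dict:
    """Read the manifest back out, as a receiving journal system might."""
    with zipfile.ZipFile(io.BytesIO(package)) as archive:
        return json.loads(archive.read("manifest.json"))


if __name__ == "__main__":
    package = build_transfer_package(
        b"%PDF-1.4 placeholder",
        {"title": "Example manuscript"},
        ["Report from reviewer 1", "Report from reviewer 2"],
    )
    for item in read_manifest(package)["items"]:
        print(item["type"], item["path"])
```

The point of the sketch is the export and import pairing: a sending system writes a self-describing package, and a receiving system can read the manifest without knowing anything about the sender's internal database.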

Development status: Excitingly, several journal management systems are already developing (or plan to develop) MECA compliance, which is impressive given that no specification appears to have yet been publicly released. The completion of NISO’s work on the project could increase this number further.

Other workflow innovations

Ideally, journals could choose from a large menu of peer review implementation options. For example, will the review be single blind or double blind? Can reviewers interact with one another and possibly also authors through a forum? Could the peer review workflow be customized to more efficiently engage highly specialized technical reviewers? While the will to experiment must come from editors (we catalog such trials at ReimagineReview), it must be enabled by a flexible technological platform.

Development status: Given the open-ended nature of this question, responses were mixed, but several platforms discussed different blinding options and discussion modules.

What’s next?

Service providers are encouraged to contact jessica.polka@asapbio.org to add or update information about their project.

Vendors are likely in good communication with their clients already. But perhaps editors could influence the prioritization of development roadmaps by making known their longer-term goals in addition to their immediate needs. By doing so, we can ensure that the tooling for communicating science matches the needs and philosophies of communities of editors and researchers.

Thanks to Judy Luther and Naomi Penfold for helpful discussions.

Discussion

10 Thoughts on "Guest Post — Technological Support for Peer Review Innovations"

I know that things are often a little less formal in the life sciences than in some other fields, but in my opinion the issue of “co-reviewers” should not even exist. Only those designated as peer-reviewers on a given manuscript by the editor handling it should have access to that manuscript and if a reviewer feels it necessary or desirable to ask someone else’s opinion on the paper, they should recommend that person as a reviewer to the editor or at the least get permission to share the paper with them so that the additional comments are attributed to the correct person. All of this can matter greatly down the line if the work involved raises patent issues. Simply sharing an unpublished manuscript with whomever one wishes to consult with should never be acceptable.

I still struggle with the need for significant investment in portable peer review. It’s not clear to me what the author’s incentives would be for wanting to pass along reviews that resulted in a rejection to a second journal (except in a small number of circumstances). In my experience, each author wants a fresh set of unbiased eyes looking at their manuscript, whether it’s the initial submission or a subsequent one to a different journal.

If your paper was rejected for a perceived flaw, and you choose not to correct that flaw, do you want it brought to the attention of the next editor? If you have corrected that flaw, the review is no longer relevant, and only serves as a distraction. I could see an author passing along a review that said, “this paper is amazing, but out of scope for our journal” but why would you want to pass along a review that says “it’s not quite good enough”?

Where these programs exist, has there been much uptake?

*disclaimer* I don’t have much experience as an author, and none as an editor, so I’m mostly repeating what others wrote.

Yes, in a perfect world portable peer review wouldn’t be necessary. But, here and now, what happens is that after you get rejected at journal X, you apply to journal Y, and the editors of Y sit down, look for reviewers for your paper, and, not surprisingly, arrive at a similar or identical list of people as the editors of X. They write an invitation to review, and get back an angry email saying “but I’ve seen this already”. Everybody gets frustrated, and everybody loses time.

I’d guess that if news spread that portable peer review is possible, and if we enable authors to use it, then 1) situations like the one above do not happen; 2) researchers move one step ahead and write to the editor: here is the paper, these were the reviews, we addressed the comments, now what do you say?

2) is again a bit of a perfect-world situation, but at least 1) would be worth the work invested in it.

Perhaps a possible solution is to make the process easier and more efficient for reviewers, who volunteer their time, and also to provide some value-added back to them in terms of reviewer tools that help them to become better readers. We’ve coded some tools for reviewers (as well as for authors); our “Review Building Tool” is free for anyone to use regardless of whether the user is reviewing for us or for another journal. It uses evidence-based medicine standards to help the reviewer to focus on the key methodological elements that are specific to each study’s design (different elements for studies about therapy, diagnostic tests, natural history of diseases, meta-analysis, etc), and then includes prompts to include information relevant to studies of all designs (importance, novelty, dealing with no-difference findings). Although our journal is a surgical-subspecialty title, the tool would be equally usable for the review of articles in any medical specialty. Feel free to give it a try under “CORR Author and Reviewer Tools” –> “Review Building Tool” off our home page at http://www.clinorthop.org. No charge to use or share. Trying to raise all boats here. For a little more about it, including how and why we developed it and share it freely, see this editorial: https://journals.lww.com/clinorthop/Fulltext/2016/11000/Editorial_CORR_sNew_Peer_Reviewer_Tool_Useful_for.1.aspx.

Can you imagine well-known Professor X – who is on grant panels/conference committees etc, finding out that his work was rejected by a post-grad/doctoral student?

Peer reviewers do not accept or reject papers. They offer opinion and advice to journal editors who are responsible for those decisions.

In my experience as a journal editor, postdocs and advanced graduate students are often excellent peer reviewers, as they are usually more directly aware of and experienced in the latest research and techniques than their PI bosses who are removed from doing experiments. The only issue I’ve found with students/postdocs as reviewers is that they tend to feel like they have something to prove and nitpick a bit more than their more experienced colleagues.

If you send a paper to a PI for review and they want to have their student/postdoc do the review, that’s fine, as long as they’re transparent about it and ensure that the editor knows this is the case. Working together on a review with your mentor can be an incredibly valuable training experience for the student/postdoc. Really good mentors use this as a means to teach their mentees how to do a good review, and the practice should, IMO, be encouraged as a means to build the next generation of good peer reviewers.

David, many thanks for this clarification, you are of course absolutely correct in all the points you make, and I have no dispute with this. However, the perception, attitude and response of Professor X is a key element within any community. Until attitudes change, we will see unethical behaviour as a consequence of finding out whose report(s) led to the rejection of your work.

100% Alignment!