Following the Twitter activity from last week’s gatherings of the Association of College & Research Libraries (#ACRL2019) and the STM Association (#STMAnnualUS and #STMSocietyUS), I was encouraged by an underlying drive to better understand and serve today’s scholars. While this is not a new topic in our industry, there is clearly a renewed focus on coming to terms with how scholars conduct their work and what their information behavior means for service and content providers.

To be honest, this fresh look at the researcher experience is driven in large part by our basic survival instincts. Facing open access mandates and other challenges that promise to rewrite the usual rules of engagement and turn existing business models and supply chains upside down, libraries and publishers alike are questioning their value-add to the scholarly communications lifecycle. Many are answering these challenges by revising fundamental priorities and pivoting to strategies that better serve the realities of scholars and scientists, students and instructors.

Adapting to support modern research experiences requires an investment in our working knowledge of the scholars we serve, an investment not unlike those we make every day in infrastructure, staff, and systems. Information user research generates the insights and inspiration that fuel the evidence-based decisions that drive our institutions forward (or not). An investment in our awareness of and compassion for research information practices requires the same well-considered, methodical approach as any other expenditure.

Whether working with quantitative outcomes of mass surveys or applying qualitative interviews to explore user needs or preferences, information user research should ideally be placed at the same strategic level as other forms of data used for organizational assessments and business intelligence, such as financial reporting, usage logs, or other performance metrics. A 360-degree view of customer or patron experiences can be built up using a variety of data sources. Whether developed in-house or outsourced, a robust cycle of end-user investigations and testing should underlie the experiments in products and services that will define future transformations across the scholarly communication landscape.

Our own industry research, in reports from Ithaka S+R to Renew and other leading resources, demonstrates that there is no singular profile of the modern researcher. Research roles often overlap: a bench scientist may also be a journal author, and perhaps an instructor, reviewer, or potential editor. Therefore, we must adopt a contextualized view of our users, enabling a deeper understanding of researchers situated in the cultural realities of various regions, fields of study, and levels of education. A contextual approach to user research often means focusing on the information tasks at hand for specific stages of research, such as the work discussed recently by fellow Scholarly Kitchen Chef Angela Cochran, in which the ASCE invested in a renewed understanding of research data practices and needs among civil engineers.

Neuroscience tells us that the choices behind all human behavior, scientific or otherwise, are highly context-dependent: not just situational, but shaped by history, culture, and language, as well as genetics, biology, and evolution (see, for example, Robert Sapolsky’s work). Similarly, our interpretations of human behavior are highly contextual; meaning and value vary with our perspectives, motivations, and needs. Entering into strategic information user research with this understanding allows us to channel our transformational energies in more precise directions and to filter others’ interpretations through the lenses relevant to our particular initiatives or organizations.

Understanding that all information experiences are context-dependent allows us to focus on answering very specific, strategic questions, generating actionable data to drive library and publishing innovations. We can slice and dice scholarly communications along functional lines (jobs to be done within the research lifecycle) or along the topical or disciplinary structures in place in higher education and other research institutions. These insights can both support feature-level decisions in database design and spark ideas for wholly new services. User experience data can also inform performance metrics, such as target thresholds for article downloads and shares.

Some academic user studies show that preferred resources or content types vary by area of study: computer scientists, for instance, favor conference proceedings, and medical researchers often look to government or technical reports more than researchers in other fields do. These trends vary further by geographic region, where access to scholarly journal articles affects how researchers rank their importance to their work. Critical incident studies can help organizations delve into the immediate unmet needs and cutting-edge practices in play within their communities. Some practitioners advocate examining academic information practices within epistemological frameworks, which often dictate or influence researchers’ information-seeking and usage customs.

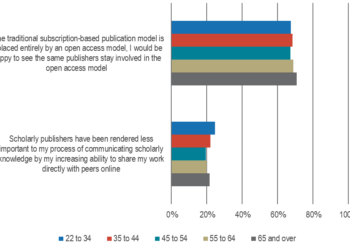

Information scientists have been generating a wealth of data about human/information interactions since the 1980s. Academic information user research has grown steadily over the last few decades, producing enough data for longitudinal evaluations of patterns and trends in these information practices (watch this space). Some researchers, like the venerable Carol Tenopir, are beginning to develop models to understand and predict trends in researchers’ information behavior. Many of these insights will likely dispel common publisher misconceptions about scholarly information practices, for example by demonstrating the relative value of quick search versus browse features on a platform, and how these preferences vary by age or training.

Whether leveraging existing literature or developing original user studies, all stakeholders in our field are well served by honing a nuanced understanding of modern research practices, challenges, and brand-new developments. This holds true for libraries, publishers, and software providers, as well as for funders, administrators, and policy makers, who often favor a one-size-fits-all approach to scholarly protocols. If we are not investing in our working knowledge of researcher experiences, we are undoubtedly making uneducated assumptions and defaulting to outdated or self-serving modes of decision making.

Only with a realistic, up-to-date, and situated view of researcher experiences can we define the way forward for scholarly communications. The future state of our institutions relies on our investing in an operational and compassionate understanding of today’s researcher experiences, with the relevant contextual lenses to make the choices that best serve our communities.

Discussion

1 Thought on "Investing in the Researcher Experience"

Perhaps it is an obvious point, but it strikes me that surveys or sociological studies of research habits run the risk of reinforcing longstanding habits. For example, if a user survey revealed that researchers want to publish in high-impact journals (by whatever suitable metric), might this not merely reflect acculturation into old ways of doing things, or the questionable practice, in at least some tenure and promotion evaluations, of emphasizing publication in journals with name cachet (without recognizing that a mere subset of the articles published in a given title can account for a journal’s impact)?

It’s all in the framing of the question. Researchers should be asked not only about their publishing preferences but also about their attitudes toward alternative models of scholarly communication.