Editor’s Note: Today’s post is by Chris Graf. Chris is the Research Integrity Director for Springer Nature. He volunteers as chair of the governance board for the STM Integrity Hub and as a program committee member for the World Conference on Research Integrity, and he was a volunteer Trustee at COPE until 2019.

Professional courtesy mixed with organized skepticism is one way to describe how — in author-mode, peer reviewer-mode, and editor-mode — scientists and researchers (and sometimes people who used to be researchers and who now are professional editors) decide what publishers publish. We call it peer review. And it’s a wonderful, complex, multicolored beast. But can peer review really keep up with what we have grown to expect from it, particularly when it comes to research integrity? I’ll explore that in this post for Peer Review Week 2022, discussing reproducibility and things rather more sinister, with perspectives from a few good colleagues and friends around the world. I’ll consider what our expectations should be, and close with what are for me, and I hope for us all, five reasons to be cheerful about research integrity and peer review.

“Our research system is brilliant and multi-faceted. Of course, research integrity is necessary for good research, and is best achieved when the different players work together to ensure systems, processes and culture are aligned to best effect. Through partnership and collaboration, we can all work together to safeguard integrity.”

— Rachael Gooberman-Hill and Andrew George, Co-Chairs, UK Committee on Research Integrity

Reproducibility

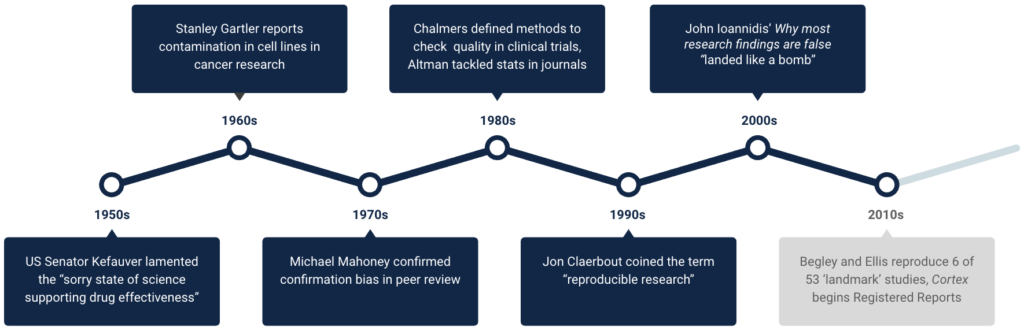

Scientists and researchers have been asking questions about research and how reliable it is since at least the 1950s, with the highest peak coming in the years after 2000, marked by a focus on reproducibility (see the figure). If research integrity to you means reproducibility, then Professor Dorothy Bishop shared what I think are reasons to be cheerful in late 2019. These include newer approaches to peer review that pre-register and focus on research plans (registered reports), and newer ways to share questions and concerns after publication (Twitter, PubPeer).

Since then, evidence has been building that registered reports make a difference. But, as yet, registered reports and their cousin ‘pre-registration’ lack universal appeal. This particular innovation in peer review is a good but, for now, partial and limited solution, and perhaps it will remain that way. So we should also look to other initiatives, like those promoted by the Reproducibility Networks emerging around the world, and to answers from research into research integrity, such as the work presented at the World Conferences on Research Integrity.

“I see framing the mindset of researchers for quality and reproducible science must start at the earliest opportunity, with ‘Peer-review’ at every level of a project, and many initiatives are working towards this. Catching dubious manuscripts at or post publication will help. We must be careful though, to use post publication peer-review wisely as there are unintentional mistakes made by good researchers. With fancy technologies and alarm bells ringing, it is still an uphill battle with increasing retraction numbers.”

— Danny Chan, Director for Development and Education of Research Integrity, Hong Kong University

Something more sinister

Perhaps, instead of reproducibility, research integrity to you means something more sinister, like the consequences of paper mills and research misconduct. If so, then you’re in the esteemed company of the US House of Representatives Committee on Science, Space, and Technology and the members of its Subcommittee on Investigations and Oversight, who joined the hearing on paper mills and research misconduct earlier this year. Both a recording and a transcript of the hearing are available. I was there as a witness, alongside Drs Jennifer Byrne and Brandon Stell.

Reflecting on Professor Bishop’s second reason to be cheerful, I’m also enthusiastic when readers (a.k.a. peers) share questions with publishers and notify us of concerns after publication. (If you’re a reader who does that, then thank you, sincerely: Elisabeth Bik, Cheshire, Smut Clyde/David Bimler, TigerBB8, Morty, and many others.) These notifications can and do include assertions that paper mills are at work. Sometimes, too, they are milder and relatively simple to resolve with authors.

“Review by peers remains critical for curating data that is added to the existing body of knowledge. Journal peer review alone is insufficient for research integrity. The gaps in the process are revealed by post-publication reviews that identify errors. Preprint review offers the advantage of allowing a larger group of people to comment on the data along with free access.”

— Sandhya P. Koushika, Associate Professor, DBS-TIFR, Mumbai

At Springer Nature, like many publishers, we have a great team of people working on research integrity concerns raised after publication. We currently resolve around 100 integrity cases per month, but cases are growing 15% year on year. Paper mill concerns can add significantly to this work, because a single notification claiming to have spotted a paper mill may cover multiple papers. Again, it seems that peer review as it currently works isn’t enough to reliably prevent the publication of papers with integrity problems.

Expectations

Maybe we should ask whether the expectations of peer review are realistic, if they include expectations to “detect fraudulent and erroneous research” or to effectively address concerns about reproducibility. Historically just four in 10,000 papers are retracted for serious and unaddressable problems. That seems to me like an indicator of significant and successful investments in quality made first by researchers and then by publishers. Or maybe those expectations speak directly to the value that researchers, and the other people who use our content and services, need publishers to add now and to get better at. Perhaps researchers want us to augment peer review, so a new combination of efforts more effectively prevents concerning papers from being published, more efficiently resolves concerns that arise after publication, and more actively nurtures emerging research practices that help good researchers present and share their work with integrity and impact.

“The core business of publishers and journals is quality control. Sadly at most 10% of fraudulent or fatally flawed publications are retracted and often only after a long delay.”

— Lex Bouter, Professor of Methodology and Integrity, and Chair of the World Conferences of Research Integrity Foundation

Reasons to be cheerful

I see smart publishers, technologists, and other stakeholders working on exactly these things. And this gives me, and I hope us all, five reasons to be cheerful about research integrity and peer review this Peer Review Week.

Reason #1, Foundations

Publishers are continuing to invest in screening, including improvements to routine checks for plagiarism as well as for other indicators of ethics and quality (like disclosures of conflicts of interest, description of ethics committee approval and funding sources).

Reason #2, Extensions

Publishers are beginning to roll out screening for newer challenges, like concerning images, which may result from a data-management error, naive efforts to make images look presentable, or manipulations with an intent to mislead. I also see researchers creating new knowledge and insights to inform future extensions to those screening initiatives (see also #4 below).

Both #1 and #2 require continued investments in a mixture of technology and, perhaps more importantly, the people to use it. And I see publishers making those investments. They’re giving authors of good research careful feedback on how to improve their papers before publication. They’re also avoiding publishing research that is beyond correction.

“Technology is increasingly important in research integrity — if only because it is more and more deployed in manipulation and malpractice. By pooling resources, knowledge and experiences across publishers and submission systems, and by developing training sets and resources allowing submissions to be screened consistently and thoroughly, technology can play a vital role in preventing unsound material from entering the scholarly record without burdening the peer reviewers. The STM Integrity Hub was launched to achieve exactly that. And technology thrives on collaboration.”

— Joris van Rossum, Integrity director, STM Association

Reason #3, Processes

Publishers, together with other stakeholders, are developing and sharing resources about managing integrity concerns largely after publication — from honest but fundamental mistakes, through to systematic manipulations. We need these shared, standardized ways to work. We need to update existing processes for newer uses and situations so they can work at the scale of the paper mills.

Reason #4, Infrastructure

The publishing sector is embracing collaboration, as shown by the 23 publishing organizations now participating in the STM Integrity Hub. The people working on this Hub will set the agenda for shared infrastructure to address newer threats, like paper mills. It’s early days in that collaboration; if you or your organization are interested, there is a form you can fill in to get involved.

Reason #5, Coalitions

I see initiatives that challenge us to go to ‘the source.’ The integrity challenges we deal with as publishers begin far ‘upstream,’ where research is done. We can do more, and we are doing more (see #1 to #4). But other actors also have a responsibility, namely the organizations that employ, fund, and set policy for research and researchers. That’s where the solution lies: in a broad coalition of those who are able to act and make change happen. COPE is building exactly this kind of coalition with universities and research institutions, alongside its existing members.

“Publishers cannot solve these problems alone. There needs to be collaborative dialogue with universities, journals and funders to have a greater impact on integrity in research. Working together we can raise the profile of integrity of the published record, collaborating on guidance and advice to support better ethical behavior.”

— Dan Kulp, COPE Chair

Whatever your favorite color from the many multicolored models for peer review, the way we do research publishing is founded on professional courtesy, mixed with some organized skepticism, extended by researchers to other researchers — whether they are authors, peer reviewers, or editors. That’s a good thing. It really does put the communities we serve at the center of what we do. It puts members of those communities in charge of what we publish. And we can do more. We can create integrity checks to run alongside that professional courtesy and organized skepticism. We can augment the feedback given to authors by researchers operating in peer reviewer-mode. We can augment the decisions made by researchers operating in editor-mode. We can better address questions and concerns raised after publication.

There’s lots to be cheerful about this Peer Review Week. Peer review, we salute you.

Discussion

2 Thoughts on "Guest Post — Peer Review and Research Integrity: Five Reasons to be Cheerful"

Thank you for this post and your work with the STM Integrity Hub. Your first paragraph reflects the mostly positive experiences I’ve had as an author, reviewer, and AE.

A question on addressing problematic papers: could you say something about the purview of EICs versus the Springer Nature research integrity team? There have been SN EICs quoted in Retraction Watch saying, in effect, “not my responsibility to investigate, that’s for the publisher to resolve.” In contrast, at society-run journals it seems mostly to land in the EIC’s lap. Could you say something about your team’s research integrity review approach, especially if there’s inaction from the institution?

Great article, Chris! Thank you for putting RI within this broad, multi-institutional perspective. It really will take a village to curb the behavior that has become increasingly visible over recent years.