Editor’s Note: Today’s post is by Christos Petrou, founder and Chief Analyst at Scholarly Intelligence. Christos is a former analyst of the Web of Science Group at Clarivate and the Open Access portfolio at Springer Nature. A geneticist by training, he previously worked in agriculture and as a consultant for Kearney, and he holds an MBA from INSEAD.

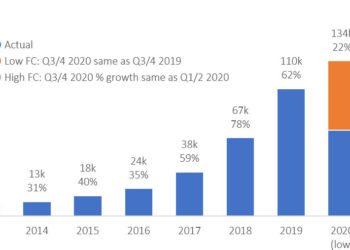

Until recently, MDPI and Frontiers were known for their meteoric rise. At one point, powered by the Guest Editor model, the two publishers combined for about 500,000 papers (annualized), which translated into nearly USD $1,000,000,000 annual revenue. Their growth was extraordinary, but so has been their contraction. MDPI has declined by 27% and Frontiers by 36% in comparison to their peak.

Despite their slowdown, MDPI and Frontiers have become an integral part of the modern publishing establishment. Their success reveals that their novel offering resonates with thousands of researchers. Their turbulent performance, however, shows that their publishing model is subject to risk, and its implementation should acknowledge and mitigate such risk.

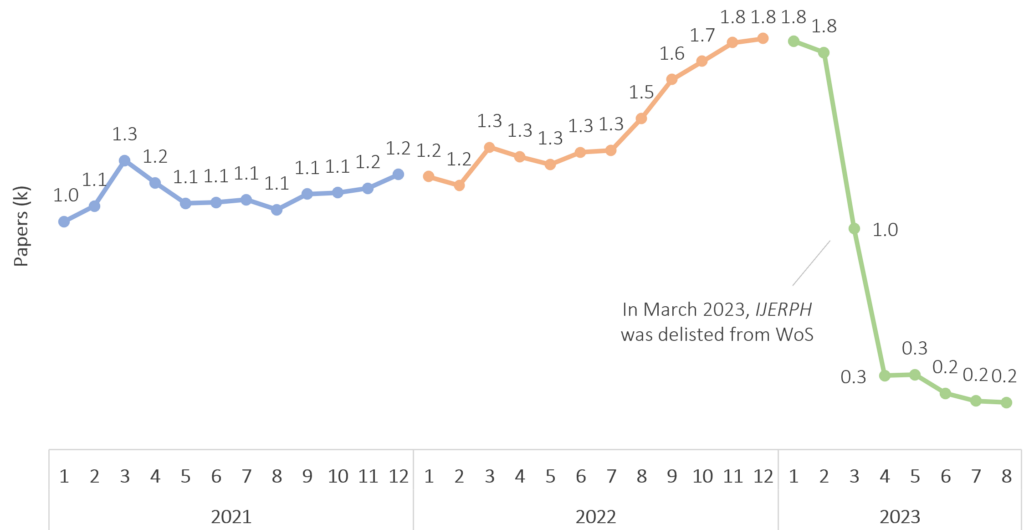

IJERPH’s freefall

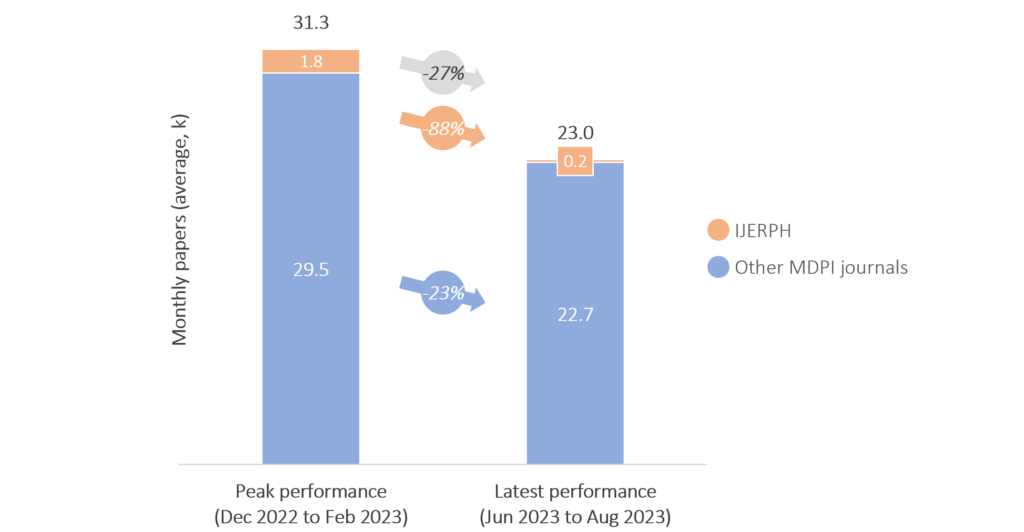

MDPI’s largest journal, the International Journal of Environmental Research and Public Health (IJERPH), was delisted from the Web of Science (WoS) in March 2023. What came next was both expected and staggering. The journal shrank by 88% in comparison to its peak. It published about 1,800 monthly papers in the three-month period from December 2022 to February 2023, and it dropped to about 200 monthly papers in summer 2023.
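For readers who want to check the arithmetic, here is a minimal sketch of the decline-from-peak calculation using the rounded monthly counts quoted above. The function and variable names are illustrative, not taken from the underlying dataset. Because the inputs are rounded ("about 1,800" and "about 200"), the result lands near 89% rather than exactly on the 88% quoted, which is consistent with the approximate figures.

```python
def decline_from_peak(peak: float, current: float) -> float:
    """Return the percentage decline from a peak value to a current value."""
    if peak <= 0:
        raise ValueError("peak must be positive")
    return (peak - current) / peak * 100.0


# Approximate monthly paper counts quoted in the text (rounded figures).
ijerph_peak_monthly = 1_800    # average for December 2022 to February 2023
ijerph_summer_monthly = 200    # summer 2023

ijerph_decline = decline_from_peak(ijerph_peak_monthly, ijerph_summer_monthly)
print(f"IJERPH decline from peak: {ijerph_decline:.1f}%")
```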

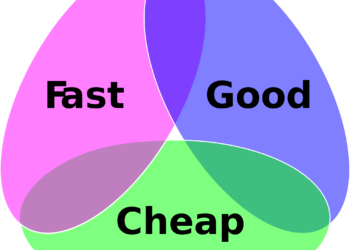

IJERPH’s decline is a stark reminder that in the absence of an Impact Factor, other journal traits matter little. Fast publishing, a high acceptance rate, and a low APC are unattractive to authors if they are not accompanied by a good (or in some cases, any) Impact Factor and ranking.

Contagion, silos, and valuations

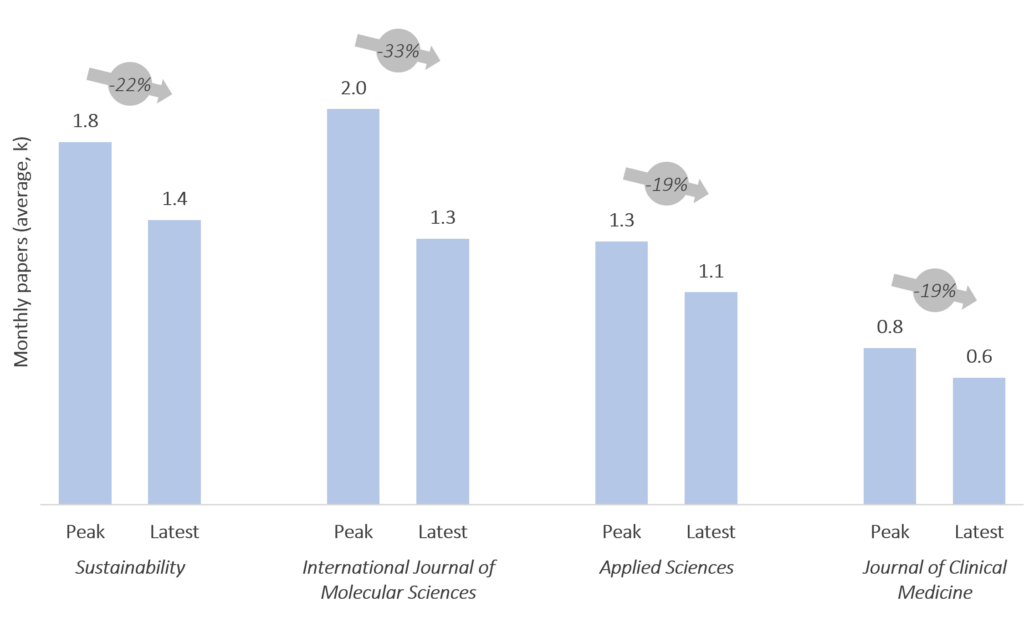

While the demise of IJERPH was not surprising, the implications for MDPI’s broader portfolio were harder to foresee. A month after IJERPH’s delisting, the rest of MDPI’s journals declined by 17%, from 28,500 papers in March to 23,500 in May. Since then, they have published fewer papers in every month, dropping to 22,500 papers in August.
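The three data points above can be turned into step-by-step changes with a short sketch. It uses only the monthly counts quoted in the text for MDPI's journals excluding IJERPH; the helper name `step_changes` is hypothetical, and the intermediate months are not given in the post, so they are not filled in.

```python
# Monthly paper counts for MDPI excluding IJERPH, as quoted in the text (2023).
monthly_papers = [("March", 28_500), ("May", 23_500), ("August", 22_500)]


def step_changes(series):
    """Return (from_label, to_label, percent_change) for consecutive points."""
    changes = []
    for (prev_label, prev), (label, cur) in zip(series, series[1:]):
        changes.append((prev_label, label, (cur - prev) / prev * 100.0))
    return changes


changes = step_changes(monthly_papers)
for start, end, pct in changes:
    print(f"{start} -> {end}: {pct:+.1f}%")

# Overall change from the first to the last quoted month.
first, last = monthly_papers[0][1], monthly_papers[-1][1]
overall_change = (last - first) / first * 100.0
print(f"March -> August overall: {overall_change:+.1f}%")
```

On these figures, most of the drop happens between March and May, with a much smaller further decline by August.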

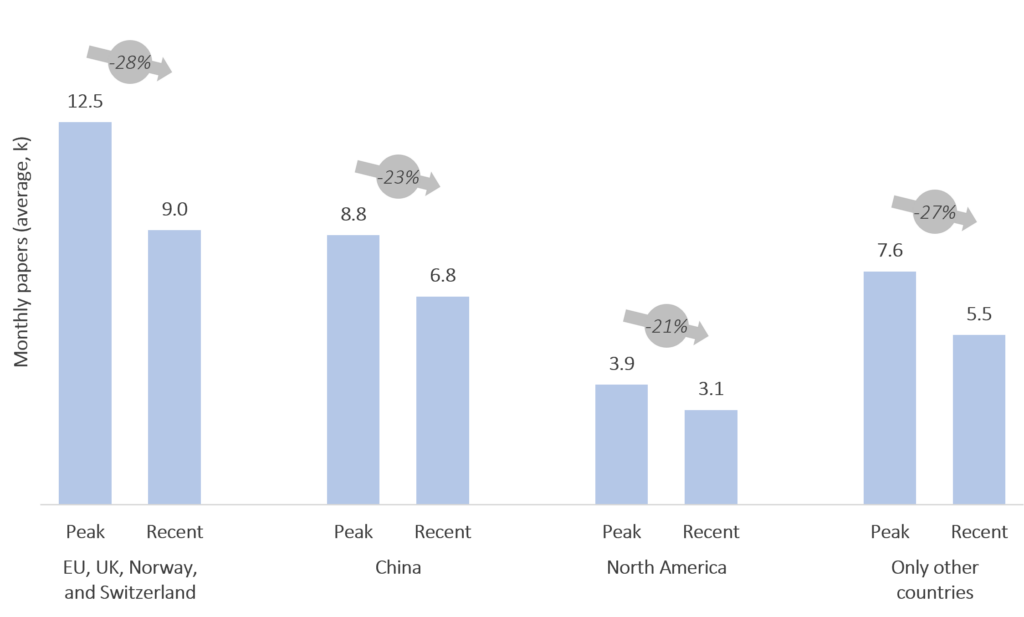

Including IJERPH, MDPI declined by 27% in summer 2023 in comparison to its peak. Summer 2023 also marks the first year-on-year decline for MDPI (5%) in a long time. In addition, the decline has been similar across regions and paper types (Guest Edited papers and regular papers).

When asked, MDPI’s CEO, Stefan Tochev, suggested that ‘while there was a drop in publication numbers in April… during the following months MDPI submissions and publications have been steady’. Stefan also mentioned that MDPI has ‘implemented methods to improve the relevance of publications’. This should reduce the risk that another journal is delisted for being out of scope, as in the case of IJERPH. Given the currently accessible information, discerning the impact of author flight versus the impact of the new editorial policy on MDPI’s slowdown is a challenge. However, given the interchangeability of MDPI’s journals, it seems improbable that the new policy has significantly contributed to the slowdown.

The contagion across the MDPI portfolio reflects a source of risk that is unique to publishers with relatively uniform portfolios. When all journals are similarly branded and share the same editorial and operational policies, a journal’s reputation reflects the reputation of the entire portfolio and vice versa. Trouble in one journal may be viewed negatively by authors of other journals. If IJERPH got delisted by WoS, is there any guarantee that other journals will not have the same fate? Does the perceived reduction in IJERPH’s reputation translate to a similar reputational drop for MDPI’s other journals?

It is unlikely that the same extent of contagion would be observed in other large publishers, such as Elsevier and Springer Nature. Their multiple, uniquely branded portfolios and journals can act as silos in case of reputational challenges in one or more journals. A problem in a Cell-branded journal would not likely have much impact on the perception of a journal from The Lancet, nor would an issue with a BioMed Central journal hurt the reputation of a Nature Portfolio title. Such silos pose other challenges for traditional publishers, as for example, it becomes more difficult to implement policies and programs across their portfolios, but at least they limit the damage from reputational issues.

This raises interesting questions about the financial valuation of uniform, fully-OA publishers. The APC OA business model is publication volume-driven, so a drop in papers published means an immediate drop in revenue. Further, uniform portfolio publishers may be at higher risk for reputational damage done by one journal than diverse, traditional publishers. If your profit is less reliable and is subject to the whims of indexing services, then there may be less value presented than the better protected profits of diverse portfolios. Elsevier and Springer Nature can predict their income in the coming 3-4 years with high accuracy. MDPI could get it wrong by year’s end.

There is also a discussion to be had about the capacity of indexing services to wipe out more than $1,000,000,000 of an organization’s market cap (a figure arrived at using the price that Wiley paid for Hindawi as a yardstick for MDPI). Such consequential decisions require transparent and fit-for-purpose decision mechanisms from indexing services and their metrics.

Reputation comes knocking

When I first wrote about MDPI in summer 2020, their reputation was not stellar, but it was improving. It would take several paragraphs to list all the reputational challenges that MDPI has faced since then. They have encountered institutional challenges, such as the inclusion of several of their titles in the Warning List by the Chinese Academy of Sciences. And they have faced a steady stream of negative press in the form of typically poorly researched blog posts and scholarly articles.

However, nothing seemed to stick. Up until the moment of IJERPH’s delisting, MDPI was successful despite the attacks to its reputation. Its annual growth was about 35% in the months before the delisting, and the publisher was on track to exceed 350,000 papers in 2023, bringing them close to the size of the second largest publisher, Springer Nature.

But now it looks as if questions about MDPI’s reputation have begun to catch up with it. First, the delisting of IJERPH is likely a consequence of MDPI’s reputation. It is possible that WoS would not have taken such aggressive action if MDPI were viewed in a better light. Then, the wide contagion across the MDPI portfolio is also likely a consequence of the publisher’s reputation. It is possible that authors would have treated the delisting of IJERPH as a one-off event if MDPI were viewed with less skepticism across the scholarly world.

MDPI does not deserve the entirety of its poor reputation, but it has not been proactive in its defense. When MDPI reacts to negative developments, it appears unconvincing. For example, their response to a paper by Oviedo-García that discussed self-citation rates of MDPI’s journals included a chart that showed MDPI standing out for its intra-publisher self-citation rate, in essence supporting the author’s argument (the article has since been retracted and revised). Meanwhile, MDPI’s self-citation rates are high but unspectacular, and certainly not indicators of predatory behavior.

MDPI’s poor reputation is partly the result of inattentiveness. And if papermills are ever discovered to have operated at MDPI en masse, the root cause will likely be the same, namely inattentiveness.

What does this mean for MDPI’s future performance? There are two extreme scenarios that can combine in various ways. The bad scenario is a vicious circle of declining reputation and administrative action against MDPI that leads to declining paper output. Having previously benefitted from a virtuous cycle of improving reputation, MDPI will witness the other side of the coin.

The good scenario is a brand refresh that combines with the short memories of forgiving academics and the ranking of several MDPI journals in next year’s Impact Factor release. This could place MDPI back on the growth track.

In any case, MDPI’s performance in the short-term will be beyond its control. No matter how hard it works to improve its reputation, it will not be able to stop another delisting from a major index or a regional anti-MDPI policy. Meanwhile, it appears to be temporarily stabilizing just above 250k annual papers.

Frontiers, same but different

As with MDPI, Frontiers is a fully-OA publisher that is based in Switzerland, has a uniform portfolio, relies on the Guest Editor publishing model, and grew phenomenally up until 2022. There are some differences though. For example, Frontiers publishes papers fast, but slower than MDPI, it has a higher reliance on the Chinese market than MDPI, it charges higher APCs than MDPI, and its journals are slightly better ranked than those of MDPI.

Another difference between MDPI and Frontiers has to do with their reputation. While MDPI is not in good control of the narrative, Frontiers has a tighter grip on it. For example, they highlight, at seemingly every possible opportunity, that they are the 3rd most-cited among large publishers (which is true in some ways, but not in every way), and they run a series of reputation-building initiatives such as the Frontiers Forum, Policy Labs, and Frontiers for Young Minds.

Instead of getting caught in unflattering online exchanges, Frontiers’ founder, Kamila Markram, has received a series of glamorous awards. And in Frontiers’ flagship event in 2023, the Frontiers Forum Live, the line-up of speakers included former secretary-general of the United Nations Ban Ki-moon, historian and author Yuval Noah Harari, primatologist Jane Goodall, and former vice president of the United States Al Gore. Such an event might not be to everyone’s taste, but it certainly makes for good PR.

In the face of clearcut misconduct, WoS would not hesitate to penalize the journals of any publisher no matter their reputation. But it is possible that for less egregious misconduct, such as a journal publishing papers out of scope, a publisher with the reputation of Frontiers would be given the benefit of the doubt, when a publisher with the reputation of MDPI was not.

The plot thickens

Given the similarities between the two publishers, one would expect Frontiers to benefit from the slowdown of MDPI. Instead, Frontiers has experienced a protracted slowdown of its own, starting in October 2022. While the trigger of the contraction of MDPI (and Hindawi) is easy to pinpoint, the thread related to Frontiers’ contraction is harder to untangle.

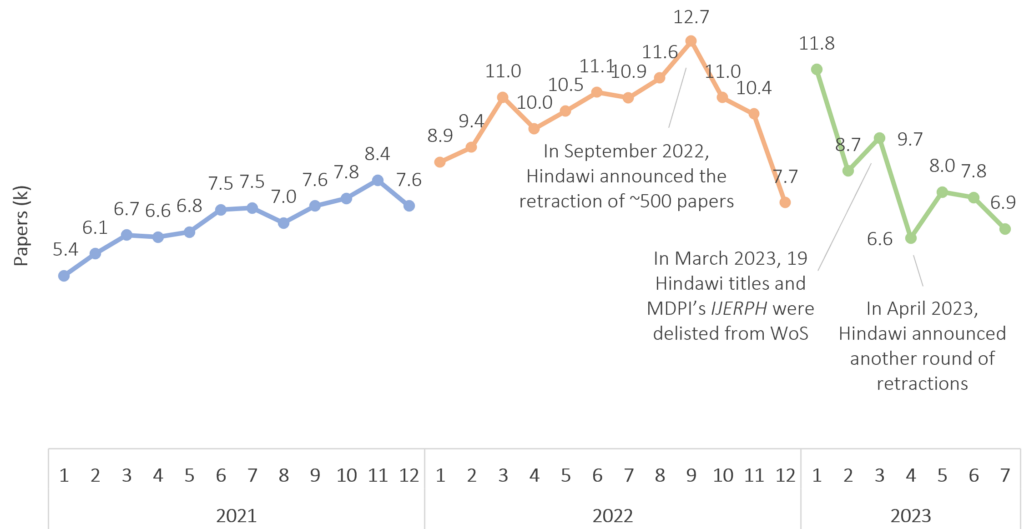

In September 2022, news broke that Hindawi planned to retract about 500 papers in 16 journals. Hindawi’s announcement was followed by an initial drop of Frontiers’ paper output. Hindawi’s first round of retractions was followed by more retractions and the delisting of 19 of its journals from WoS in March 2023, when MDPI’s IJERPH also got delisted. This was followed by another drop of Frontiers’ paper output (6,600 papers in April 2023 was its lowest output in 26 months). In the three-month period from May to July 2023, Frontiers’ paper output was 36% lower than its peak in Q3 2022.

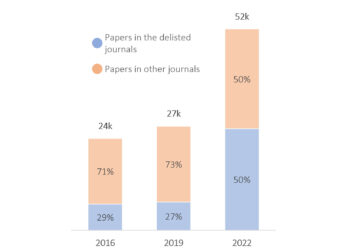

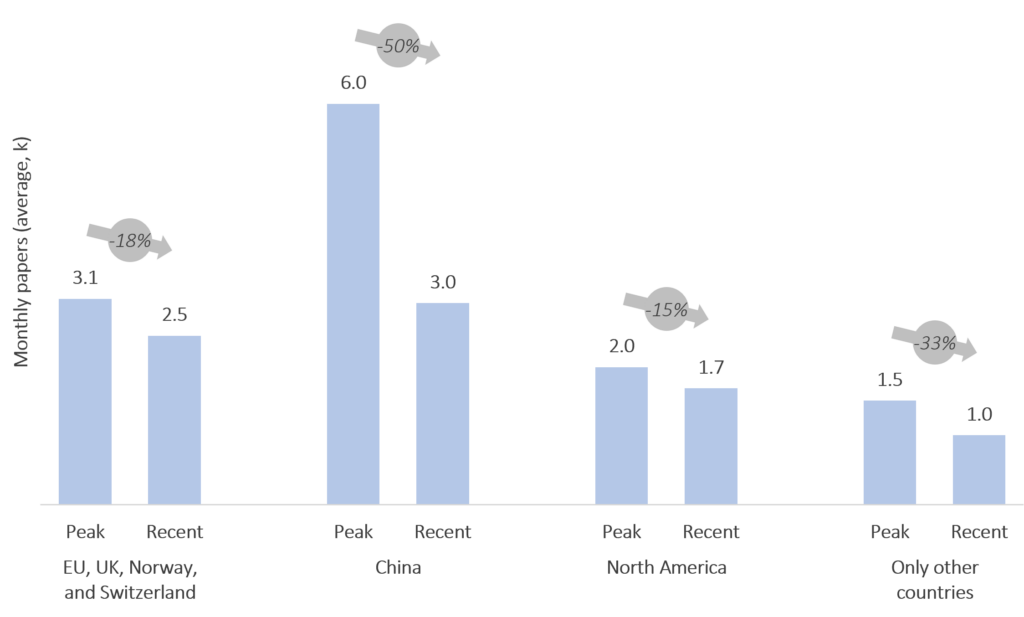

Hindawi and Frontiers had something in common before the retraction news surfaced: the wholesale application of Guest Edited collections and a high exposure to papers from China, where perverse incentives have resulted in papermill activity. Much of Frontiers’ recent growth had come from that region; at the time of the announcement, about half of Frontiers’ papers had authors based in China. The retractions at Hindawi might have made Frontiers uneasy. Frontiers’ ensuing slowdown was the steepest in China, as the country’s paper contribution dropped by half.

The timeline of events suggests that Frontiers self-moderated its paper output by applying additional checks and stricter editorial criteria. When its paper output first declined by 17% from Q3 to Q4 2022, the output of MDPI grew by 19%, suggesting that Hindawi’s retractions did not deter authors from the fully-OA route, and the contraction at Frontiers was the publisher’s choice. While the contraction at MDPI has been geographically agnostic, the contraction at Frontiers has been highest in China, where it had an unhealthy exposure, again indicating a choice rather than market dynamics. Finally, the contraction of Frontiers has been steeper than that of MDPI, despite Frontiers not facing a direct reputational challenge as MDPI did, pointing once more at a self-moderation choice.

When asked, Tom Ciavarella, Head of Public Affairs and Advocacy, North America for Frontiers, shared a statement that largely aligns with the conclusions of the analysis. ‘The publishing market has been cooling since 2021 and there will be many reasons for that, not least the economic overhang of the pandemic. In that context, all research publishers are seeing slower article submissions. At the same time, the threat of fraudulent science is growing, exploiting goodwill and eroding trust, so we have responded with even tighter thresholds for acceptance and we are rejecting a higher proportion of papers than ever.’

Controlling the narrative

Why would Frontiers moderate its paper output? The publisher is likely focusing on its brand, sacrificing short-term profitability to stay in control of the narrative. It has taken the time to pursue the right editorial action to protect its reputation and eventually return to growth.

We might already be seeing the fruit of this intervention. In a recent post, Frontiers announced the retraction of 38 papers linked to the practice of authorship-for-sale. Such schemes rely on authorship changes after submission. In response, Frontiers announced policy changes, whereby ‘requests for authorship changes will only be granted under exceptional circumstances’.

It remains to be seen whether these retractions conclude a period of introspection or whether they are the pebble that starts a landslide as in the case of Hindawi. Either way, this is in line with Frontiers attempting to stay in control of the narrative. As their statement concluded, ‘like any business, scientific publishers live and die by their reputation, and any publisher that prioritizes profit over their customers is likely to fail’.

Meanwhile, authorship-for-sale papers have been flagged at MDPI since September 2021, but MDPI does not appear to have taken action in a timely manner to the frustration of the researchers that flagged the papers in the first place.

The unholy grail of publishing

The publishing model of MDPI and Frontiers has resonated with thousands of researchers. The two publishers offer a high likelihood of acceptance and speedy publication in well-ranked journals, typically coupled with peer recognition (and pressure) when publishing in guest-edited collections.

MDPI and Frontiers are not the ideal destination for every paper of every author, but at one point, they combined for about 500,000 papers (annualized), while growing at a rapid pace. This translates into nearly $1,000,000,000 annual revenue, powered by papers in Guest Edited collections.
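As a rough illustration of why volume matters so much under the APC model, the two headline figures imply an average revenue per paper. The sketch below uses only the rounded numbers quoted above; since revenue is "nearly" $1 billion, the result is an approximate upper bound. The 10% volume drop is a hypothetical scenario for illustration, not a figure from this analysis, and it assumes revenue per paper stays constant.

```python
# Rounded figures quoted in the post (revenue is "nearly" this amount).
combined_papers_annualized = 500_000
combined_revenue_usd = 1_000_000_000


def revenue_per_paper(revenue_usd: float, papers: int) -> float:
    """Average revenue per published paper."""
    if papers <= 0:
        raise ValueError("papers must be positive")
    return revenue_usd / papers


implied_per_paper = revenue_per_paper(combined_revenue_usd, combined_papers_annualized)
print(f"Implied average revenue per paper: ${implied_per_paper:,.0f}")

# Hypothetical scenario (assumption, not from the post): a 10% drop in volume,
# with revenue per paper held constant.
hypothetical_drop = 0.10
reduced_papers = round(combined_papers_annualized * (1 - hypothetical_drop))
reduced_revenue = implied_per_paper * reduced_papers
print(f"Revenue after a 10% volume drop: ${reduced_revenue:,.0f}")
```

Under these simplifying assumptions, revenue falls in direct proportion to paper output, which is the sense in which the model is volume-driven.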

Although the Guest Editor model is associated with high reputational risk and unpredictable performance, publishers will not let an opportunity of this size drop. Instead, they will keep tweaking the model until they find the right formula. By the time that one or more publishers achieve sustainable success, a few will have tapped out.

There are several contenders facing a diverse array of challenges. MDPI will have to prove that it is adaptable and not a one-trick pony. Frontiers will have to prove that it did not inadvertently expose itself to unmanageable risk before tweaking its editorial policies. And newcomers to this publishing model, such as Springer Nature’s Discover series and Taylor & Francis’ Elevate series, will have to learn from the lessons at Hindawi, MDPI, and Frontiers before pursuing the model at scale.

Discussion

41 Thoughts on "Guest Post — Reputation and Publication Volume at MDPI and Frontiers"

My researcher colleagues and I find one aspect of the workings of these publishers particularly annoying and a real put-off, and that is the stream of invites to edit a journal, edit a guest issue or write a paper when the discipline mentioned in the invite is not one in which we publish. Clearly we are in databases that are not looked at by an expert human being who knows their field. I guess it costs to involve such people but I suggest that it is a cost that a publisher seeking reputation should incur.

Hello Anthony, I think this is one of the key considerations when it comes to the sustainability of the model. Publishers will have to find the right balance between over-inviting (and annoying) researchers and under-inviting researchers at the expense of operations and publishing speed. As you mention, they also need to improve the accuracy of their invitations. There are too many anecdotes of poorly targeted invitations.

One of the other questions about sustainability of reputation is Frontiers’ reliance on massive quantities of review articles:

https://www.ce-strategy.com/the-brief/progress/#1

“Frontiers has published just over 464,000 articles (all article types) since 2007: 20% are classified as “review”, “opinion”, “mini review” or “perspective”; and, in fact, only 64% of Frontiers’ output is classified as “original research” according to the metrics provided on their website. Review articles are cited more frequently than original research papers, which helps to boost the average citations per paper.”

Is there a point where the market gets saturated with review articles and authors are less inclined to write them (particularly for a paid APC journal)?

Maybe you could ask the publisher of the entire Annual Reviews series that? They seem to be doing fine. https://www.annualreviews.org/

I’m not sure it’s an apt comparison given the scale involved. Annual Reviews as a publisher put out around 1,389 articles last year. Frontiers published 126,430, and if 20% were reviews, then that means 25,286 review articles. It’s also worth considering that Annual Reviews operates on a subscription business model (S2O) where quality of articles and appealing to readers is paramount for financial reward, whereas Frontiers relies on the APC business model which is volume-based.

If my memory serves me right, MDPI also had way too many reviews. I guess it becomes unsustainable after a high publication volume.

Great post!

I doubt it explains entirely what you’re seeing here, but I guess one possible reason why publication *rates* are decreasing globally might be slower peer-review https://medium.com/@clearskiesadam/is-science-getting-slower-31fac0bbe998

Hope that’s of interest!

Thank you, Adam. To the best of my knowledge, speed is not a factor in this case. It would not explain more than a couple of percentage points of the contraction of either publisher in the analysis.

Yes, that sounds right. Interesting, thanks!

Here is something that has just come in by email: I should add that although I was for much of my working life a scientific publisher my research is now in information science and I was originally an ecclesiastical publisher. How did they get my name?

— Original Message ——

From: “Bioanalytical Methods Editor”

To: anthony.watkinson@btinternet.com

Sent: Monday, 18 Sep, 2023 At 13:50

Subject: Hi Anthony Watkinson, Ready to submit your research?

Dear Anthony Watkinson

Good day. I hope this email finds you well.

The Journal of Bioanalytical Methods and Techniques is a multidisciplinary, peer-reviewed, scholarly refereed e-journal that is published internationally.

It publishes scientific, academic research papers and articles in the field of Bioanalytical Methods and Bioanalytical Techniques. It accepts and publishes high-quality authentic and innovative research papers, reviews, short remarks, case studies, and communications on various topics covering frontier topics in the field. The Journal accepts papers/manuscripts that meet the standard criteria of importance and scientific excellence for submission.

We respectfully invite you to submit your unpublished manuscript for possible inclusion in the upcoming issue, Volume 3 Issue 1, of our prestigious journal.

You can submit your manuscript as an email attachment to this email.

Kindly inform me if you and your colleagues have an interest in submitting a manuscript for the upcoming issue.

You are welcome to distribute this paper submission invitation within your scientific community.

Eagerly awaiting your response.

Regards

Francis Barin | Editorial Assistant

Journal of Bioanalytical Methods and Techniques

Facebook: @annexopenaccess

Twitter: @annexopenaccess

———————————

#9587 Nittany, Dr Apt 103

Manassas, Virginia 20110, USA

one possibility is they got your name by searching scopus / pubmed / wos for keywords like ‘covid’, ‘pandemic’, ‘medical’, etc. which surfaced a few of your recent papers — if you’ve got your contact info listed in any of those, your email got picked up that way

another one is they crawled publisher websites / preprint servers for those same keywords, which surfaced e.g. https://advance.sagepub.com/articles/preprint/Insights_into_the_impact_of_the_pandemic_on_early_career_researchers_the_case_of_remote_teaching/16870627/1 — if all your coauthors received the same invitation this paper is the likely vector

some publishers will put more effort in to filter the results thus making their invitations more targeted; others — like the one that contacted you — will put in less effort, send out mass invitations and call it a day.

Thanks Sasa. My email is easy to find on the web but what surprised me was Bioanalytical Methods and Bioanalytical Techniques. Why?

Anthony

they likely weren’t looking for you specifically; whoever manages the journal ran a query as described above, and your email got picked up along with thousands of others so you got an invitation

it’s like myself getting regular invitations to submit my work from SAGE, and i never even published anything. my email is somewhere in their database since ’07 when they had a month of free access to their catalog and i signed up. i’ve been getting invitations to submit to their various journals to this day (well up to 2023-09-20 to be precise)… hundreds if not thousands of invitations + tons of other ads and promo messages

it doesn’t matter that never during those 16 years have i ever submitted anything to them nor published anything at all in any field — they’ll happily continue sending me invitations and i’ll happily continue ignoring them

why? they’re trying to make money and it obviously costs them very little to cast a wide net

it’s just how publishers operate in this OA era

Our Web of Science quality criteria (https://clarivate.com/products/scientific-and-academic-research/research-discovery-and-workflow-solutions/webofscience-platform/web-of-science-core-collection/editorial-selection-process/editorial-selection-process/) are transparent, and all decisions are based on observable output and characteristics of a journal. We do not base our decisions on publisher reputation.

The quality of the content we accept into the Web of Science Core Collection is paramount to us. The responsibility for protecting the integrity of the scholarly record is shared by all those involved in the creation, delivery, and assessment of academic literature, and we take our part in that collective responsibility very seriously.

Anna Treadway

Director, Publication Selection Strategy, Web of Science

This is a reassuring intervention in the discussion. I was worried about the tone of the original article where all the emphasis was on “reputation” rather than scientific or scholarly quality. Indeed, I don’t think I saw the term quality mentioned once. I would hate to think that the scientific and scholarly community was now trading in reputation rather than the quality of what’s being published, whether it adds value and whether it is worth reading. Furthermore, the original post refers to a journal being delisted subject to the “whim” of an external quality regulator/body. One assumes that this term misses the mark. I have always assumed that what we are trying to achieve in scientific and scholarly publishing is quality outcomes as judged according to various reasonably objective and agreed upon criteria, of which none include reputation or whim.

I think there’s at least a perception that there’s a lack of systematic rules and equal application of those rules in many aspects of the indexing and abstracting and metrics services. Christos wrote about the lack of transparency, for example, around the delisting of IJERPH (https://scholarlykitchen.sspnet.org/2023/03/30/guest-post-of-special-issues-and-journal-purges/) as did others (https://www.ce-strategy.com/the-brief/not-so-special/#2). It’s never been clear to anyone outside of Clarivate why some journals are rapidly placed into SCIE and get an impact factor (https://scholarlykitchen.sspnet.org/2014/08/21/the-mystery-of-a-partial-impact-factor/) while others remain in seemingly permanent purgatory in the ESCI (https://scholarlykitchen.sspnet.org/2022/07/26/the-end-of-journal-impact-factor-purgatory-and-numbers-to-the-thousandths/). Further questions were raised back in 2017 about the amount of citation manipulation allowable before a journal gets in trouble (quite a bit, apparently https://scholarlykitchen.sspnet.org/2017/05/30/how-much-citation-manipulation-is-acceptable/).

Without transparency, from the outside things appear random or done by “whim”. This stems from both a lack of consistency in behavior and a lack of openly stated rules (and equal application of those rules should we find out what they are).

It’s not just Clarivate though, and one shouldn’t just single them out. For example, one could say much the same thing about qualifying for Medline indexing through the NLM or the super confusing tangle that PubMed has become these days.

Yes WoS does make its criteria explicit, but with quite a degree of hypocrisy I feel.

It is like research in environmental quality: you only find what you measure and your definition of “healthiness” reflects what you have decided to measure or not. It is an open secret that powerful interests weigh in to influence what is measured or not, how, and what the acceptable thresholds are.

I see no reason why the publishing business would escape this commonplace situation.

Journals are considered ‘healthy’ (i.e. an IF is attributed to them) as long as you keep your criteria to a minimum.

As a scientist I have received countless solicitations from citation rings, journals, brokers, ‘colleagues’, etc to indulge in unethical behaviours.

Paper mills and citation rings have been exposed and neither the publishers nor Clarivate budge or seem to notice.

The cancer is growing and we discuss the delisting of ONE journal!

Take any MDPI journal and look at the articles cited 100+ times in one year, that feed the IF; see the % of cites from the past two years in the reference lists; % of self-citations; cites of papers unrelated to the topic; general statements followed by [1-10] and allowing multiple cross-references, etc.

While it might not be so easy to establish a criterion of uncontroversial fraud, Clarivate is clearly not showing much interest in stemming the tide, let alone turning it. After all, they also are a for-profit organization.

Free and open websites are available. Valuable research should be posted there and reviewed by colleagues selected by the team, or comments from other researchers could be invited. Universities must not chase IF rankings. If your research is worthwhile, everyone in your field will know it without a journal tag. In my experience, all journals have confusing review and publishing systems. Much of the positive energy of faculty members is wasted following the rankings and impact factors of journals. Alternatively, each university should have its own fair and free review system on an open website such as ResearchGate, YouTube, or similar sites.

It would be useful to know if the publication fees have risen substantially. For cash-strapped labs, an increase in that fee could have authors looking elsewhere.

MDPI had a massive hike in its baseline APCs about five to six years ago.

Great post, really informative. I was wondering how trustworthy WoS really is. Surely more trustworthy than the publishers, but they could also be favoring some vs others. Many of the analyses of big data like the thousands of articles, millions of citations, could be also slightly partial, or not?

Clearly, MDPI and Frontiers have traded reputational risk and scientific quality for volume and APC-driven profits. The deluge of guest-edited special collections massively outruns the need and serves largely to sate the need to show “productivity” by scientists. We are guilty of feeding the beast, pushed by perverse incentives to publish. It’s a double-edged sword for authors as well as publishers, and is dependent on a Faustian bargain whereby if no one questions the quality of the product or the service then money changes hands and all is well. MDPI had their veneer of respectability punctured by Clarivate calling out the emperor’s obvious lack of underwear and the authors took note. Their paymasters care only for JIF and with that gone, so was the incentive to pay. Frontiers seems to have been caught in the collateral pressure wave but bears responsibility for also treading the tightrope in the race to capture APCs.

It is too easy to point at these particular publishers, though. Many others look all too jealously at the financial returns and want a part of it. The “success” of the high volume publishers has not only been due to their questionable rigour but also their lower APCs and fast turnaround (the latter being related to the former). The simple fact is we publish too much because this is the measure our agencies and universities have settled on to manage their resource process. Hence, the ever rapacious demand for venues to publish in, coupled with a failure to recognize the damage done to scientific progress, leads to a massive literature that is a mile wide and an inch deep.

Instead of trying to artificially manage this industry as if we were making wine (with grand, premiere and autre Crus, aka plonk), perhaps it really is time to look inwards and reassess how scientific progress should be disseminated. Because the current track is either going to lead to vastly more pollution or the public will catch on and question their support of science.

Hear hear. Actually it is within our grasp. Professional and rigorous peer review, professional editors with integrity, discerning readers who only cite quality work … these are, or should be, the hallmarks of science and scholarship doing its work properly and according to acceptable standards. If WoS and other “regulators”/monitors of journal publishing are reflecting those values and standards in their judgements, then all strength to their arm, and the sooner they sort out the questionable publishers the better. Obviously there are blind spots. For example, WoS covers more traditional sciences and does not cover citations in non-journal publications, but I have found Google Scholar an adequate alternative in these respects.

And PlumX also covers citations in social media.

Spot on Jim.

It’s a market and supply responds to demand. But as with any ‘free market’ basic safeguards could be established to restore a degree of sanity in this ‘industry’.

Clarivate is like S&P or other rating agencies that so miserably failed to avert financial crises because they are part of the business they are expected to help regulate.

One side comment: OK, IF is relevant, but the way papers are technically handled also matters to authors. To be a little more precise (a very personal aspect, I must admit): one of my best-thought-out papers (OK, according to me), with a very original approach (ditto), was extremely poorly handled by Frontiers (in Physics), the worst I have ever had. I even gave up after n galley proofs, to the point that I’m somewhat ashamed to mention the paper. So the technical incompetence of “assistant editors” was (is?) a criterion not to be forgotten. I will not go on about new (misinterpreting Clarivate) rules at assistant editor desks at submission time.

All the efforts over decades to find a way for authors to retain their copyright and not hand it over to publishers have been replaced by an open spigot of cash going from researchers and their institutions to the tune of one billion dollars to just two publishers?! Unbelievable.

I recall the history a bit differently. I recall it as “all the efforts” being about making sure that everyone in the world, not just people affiliated with wealthy universities, could read the results of “public” research, and that is what OA is well on its way to accomplishing, at least in some fields. Retaining copyright has been fought for, yes, but was not the primary focus of the OA movement at all. Your understanding of the point of the OA movement may certainly differ from mine.

Agree 100% — the misnamed “rights retention” work has all been about forcing authors to relinquish their rights to the public (instead of to publishers) but is not at all about retaining those rights in any meaningful way.

https://scholarlykitchen.sspnet.org/2020/07/20/coalition-ss-rights-confiscation-strategy-continues/

Quality: I’ve reviewed dozens of articles over the years, earlier for the traditional publishers but more recently for MDPI. I like to think of myself as a rigorous reviewer and overall I reject more than I accept, on the basis of quality of research. Could I discern between submissions to traditional and MDPI journals? Probably not, except that some submissions to MDPI are quite old research which for whatever reason hasn’t been published yet. By the way, I don’t think MDPI journals are particularly cheap to publish in.

A senior colleague and myself (82) recently had our first and bewildering contact with open access, Frontiers in Microbiology. The topic was molecular pathogenesis of bacterial co-infection in COVID-19 patients. The response from an Assistant Editor seemed AI-generated, using standard phrases. For example, the AE had not at all grasped the main point (a new idea supported by data) of the text. Furthermore, the AE found a discussion of, or ideas about, future research into this topic lacking. But that is hard to do because the pandemic is over, as stated by the WHO in May 2023.

We returned a handful of points about the AE’s peculiar text to the Chief Editor. Quick answer: our manuscript did not meet the standards of the journal.

PS

Why are both Open access journals based in Switzerland? The country with an infamous banking system.

It is a doubtful discussion because all journals (not only MDPI and Frontiers ones) could have ups and downs. These phenomena occur in all significant publishers with more than 1000-2000 journals. MDPI (with over 400 Journals) is considered a business with expensive charges. The payments can be compared, observing that those of similar journals are greater than in MDPI – everyone can check and see this reality.

Furthermore, other famous publishers have new personalized logos (e.g., “an informa business,” or “Science+Business Media”). However, for several years, it has been trendy to apply big horse’ glasses on the nose, be careful not to see anything around, and hit MDPI.

In my opinion, it is a mischievous play, leading to putting doubt on the Web of Science authority and stirring up a general storm between the vessels of the scholarly kitchen. And if they all break, who is responsible for buying others, of better quality?

Isn’t this basically the same as what happens to a business which makes all its money from clever SEO tricks when Google changes their ranking system?

Yes, Google shouldn’t have so much power to determine which sites get traffic, but if your whole business model is based on exploiting the difference between what the ranking system is supposed to do vs what it actually does, you’re not exactly a sympathetic victim.

Good piece, thanks.

About this part “Given the currently accessible information, discerning the impact of author flight versus the impact of the new editorial policy on MDPI’s slowdown is a challenge”, I could scrape data from MDPI that give at least partly the answer — it proved possible to disentangle the decline in submissions from the reaction by MDPI by increasing its rejection rate. While it is hard to exactly pin down the relative contributions, it is clear that MDPI did react by quite drastically increasing its rejection rate at some journals — even amid a decrease in submissions.

For instance, the rejection rate at IJERPH jumped from 52% in February 2023 to 67% in March. Sustainability, 53 to 62%. Over all of MDPI, the rejection rate jumped 5 percentage points from February to March, 46 to 51%.

In some months, for some journals, submissions did increase month on month (eg IJMS, June, saw a 2.6% increase in submissions with respect to May), but total published papers still decreased ( -8%) due to an increase in rejection rate (+5 percentage points in June as compared to May).

This could of course in principle be due to random monthly fluctuations in paper quality, but the increase in rejection rate is too substantial and too widespread (nearly universal across MDPI) not to be a deliberate move.
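To make the arithmetic behind these figures explicit, here is a minimal sketch in Python. It assumes that accepted papers equal submissions times (1 minus the rejection rate) and ignores any lag between decision and publication. The function name `accepted_change` is introduced here for illustration only; the rejection rates are the February and March 2023 figures quoted above.

```python
def accepted_change(submission_change, rejection_before, rejection_after):
    """Relative change in accepted papers.

    submission_change: relative change in submissions (0.026 means +2.6%).
    rejection_before / rejection_after: rejection rates as fractions.
    Assumes accepted = submissions * (1 - rejection rate).
    """
    before = 1.0 - rejection_before
    after = (1.0 + submission_change) * (1.0 - rejection_after)
    return after / before - 1.0


# February -> March 2023 rejection rates quoted in the comment above.
quoted_rates = [
    ("IJERPH", 0.52, 0.67),
    ("Sustainability", 0.53, 0.62),
    ("All MDPI", 0.46, 0.51),
]

for name, before, after in quoted_rates:
    # Submissions are held constant to isolate the rejection-rate effect.
    change = accepted_change(0.0, before, after)
    print(f"{name}: {change:+.1%} accepted papers at constant submissions")
```

With submissions held constant, the quoted jumps alone would cut accepted papers by roughly 31% at IJERPH, 19% at Sustainability, and 9% across MDPI, which is why a rise of a few percentage points in the rejection rate is far from negligible.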

Three detailed tables for people interested:

Monthly % change in submitted papers @MDPI:

https://www.dropbox.com/scl/fi/caxdu8sb7bcxioj7satv5/MDPI_submitted_monthly_top25_hires.png?rlkey=l5vrea8prymovs4eqnippzyo2&dl=1

Monthly % change in accepted papers @MDPI:

https://www.dropbox.com/scl/fi/06glvj2kdo3khww3jfv31/MDPI_accepted_monthly_top25.png?rlkey=fbz6m746ooh2s64227l8urrsy&dl=1

Monthly rejection rates @MDPI:

https://www.dropbox.com/scl/fi/l0m2z7hks5cqnckocqgae/MDPI_rejection_rates_top25.png?rlkey=8jp352o8nzl1t5sa80eu8mi1u&dl=1

Thank you, Paolo. That certainly adds to the analysis.

If I am reading the data correctly, submission flight accounts for 70% of the decline in accepted papers. The remaining 30% should indeed be the result of the new editorial criteria by MDPI and potentially the result of flight of their best submissions. The decline in Europe was a bit steeper than that in China, a country that typically faces lower acceptance rates.

I had no idea that it is possible to retrieve submissions from MDPI’s site. Is there a straightforward way to do that?

It is tricky to do the exact correct math given the data, but I think the 30% estimate does the job, then you have variations by journal.
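One way to split a decline between the two causes is a log decomposition, sketched below in Python. This is only an illustration of the idea, not the calculation used for the 70/30 estimate. It assumes accepted = submissions × (1 − rejection rate), so the log change in accepted papers is the sum of the log change in submissions and the log change in the acceptance rate. The function name and the submission counts (1,000 falling to 850) are made up for the example; only the 46% and 51% rejection rates come from the figures quoted earlier in this thread.

```python
import math


def decompose_decline(subs_before, subs_after, rej_before, rej_after):
    """Split a change in accepted papers into two shares that sum to 1.

    Returns (share_from_submissions, share_from_rejection_rate), using
    log(accepted) = log(submissions) + log(1 - rejection rate).
    """
    from_submissions = math.log(subs_after / subs_before)
    from_rejection = math.log((1.0 - rej_after) / (1.0 - rej_before))
    total = from_submissions + from_rejection
    return from_submissions / total, from_rejection / total


# Hypothetical inputs: a 15% drop in submissions (invented for illustration)
# combined with the MDPI-wide rejection rates of 46% and 51% quoted above.
sub_share, rej_share = decompose_decline(1000, 850, 0.46, 0.51)
print(f"submission flight: {sub_share:.0%}, stricter decisions: {rej_share:.0%}")
```

The split depends on the real submission counts, which are not given here, so the printed shares say nothing about MDPI itself. A log split is used because the two effects multiply, which makes the shares additive without an interaction term.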

About rejection rates: it used to be possible, because of a glitch in MDPI’s journal stats web pages. Some years ago MDPI shipped acceptance and rejection numbers per month in the /stats page of each journal, via a plot. Then they discontinued that, but in the source code of the page they still shipped a .JSON with all the data — it was there, just not displayed. This is where the data in the tables comes from.

Recently, we shared our preprint on publication strain (this one: https://arxiv.org/abs/2309.15884 ) with MDPI for comments prior to the release; they were surprised that we knew their rejection rates, so they asked us how we did it. We told them. Now the monthly and yearly rejection rates are no longer present in the .JSON in those stats pages.

Thank you for the clear explanation. I thought I was going mad after spending three hours on the HTML without any data to show for it…

You can still retrieve the very same data using the Internet Archive’s Wayback Machine. It’s slower, but MDPI deleted those data around mid-September, so you can still access them if you use a snapshot from the end of August.
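For anyone who wants to try the snapshot route, here is a minimal Python sketch that asks the Wayback Machine's availability endpoint for the capture closest to a given date. The journal page URL and the timestamp are assumptions for illustration (a /stats page for IJERPH, end of August 2023). The sketch stops at locating the snapshot; how the data is embedded in the archived page source is not shown, since that layout is not documented here.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

WAYBACK_API = "https://archive.org/wayback/available"


def availability_query(page_url, timestamp):
    """Build the availability query; timestamp is YYYYMMDD."""
    return WAYBACK_API + "?" + urlencode({"url": page_url, "timestamp": timestamp})


def closest_snapshot(response):
    """Return the closest archived URL from a parsed response, or None."""
    closest = response.get("archived_snapshots", {}).get("closest")
    if closest and closest.get("available"):
        return closest["url"]
    return None


def find_snapshot(page_url, timestamp):
    """Query the Wayback Machine over the network (not exercised here)."""
    with urlopen(availability_query(page_url, timestamp), timeout=30) as resp:
        return closest_snapshot(json.load(resp))


# Assumed example: the stats page of one journal, end of August 2023.
print(availability_query("https://www.mdpi.com/journal/ijerph/stats", "20230831"))
```

Calling `find_snapshot` with the same arguments returns the archived page address if a capture exists, or `None` if it does not. The page source can then be inspected by hand.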

Hi Christos, Paolo,

As a note as well: Table 1supp1 in the preprint explicitly shows the effect of the Chinese Academy of Sciences early warning list and Clarivate delistings on the submission rates to MDPI. It is cited in the main text to make the point that authority bodies can have significant impacts on publisher success, and their decisions should be made with more transparency.

Thanks for the analysis here Christos! Was obviously of interest to our group as we were dotting i’s and crossing t’s on our own work.

Cheers,

Mark

The paper by Hanson et al is relevant.

https://doi.org/10.48550/arXiv.2309.15884 The strain on scientific publishing

Where do they see exponential growth? It’s a mere power law.