Editor’s Note: Today’s post is by Dror Kolodkin-Gal, a scientist, researcher, and entrepreneur. Full disclosure: Dror is the founder of Proofig AI, a platform dedicated to automated image proofing for scientific integrity.

The prevalence of research integrity issues has become a growing concern in the academic publishing community, particularly in the life sciences. Several studies suggest that image-related issues are becoming more frequent, which over time could reduce the credibility of the scientific literature, lead to costly investigations, and cause reputational damage. As high-profile cases of image integrity problems in scientific papers multiply, the community must consider how to overcome the limitations of manual image review and how AI can help rapidly detect, and potentially prevent, these issues.

The US Office of Research Integrity (ORI), for example, has previously noted a significant increase in the number of allegations involving questionable scientific images. Between 2007 and 2008, the ORI reported that 68 percent of all the research misconduct cases it opened involved image manipulation, compared with approximately 2.5 percent in 1989 to 1990.

The problem persists: in December 2023, Nature reported that the number of retractions issued for research articles that year had surpassed 10,000. According to Jana Christopher, an image data integrity analyst at FEBS Press, 20 to 35 percent of manuscripts ready for publication are flagged for image-related problems. This figure, drawn from expert interviews conducted by the UK Research Integrity Office (UKRIO), points to a significant concern in the life-sciences community, and it is consistent with statistical data from Proofig AI, which specializes in detecting duplications and manipulations in scientific images.

The rising percentages indicate that more researchers may be at risk of being reported for potential image issues in some of their publications. If these issues are reported after publication, publishers and research integrity officers may need to investigate, a process that can take months or even years. Whatever the outcome, researchers, their institutions, and the publishers involved often suffer reputational damage because of the accusations. This could limit a researcher’s (and sometimes even the institution’s) ability to secure funding in the future, while lowering the perceived quality of the journal that published the piece.

Image intentions

Understandably, researchers are often fearful of the reputational damage associated with allegations of fraud or retractions of their papers. Others may believe it will never happen to them because they know they are not including fraudulent information. However, a closer look at allegations and investigations over time shows that only a small portion of issues can be categorized as intentional manipulation.

For example, the American Association for Cancer Research (AACR) ran a trial from January 2021 to May 2022 in which its team used Proofig AI to screen 1,367 papers accepted for publication. Of these, 207 papers required author contact to clear up issues such as mistaken duplications, and only four were withdrawn. In almost all cases (204), there was no evidence of intentional image manipulation; the problems were simply honest mistakes.

This suggests that all researchers should consider how to maintain image integrity and how to uncover any potential issues before publication. So what should principal investigators and editors do to find all these mistakes prior to publication (and ideally, before submission)?

How issues occur

Image issues can occur for several reasons, such as accidentally mislabeling images or capturing overlapping sections of a sample. These intricate details are incredibly difficult to find by eye, particularly when researchers and editors must review hundreds of similar images, which often amounts to hundreds of thousands of comparisons between sub-images.

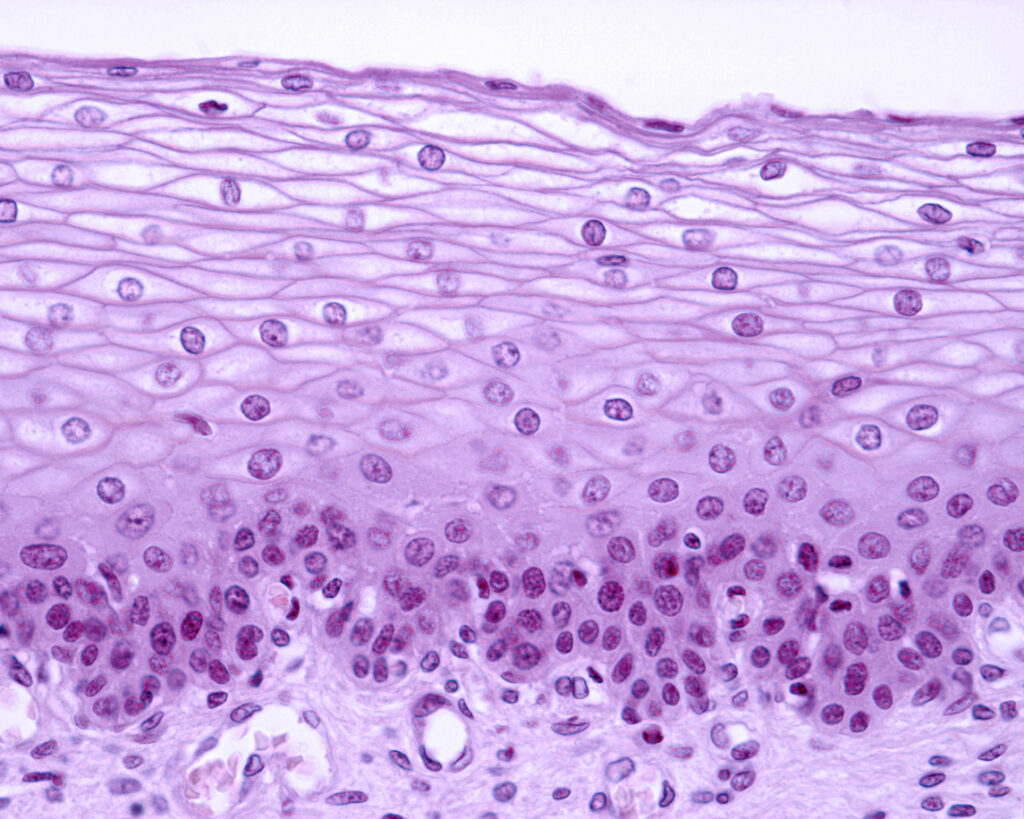

In life science papers, microscopy images are typically the source of detected image issues, and duplications commonly arise during experimentation. While capturing specimen images, researchers move the microscope across the slide to document each section. The microscope does not alert the user to any overlap between captured images, and if the researcher frequently changes the magnification, they may inadvertently capture the same sample area more than once. Because the details in these figures are so intricate, such overlaps are difficult to detect by eye.
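To see why such overlaps are hard for a person to spot but straightforward for software to search for, consider a toy exact-match check between two microscope tiles. This is a minimal sketch, assuming tiny grayscale images stored as lists of pixel rows and pixel-perfect overlap; `find_overlap` is a hypothetical helper, not Proofig’s method, and real microscopy images would need tolerance for noise, magnification changes, and compression.

```python
from typing import List, Optional, Tuple

# Toy representation (an assumption): a grayscale image as a list of pixel rows.
Image = List[List[int]]


def find_overlap(a: Image, b: Image, min_overlap: int = 2) -> Optional[Tuple[int, int]]:
    """Hypothetical helper: return the offset (dy, dx) at which tile b exactly
    matches tile a, i.e. b[y][x] == a[y + dy][x + dx] for every pixel the two
    tiles share. Among matching offsets, the one with the largest shared area
    wins. Returns None if no offset sharing at least min_overlap rows and
    columns matches."""
    ha, wa = len(a), len(a[0])
    hb, wb = len(b), len(b[0])
    best, best_area = None, 0
    for dy in range(-(hb - min_overlap), ha - min_overlap + 1):
        for dx in range(-(wb - min_overlap), wa - min_overlap + 1):
            # Rows and columns of b that land inside a at this offset.
            y0, y1 = max(0, -dy), min(hb, ha - dy)
            x0, x1 = max(0, -dx), min(wb, wa - dx)
            if y1 - y0 < min_overlap or x1 - x0 < min_overlap:
                continue
            area = (y1 - y0) * (x1 - x0)
            if area <= best_area:
                continue  # cannot beat the match we already have
            if all(b[y][x] == a[y + dy][x + dx]
                   for y in range(y0, y1) for x in range(x0, x1)):
                best, best_area = (dy, dx), area
    return best


# A 6 x 8 "slide" with distinct pixel values, photographed as two 5 x 5 tiles
# whose fields of view overlap.
slide = [[r * 8 + c for c in range(8)] for r in range(6)]
tile_1 = [row[0:5] for row in slide[0:5]]
tile_2 = [row[3:8] for row in slide[1:6]]
print(find_overlap(tile_1, tile_2))  # (1, 3): tile_2 starts 1 row down, 3 columns right
```

The search is exhaustive over every offset, which is fine for toy tiles; the point is only that an overlap invisible at a glance becomes a concrete, checkable match.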

Such errors should not be confused with deliberate attempts to mislead, but they can still lead to accusations about the validity of the research. It serves everyone involved to head off these human errors before publication.

The problem with papermills

While honest mistakes account for some of the image integrity issues in the research literature, instances of fraud are also on the rise, particularly with the emergence of papermills. These services produce fraudulent manuscripts resembling academic papers; because such manuscripts are often difficult to identify, many of them are published.

A BMJ study found that, in 2021, papermill retractions accounted for around 21.8 percent of total retractions. As generative artificial intelligence (AI) models become more sophisticated by learning from other submissions, we may see papermills produce content that appears more and more authentic.

Just as AI can create seemingly authentic images, the technology could also be part of the solution to detecting fraudulent content. Some researchers are already using machine learning to develop algorithms that could detect papermill text, and AI image integrity software is already being used to detect suspected image manipulation, helping to combat papermill content.

Improving detection

Researchers clearly have a vested interest in ensuring there are no mistakes in their own work, but publishers also have an important role to play in maintaining image integrity by removing mistakes and detecting fraud before publication. Investigations and potential retractions can be damaging to a publishing house, both financially and reputationally. Wiley reported that problems at an open access publisher it had acquired cost the company around $18 million in revenue in a single financial quarter, following thousands of retractions related to papermills.

In this instance, Wiley eventually discontinued the brand behind the acquired journals (Hindawi) to limit reputational damage. Examples like this show the potential cost of publishing mistakes. Addressing issues before publication can reduce the risk of lost revenue while maintaining audience trust, which is one reason a growing number of reputable publishers have integrated AI image integrity tools into their editorial review process.

Automating image checks

Proactively checking images and catching all these mistakes is not a simple task. The sheer number of submissions that journals receive, alongside the intricacy of the hundreds of figures included in each individual paper, makes the editorial review process a mammoth task.

Take this example: a paper that contains 100 sub-images means checking for more than 10,000 potential duplications and other image issues in each manuscript. The image review process therefore becomes extremely time-consuming for editors, who may find it difficult, or nearly impossible, to review all these images by eye with complete accuracy. There is little chance that every subtle variation, such as a slight overlap or rotation, or every kind of manipulation will be found. But tools now exist that can support editors during review by streamlining image checks, much as grammar-checking software helps assess written text.
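The workload grows quadratically with the number of sub-images, because every panel has to be checked against every other panel. A short calculation makes this concrete. It is a sketch under simple assumptions (one check per unique pair of sub-images, optionally repeated for each orientation tested); `comparisons` is a hypothetical helper, and the exact total depends on what one counts as a comparison.

```python
from math import comb


def comparisons(n_subimages: int, orientations: int = 1) -> int:
    """Number of pairwise checks needed to compare every sub-image with every
    other sub-image once, repeated for each orientation tested (for example,
    8 for the four 90-degree rotations, each with and without a mirror flip)."""
    return comb(n_subimages, 2) * orientations


print(comparisons(100))     # 4950 unique pairs
print(comparisons(100, 8))  # 39600 once rotations and mirror images are included
print(comparisons(200))     # 19900: doubling the sub-images roughly quadruples the work
```

Counting unique pairs alone gives 4,950 checks for 100 sub-images; the total passes 10,000 once rotated and mirrored versions are also compared, and it climbs further if each sub-image is also searched for regions copied within itself.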

Developing image integrity tools

Creating image integrity software tailored to life sciences research has historically been a challenge because of the intricate and varied nature of scientific images. Image integrity issues range from subtle alterations to complex modifications, so analysis requires very sophisticated software, which did not exist until recently. Advances in AI changed this, but developing solutions still requires field-specific knowledge. Software that meets the needs of the life sciences must combine AI and software expertise with a deep understanding of the life sciences, their principles, and editorial guidelines, so it should be developed jointly by researchers and software engineers.

Developing sophisticated software for this specific application requires extensive research into the intricacies of computer vision and potential algorithms and how they could be applied to life sciences. Projects like this also require significant investment, not only financially, but also in attracting experts in software, life sciences, and image integrity to develop a system capable of detecting even the most subtle of image manipulations.
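One example of the computer-vision groundwork involved is copy-move detection, which looks for a region that has been copied and pasted elsewhere in the same image, as when a western blot band is duplicated. The sketch here shows the classic block-matching idea in its simplest form. It assumes exact pixel copies and uses a hypothetical `find_cloned_blocks` helper; production tools must also cope with noise, compression, resizing, and contrast changes, which is where much of the research effort goes.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# Toy representation (an assumption): a grayscale image as a list of pixel rows.
Image = List[List[int]]
Corner = Tuple[int, int]


def find_cloned_blocks(img: Image, block: int = 2) -> List[Tuple[Corner, Corner]]:
    """Hypothetical helper: exact-match copy-move detection. Every block x block
    patch is keyed by its pixel values; patches sharing a key at two
    non-overlapping positions are reported as a suspected clone."""
    positions: Dict[Tuple[int, ...], List[Corner]] = defaultdict(list)
    h, w = len(img), len(img[0])
    for y in range(h - block + 1):
        for x in range(w - block + 1):
            key = tuple(img[y + dy][x + dx] for dy in range(block) for dx in range(block))
            if len(set(key)) == 1:
                continue  # flat patches (e.g. empty background) repeat legitimately
            positions[key].append((y, x))

    suspects = []
    for corners in positions.values():
        for i in range(len(corners)):
            for j in range(i + 1, len(corners)):
                (y1, x1), (y2, x2) = corners[i], corners[j]
                # Skip pairs of patches that overlap each other (repetitive texture).
                if abs(y1 - y2) >= block or abs(x1 - x2) >= block:
                    suspects.append((corners[i], corners[j]))
    return suspects


# A "band" [[5, 7], [8, 9]] at the top left has been pasted again at the bottom right.
blot = [
    [5, 7, 0, 0, 0, 0],
    [8, 9, 0, 0, 0, 0],
    [0, 0, 0, 0, 5, 7],
    [0, 0, 0, 0, 8, 9],
]
print(find_cloned_blocks(blot))  # [((0, 0), (2, 4))]
```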

To ensure that image integrity software can continue identifying issues in the future, the industry must regularly adapt and improve these tools so that they keep pace with technological advances, scale to the needs of different institutions, and remain secure.

Using image integrity software

Specialist software can support the review process. Consider the previous example: once a manuscript is uploaded to the platform, AI software can detect and flag potential issues, ranging from types of duplication such as overlap and rotation to types of manipulation such as altered western blot bands. The software only flags what it perceives as potential issues. The editor can then review the text to see whether permissible duplications are correctly labeled and therefore allowable, whether the paper should be rejected, or whether the image issue requires further investigation. The tool cannot make final judgements or replace human intuition; it exists to streamline the process by helping editors identify image issues immediately.
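The flag-then-review workflow described above can be illustrated with a toy duplicate check that also catches rotated or mirrored copies. This is a minimal sketch, assuming small grayscale grids and a mean-absolute-difference tolerance; `flag_duplicate` and the other helpers are hypothetical and do not describe how Proofig or any other product works internally.

```python
from typing import Iterator, List, Optional, Tuple

# Toy representation (an assumption): a grayscale image as a list of pixel rows.
Image = List[List[int]]


def rotate90(img: Image) -> Image:
    """Rotate a pixel grid 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]


def mirror(img: Image) -> Image:
    """Flip a pixel grid left to right."""
    return [row[::-1] for row in img]


def orientations(img: Image) -> Iterator[Tuple[str, Image]]:
    """Yield the 8 rotations and reflections of a grid with a readable label."""
    current = img
    for quarter_turns in range(4):
        yield f"rotated {90 * quarter_turns}", current
        yield f"rotated {90 * quarter_turns} + mirrored", mirror(current)
        current = rotate90(current)


def mean_abs_diff(a: Image, b: Image) -> float:
    """Average absolute pixel difference between two same-size grids."""
    total = sum(abs(p - q) for row_a, row_b in zip(a, b) for p, q in zip(row_a, row_b))
    return total / (len(a) * len(a[0]))


def flag_duplicate(a: Image, b: Image, tolerance: float = 0.0) -> Optional[str]:
    """Hypothetical helper: return the orientation of b that matches a within
    the tolerance, or None. A returned label is a flag for human review only."""
    for label, candidate in orientations(b):
        if len(candidate) != len(a) or len(candidate[0]) != len(a[0]):
            continue  # this orientation has a different shape, so it cannot match
        if mean_abs_diff(a, candidate) <= tolerance:
            return label
    return None


panel = [[1, 2, 3], [4, 5, 6]]
print(flag_duplicate(panel, rotate90(panel)))              # rotated 270
print(flag_duplicate(panel, [[1, 2, 3], [4, 5, 7]], 0.2))  # rotated 0
print(flag_duplicate(panel, [[9, 9, 9], [9, 9, 9]]))       # None
```

The function returns an orientation label for the editor to inspect, never a verdict: a flagged pair may turn out to be a correctly labeled, permissible reuse.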

Reports of retractions may reduce trust in science. The more than 10,000 retractions Nature reported for 2023, for example, are more than double the number seen in 2022. While many of the retracted papers were found to have been generated by papermills, the damage is felt by everyone. As microbiologist and scientific integrity consultant Elisabeth Bik has noted, this increase in fraudulent content “has resulted in a dilution of the scientific literature with papers that are not adding to the scientific knowledge, and that might even contain falsified or fabricated results. Not only does that make it harder for everybody to find good-quality papers, but it might also result in a lot of wasted research effort and grant money if researchers try to replicate and build on those fake papers.”

Principal investigators and their institutions must prioritize investment in advanced technology for real-time, proactive checks before publication. This approach not only helps correct unintentional image duplications but also helps researchers learn to avoid such issues in the future. It is also crucial for taking decisive action in the rare instances where genuinely manipulated data are discovered in their research.

Equally, publishers should consider automating their review processes to safeguard image integrity before publication. Investing in these tools reduces the risk of reputational damage and significant financial harm, while also ensuring that the public has access to trustworthy scientific literature.

As the landscape of image integrity issues continues to change, with papermills developing new techniques to create more convincing content, proactive image checks will only become more important. We must continuously invest in research and development to counteract future image manipulation methods. The future of trust in scientific research will require collaboration between image integrity experts, research scientists, and reputable institutions to safeguard the authenticity of images in academic publications.

To preserve the integrity and quality of science, greater awareness and proactive action are indispensable. This requires adopting the most advanced tools available for identifying errors and attempted manipulation in both images and text. Such tools should be used not only by individual researchers but also supported at an institutional level, with universities actively providing these essential resources to all their scientists. In parallel, publishing houses should use these tools to prevent the publication of papers with integrity issues. By fostering an environment that encourages the vigilant use of these tools, we can better safeguard the scientific endeavour against inaccuracies and manipulations, ensuring its continued advancement and the trust of the wider public.