As a managing editor, one of the most common questions I get is about the journal’s acceptance rate. I’m typically puzzled by this because acceptance rate tells you very little about the likely fate of any one submission.

If all the submissions for a month went into a hat and a blindfolded editor pulled out a proportion of them to publish, the acceptance rate would indeed be a good indicator of an individual paper’s chances of publication. In reality, if a paper is below the quality threshold for the journal, it’s almost certain to be rejected; and if it’s above that threshold, then it’s almost certain to be accepted.

The interest in acceptance rate seems to be linked to the attitude that peer review is a coin toss, and hence the overall acceptance rate can predict the fate of each paper. Where does this attitude come from? Does it have any basis in reality?

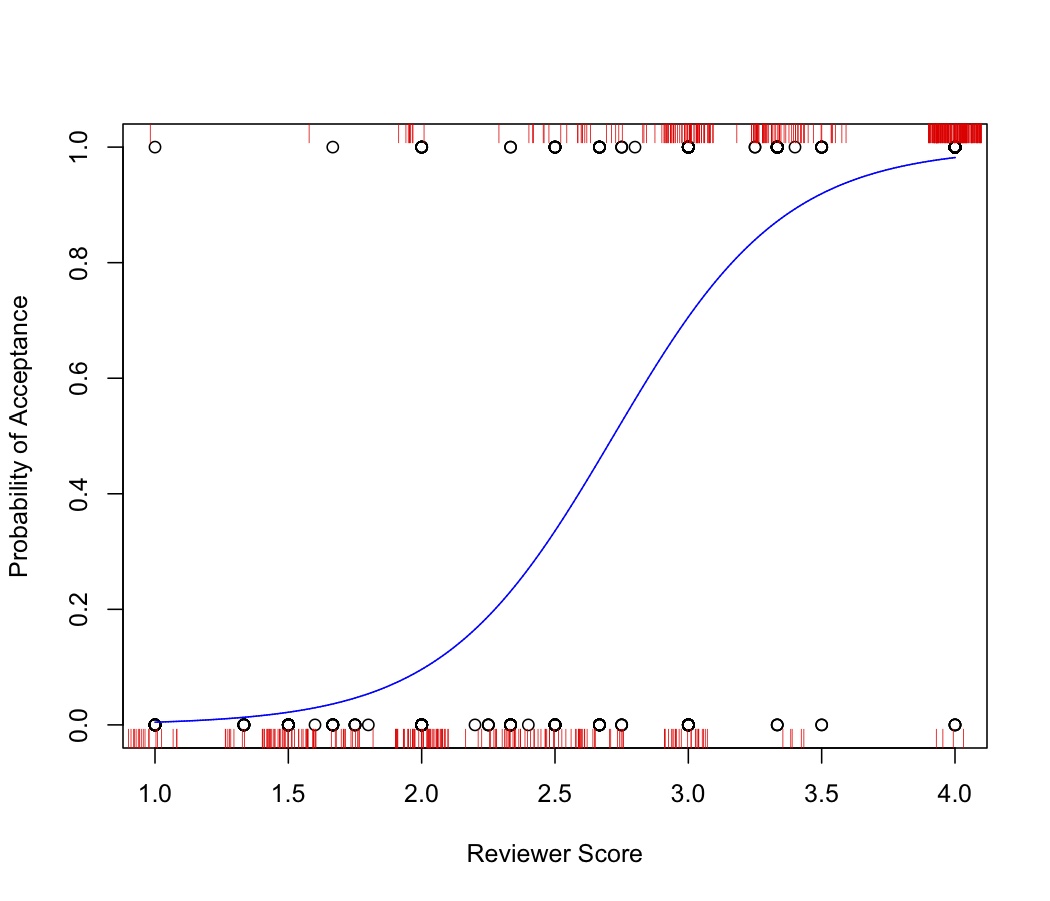

Part of the answer comes from the binary nature of editorial decisions themselves — papers are ultimately either accepted for publication or rejected — whereas quality is a continuous variable. Imposing a binary outcome on a continuous variable leads to a situation nicely described by the logistic model. For the sake of argument, I’ve put together this logistic curve for Molecular Ecology papers decided in 2006. In that year, we accepted 332 papers and rejected 369.

The x axis is “quality,” as measured by the average reviewer score a paper received for its final decision. A “reject” recommendation is assigned a score of 1, “reject, encourage resubmission” is assigned 2, and “accept, minor revisions” gets a 4. These scores are then averaged across reviewers. (Molecular Ecology doesn’t offer reviewers the option of “accept, major revisions,” but this does exist as an editorial decision, which is why the score skips from 2 to 4.) The circles are the actual data points, but since many are superimposed, the approximate density can be seen in the ‘rug’ of vertical lines. The curve shows how the probability of acceptance changes with average reviewer score.
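The scoring scheme just described can be sketched in a few lines. This is a minimal illustration, assuming the three reviewer options map to 1, 2 and 4 as stated; the dictionary `RECOMMENDATION_SCORES` and the function `average_score` are hypothetical names, not part of any real editorial system.

```python
from statistics import mean

# Hypothetical mapping of the reviewer recommendations described above to scores.
# There is no reviewer option worth 3: "accept, major revisions" exists only as
# an editorial decision, so the reviewer scale jumps from 2 to 4.
RECOMMENDATION_SCORES = {
    "reject": 1,
    "reject, encourage resubmission": 2,
    "accept, minor revisions": 4,
}


def average_score(recommendations: list[str]) -> float:
    """Average the numeric scores of one paper's reviewer recommendations."""
    if not recommendations:
        raise ValueError("a paper needs at least one review")
    return mean(RECOMMENDATION_SCORES[r] for r in recommendations)


# Two reviewers who disagree sharply land the paper in the middle of the scale.
print(average_score(["reject", "accept, minor revisions"]))  # 2.5
```

Note that averaging treats the gap between 2 and 4 as twice the gap between 1 and 2, which is itself a modelling choice.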

If peer review were a pure lottery, the curve would be flat, as all papers would have an equal chance of acceptance. However, there’s clearly a steep transition between average reviewer scores of 2.25 and 3.25, across which the probability of acceptance rises from roughly 0.2 to 0.8. Only 25% of papers fall in this range; the remaining 75% are a clear “accept” or “reject.” The average acceptance rate for a journal is thus a hopeless predictor of the fate of a single paper: it all depends on how good that paper is relative to the journal’s standards.
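The curve’s shape can be roughly reconstructed from the two points just quoted. Assuming a standard two-parameter logistic, p(x) = 1 / (1 + exp(-k(x - m))), the points (2.25, 0.2) and (3.25, 0.8) fix the slope at k = ln 16 ≈ 2.77 and the midpoint at m = 2.75. This is a back-of-the-envelope sketch, not the model actually fitted to the 2006 data, and the helper names are illustrative.

```python
import math


def logit(p: float) -> float:
    """Log-odds of a probability p in (0, 1)."""
    return math.log(p / (1 - p))


def logistic_through(x1: float, p1: float, x2: float, p2: float) -> tuple[float, float]:
    """Return (midpoint, slope) of p(x) = 1 / (1 + exp(-slope * (x - midpoint)))
    for the logistic curve passing through (x1, p1) and (x2, p2)."""
    slope = (logit(p2) - logit(p1)) / (x2 - x1)
    midpoint = x1 - logit(p1) / slope
    return midpoint, slope


def acceptance_probability(score: float, midpoint: float, slope: float) -> float:
    """Probability of acceptance at a given average reviewer score."""
    return 1 / (1 + math.exp(-slope * (score - midpoint)))


# Anchor points quoted in the post: p = 0.2 at a score of 2.25, p = 0.8 at 3.25.
mid, k = logistic_through(2.25, 0.2, 3.25, 0.8)
print(f"midpoint = {mid:.2f}, slope = {k:.2f}")  # midpoint = 2.75, slope = 2.77
for score in (1, 2, 3, 4):
    print(score, round(acceptance_probability(score, mid, k), 3))
```

Under this reconstruction a paper averaging 2 has roughly an 11% chance of acceptance and one averaging 4 roughly 97%, even though the journal-wide rate that year was about 47% (332 of 701 decisions).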

A second part of the perception that peer review is a lottery stems from the disconnect between the needs of authors and the needs of journals. Authors need to publish their work in the highest impact journal possible, which, taken to the extreme, means that their paper should be the worst published by that journal that year. If it’s not the worst, then it’s possible it might have been accepted somewhere else even more prestigious. By contrast, journals need to maintain their current status by accepting only average or better-than-average papers, or otherwise their overall quality will decline. The end result is that authors will try to pick journals where their paper falls into the transition part of the figure — too far to the left, and they will almost certainly be rejected; too far to the right, and their paper will be easily accepted and should have been sent somewhere better. Authors following this strategy thus aim for a more lottery-like peer review process, a behavior apparently encouraged by directly rewarding authors for each publication.

Authors are generally careful about where they first submit. If they weren’t, the top journals would be swamped by millions of submissions each year. Journals also discourage the lottery approach by offering better service to authors with better quality papers, such as highlighting their research with “news and views” pieces or fast-track publication. These enticements help journals attract papers that are well to the right on the quality-acceptance figure. One could also view the multitude of submission and formatting requirements as a deterrent to submitting the same paper to a succession of different journals, although this is clearly less effective in the digital age. Authors mostly pay with their time.

At the end of the day, does it really matter that peer review is viewed by some to be a lottery? From a global standpoint the answer is unequivocally, “Yes.”

Authors who view the peer-review system as a lottery put unnecessary burden on the reviewer community as the same papers are reviewed over and over as they cascade down the hierarchy of journals.

The attitude that peer review is a lottery may also discourage authors from using reviewer comments to improve their paper before they resubmit elsewhere; after all, if acceptance is unrelated to quality, what’s the point? This behavior also breeds weary cynicism in the community: spending many hours reviewing a paper for one journal and then being asked to review an identical version for another provokes a mix of rage and despair.

In summary, the peer review process can be a lottery if you want it to be, but the decision on most papers will depend strongly on quality (as adjudged by the reviewers). The real loser of the “lottery attitude” is the reviewer community, but what can be done to fix this problem is still up for debate.

Discussion

52 Thoughts on "Is Peer Review a Coin Toss?"

You’ve answered your own question about papers to the right end of the curve with the phrase, “Authors mostly pay with their time.” Speed of publication has become a key consideration in choosing a journal for one’s paper. Everyone has a grant renewal deadline or a tenure committee meeting approaching, or is about to hit the job market, or they just want to beat a competitor and get public credit for their ideas.

Journals get papers that are far to the right because the author has deliberately selected a journal where they know the paper will be accepted, and they won’t have to go through the submit-review-reject-resubmit elsewhere cycle multiple times.

This is why a journal like PLoS ONE remains attractive to so many authors even though its time from submission to publication has lengthened greatly as it has expanded. With a 70% acceptance rate, the author knows the paper is almost guaranteed to be accepted, and no time will be wasted going through the cycle multiple times with multiple journals.

Asking about the acceptance rate doesn’t mean that you view the review process as a lottery. It’s taken as a proxy of the quality threshold of the journal. If it is low and you don’t think your paper is so good then you won’t submit. I guess though that the reason why low acceptance rates are proxies for quality is because a lot of authors do treat it as a lottery and send poor quality papers to top journals on the chance that they’ll be accepted. Or do authors just not have a clue how good their papers are?

It is not always the case. Sometimes the work is too interdisciplinary, and the reviewers themselves reject the paper due to their lack of knowledge or understanding.

In an ideal world, authors know the quality of their own manuscripts and submit them to the most appropriate outlets, which ultimately accept them. Incentives to do otherwise, such as paying authors based on where their manuscripts are published, create a moral hazard that makes the system worse off for everyone. They also put more of the burden on the top of the review-reject-and-resubmit cascade.

I can think of various changes that would help reduce the lottery mentality:

1. Stop rewarding authors with cash bonuses when they publish in high-impact journals

2. Somewhat more difficult: institutions reducing their emphasis on impact metrics

3. Charge submission fees

4. If #3 is impossible, make authors of inappropriate submissions wait longer until they get a rejection decision.

To me, #4 seems the easiest to implement. Top-tier journals are very good about providing authors with fast decisions, and when manuscripts do not go out for peer review, that decision is often made within 48 hours. Instead, imagine that Science and Nature held your manuscript for 4-12 weeks. The gamble the unwitting author makes would then be balanced by a real risk of losing valuable time.

If time is the real currency for scientists, they need to put more of it on the table if they want to play the lottery.

#4 would immediately be undermined by other journals that didn’t have that policy. After all, who would submit to a journal that promised to hold your paper for six weeks even if it had decided to reject it two days after it came in?

Nice post, Tim. I especially like your point about how authors make the process lottery-like by aiming for journals at which their chances for success are uncertain.

One quibble: in my experience, the perception of peer review as a lottery arises in large part because authors don’t think the average of two referee scores is a precise estimate of the quality of a paper. For instance, I once had a paper rejected without external review by a leading journal in my field, because in the view of the EiC it was obviously not high enough quality for the journal to publish. I resubmitted the ms to another, equally-selective and equally-leading journal, and it got the best reviews I’ve ever received.

Now, it could well be that referees are less able to precisely estimate quality (or, equivalently, less likely to agree on quality) for papers near the quality threshold for the journal. That would really increase the lottery-like nature of the process for papers near the journal’s quality threshold.

You could try to look at this with your data, looking at the variance of the two referee scores as a function of their average. But it’d be a little tricky, because you’d have to allow for the fact that it’s mathematically impossible for papers with very low or very high average scores to have high variance.
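One way to allow for this constraint, offered here as an assumption rather than anything used in the post, is the Bhatia-Davis inequality: values confined to [a, b] with mean μ have variance at most (μ - a)(b - μ). Dividing the observed variance by that bound puts papers with extreme and middling averages on a comparable footing. The sketch enumerates every possible pair of referee scores on the 1/2/4 scale; the names are illustrative.

```python
from itertools import combinations_with_replacement
from statistics import pvariance

SCORES = (1, 2, 4)  # reviewer scale from the post; 3 is not a reviewer option
LOW, HIGH = min(SCORES), max(SCORES)


def max_variance(mean_score: float) -> float:
    """Bhatia-Davis bound: the largest possible variance of values in
    [LOW, HIGH] that have this mean."""
    return (mean_score - LOW) * (HIGH - mean_score)


# Every possible pair of referee scores, its mean, its population variance,
# and that variance as a fraction of the maximum allowed at that mean.
for pair in combinations_with_replacement(SCORES, 2):
    m = sum(pair) / 2
    v = pvariance(pair)
    bound = max_variance(m)
    # At the ends of the scale the bound is 0, so the variance must be 0 too.
    scaled = v / bound if bound > 0 else 0.0
    print(pair, m, v, round(scaled, 2))
```

A unanimous "reject" (mean 1) or "accept" (mean 4) can only have zero variance, while a 1-and-4 split reaches the maximum possible variance for its mean.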

Hi Jeremy,

Did you ever see the paper by Neff & Olden in BioScience? They show that the ‘randomness’ of the review outcome declines significantly between two and three reviewers, especially if there’s an editorial pre-selection step. However, this ignores the fact that editors actively seek out reviewers with complementary expertise who are inherently more likely to give conflicting recommendations. I’ve tried to look at whether reviews are more congruent or more opposed than by chance, but haven’t gotten very far yet.

Hadn’t seen the Neff & Olden piece, thanks, will be interested to read it. I’m not surprised that they find that using three referees rather than two significantly decreases ‘randomness’. Going from two to three referees increases your ‘sample size’ by 50%. I’d imagine that going from three to four referees would make less of a difference, and going from, say, fifty to fifty-one would make hardly any difference at all.
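The diminishing return can be made concrete under a simple assumption: if each referee’s score is the paper’s underlying merit plus independent noise of equal spread, the standard error of the averaged score shrinks as 1/√n. That independence assumption is optimistic, since editors often pick referees with deliberately different perspectives; the function names below are illustrative.

```python
import math


def standard_error(n_referees: int, noise_sd: float = 1.0) -> float:
    """Standard error of the mean of n referee scores, assuming each score is
    the paper's underlying merit plus independent noise with sd = noise_sd."""
    return noise_sd / math.sqrt(n_referees)


def relative_gain(before: int, after: int) -> float:
    """Fractional drop in standard error when moving from `before` to `after` referees."""
    return 1 - standard_error(after) / standard_error(before)


for before, after in [(2, 3), (3, 4), (50, 51)]:
    print(f"{before} -> {after} referees: standard error falls by {relative_gain(before, after):.1%}")
```

Under these assumptions the standard error falls by about 18% going from two to three referees, about 13% from three to four, and about 1% from fifty to fifty-one.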

Will be interested to see what they have to say about editorial pre-selection. The most random decisions to which my own mss have been subjected have been when a single handling editor decided to reject them without external review. In every case when this has happened to me, I’ve resubmitted to another, similar journal and gotten positive (in one case extraordinarily positive) reviews. But my own experience is of course a very small sample size.

As a handling editor, I do often seek out reviewers with complementary expertise, anticipating that their recommendations to accept or reject may well conflict. But I care first and foremost about their comments on the strengths and weaknesses of the ms within their area of expertise. These comments are valuable to me in helping me come to a decision. So I’m not too bothered when referees’ accept/reject recommendations conflict. Not that I entirely ignore the accept/reject recommendations of the referees, but I don’t just ‘tally up the votes’ and render a decision on that basis.

What is meant by quality – validity of the science? robustness of the results? interest to the general research community? interest to specialists? smoothness of prose? ability to follow journal formatting requirements? novelty of the results? likelihood of future citation?

Speaking as an author, my interest in the acceptance rate is to judge how much a journal weighs perceived potential impact or appeal of the manuscript to the broader scientific community or editorial taste when making a decision. For example, a well-written paper on a new species of dinosaur is important, but if it’s just another species of a well-known group, the manuscript will be of much narrower interest than a paper describing something really, really bizarre and from a poorly known group. The paper on the well-known group would be a shoo-in for a specialist journal, but would have little chance at one of the places with a high impact factor. On the other hand, a manuscript describing a novel species of a poorly known group from an unusual geographic area might be a good candidate for one of the big journals, but... there is certainly a bit of a “lottery” feel in this case. It’s usually not so much a case of peer review being a coin toss as of the mood and interests of the editor.

Of course, I’ve never actually asked a journal editor what the acceptance rate is – it strikes me as being in the category of annoying questions. Instead, I usually make a judgement from talking to colleagues.

Hi Andy,

If authors sent their submissions to a random journal, there would be a very strong relationship between acceptance rate and impact factor, and the top journals would have tiny acceptance rates. The other extreme, where authors selected the best journal that was still likely to accept their work, would mean no relationship between the two. The fact that there’s a weak relationship implies either that there’s a mix of strategies out there or that authors are willing to submit to journals where there’s a chance they’ll be rejected. In any case, acceptance rate is more a product of submission behaviour than any innate property of the journal.
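The two extremes can be illustrated with a toy simulation. Everything in it is a hypothetical assumption: quality is uniform on [0, 1), each journal accepts exactly those papers at or above a fixed threshold, and reviewing is noise-free. Under random submission the acceptance rate falls steadily as the threshold rises; under best-fit submission every journal accepts everything it receives, so its acceptance rate says nothing about its selectivity.

```python
import random

# Hypothetical journals, identified by their quality threshold (higher = more selective).
THRESHOLDS = [0.2, 0.4, 0.6, 0.8]


def acceptance_rates(strategy: str, n_papers: int = 20_000, seed: int = 1) -> dict[float, float]:
    """Simulate one round of submissions and return each journal's acceptance rate.

    strategy="random": every paper goes to a uniformly random journal.
    strategy="best_fit": every paper goes to the most selective journal whose
    threshold it clears (papers clearing none are assumed to go elsewhere).
    """
    rng = random.Random(seed)
    submitted = {t: 0 for t in THRESHOLDS}
    accepted = {t: 0 for t in THRESHOLDS}
    for _ in range(n_papers):
        quality = rng.random()  # hypothetical quality, uniform on [0, 1)
        if strategy == "random":
            journal = rng.choice(THRESHOLDS)
        elif strategy == "best_fit":
            eligible = [t for t in THRESHOLDS if quality >= t]
            if not eligible:
                continue
            journal = max(eligible)
        else:
            raise ValueError(f"unknown strategy: {strategy!r}")
        submitted[journal] += 1
        if quality >= journal:  # noise-free review: accept iff at or above threshold
            accepted[journal] += 1
    return {t: accepted[t] / submitted[t] for t in THRESHOLDS if submitted[t]}


for strategy in ("random", "best_fit"):
    rates = acceptance_rates(strategy)
    print(strategy, {t: round(r, 2) for t, r in rates.items()})
```

Real submission behaviour sits somewhere between these two strategies, and real reviewing is noisy, which is consistent with the weak relationship described above.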

This is such a nice, clear set of thoughts about a topic that people find surprisingly hard to talk about, perhaps because it’s a bit touchy. I remember an editor telling me that “some papers have a 100% acceptance rate, some have a 0% acceptance rate,” which is a convoluted way of saying the same thing as “if it’s great, it’s in; if it’s lousy, it’s out; if it’s on the borderline, we’ll see.”

Authors do like to shoot for the moon sometimes, and there’s little downside in most cases to submitting to the top journals. Rationalizing a 10% acceptance rate as a 10% chance of success can provide peace of mind during the submission process, but the two are not equivalent.

Authors are interested to know your acceptance rate, therefore we must conclude that they view peer review as a lottery? Perhaps they understand that there is a quality threshold for acceptance, and acceptance rate is a kind of proxy for how high that threshold is set.

My point above is that acceptance rate is a poor proxy for journal quality, as it entirely depends on the relationship between the papers submitted and the journal’s quality threshold. Two journals could both have an acceptance rate of 35% and differ enormously in the quality of the papers they publish.

How do you know that the scores given to papers by reviewers are not a lottery? It’s hardly surprising that you are more likely to accept papers ranked highly by your reviewers. These data don’t tell us anything very useful.

Your comment on authors choosing where to submit is interesting in view of the increasingly common practice in the UK of university administrations requiring that their researchers publish only in journals on an ‘approved’ list. I have a colleague who teaches information in a management school and, in effect, there are only two or three ‘approved’ journals in which he is expected to publish. Needless to say, these are leading journals in the field and I imagine that they are indeed beginning to be swamped by submissions. This practice is also going on in other parts of Europe and, for example, in Australia. It’s based on an assumption that acceptance by a quality journal will guarantee citations and that those citations will be a measure of the researchers’ ‘quality’ – a rather doubtful assumption, I think you’ll agree 🙂

I agree with Richard Smith. Your data show that there is a reasonable correlation between the score provided by the reviewers and the probability of acceptance (and it would be utterly shocking if this were not the case, of course). Your data do NOT show that there is a good correlation between the objective quality of a submission and its score from reviewers, which is (I suspect) what most critics of peer review see as the key weakness in the process.

In other words, your data are still perfectly consistent with peer review being a totally random process, so long as the randomness occurs at the level of review rather than at the editorial stage.

“objective quality of a submission”

And how would that be assessed, except by… reviewers?

I think what you are arguing is that there is not a good correlation between the “true quality” of a submission (which is unmeasurable) and the “objective quality” (which is the reviewer score).

I hoped this point would come up. First, what I’m attempting to do here is use data to examine the charge that ‘peer review is broken’. The critics of peer review tend to argue from individual instances (“I had a really bad decision on a paper once”), but as these are a highly non-random selection of all decisions they’re not strong evidence for anything.

Second, peer review largely represents a serious attempt by several experts to evaluate a manuscript, and hence it’s definitely useable as a proxy of ‘quality’. I’d be intrigued to hear a convincing argument otherwise. There are other measures (like citation rate) that are also useable, but to keep this post short and sweet I didn’t bring in those data.

Richard, I guess I ‘know’ that the scores given to papers by reviewers aren’t random with respect to quality because I see ~6000 reviews per year for ~2000 papers, and the recommendations match the comments quite well.

“Second, peer review largely represents a serious attempt by several experts to evaluate a manuscript, and hence it’s definitely useable as a proxy of ‘quality’. I’d be intrigued to hear a convincing argument otherwise.”

In the Twitter discussion about this post, Joe Pickrell pointed to this article:

http://www.plosone.org/article/info%3Adoi%2F10.1371%2Fjournal.pone.0010072

According to the pretty well-powered analysis described there, concordance between reviewer recommendations is barely better than chance. In other words, this looks like a near-random process.

In your analysis above you average the scores from the reviewers for each submission. Have you taken a look at how well the scores correlate with each other?

I’d seen this PLoS One paper before but only just gave it a good read. I very much admire what they’ve tried to do, but I don’t think they’ve made the most of their data, and hence I think the level of concordance between reviewers is underestimated. The authors treat ‘reject’ as one category, and everything from ‘revise and resubmit’ to ‘accept’ as the second. This ignores the fact that the recommendations are ordinal: ‘reject’ and ‘revise and resubmit’ reflect a more similar reviewer opinion than ‘reject’ and ‘accept’. I’d like to see these data re-analysed taking this into account.
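One standard way to respect the ordering is a linearly weighted Cohen’s kappa, in which a one-step disagreement (‘reject’ versus ‘major revision’) is penalized less than a disagreement across the whole scale. The sketch compares it with ordinary kappa on the binary reject/not-reject collapse. The 20 recommendation pairs and the four-category coding are invented for illustration; they are not taken from the PLoS One data or from Molecular Ecology.

```python
from collections import Counter


def cohen_kappa(pairs: list[tuple[int, int]], n_categories: int, linear_weights: bool = True) -> float:
    """Cohen's kappa for two raters on categories 0..n_categories-1.

    With linear_weights=True, a disagreement between categories i and j costs
    |i - j| / (n_categories - 1); with False, every disagreement costs 1
    (ordinary unweighted kappa)."""
    n = len(pairs)
    observed = Counter(pairs)
    rater_a = Counter(a for a, _ in pairs)
    rater_b = Counter(b for _, b in pairs)

    def weight(i: int, j: int) -> float:
        if linear_weights:
            return abs(i - j) / (n_categories - 1)
        return 0.0 if i == j else 1.0

    cells = [(i, j) for i in range(n_categories) for j in range(n_categories)]
    obs_disagreement = sum(weight(i, j) * observed[(i, j)] / n for i, j in cells)
    # Disagreement expected by chance, from each rater's marginal frequencies.
    exp_disagreement = sum(weight(i, j) * rater_a[i] * rater_b[j] / n**2 for i, j in cells)
    return 1 - obs_disagreement / exp_disagreement


# Hypothetical recommendation pairs for 20 papers (two reviewers each), coded
# 0 = reject, 1 = major revision, 2 = minor revision, 3 = accept.
PAIRS = ([(0, 0)] * 2 + [(0, 1)] * 4 + [(1, 0)] * 4 + [(1, 2)] * 2
         + [(2, 1)] * 2 + [(2, 3)] * 2 + [(3, 2)] * 2 + [(3, 3)] * 2)

# Binary collapse: 'reject' versus everything else.
binary = [(int(a > 0), int(b > 0)) for a, b in PAIRS]

print("binary kappa:", round(cohen_kappa(binary, 2, linear_weights=False), 3))
print("ordinal (linear-weighted) kappa:", round(cohen_kappa(PAIRS, 4), 3))
```

On these made-up pairs the binary kappa is about 0.05, barely above chance, while the linearly weighted kappa is about 0.34, because every disagreement is only one step apart. Whether real review data behave this way is exactly what a re-analysis would need to establish.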

Hi Tim,

“The authors treat ‘reject’ as one category, and everything from ‘revise and resubmit’ to ‘accept’ as the second. This ignores the fact that the recommendations are ordinal: ‘reject’ and ‘revise and resubmit’ reflect a more similar reviewer opinion than ‘reject’ and ‘accept’. I’d like to see these data re-analysed taking this into account.”

Couldn’t you perform this analysis using your own reviewer data? Your sample size is smaller, but you’d still have reasonable power to assess concordance. I agree that treating the data as ordinal rather than binary is a good idea.

However, despite the limitations of their binary analysis, I think we can all agree that it’s rather worrying that reviewers agree only slightly more often than expected by chance whether or not a manuscript should be rejected.

“the “objective quality” (which is the reviewer score).”

You can’t simply define reviewer scores as objective! That’s precisely what’s under discussion here, and the fact that individual reviewer scores correlate barely better than chance (see the comment citing the PLoS One study) suggests that in general these aren’t objective indicators of truth.

I agree that there is no perfect measurement of the true quality of a manuscript, but citation count is likely to be a far better proxy than reviewer score.

Yes, reviewer’s judgements are most certainly subjective.

My issue is with your implication that there can be some measurable “objective quality of submission” which exists independently of the subjective judgement of a reader (whether a reviewer or another scientist choosing to cite a paper). Without a way of measuring the “true” quality of a submission, it is impossible to assert (as you did) that reviewer scores poorly reflect this unmeasurable quantity.

Since we can’t (and, I would argue, ought not) remove subjectivity from the assessment of an article’s quality, the best we can hope for in a measurable proxy of quality (in a metric) is an objective measure of the subjective assessment(s)… such as the scores of reviewers.

Maybe citation count is a better metric, but it is (a) not relevant to pre-publication quality assessment and (b) also subjective (as citation selections are influenced by journal impact, etc).

Hi Justin,

Ah – note that I never argued that the “real” quality of a paper is actually measurable. I see it as a quality that is real but inaccessible, so we can only assess it using various proxies (as is true, I should point out, for most properties of nature assessed by researchers). The important question is whether anonymous reviewer assessments are reasonable proxies to use to decide whether a paper is worthy of publication.

While true manuscript quality is inaccessible, we can measure whether reviewer scores are a reliable proxy by seeing whether they correlate with one another. As discussed above, this appears not to be the case, at least in the one large study I could find of this. The fact that reviewers agree with each other about the same manuscript barely more than expected by chance suggests that, in general, they are not accurate reflections of some underlying reality.

Citation count is obviously not a useful metric for pre-publication peer review. Indeed, perhaps we don’t have ANY reliable metric for publication quality that can be applied before a manuscript is released into the wild. In that case, we need to consider whether pre-publication peer review should just be scrapped entirely, as I believe is already largely the case (for instance) in physics. In such a world papers would not have to pass through the opaque and at least somewhat arbitrary peer review process before they were published, and their quality could instead be assessed post hoc through citation metrics.

In any case, I’ve already moved well beyond my expertise in this discussion – my main purpose here was to point out that Tim’s graph does not in fact address the question posed in the title of the post, and I think I’ve done that.

I’m puzzled that there seem to be such discrepancies among reviewer reactions to journal articles in the sciences whereas, in the peer review of monographs in the humanities and social sciences in my more than forty years of experience as an acquiring editor, disagreements about quality occur probably less than 15% of the time. Does anyone have an explanation for why there would be this difference?

Hi Sandy,

I find that extremely surprising. Does anyone know if this kind of deep, quantitative analysis of reviewer scores has been performed in non-scientific fields, or indeed across multiple fields in science? It would be interesting to see whether (for instance) reviewer disagreement was sharpest in more fast-moving or competitive fields, where the review process may take on a more political flavour…

I don’t know about studies of peer review in other disciplines, though I am now reading Kathleen Fitzpatrick’s new book, “Planned Obsolescence: Publishing, Technology, and the Future of the Academy” (which I am reviewing for the Journal of Scholarly Publishing), and it has a strong focus on peer review, offering ideas about how it might be improved and modified for the digital environment. You talk about how “political” bias might enter into the reviewing of scientific articles, Daniel, but imagine how much this can potentially affect the reviewing of inherently controversial fields like political theory. As an editor, one tries to avoid this kind of bias coming into play as much as possible. E.g., you don’t seek a Marxist to review a book written by a libertarian, or ask an Anglo-American analytic philosopher to review a book by a Continental philosopher (unless he is an unusual person like Richard Rorty was). The trick is to get a fair and critical review while eliminating as many potential sources of bias as possible, but also not just engaging readers who will automatically endorse anything that shares their point of view. Over time one comes to learn what set of reviewers best fit these criteria.

You’re right, I can do this analysis with Mol Ecol data, and probably should. One reason for not expecting a very high correlation between reviews is that editors typically seek out complementary expertise on the ms, whether that be a mix of junior and senior scientists (the former comment more on the details and the latter on the overall importance), or researchers from different areas. I don’t think trying to eliminate this diversity of opinion in favour of ensuring conformity would lead to better peer review.

Second, even in the PLoS One paper they have the sentence “just under half received reviews that were in complete agreement not to reject (i.e., all reviewers recommended accept/revise)”, which doesn’t fit well with the doom and gloom message elsewhere in the paper. However, this agreement does include reviewers recommending ‘accept’ and ‘revise and resubmit’, which isn’t really agreement in my book.

So rather than knowing what the acceptance rate for a journal is, what you really want is a measure of the extent to which the reviewers score predicts the acceptance.

An ROC curve for every journal, with the average reviewer score for a paper as the continuous variable predicting the paper’s acceptance.
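A sketch of that suggestion, assuming one has the average reviewer scores for a journal’s accepted and rejected papers: the area under the ROC curve equals the probability that a randomly chosen accepted paper scored higher than a randomly chosen rejected one, with ties counted as half. An AUC near 1 would mean reviewer scores almost determine the outcome; 0.5 would mean a coin toss. The function name and the scores in the example are hypothetical.

```python
def auc(accepted_scores: list[float], rejected_scores: list[float]) -> float:
    """Area under the ROC curve, computed as the probability that a randomly
    chosen accepted paper has a higher average reviewer score than a randomly
    chosen rejected one (ties count as half)."""
    if not accepted_scores or not rejected_scores:
        raise ValueError("need at least one accepted and one rejected paper")
    wins = 0.0
    for a in accepted_scores:
        for r in rejected_scores:
            if a > r:
                wins += 1
            elif a == r:
                wins += 0.5
    return wins / (len(accepted_scores) * len(rejected_scores))


# Hypothetical average reviewer scores for one journal's decisions.
accepted = [4.0, 3.5, 3.0, 3.0, 2.5]
rejected = [1.0, 1.5, 2.0, 2.5, 3.0]
print(round(auc(accepted, rejected), 3))
```

Here the pairwise comparison gives 0.9. For a journal with thousands of decisions, a rank-based computation would be faster than this double loop, but the result is the same.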

I wonder how much acceptances differ from journal to journal because of considerations other than just the ratings by the external reviewers? E.g., how do considerations of the editor’s own taste and preferences enter into the final decision, or considerations like balance among different topics? In the Latin American Research Review (the official journal of the Latin American Studies Association), some consideration is provided to balance contributions among different disciplines–history, political science, sociology, literature, etc.–so that quality alone does not determine whether any given article gets accepted. This is more like what happens in college admissions, where elite colleges have an abundance of well-qualified applicants and aim for a “well-rounded” class based on considerations other than just SAT scores and grade averages. Is there anything wrong with using peer review in this way, viz., as a way of establishing a cutoff threshold, beyond which other considerations come into play in making the final selection of what gets published? Certainly, that is the way peer review functions in scholarly book publishing.

Tim – You say “A reject recommendation is assigned a score of 1, “reject, encourage resubmission” is assigned 2, etc”. Are reviewers aware of these numerical interpretations of their recommendations when they make them?

My biomedical general journal will apply to MEDLINE in January. I have always wondered why on earth they ask for my acceptance/rejection rates. Can anyone give me a clue? Especially after reading the blog and comments, I am even more intrigued.

This may be a niggling point, but speaking of chance I object to the term “quality” in this context. We are really talking about importance. The difference is that quality can be controlled for, but the importance of one’s discovery is often a matter of chance. It is wrong to say that someone does low quality work when they were just unlucky, their gamble did not pay off, and vice versa.

At Editorial Manager User Group meetings, I’ve suggested that journals should experiment with new workflows, such as the introduction of a paid “pre-submission inquiry” step before full submission. This would allow authors to pay for initial assessment of manuscript relevance by editorial staff before formal submission and peer review. This workflow would (a) reduce author anxiety (b) reduce unwanted papers (c) avoid unnecessary peer review, and (d) generate new revenue for journals. While trivial to implement, I’m not aware that any journal has experimented with this approach.

We do encourage authors to make pre-submission inquiries, but definitely don’t charge for them (they’re just an email with an abstract). They are definitely helpful for the reasons you outline above.

The FASEB Journal requires a pre-submission inquiry and charges $50, if I remember correctly. Also see the report on submission fees by Mark Ware for Knowledge Exchange.

Tim,

Thank you for this excellent analysis. I love the graph. I agree with David: we have to be careful not to confuse quality with importance. It is actually the importance of an article, more than its quality, that drives its citations. As an example, I cite the New England Journal of Medicine (the medical journal with the highest impact factor). The NEJM conveniently lists the citations for all its articles. The majority of articles generally have fewer than five citations, but a significant number of outlying articles have 100 or more. The rejection rate for the NEJM is 92%, yet that high rejection rate does not guarantee that every article will receive a large number of citations. In addition, a very famous investigator who has published in the NEJM was recently alleged to have committed scientific misconduct. A quick review of that author’s article shows that it has received over 450 citations. So citations are no guarantee of quality; rather, they reflect the perceived importance of the article.

I have recently returned to the US after a few years of living in China. Peer review and article acceptance are topics of great interest in China. Peer review, as it is conducted in the West, is a very foreign concept to the Chinese. Consequently, I was asked on many occasions to explain the secrets of getting published in a Western journal. Once again, I find myself agreeing with David. The review process is essentially objective, not subjective. The medical journals have explicitly explained the criteria used to evaluate manuscripts through CONSORT and the ICMJE Uniform Requirements. The quality of medical research is judged on the following criteria:

Have the authors asked the right question? In other words, will the answer to the question confirm current knowledge, redefine or further explain the mechanism of disease or demonstrate the efficacy and safety of a therapy?

Was the study designed well? In other words, is the study designed in such a way that it will answer the question with enough statistical power so that the answer can be applied to a larger population? Were the methods used well documented so that others could replicate the study, and did the authors use ethical means to collect, store, and protect their data?

Do the authors' conclusions correspond to the study results? In other words, did the authors prove or disprove their thesis, and did they then draw appropriate conclusions about how their findings apply to a larger population?

It is fairly straightforward: if the answer to all of the above questions is yes, then by definition you have a "quality" paper.

But there are many other reasons why a journal can reject a paper. The subject matter does not fit the editorial profile of the journal. The subject has already been covered by the journal. The subject is too narrow or too broad for the journal. There isn't enough space to publish the article. The editors don't think the article will be important enough (editors like high citations as much as authors do). All of these issues come into play in the acceptance and rejection rates of journals.

I often tell Chinese researchers that although their paper has to be of high quality to get in the door of a leading journal, quality is not the sole criterion used in deciding whether the paper will be accepted. I don't think many authors understand that there are many reasons beyond the "quality" of their research that can cause a journal to reject or accept a manuscript, and it is for this reason that they perceive the process as a bit of a coin toss.

I think this distinction between quality and importance is useful. Most researchers probably have a better handle on the quality of their research than on its importance. They probably think it is more important than the average researcher in the field does; otherwise, why would they have chosen that topic? This can generate a lot of apparently random submissions to top journals. Rejection rates then reflect the "quality" of a journal in terms of how much important research it publishes. The latter is definitely true: rejection rates are seen as a quality indicator. The former is just a hypothesis.

I’m a little late to the party, but I’ll point to a related study in the Journal of Clinical Investigation:

http://www.jci.org/articles/view/117177

This goes a little further into things by looking at the agreement between reviewers and decision outcome. We haven’t undertaken a similarly rigorous analysis since this study (1994), but a superficial view of recent JCI data appears not entirely different from the probability curve above.

I’m not sure if this has been raised in other comments, but it is not true that “If peer review were a pure lottery, the curve would be flat, as all papers would have an equal chance of acceptance”.

All your graph shows is that in a journal where reviewers are asked to assess the “quality” of submissions on a single continuous scale, the editors tend to follow the recommendations of the reviewers, rejecting those deemed “low quality” and accepting those deemed “high quality”.
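Matt's point can be illustrated with a minimal simulation sketch. It assumes, purely hypothetically, that every reviewer picks a recommendation at random from the scores used in the post (1 = reject, 2 = reject but encourage resubmission, 4 = accept with minor revisions), with no regard to the paper, and that the editor accepts any paper whose average score is at least 3. The function name `simulate`, the two-reviewer panel, the hidden "quality" value, and the threshold are illustrative assumptions, not Molecular Ecology's actual procedure.

```python
import random
from collections import defaultdict

# Reviewer scores from the post: reject, reject/encourage resubmission, accept/minor revisions.
SCORES = (1, 2, 4)

def simulate(n_papers=10000, n_reviewers=2, threshold=3.0, seed=1):
    """Simulate a 'lottery' review in which scores ignore each paper's true quality.

    Returns the acceptance rate for each possible average score, plus the
    hidden quality values of the accepted and rejected papers.
    """
    rng = random.Random(seed)
    accepted_by_score = defaultdict(list)
    quality_accepted, quality_rejected = [], []
    for _ in range(n_papers):
        quality = rng.random()  # hypothetical true quality; reviewers never see it
        scores = [rng.choice(SCORES) for _ in range(n_reviewers)]
        mean_score = sum(scores) / n_reviewers
        accepted = mean_score >= threshold  # editor simply follows the average score
        accepted_by_score[mean_score].append(accepted)
        (quality_accepted if accepted else quality_rejected).append(quality)
    # Fraction of papers accepted at each average reviewer score.
    curve = {s: sum(v) / len(v) for s, v in sorted(accepted_by_score.items())}
    return curve, quality_accepted, quality_rejected

if __name__ == "__main__":
    curve, acc, rej = simulate()
    print(curve)
    print(sum(acc) / len(acc), sum(rej) / len(rej))
```

Under these assumptions the acceptance curve jumps from 0 to 1 at an average score of 3, which is steep rather than flat, while the mean hidden quality of accepted and rejected papers comes out essentially the same (close to 0.5). A steep curve alone therefore shows that editors follow reviewers, not that reviewers track quality.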

What is the external validity of the “reviewer score”? For example, is there a correlation between this score and citations or views of published articles? Does a higher score in a rejected article predict eventual publication elsewhere?

It definitely is a problem if peer review is seen as a lottery. But I think there is some validity to this perception. As suggested in some of the other comments, the problem lies in the selection of reviewers: a sample of 2-3 experts is a poor way to assess the likely impact of an article across the entire community.

As noted by Matt Hodgkinson, the analysis presented simply shows that editors follow reviewers’ recommendations but does not assess the reproducibility of those recommendations.

One of the biggest problems is that the recommendations are based on the reviewer's sense of the paper's "importance" to the journal, which is highly subjective, rather than on the validity and quality of the science, which can be assessed more accurately by sampling the community more broadly.

See the recent paper below for more thoughts on these issues:

http://www.frontiersin.org/Computational_Neuroscience/10.3389/fncom.2011.00055/abstract

This is why the peer-review process used for books is better suited, as it involves judgments not just by "experts" but by professional staff editors and faculty editorial boards of university presses, which represent a wide span of disciplines and can speak to the "importance" of a work in a way much more likely to represent its broader audience.

Thanks for the comments. I agree that the link between reviewer score and a measure like citation rate needs further exploration, but the hypothesis "reviewer scores are unrelated to the quality or importance of the manuscript" is hard to defend. I would still like to see good evidence that a highly qualified expert who devotes 6+ hours to carefully evaluating the robustness and importance of a paper is unable to say anything about either of those things.

I am just beginning, and within the last year or so I have had three papers accepted and two rejected by international ecology journals, which I would like to think indicates that I am setting my standards for where I submit at about the right level.

When I have been rejected, the editor has accompanied the rejection both times with a statement about the journal’s rejection rate (both above 80%). So it seems to me that some editors attach rejection rate to their journal’s quality.

What discourages me about peer review (including NSF proposal review) is that reviewer enthusiasm for my submissions has varied wildly. Where one reviewer doesn't see generality or novelty, another will say "this is exciting", "this is an important development", or even "this should definitely be accepted". When one reviewer makes comments like these and another (and it usually seems to be just one or two other reviewers) is lukewarm, it is hard not to regard peer review as a lottery. It makes me feel that had I flipped heads twice in a row I would have been in, and tails twice in a row I would have been out for sure. So far, editors have gone both ways on me when my reviews split evenly between positive and indifferent or negative.

It also doesn't help when it is clear from a reviewer's comments that they completely missed something that was prominently presented in the paper, and they use its perceived absence as the basis for negativity. This has happened to me with all of my submissions (accepted or rejected), although only about half of the individual reviewers have done this.

I obviously have a small sample size (five journal submissions and one DDIG proposal). Still, I feel as if I won't be able to find a job if I don't publish in the best journals possible, even if those journals aren't the best places to convey my results to the audiences for whom they will be most valuable. I should also say that my adviser pressures me to aim high, at times higher than my own judgment would suggest. I consider myself high-achieving, independent, and self-motivated, but as someone who is a lot more productive when not driven to worry about such things, I find myself alienated by the review and funding process as I wrap up my doctorate.

By the way, I found this post while Googling the rejection rate of a journal I just submitted to.