We all recognize that the Internet has transformed not only how much information is available, but also how – or whether – that information is discovered, consumed and disseminated. But how do we separate trustworthy information from questionable “facts” in a world where technology has disrupted the traditional knowledge supply chain controlled by libraries and publishers? In this post Julia Kostova (Oxford University Press) and Patrick H. Alexander (The Pennsylvania State University Press) consider some of the issues raised for the role of publishers as custodians of quality and reliable information.

Last March, a report that Google was planning to change its search algorithm made the news. According to the story, picked up by numerous media outlets, Google would rank pages based not on popularity, as it does currently with its PageRank algorithm, but on veracity. Under the new plan, instead of ranking sites according to the number of in-links, which inadvertently conflates trustworthy results and spurious claims, Google would rank sites by weighing the reliability of the information they contain against Google’s repository, the Knowledge Vault. The more accurate the information, the higher it would rank in search results.
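The contrast between link-based and accuracy-based ranking can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not Google's method. Real PageRank propagates scores through the whole link graph rather than counting raw in-links, and Google's Knowledge-Based Trust research estimated source accuracy with a probabilistic model rather than a simple match rate. The knowledge base, site names, in-link counts, and extracted claims below are all hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Hypothetical stand-in for a knowledge repository such as the Knowledge Vault:
# (subject, predicate) -> the object value accepted as correct.
KNOWLEDGE_BASE = {
    ("water", "boiling_point_c"): "100",
    ("earth", "orbits"): "sun",
    ("vaccines", "cause_autism"): "no",
}


@dataclass
class Site:
    name: str
    in_links: int                        # crude popularity signal (hypothetical counts)
    facts: List[Tuple[str, str, str]]    # (subject, predicate, object) claims on the page


def popularity_rank(sites: List[Site]) -> List[str]:
    """Order sites by raw in-link count, a simplified proxy for link-based ranking."""
    return [s.name for s in sorted(sites, key=lambda s: s.in_links, reverse=True)]


def accuracy(site: Site) -> float:
    """Share of a site's checkable claims that agree with the knowledge base."""
    checkable = [(s, p, o) for s, p, o in site.facts if (s, p) in KNOWLEDGE_BASE]
    if not checkable:
        return 0.0  # nothing can be verified, so this toy treats the site as least trusted
    correct = sum(KNOWLEDGE_BASE[(s, p)] == o for s, p, o in checkable)
    return correct / len(checkable)


def veracity_rank(sites: List[Site]) -> List[str]:
    """Order sites by agreement with the knowledge base, breaking ties by in-links."""
    return [s.name for s in sorted(sites, key=lambda s: (accuracy(s), s.in_links), reverse=True)]


# Hypothetical example data.
sites = [
    Site("viral-blog.example", 5000, [("vaccines", "cause_autism", "yes"), ("earth", "orbits", "sun")]),
    Site("textbook.example", 800, [("water", "boiling_point_c", "100"), ("earth", "orbits", "sun")]),
    Site("forum.example", 1500, [("water", "boiling_point_c", "90")]),
]

print(popularity_rank(sites))  # ['viral-blog.example', 'forum.example', 'textbook.example']
print(veracity_rank(sites))    # ['textbook.example', 'viral-blog.example', 'forum.example']
```

With these made-up inputs, the most-linked site drops to second place once its claims are checked, and the inaccurate forum falls to last: the kind of reordering the reported change implied.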

Given the exponential (and unstructured) growth of online resources, organizing and vetting information in the digital space has become a priority. For Google, which in 2014 received 68% of all internet searches (some 5.7 billion searches per day, or about 2 trillion per year), and where the number one position in search results gets 33% of search traffic, providing dependable information is critical if users are to continue to view the search engine as essential to information discovery. But Google’s mission to organize knowledge raises questions about who decides what information is trustworthy in the digital-era knowledge-supply economy, and what role scholarly publishers will play in it.

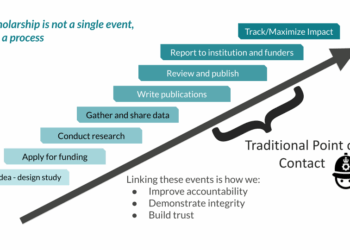

The internet has transformed not only how much information is available, but also how – and whether – that information is discovered, consumed and disseminated. It has also altered how we determine what is trustworthy and what is not. Before the internet, publishers and libraries, together with the academic community, controlled the knowledge supply chain that assured “trustworthiness” and minimized the amount of misinformation. The academic community vetted knowledge, and libraries, in particular, were the final validation of research by virtue of collecting trustworthy (i.e., “peer-reviewed” or otherwise verified) information in research libraries. But in the digital-world supply chain, where the internet, and not the library, has become the first point of departure for researchers, libraries have been disintermediated, cut out not only of the distribution link in the supply chain but also of establishing what is trustworthy. As a result, in the digital space, information – whether reliable or questionable – swirls together in an algorithmic stew, affecting research outcomes as well as their interpretations.

For example, internet search results are highly selective, both at the level of search and discovery and at the level of the information itself. Resources that are not available digitally are being excluded. Conversely, an overabundance of information distorts research outcomes: buried under an avalanche of (mis)information, researchers can’t always separate the good from the bad. At the other end of the spectrum, misinformation persists online despite its untrustworthiness. The Scholarly Kitchen has previously discussed the afterlife of retracted papers, which often continue to circulate in the digital space after retraction, possibly still influencing research. A recent report in Inside Higher Ed noted that “predatory publishing” by Open Access journals with “questionable peer review and marketing” is on the rise. Information presented as factually accurate when it is not can torment even the most diligent researcher.

The question of trustworthiness is therefore more important than ever, as it can affect research outcomes. Google’s desire to determine veracity raises questions about the future place of scholarly publishers in the knowledge supply chain. To be sure, university presses do a lot more than simply manage and ensure rigorous peer review. In an information-overload environment, where “facts” can easily be collected, published and located, the vetting and curating of expert knowledge that scholarly publishers provide is arguably more critical than ever, and can’t be dislodged by improved search technology.

But as new players enter the fray (technology companies like Google and Facebook, crowd-sourced projects like Wikipedia, commercial publishers, and others whose commitment to ensuring the accuracy of knowledge may differ from that of peer-reviewed scholarly research), they upend the established knowledge-validation process. Currently, publishers of all stripes obligingly send Google and other search engines their metadata, DOIs, and keywords, and are rushing to supply full-text XML so that it can be crawled. We cross our fingers and throw salt over our left shoulders in the hope that our prestige as scholarly publishers will ensure the integrity of our publications and that this, in turn, will put them at the top of search results.

But what will happen as information from outside the traditional, peer-reviewed supply chain enters the stream via metadata, online publications, or other forms of content? The lines between commercial, not-for-profit, society, university, and institution-based publishing are blurring, as was vividly illustrated by the recent announcement of Random House’s partnership with Author Solutions to create “a new model of academic publishing,” Alliant University Press. New players are entering the space of trustworthy research. Can scholarly publishers maintain control of the standards for trustworthy scholarship, or do they risk a disintermediation not unlike what happened to libraries?

As it turns out, the story about Google’s trust-based algorithm was less than accurate: the search engine giant was merely exploring the possibility in theory, in a research paper. Not that you’d be able to tell this was only theory from the 1.5 million hits on the search expression “Google truth algorithm.” Many took the report as reflecting concrete plans, or at least as a portent of the future. Whatever Google’s plans, the episode provides an occasion to reconsider the role of academic publishers as custodians of trust in the knowledge-creation supply chain.

Discussion

34 Thoughts on "The Knowledge Supply Chain in the Internet Age: Who Decides What Information Is Trustworthy?"

This article seems to ignore, or even deplore, the vast expansion of the marketplace of ideas that the Internet has facilitated. There is no knowledge supply chain, but if there were one, then I am glad we have finally broken free of it. The answer to the question of who decides what information is trustworthy is very simple: each of us. Of course there is a downside to this new freedom, as with all freedoms, but the value is far greater. The genie of the free and global exchange of ideas is not about to go back into the bottle. I like to think that publishing, especially broadsides and radical pamphlets, created the same sort of consternation in the establishment when it first appeared.

You may be surprised, but I can agree with most of what you’ve said. The “vast expansion of the marketplace of ideas” prompted the post, and the post is not suggesting that the genie should be put back in the bottle, only asking how, now that the genie is out, we decide what’s trustworthy. While you may trust your own judgment to know what is “trustworthy,” I’m not quite that confident. I need some help in knowing that what I’m reading or viewing is trustworthy. The unfettered Internet seems not to help me in that regard the way that libraries, for example, do when it comes to helping me judge the trustworthiness of information.

Personally I find the unfettered Internet very good at helping me decide who to believe, in controversial cases. But “ask someone you trust” is a fine heuristic. In my case it does not happen to be a librarian, because the last time I visited a library was to get a demonstration of this new gadget called the World Wide Web.

“The answer to the question who decides what information is trustworthy is very simple: each of us…”

That approach might work when trying to decide whether Donald Trump is an entertainer or a serious contender, but when applied to scholarly information and research, misinformation and chaos are likely outcomes. Most people are not qualified to weigh the veracity of a cutting-edge immunology study against an uninformed blog post on the utility of vaccinations, for example.

The point is that if the people asking the question are not trained in the science, then theirs is not a scientific question. Hence “trust the journals” is not an answer, because they could not possibly understand the journal articles. I find the blogs very good at shredding questionable knowledge claims. That is probably one of their primary attractions.

I think the point is that changes to the infrastructure within which we do research affect the nature and outcomes of research. It’s as true for diligent researchers as it is for casual info seekers. I’m fond of citing this recent study that looks at order bias on an econ listserv and that shows that what’s at the top gets the most views, downloads and citations. That’s here: http://www.nber.org/papers/w21141

Now, when you consider that research overwhelmingly starts on Google, the question of trust on the internet is actually critical for scholarship.

Actually, while ordinary search heavily (not overwhelmingly) starts with Google, a lot of scientific search starts with Google Scholar, Scopus or WOS. When I am looking for science I never start with Google. Moreover, STM put out data a few years ago suggesting that for researchers it also starts with journals and a variety of other sources. Search engines were less than 30% of the starting points, as I recall.

But this does not really address the issue of trust as raised in today’s post. I think, first, that the idea that we are being swamped with misinformation is incorrect. Second, to the extent that we are, the publishers are in no position to change that.

The “assumption that we are being swamped with misinformation” is actually not our assumption, but Google’s. That assumption stands behind Google’s interest in developing a “Knowledge-Based Trust” algorithm. Whether publishers are in a position to change anything falls outside the scope of the post, except to point out that on the Internet, the inability to discern what is reliable information remains a problem for a publisher that presents its information as reliable vis à vis other “information” providers whose primary objective is not trust but money.

This is getting a bit strange. First of all, I just reread the post and the misinformation claims appear to be yours, not Google’s. Second, most Internet content is free, so it would appear that the publishers are the ones making money. Not that I object to that, because they are doing the heavy lifting.

But I think we have drifted away from the topic, which is one I have studied at great length. There are probably fewer than 7 million publishing researchers in a world of over 7 billion people. How those billions get their information is a fascinating topic of research. But in no case are the publishers and libraries going to control that flow. The floodgates of information, and misinformation, and disinformation, have opened for good.

I believe the first issue that needs to be addressed is that not all knowledge (new or assembled) is produced by academics, nor does all the work of academics appear in scholarly journals. Next, it is also clear that not all such knowledge is permanent or accepted by all academics as valid in theory and practice. As Einstein is supposed to have said to Heisenberg: “Whether you can observe a thing or not depends on the theory which you use. It is theory which decides what can be observed”.

Academic journals have been used to control such knowledge and to decide whose knowledge is accepted; hence the proliferation of contrarian journals. Additionally, publishing, particularly for academics, serves as a major default criterion for promotion and tenure, with all the ramifications that go beyond truth validation.

The Internet is laying such issues and more bare and, as this editorial notes, providing alternatives that bypass the gatekeepers claiming the true path to validating knowledge. Academics, at least those whose future is to be tenure-track faculty, increasingly have a vested interest in maintaining this relationship. With Watson and its future successors able to scour the literature and, as pointed out here, to get down to the text, graphics and other data, the ability to provide measures of current validity challenges the rather cumbersome and self-serving functions represented by the current model of academic publishing. It can also meet the concerns voiced in the Scholarly Kitchen about Open Access of various stripes and colors.

As those who study resistance to innovation know, the stated rationale is often not the underlying reason for the concern and resistance. The issue of journals serving as validators, and the possible loss of that self-stated position, does not address the much more complex issues faced by journal publishers, whether they are for-profit, not-for-profit, or society publishers.

Good point about the emergence of new, alternative venues for research and knowledge. Worth thinking more about what that would mean for scholarship down the road.

Descartes said: I think, therefore I am.

John Doe said: I think, I put it on the internet, therefore it is.

Did Google actually change its algorithm? Given the intellectually notorious problems with computer rankings of relevance, I’d like to see the algorithm for veracity.

See the last paragraph of the article above.

As far as I know, the article (arXiv:1502.03519v1) was just a “what-if” paper. Of course, Google’s algorithm is probably not what it was back in Feb. 2015, when the article originally appeared.

I realize this post is more about publishers but there is a discussion of libraries that I’d like to engage. As much as I believe that libraries have many important roles in scholarship and information supply, I worry that the characterization of history does a disservice to the diversity of materials that research libraries have collected from the beginning:

“The academic community vetted knowledge, and libraries, in particular, were the final validation of research by virtue of collecting trustworthy (i.e., “peer-reviewed” or otherwise verified) information in research libraries.”

Yes, our collections include peer-reviewed and otherwise verified materials. But, they never included only those materials! It may not have been as vast a set of resources as what is now within clicks on the web but pamphlet files, archives of primary sources, newspapers, popular magazines, and more have been part of research library collections from the beginning. Anyone assuming that everything in the library was somehow scholarly-verified/trustworthy would have been making a mistake (as indeed more than one student learned when citing Time or Newsweek in a paper – to give a simple example).

For what it is worth, my experience is that many of our users are looking to libraries more now than before to help judge trustworthiness: not for the library to make the decision by virtue of having an item in its collections, but as a source of training in strategies and heuristics for evaluating quality and making decisions about it.

First, thanks so much for your thoughtful comment. While the post does focus on the question of peer review and/or who validates research, your point about the importance of library holdings is spot on. It underscores the problem of the “disintermediation of libraries” and how much amazing information remains obscured to the average internet user. Even formidable researchers are either not aware that some of their resources are actually library-related, or they fail to realize how much valuable material exists in libraries, because they are no longer going to the library to do research and even a Google Scholar search does not reveal all that libraries can offer. Your point that not everything a library holds is peer reviewed cannot be denied, but can we agree that someone in the library judged what materials were worthy of acquiring (like Time or Newsweek)? While I wish that more people were looking to libraries to “help judge trustworthiness,” if the data about how people perform research has any grain of truth, libraries are, unfortunately, not in the loop. That is of deep concern to anyone who cares about education or the need for an informed citizenry.

Truth is really the wrong direction to take for any discussion of knowledge. Truth is a moral and religious term. God may reveal truth, I say MAY, but neither science nor any other form of human understanding deals with both truth and knowledge together. Scientists rely on empiricism. Simply put, they observe. They observe things around them and also observe things they arrange to happen, such as experiments. Then other scientists observe also. Over time, if the scientists agree on the observation results, a consensus is created. This consensus is knowledge. It is trustworthy knowledge, at least according to scientists. A lot of knowledge besides science is also based on observation, but generally it is not verified as closely, and no consensus on its validity is established. My conclusion is that trustworthy knowledge is the result of observation, more observation, and some level of consensus on the contents of the observations and what next steps should be taken to provide greater consensus. But trustworthiness is also a relationship. That is, even with multiple observations about which there is a high level of consensus, knowledge might still be judged unreliable if those involved in the relationships deem the sources of such knowledge biased or too narrow.

As an analytic philosopher of science, I have to disagree with most of this. Truth is a core concept in logic, which has nothing to do with religion or morality. In epistemology, knowledge is often defined as justified, true belief. So the two concepts are intimately connected. Beyond that, while science is certainly heavily dependent on careful observation, there is a great deal more to it. There are also the complex theories which explain those observations, often invoking mechanisms which are not directly observable. Quantum theory for example. This too is knowledge, or it can be provided it is true. Consensus is also important for progress, but it is merely a general agreement, at a certain time, as to what is accepted as true. As we all know, consensus can be wrong. Science is not simple.

David, you and I approach this conversation from two different starting points. If I were to approach this from the standpoint of “professional” philosophy I would begin with this from William James, “TRUE IDEAS ARE THOSE THAT WE CAN ASSIMILATE, VALIDATE, CORROBORATE, AND VERIFY. FALSE IDEAS ARE THOSE THAT WE CANNOT. That is the practical difference it makes to us to have true ideas; that therefore is the meaning of truth, for it is all that truth is known as.” …. “The truth of an idea is not a stagnant property inherent in it. Truth HAPPENS to an idea. It BECOMES true, is MADE true by events.” I concur with James on true and truth, as a matter of philosophy. However, the “person in the street,” while accepting some of this, often mixes it with moral and religious beliefs, and assumes that the sources of truth cannot be immoral, and thus must originate from moral standards that in large part are similar to her/his own.

As to science, please give some insight into the “great deal more to it” that makes up science apart from observation. I assume you mean the moral code of science – to observe and report honestly and fully? Quantum theory is now supported by a wide consensus among scientists and philosophers. This makes it “true,” scientifically speaking. Scientists continue to test it by observation. In such cases I always apply the Maxim of Pragmatism, “Consider what effects that might conceivably have practical bearings we conceive the object of our conception to have: then, our conception of those effects is the whole of our conceptions of the object.”

Peirce Edition Project, Peirce Edition (1998-06-22). The Essential Peirce, Volume 2: Selected Philosophical Writings (1893-1913) (p. 135). Ingram Distribution. Kindle Edition.

James, William (2009-10-22). Works of William James. The Varieties of Religious Experience, Pragmatism, A Pluralistic Universe, Meaning of Truth and more (Mobi Collected Works) (Kindle Locations 21-24). MobileReference. Kindle Edition.

Ken, I certainly do not agree with James, although I am a big fan of Peirce. But in any case we have learned a great deal since these folks wrote. Philosophy progresses just as science does. As for science, what I mean by a great deal more is the entire theoretical and explanatory side. (Interestingly, Peirce was one of the first to write about this.) Explanation and observation are two very different things. In fact explanation is the primary job of science.

Consensus does not make beliefs true. It merely means they are accepted as true for the time being.

David, Peirce is certainly more to be credited with advancing Pragmatism. But James set the standard for American Philosophy, and for Psychology as well. His work has influenced literally thousands of scientists and philosophers. As to scientific method and theory, they aren’t what most assume them to be. There is no “scientific method,” other than to observe as frequently and from as many perspectives as possible. Scientific theory is merely a shorthand to allow the observations to shine through more fully and clearly. In other words, science is a relatively simple process, but very hard work, requiring honesty and commitment to record keeping beyond the capability or inclination of most people. And consensus for the time being, for the moment if you will, is all you ever get from all this work. And in most instances we don’t even achieve that. We’re just ignored.

Ken, I disagree completely. Science is a very complex and poorly understood process, one I have spent many decades working on. Your “shine through” metaphor is not very explanatory.

David, read Feynman, Einstein, etc. Science is not a particularly complex process. The things scientists attempt to observe and record their observations about can be very complex and difficult to make sense of. That’s where all the hard work of observing again and again, with different tools and techniques, comes in. If we observe long enough and with sufficient imagination in tools and processes of observation, perhaps we will eventually observe enough for the working arrangements of our objects of observation to “reveal themselves” to us. And that’s what it is, a revelation. The objects exist apart from us, have no obligation to reveal themselves to us, and don’t care if we don’t like that. We observe them in hopes of finding how and why they look and operate as they do. And 99% of the time we fail. Under these conditions who in their right mind would want to be a scientist? I ask you, who?

Scientists do, that’s who.

I have read Feynman and Einstein, as this is my field (or one of them). Feynman is a good example of someone exhorting science to be as they think it should be, rather than trying to figure out how it actually is. Both were great physicists who to my knowledge never actually studied the nature of science. This is a little like star athletes who think they are experts in sports medicine.

Here is an example of complexity that is about consensus. A while back my research team discovered that there is a fundamental transition in the structure of the co-authorship networks of a subfield when consensus is achieved. Prior to consensus there are a number of distinct clusters, as different groups pursue different possible hypotheses. Then there is a relatively rapid transition to a supercluster. The question is whether this is a universal property of science.

My long time colleague William Watson discusses this, with a link to my team’s report, here:

http://www.osti.gov/home/ostiblog/why-might-critical-point-behavior-coauthorship-networks-be-universal-symmetry-group-assoc-0

Science is arguably the most complexly integrated of all human systems. Millions of people, all over the world, building on one another’s work for hundreds of years. That is its power.

As to science what you say is not so. The correct statement would be that at the moment this is the prevailing theory until proven wrong. That is very different from saying something is true.

Harvey, it’s not possible to prove any theory, any notion at all wrong. Science operates via consensus as to what the observations show and what they mean. That’s the best that can be done.

Ken, technically, proof and disproof are not part of science; they are math concepts. In science we have the weight of evidence, for and against a given theory or hypothesis. This weight can change over time, causing accepted theories to be rejected. One assumes that the consensus, when it occurs, is based on the weight of evidence. This is basic rationality, so I am not sure what you mean by “the best that can be done.” What is missing?

So how do you account for the first law of thermodynamics?

What sort of account are you looking for Harvey, beyond tracing its development and acceptance? I do not understand the question. I hope you are not claiming that the first law cannot someday be found to be false, because of course it can. Stranger things have happened in science. But this is no reason to doubt the first law. As Descartes pointed out, the fact that any belief may be false is no evidence against it.

David, just following up on my boyhood mentors, Einstein and Feynman. Einstein: “The whole of science is nothing more than a refinement of everyday thinking.” Feynman: Science is our way of describing, as best we can, how the world works. The world, it is presumed, works perfectly well without us. Our thinking about it makes no important difference. It is out there, being the world. We are locked in, busy in our minds. And when our minds make a guess about what’s happening out there, if we put our guess to the test, and we don’t get the results we expect, as Feynman says, there can be only one conclusion: we’re wrong. Both remarks are somewhat oversimplified, but they get the points across. The problem is that humans have lots of other things going on, including emotions and the “struggle for survival,” which sort of divert their attention from thinking clearly, or at times from thinking at all. All of this makes “observing and recording” no simple or easy task.