The opinions of highly respected senior scientists tend to get a lot of attention, and a number (here, here, and here) have lamented the state of peer review. But what if the reviewer experience for high-profile researchers is the exception and not the rule?

One common complaint voiced by big name scientists is that the rapid growth in the number of papers submitted to journals has led to a massive increase in the demands on the reviewer community. After all, 10 years ago, there were fewer papers being published (Figure O-13 here), and now they’re getting a huge number of requests to review. A reasonable conclusion is that the peer review system is more overloaded now than ever before — it may even be close to the point of collapse.

This alarming conclusion may instead reflect a more benign change: the steady increase in their reputation over the last decade. If seniority alone is a good predictor of the number of review requests, these big name scientists would have seen a steady rise in their review workload even if overall submissions had stayed the same. So while it’s natural to join the dots between rising submissions and your own workload, and from there to the imminent demise of peer review, the connections may not really exist.

To start with, there’s no evidence that increasing submissions increases the per-individual review burden. Our study with data from the journal Molecular Ecology found that neither the average number of reviews per person nor the number of requests required to get a single review changed much between 2001 and 2010, even though the number of submissions almost doubled (see here and here).

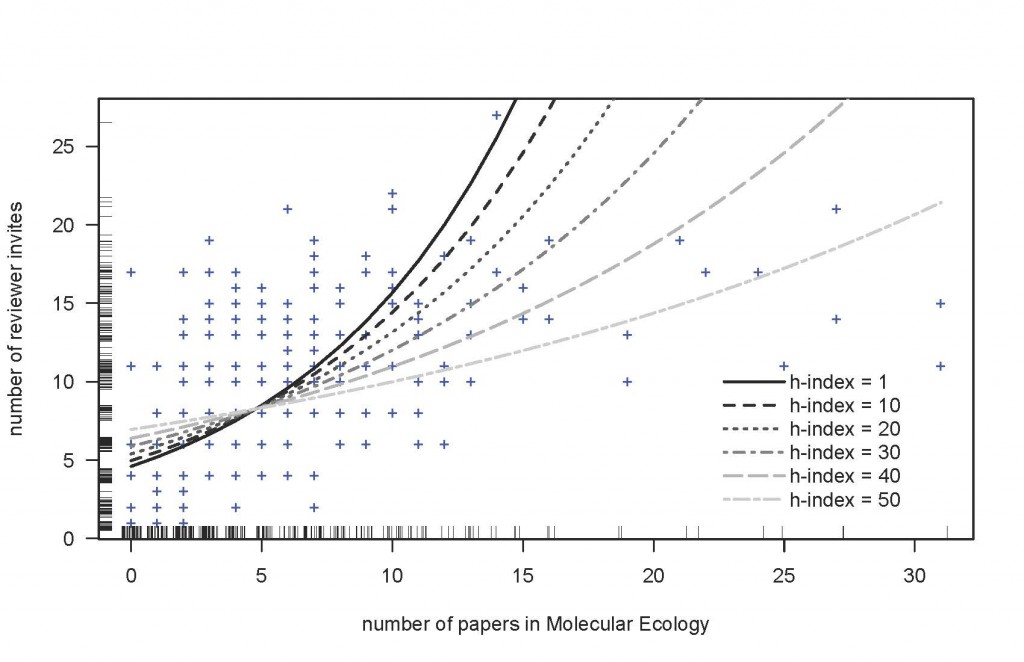

Second, what is the actual relationship between “fame” and the number of reviewer invitations? To get a feel for this (again with Molecular Ecology data), we* picked sets of 30 reviewers who had received 1, 2, 4, 6, 8, 10, or 11 invitations since April 2008 and everyone who had received more than 13. To measure “fame,” we used Web of Science to calculate h-index values for everyone. Lastly, to approximate the match between the researcher’s interests and the scope of Molecular Ecology, we counted how many papers they had published in the journal.
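For readers unfamiliar with the measure, a researcher's h-index is the largest number h such that h of their papers have each been cited at least h times. The sketch below shows that definition in Python. The function name and the citation counts are made up for illustration; the values in this post came straight from Web of Science, not from code like this.

```python
def h_index(citations):
    """Return the largest h such that h papers have at least h citations each."""
    # Rank papers from most cited to least cited.
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, cites in enumerate(ranked, start=1):
        if cites >= rank:
            h = rank   # the top `rank` papers all have >= `rank` citations
        else:
            break      # every later paper has even fewer citations
    return h


# Hypothetical citation counts for one researcher's five papers.
example_citations = [10, 8, 5, 4, 3]
print(h_index(example_citations))
```

Because the measure only ever goes up as papers accumulate citations, it works as a rough proxy for seniority as well as for "fame."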

These data are plotted in Figure 1; the lines give the relationship between number of Molecular Ecology papers and reviewer invites for a range of h-index values. Plots for separate bins of h-indices can be seen here.

Unsurprisingly, the main predictor of how often someone was invited was how much they published in Molecular Ecology. This makes sense because people who do lots of research in core molecular ecology areas will be suitable reviewers for a high proportion of our papers.

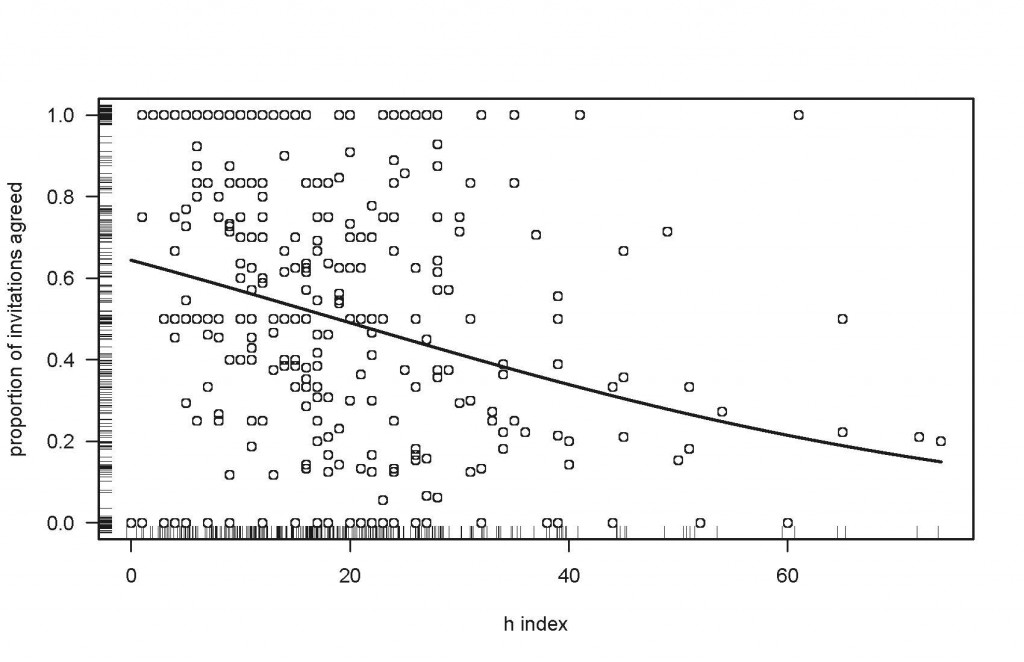

More surprisingly, fame mostly reduced the number of review invitations: above about five Molecular Ecology papers, a famous scientist would get fewer invites than a lower h-index researcher with the same number of papers. This implies that editors deliberately pick more junior scientists from groups working on “core” molecular ecology topics, probably because the big name is likely to decline the invite (see Figure 3).

For researchers with fewer than five papers in the journal, the opposite is true — the more famous these “peripheral” molecular ecologists are, the more invites they get. This is probably because the topic of the paper is quite far from our normal scope and the editor gravitates towards researchers they’ve heard of rather than taking a risk with an unknown junior.

The data above actually suggest that being famous generally makes you less likely to be invited, especially if you’ve published with us often. However, as a researcher you can only be central to one field, and peripheral to lots of others, and hence being famous may mean you attract review invitations from many different disciplines.

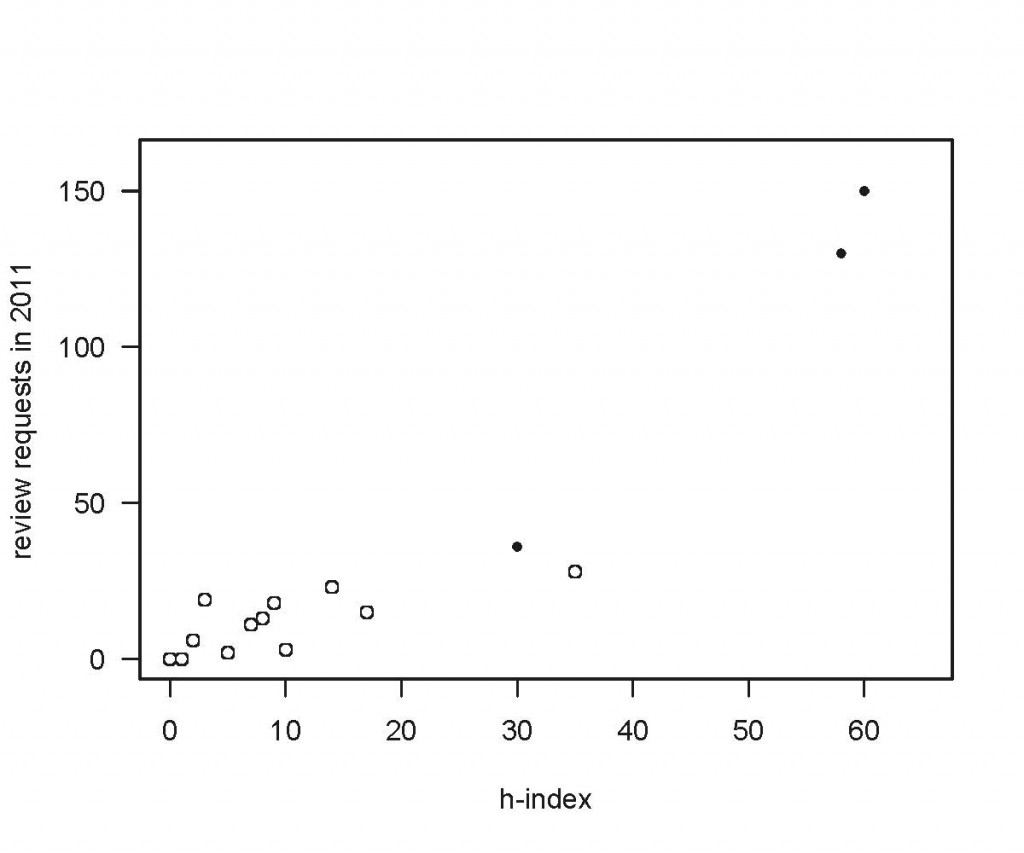

Since this can’t be examined with data from just one journal, I did a quick survey around the biodiversity department here at the University of British Columbia, and asked 15 people with varying seniority how many review requests they’d had since the start of 2011; the resulting graph is below (the h-index data come from Web of Science). The black dots are estimates based on a monthly or weekly rate — even given considerable error in these, you can see that scientists with high h-indices get almost an order of magnitude more requests than everyone else.

This huge volume of review requests hitting senior academics means that they decline a much higher proportion than everyone else. This can clearly be seen in the Molecular Ecology data — a plot of proportion of review invites accepted against h-index gives a fairly strong negative slope. Furthermore, this slope is not driven by the points on the far right of the graph, as a plot of h-index just ranging from 0 to 20 yields a very similar relationship.

The big picture here is that senior academics are being bombarded with requests to review papers. However, since their journey along the x-axis of Figure 2 has coincided with a rapid growth in scientific publications, they may be misdiagnosing their review workload as a symptom of a system increasingly in distress. These academics then feel compelled to write editorials and opinion pieces complaining about the dire state of peer review, and the steady repetition of the “peer review is broken” mantra has led to a growing sense of panic in the research community.

In fact, there’s little evidence above that junior researchers (i.e., most of the people in the figures) are overburdened, and the evidence for a crisis is weak. Grouse all you like, but please stop yanking the emergency chain . . .

* Thanks very much to Loren Rieseberg and Arianne Albert for discussions and help with the data analysis

Discussion

15 Thoughts on "The Famous Grouse — Do Prominent Scientists Have Biased Perceptions of Peer Review?"

Interesting stuff Tim, but I am a bit confused. Just under Figure 1 you conclude from your ME data that “More surprisingly still, fame mostly reduced the number of review invitations….”

Then you seem to find the opposite from your (admittedly sparse) Departmental data, and you conclude that “The big picture here is that senior academics are being bombarded with requests to review papers.”

These two conclusions seem contradictory. What am I missing?

Hi David,

It’s superficially contradictory, but my reasoning is as follows:

1) Imagine you’re in a lab that studies core molecular ecology themes, and that your group regularly submits papers to our journal (Molecular Ecology). Our editors tend to direct review requests to the more junior members of the lab because they’re more likely to agree and will finish it more quickly.

2) The pattern within Molecular Ecology is thus that (for the same number of Molecular Ecology publications) researchers with a lower h-index will get invited more often.

3) Although the high profile lab head is receiving relatively fewer invites from us, they’re also getting invites from all the other journals whose scope is close to ours, and a fair number from even more distant journals.

4) A researcher can only have a few ‘core’ journals, but the number of adjacent journals rises very quickly. For example, if journal scope was simplified to interlocking hexagons, you have one ‘focal’ hexagon, six immediately adjacent and 12 in the next ring out.

5) The lab head can thus have fewer review invitations from Molecular Ecology, but because their higher profile means that they attract invites from many more journals, their total number is much larger.
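The hexagon picture in point 4 can be checked with a little arithmetic. In a hexagonal tiling, ring k around a focal cell holds 6k cells, which gives the 1, 6 and 12 quoted above. The snippet below is only a sketch of that simplified model; the function names are made up for illustration, and real journal scopes obviously do not tile this neatly.

```python
def hexagons_in_ring(k):
    """Number of hexagons exactly k steps out from the focal hexagon."""
    # Ring 0 is the focal hexagon itself; every later ring has 6 * k cells.
    return 1 if k == 0 else 6 * k


def hexagons_within(k):
    """Total hexagons in rings 0..k, i.e. 1 + 6 * (1 + 2 + ... + k)."""
    return 1 + 3 * k * (k + 1)


for ring in range(3):
    print(ring, hexagons_in_ring(ring), hexagons_within(ring))
```

Under this toy model, a researcher with one focal journal has 18 others within two steps, which is why a small per-journal invitation rate can still add up to a large total.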

Hope that helps….

[Figure 1] implies that editors deliberately pick more junior scientists from groups working on “core” molecular ecology topics, probably because that big name will decline their invite (see Figure 3).

Tim,

Could editors be deliberately picking junior scientists because they know they will get higher-quality and/or more timely reviews from them?

http://scholarlykitchen.sspnet.org/2011/12/19/quality-reviewing-declines-with-experience/

If every paper is reviewed by two peers, the review system would certainly break down from overload. Scientists everywhere, including China and the Francophone countries, are now publishing in English, which means that the load on English journals has increased dramatically.

In the journal that I help edit, we take care not to abuse the review system. We screen all papers and reject those that do not fit the scope or style of our journal, that are not sufficiently interesting, useful or original, or that fail to meet the scientific standards we set. More than half the papers we receive do not pass our screening process. Our reviewers only get to see papers that have passed editorial screening.

I wonder how widespread is the practice of editorial screening prior to review?

Hi Francis,

We definitely do screen papers before sending them out for review, and the remaining ones are seen by 2.6 reviewers each (on average).

One thing we’ve found is that the authors of all the new submissions also become the new reviewers, so that adding more and more submissions doesn’t actually increase the per-individual reviewer burden. In fact, since the average paper has over four authors and we only get 2.6 reviews, each author only needs to do about 0.6 reviews per submission to make the system balance out.
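As a back-of-the-envelope check on that figure, the break-even load is just reviews per paper divided by authors per paper. The sketch below uses the 2.6 reviews quoted above; the author counts of 4.0 and 4.3 are assumed values standing in for "over four," and the function name is made up for illustration.

```python
def reviews_needed_per_author(reviews_per_paper, authors_per_paper):
    """Reviews each author must supply per submission for the system to balance."""
    return reviews_per_paper / authors_per_paper


REVIEWS_PER_PAPER = 2.6  # average quoted in the comment above

# Assumed author counts: exactly four, and slightly more than four.
for authors in (4.0, 4.3):
    load = reviews_needed_per_author(REVIEWS_PER_PAPER, authors)
    print(authors, round(load, 2))
```

The result does not depend on the number of submissions, which is the point: each new paper brings its own pool of potential reviewers with it.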

Tim

Too bad that no editor distinguished between “lead” and “led.” 🙂

You’re also missing the word “than” between “now” and “ever” in the next-to-last line of the second paragraph. But this is a very interesting article!

Reblogged this on Feral Librarian and commented:

This is an excellent data-driven analysis of peer review, which calls into question the conventional wisdom that increasing demands on key scholars for reviews have broken the peer review system. By looking at actual aggregate data, Tim Vines shows that “…while it’s natural to join the dots between rising submissions and your own workload, and from there to the imminent demise of peer review, the connections may not really exist.” In fact, it appears that while senior scholars may be being bombarded by review requests, the increase is due to their own increased prominence, not to any systemic factors.