Just as sap flowing in my maple tree alerts me to Spring in upstate New York, I can also tell the season by the type of consulting questions that come my way. For me, Spring means predicting journal Impact Factors before they bloom in June.

The work is routine, detailed, but not altogether challenging. If I’m lucky, I succeed at bundling more elaborate calculations, tests and analysis into my consulting. I could survive as a statistical consultant without predicting journal Impact Factors, but I’m not going to give it up while the work still lasts. The work comes because Thomson Reuters (the publishers of both the Web of Science and the Journal Citation Report) is not adequately addressing the needs of publishers and editorial boards; and in that void, consultants like me find a business niche.

In this post, I’m going to describe how the journal Impact Factor is calculated and highlight its major weaknesses, but mostly focus on how Thomson Reuters’ citation services could be reconfigured to more adequately satisfy the needs of publishers and editors.

Deceptively simple

The journal Impact Factor (IF) measures, for any given year, the citation performance of articles in their second and third year of publication. For example, 2013 Impact Factors (due for release in mid-June, 2014) will be calculated using the following method:

IF2013 = (2013 citations to articles published in 2011 and 2012) / (articles published in 2011 and 2012)

Simple enough? Let’s get into its complications:

1. What counts as an article? While the numerator of the Impact Factor includes all citations made to the journal in a given year, the denominator of the IF is composed of just “citable items,” which usually means research articles and reviews. News, editorials, letters to the editor, and corrections (among others) are excluded from the count. Yet, for some journals, there exists a grey zone of article types (perspectives, essays, highlights, spotlights, opinions, among others) that could go either way.

The classification of citable items is determined by a number of characteristics of the article-type, such as whether it has a descriptive title, whether there are named authors and addresses, whether there is an abstract, the article length, whether it contains cited references, and the density of those cited references (see McVeigh and Mann, 2009). As Marie McVeigh once described this process to me, “If it looks like a duck, swims like a duck, and quacks like a duck, we’re going to classify it as a duck.”

This approach attempts to deal with the strategy of some editors of labeling research articles and reviews as editorial material, yet it leaves room open for interpretation and ambiguity. And where there is ambiguity, some publishers will contest their classification, because fewer citable items means a higher Impact Factor score.
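To make the stakes of classification concrete, here is a minimal sketch of the calculation. All of the numbers are hypothetical, and the function name `impact_factor` is my own label, not anything from Thomson Reuters. It shows how moving a handful of grey-zone items out of the denominator raises the score while the numerator stays the same.

```python
def impact_factor(citations, citable_items):
    """Census-year citations divided by citable items from the two prior years."""
    if citable_items <= 0:
        raise ValueError("citable_items must be positive")
    return citations / citable_items


# Hypothetical journal: all 2013 citations to content published in 2011-2012.
citations_2013 = 1200
research_and_reviews = 380
grey_zone_items = 20  # perspectives, essays, etc. that could go either way

# Grey-zone items counted as citable items (larger denominator).
if_grey_counted = impact_factor(citations_2013, research_and_reviews + grey_zone_items)
# Grey-zone items classified as editorial material (smaller denominator).
if_grey_excluded = impact_factor(citations_2013, research_and_reviews)

print(f"{if_grey_counted:.3f}")   # 3.000
print(f"{if_grey_excluded:.3f}")  # 3.158
```

In this made-up case, reclassifying 20 of 400 items lifts the score by about 5 percent, which is why publishers have an incentive to contest borderline classifications.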

2. Two methods for counting citations. Authors routinely make errors when citing other papers. When sufficient information is provided, a correct link between the citing and cited papers can be made and the citation is counted in the summary record in the Web of Science. When insufficient or inaccurate citation information prevents resolution to the target article, the citation may still be counted in the Journal Citation Report, which relies on just the journal name (along with common variants) and year of publication. According to Hubbard and McVeigh (2011), the Journal Citation Report relies on an extensive list of journal title variants: 10 title variations per journal, on average, with some requiring more than 50 title variants. Because of the two distinct methods for counting citations, it is not possible to calculate a journal’s Impact Factor with complete accuracy by using article citation data from the Web of Science.
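The difference between the two counts can be illustrated with a rough sketch. This is not how Thomson Reuters actually processes references; the journal, its title variants, the article IDs and the `CitedReference` structure are all invented for illustration. The point is only that an article-linked count requires a resolved link, while a journal-level count needs just a recognizable title and year.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class CitedReference:
    journal_string: str              # journal name as typed by the citing author
    cited_year: int                  # publication year given in the reference
    resolved_article: Optional[str]  # article ID if the link resolved, else None


# Hypothetical title variants and article IDs for one journal.
TITLE_VARIANTS = {"journal of example research", "j example res", "j. example res."}
JOURNAL_ARTICLES = {"a1", "a2", "a3"}


def normalize(name):
    """Lowercase and collapse whitespace so variants compare cleanly."""
    return " ".join(name.lower().split())


def article_linked_count(refs, window):
    """Count only references that resolved to one of the journal's article records."""
    return sum(1 for r in refs
               if r.resolved_article in JOURNAL_ARTICLES and r.cited_year in window)


def journal_level_count(refs, window):
    """Count any reference whose journal string matches a known variant and year."""
    return sum(1 for r in refs
               if normalize(r.journal_string) in TITLE_VARIANTS and r.cited_year in window)


refs = [
    CitedReference("Journal of Example Research", 2012, "a1"),
    CitedReference("J Example Res", 2011, "a2"),
    CitedReference("J. Example Res.", 2012, None),  # wrong page number, link failed
    CitedReference("J  Example  Res", 2011, None),  # no volume or page given
    CitedReference("Another Journal", 2012, None),  # different journal
    CitedReference("J Example Res", 2009, "a3"),    # outside the two-year window
]
window = {2011, 2012}

linked = article_linked_count(refs, window)
journal_level = journal_level_count(refs, window)
print(linked, journal_level)  # 2 4
```

Here the journal-level count is twice the article-linked count, an exaggerated version of the gap that makes exact prediction from article records impossible.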

3. Reporting delays and error corrections. Waiting six or seven months to receive a citation report may not have been onerous when the Journal Citation Report began publication in 1975; however, it does seem increasingly anachronistic in an age where article-level metrics are being reported in real time. Thomson Reuters’ method of extracting, correcting and reporting metrics as a batch process once per year impedes the communication of results and propagates citation errors. When discovered, errors found in the Journal Citation Report need to be addressed, corrected and reported in a series of updates. As one editor confided in me after working to have their Impact Factor corrected, “The damage [to our reputation] has already been done.”

4. Reliance on the date of print publication. For most scientific journals, publication is a continuous process; however, many journals list two separate publication dates for their articles: an online publication date and a print publication date. The difference between online and print publication dates can range from several days, for some titles, to over a year in others. For articles that reach their citation peak in year four (rather than in years two or three), delaying the print publication date can substantially inflate a journal’s Impact Factor, research suggests.

Furthermore, as the Impact Factor is based on calendar year of the final publication, there is a temptation to hold articles back from entering their two-year Impact Factor observation window if you think they are likely to gather more citations later in their life cycle. I’ve occasionally heard accusations that editors purposefully post-date their journal issues or push issues from December to January specifically to gain an extra calendar year for their articles.
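A small sketch shows the mechanics of that extra calendar year. The dates are hypothetical and `if_years_counting` is a name I made up; the only rule it encodes is the one stated earlier, that an article published in year P falls into the Impact Factor windows for years P+1 and P+2.

```python
from datetime import date


def if_years_counting(pub_year):
    """Impact Factor years whose two-year window includes an article from pub_year."""
    return (pub_year + 1, pub_year + 2)


# Hypothetical article: online in November, assigned to a January print issue.
online = date(2012, 11, 15)
print_issue = date(2013, 1, 10)

by_online = if_years_counting(online.year)
by_print = if_years_counting(print_issue.year)

print(by_online)  # (2013, 2014)
print(by_print)   # (2014, 2015)
```

Dated by print issue, this article's citations are counted in 2014 and 2015, when it has already been available to readers for one to three years, rather than in 2013 and 2014.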

Summary of problems with the current system

The problems with the current WoS-JCR model can be summed up as:

- Ambiguity over article classification

- Separate citation counting systems

- Delay in reporting performance measures

- Reliance on print publication dates

As a result of these fundamental issues, we are left with a two-product solution that causes general angst and uncertainty, allows guys like me to exploit that uncertainty as a business, and creates an opportunity for some academics to make a career out of ridiculing the system.

I don’t know of any altmetrics advocate who believes that citations are not worth measuring, so I’d like to spend the rest of the time proposing a one-product solution for Thomson Reuters which, in the long run, will make their product much more useful and valuable to the scientific community and to those who wish to measure its publication performance.

One-product solution

Imagine a system where article-level citation data in the Web of Science are used to create real-time journal-level statistics and reports based on the date of online article publication, rather than the calendar year of print publication.

In order to deal with citation error, the onus of providing correct reference information should rest squarely in the hands of the publisher: If you don’t provide us with correct citation data, we can’t count your citations. In this one-product solution, authors would also be incentivized to report errors in the database. Feedback forms are currently provided in the Web of Science, but they are buried and do not encourage wide participation. Incentivizing authors to seek out and correct reference errors to their own papers would eliminate the need to create a separate citation counting system. To actively encourage accurate reference data, imagine, as the corresponding author of a paper, receiving the following email:

Congratulations on having your recent article indexed in the Web of Science. Please take a few minutes to check whether your title, author list, abstract and references are all listed correctly. This link will take you directly to your record and provide you with the tools to suggest any needed corrections. We will also provide you with free 24-hour access to our author metrics section so that you can see how your article is being cited by others, calculate your h-index, and provide you with other useful tools.

An accurate Web of Science citation database would then allow publishers and their editors to view, in real-time, the performance of their journals based on the date of online article publication. While the system would still calculate annual Journal Impact Factors, the date of online publication could also be used to provide users with a continuous (rolling) observation window. Journals that do not issue print or post-date article publication would not be put at a disadvantage in this model.
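One way such a rolling window might be defined is sketched below. This is my own assumption about how it could work, not a Thomson Reuters specification: citations made during the 12 months ending on a chosen date, divided by the number of articles whose online publication date falls in the two years before that 12-month period. The article IDs, dates and citations are invented.

```python
from datetime import date, timedelta


def rolling_impact_factor(online_dates, citations, as_of):
    """Rolling analogue of the Impact Factor keyed to online publication dates.

    online_dates: dict of article ID -> online publication date
    citations:    list of (cited article ID, date of citing publication)
    as_of:        last day of the 12-month citation census period
    """
    census_start = as_of - timedelta(days=365)
    window_start = census_start - timedelta(days=730)
    in_window = {a for a, d in online_dates.items()
                 if window_start <= d < census_start}
    if not in_window:
        return None
    hits = sum(1 for cited, when in citations
               if cited in in_window and census_start <= when <= as_of)
    return hits / len(in_window)


online_dates = {
    "a": date(2011, 6, 1),
    "b": date(2012, 9, 15),
    "c": date(2013, 8, 1),   # too recent for this window
    "d": date(2010, 12, 1),  # too old for this window
}
citations = [
    ("a", date(2013, 5, 1)),
    ("a", date(2013, 12, 1)),
    ("b", date(2014, 1, 10)),
    ("b", date(2012, 12, 1)),  # before the census period
    ("c", date(2014, 2, 1)),   # cited article outside the window
    ("d", date(2013, 6, 1)),   # cited article outside the window
]

rolling_if = rolling_impact_factor(online_dates, citations, as_of=date(2014, 4, 1))
print(rolling_if)  # 1.5
```

Because the window slides with `as_of`, the same function can be evaluated daily or monthly, and no article gains or loses a year depending on which side of January its issue lands.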

Acts of gaming would be more transparent and easier to detect. Based on a real-time reporting system, the detailed citation profile of your competitor’s titles becomes as transparent as your own. A rapid and unexpected rise in a competitor’s rolling Impact Factor, for example, may signal an atrocious case of self-citation, or the emergence of a citation cartel. In the current two-product model, an editor, spotting a rise in a competitor’s 2013 Impact Factor in June 2014 (when the Journal Citation Report is released), might need to comb through an entire year’s citation records in the Web of Science to spot the offending article published nearly a year and a half earlier. In a one-product model, identifying and reporting cases of citation gaming becomes much more immediate.

A one-system solution would also reduce the ambiguity in how Thomson Reuters classifies “citable items” as the calculated metric would be linked back to the articles that composed its construction. Impact Factors based on just the performances of research articles could be calculated on the fly by eliminating the contributions of reviews, editorials, letters, among other citing–but rarely cited–document types (see recommendations 11 and 14 of the DORA declaration). My personal opinion is that Thomson Reuters should abandon the classification system altogether and base classification as a “citable item” on whether the article includes any citations itself.
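As a rough sketch of what "on the fly" could mean, the snippet below computes a score over whichever items a filter selects, with the numerator and denominator drawn from the same records. The `Item` structure, the document types and the citation counts are hypothetical, and the two filters are only examples: one keeps research articles, the other applies my suggested rule that an item is citable if it cites anything itself.

```python
from dataclasses import dataclass


@dataclass
class Item:
    doc_type: str
    reference_count: int           # references the item itself contains
    citations_in_census_year: int  # citations it received in the census year


def filtered_impact_factor(items, include):
    """Citations to the selected items divided by the number of selected items."""
    chosen = [it for it in items if include(it)]
    if not chosen:
        return None
    return sum(it.citations_in_census_year for it in chosen) / len(chosen)


# Hypothetical two-year output of a small journal.
items = [
    Item("article", 40, 6),
    Item("article", 35, 2),
    Item("review", 120, 10),
    Item("editorial", 0, 1),
    Item("letter", 3, 1),
]

research_only = filtered_impact_factor(items, lambda it: it.doc_type == "article")
has_references = filtered_impact_factor(items, lambda it: it.reference_count > 0)

print(research_only)   # 4.0
print(has_references)  # 4.75
```

Because every score is derived from identifiable records, a publisher disputing a number could see exactly which items went into it.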

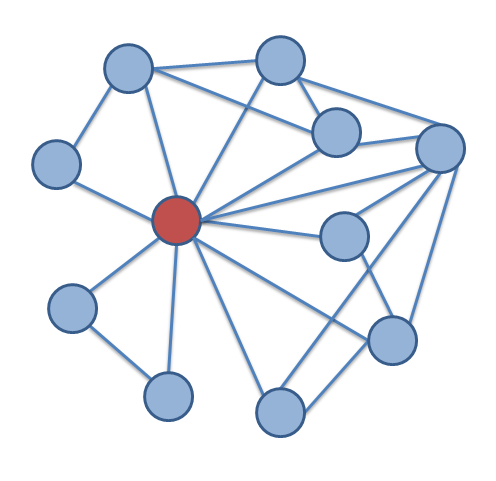

Last, a single product based on a database of linked citations could provide users with visualization tools that create journal maps from citation data. With such data, we would be able to see the flow of citations to, and from, each journal in the network, identify important (central) journals from the graph, understand the relationships among disciplines (clusters), and detect titles with high levels of self-citation and uncomfortably close citation relationships among two or more journals (cartels).
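Two of those checks can be sketched directly from a table of journal-to-journal citation counts. The journals, the counts and the 50 percent threshold below are all invented for illustration, and a real screen would need far more care, but the sketch shows how little is required once linked citation data are available.

```python
from collections import defaultdict

# Hypothetical flows: (citing journal, cited journal) -> number of citations.
flows = {
    ("A", "A"): 30, ("A", "B"): 10, ("A", "C"): 10,
    ("B", "A"): 5,  ("B", "B"): 5,  ("B", "C"): 40,
    ("C", "B"): 45, ("C", "C"): 5,
}


def incoming_totals(flows):
    """Total citations received by each journal, from any source."""
    totals = defaultdict(int)
    for (_, cited), n in flows.items():
        totals[cited] += n
    return totals


def self_citation_share(flows):
    """Share of each journal's incoming citations that come from itself."""
    totals = incoming_totals(flows)
    return {j: flows.get((j, j), 0) / t for j, t in totals.items() if t > 0}


def mutual_dependence(flows, threshold=0.5):
    """Pairs of journals that each supply at least `threshold` of the other's citations."""
    totals = incoming_totals(flows)
    journals = sorted(totals)
    flagged = []
    for i, x in enumerate(journals):
        for y in journals[i + 1:]:
            x_from_y = flows.get((y, x), 0) / totals[x] if totals[x] else 0.0
            y_from_x = flows.get((x, y), 0) / totals[y] if totals[y] else 0.0
            if x_from_y >= threshold and y_from_x >= threshold:
                flagged.append((x, y))
    return flagged


shares = self_citation_share(flows)
pairs = mutual_dependence(flows)

print(round(shares["A"], 3))  # 0.857
print(pairs)                  # [('B', 'C')]
```

In this toy network, journal A stands out for self-citation, while B and C depend on each other for roughly three quarters of their incoming citations, the kind of pattern that would merit a closer look.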

In conclusion, the Journal Citation Report is based on a process that made perfect sense in 1975 but looks more and more archaic each year. Combining two citation services into a single product would make explicit the way citation metrics are calculated and create a suite of new, dynamic tools directed at the author, editor and publisher. Such a revamping of the two-model system would require some major changes to a company that currently enjoys a strong and loyal customer base; nevertheless, most would agree that if we were to conceive and build a new citation reporting service today, it would look very little like the current system.

Until this product changes, other companies will continue to nibble on the heels of Thomson Reuters, develop new products that address its limitations, or like me, create livelihoods on doing what they could be doing themselves.

Discussion

16 Thoughts on "Reinventing the Impact Factor for the 21st century"

The elephant in the room is that Journal Impact Factor is of no help in evaluating individual articles, individual scientists or fields of study, yet that is precisely what it is most widely used for. If JIF has any value or interest, it is for a specialist audience of publishers and editorial boards (but then only with their ‘publishing competitiveness’ hats on, and then within a discipline)

As everyone in science knows, the problem is that JIF is widely taken as a surrogate index of ‘journal quality’, which it cannot be. This index is then over-extended yet further and deployed to evaluate a given scientist’s work. The many weaknesses and distortions that have accrued in this unwarranted extrapolation process play havoc with real lives and careers as well as distorting research funding and assessment. (see eg my recent article in Physiology News / Winter 2013 / Issue 93 pp12-14, available at http://www.physoc.org)

It’s good that Phil’s piece highlights some of the shortcomings in JIF in its own terms. But the article overlooks the point I make; JIF generates very wide ‘interest’ merely because of the extent of its misuse. Thus any further entanglement of the accurate citation status of individual scientists’ publications with a revised JIF will only encourage this misuse to persist. So, that said, I heartily endorse Phil’s recommendation that Thomson-Reuters abandon JIF, albeit mostly for different reasons.

If the JIF is making good money for TR then I see no reason to abandon it just because some people object to how some other people are using it.

While I agree with your sentiments in general, a few misconceptions here. First, “the elephant in the room” is a phrase that is used to refer to, “an obvious truth that is either being ignored or going unaddressed. The idiomatic expression also applies to an obvious problem or risk no one wants to discuss.” (https://en.wikipedia.org/wiki/Elephant_in_the_room). The problems with the IF are well-known and have been discussed ad nauseam, both on this blog and on hundreds, if not thousands of others.

Second, it is incorrect to suggest that the IF is not meant as a measurement of “journal quality.” That is exactly what it is designed to be. One can argue how well it fulfills that purpose, but that’s what it’s there for. The problems arise because it is used for purposes other than comparing one journal to another, particularly applying it on the wrong level, that of an individual paper or the work of an individual author.

Phil, could the real time service you describe simply be built outside of TR, using WoS data via a contract with TR? Such a startup app approach seems easier than having TR restructure its flagship products. The latter requires some pretty serious business analysis.

Phil, I understand your point about why Web of Science predictions won’t completely accurately match JCR values of impact factors. However, are you able to comment on any specific journals? In particular, the predicted value for mBio seems a surprisingly long way off the official value! thanks –

I just did a Cited Reference Search within the Web of Science for articles citing mBio. It appears that there are many that do not resolve to the article record, meaning that they would count toward the Journal Impact Factor but just not show up in the Web of Science article record. I don’t know whether the Web of Science has a hard time with online article identifiers (e.g. e00325-e10), which mBio uses rather than a print page numbering system, or whether the DOI, which should help Web of Science resolve to the right article, is at fault. Perhaps someone at Thomson Reuters would respond. I have seen high discrepancies for other online-only journals.

They do, in a way. TR does not have a way of supporting continuous publication models, which is too bad given how many journals are published in this way. If a publisher makes no change to a paper other than bundling the already published paper into an “issue,” it should start counting toward the current IF when published. The fact that this is NOT happening is unfortunate. They “count” those citations but hold them until they have an issue date. I would love to see what happens when you have one issue at the end of the year.

As a long-time WoS user, I’ll reply. When you look at the set of cited refs, you are not only seeing some of the original metadata in the reference, but you are also seeing the results of the cited ref linking that takes place in the WoS data processing. A cited ref that is linked (whether by DOI or traditional bibliographic data) will show reference metadata created from the WoS-indexed source item. The unlinked references show you something closer to the original – in fact – you should see a pretty complete set of original data in the expanded ref view.

The fact is that, for all their limitations in the electronic environment, traditional citation formats are very familiar, pretty standardized and, while sometimes wrong in details, not often wrong in completeness: author, source title, volume, page, year. With e-only journals, it is still the case that citing authors don’t necessarily cite effectively. It’s not uncommon to see just Author name, journal title (maybe) and year. That’s not enough to make a definitive article match – but it would often be enough to identify the journal.

There are ways to get pretty darn close, or even – with some luck – spot-on to a JIF prediction using WoS. It’s gotten much, much easier in recent iterations of WoS, but you need patience, some familiarity with the data-structure of WoS and JCR, and good timing.

***

The larger issue seems to turn on the basic difference between a journal metric and a sum-of-articles metric. Both are possible – both are published in various ways by Thomson Reuters – but they are different.

WoS (and any resource that links citations to sources) will let you sum the citations to any segment of content – within or between journals. You can calculate the “impact factor” of any set of results. WoS citation report feature actually makes that pretty easy. Define a self-assembled journal as any set of results – consider only those that were published in 2012 or 2011 – and sum the year 2013 citations to that group – you can even use Citation Report in WoS so you don’t have to work too hard. It’s an interesting exercise and I would really encourage you to try it – try using just the Editorials about a newsworthy topic…and compare controversial subjects.

JCR takes a very particular view of the journal as a whole thing, with a name. A pile of feathers and bones and feet is not a duck, and doesn’t quack. How much of an “impact” did mBio have among things written in 2013? Count the number of times a thing named mBio was cited by scholars publishing in 2013 and scale that roughly according to how much scholarly “stuff” was published under that name. That’s the rough definition of JIF – not average cites, but the ratio between the citations to a journal name and the size of the journal.

For good or ill, a journal is more than a site that lists articles – or arXiv is a journal. A journal is a history and a reputation – in effect, a brand name – a coded notion implying rigorous standards, careful management and a particular mission for the content.

By all means, use article metrics for articles – and for groups of articles, however you want to define that group. Use author metrics for authors. Use journal metrics for journals. It’s not that any of these are inherently “better” – but that they are designed to measure different things.

Thank you for this description. My organization does not pay for access to WoS due to the expense so I have no idea what anything looks like in the system or what is or is not included. It is our authors that tell us when things are wonky.

There is the San Francisco Declaration on Research Assessment (DORA) of course, as you briefly mention. A publisher or society can look to define and promote other ways of assessing quality and impact of scientific research. Publishers can’t ignore the realities of the role IF plays in a scientist’s career, yet we can influence perceptions of quality beyond the IF.

Nice article – lots of interesting ideas to consider. My only disagreement is with your suggestion that citable items should be anything that includes citations itself; often editorial matter (including editorials) cites things (though sometimes parenthetically, so it may not be so obvious), meaning that basically everything would become citable. The immediate impact of that, barring substantial changes to the use of the IF (or its complete demise), would be that publishers would stop publishing all editorial content, which I think would be disappointing. Disclaimer: I work at a scientific publishing house.

Phil,

Thank you for sharing your thoughts and ideas around the journal impact factor and the Web of Science. I found your piece to be insightful and am grateful for the opportunity to address the key issues it raised.

I think you will be pleased with our recent work to advance user experience and workflow by integrating the Journal Citation Reports into the newly enhanced InCites research analytics platform, which directly connects to the Web of Science. This will allow users to easily go beyond the journal impact factor by drilling down to the article level with a seamless connection to the Web of Science for transparent source information and historical data.

In 2013 we embarked on a major redesign of our analytical and discovery capabilities with a focus on supporting the most granular level analysis at the document level directly from the Web of Science. The integration of the Journal Citation Reports into InCites provides users with access to real-time performance measures and visualization-based reporting capabilities. For example, users will be able to create side-by-side comparisons that illustrate the performance of two journals or to analyze a citation network.

Within InCites we provide article level citation metrics based on field normalization. These are then used to aggregate at the document type, journal, categorization, institution, country, or person level and link directly into the articles in Web of Science. We perform offline validation and processing of the data to account for variations in representations of entities and to support additional field categorization of the articles such as those used in global regions (Brazil, China, UK, OECD, Italy, to name a few) and policy or geopolitical aggregations (AAU organizations, EU nations). This level of normalization and aggregation allows the user to create like-for-like comparisons and to benchmark entities based on similar attributes.

Our goal in continuing to provide the Journal Citation Reports (both integrated into and independent of Web of Science and InCites) is to offer a comprehensive, systematic, objective way to critically evaluate the world’s leading journals, with quantifiable, statistical information on the journals and extensive citation data for building reports and analyses. This annual data view complements the more frequently updated article level analyses now available via InCites.

Essential Science Indicators has also been integrated into the InCites platform, allowing for the exploration of journals and highly cited articles all in one interoperable environment.

You can already see evidence of the value of this integration with the information overlay that brings key journal information, including impact indicators, into the Web of Science.

To your point on citation errors, we are continually working with publishers and authors to ensure they are providing the highest degree of citation accuracy to citable items. At the same time, we recognize the need for separate measurements of journal-level citation activity, e.g. how a citation to a journal title and a year, with no additional metadata, would never link to a specific item in Web of Science, but would still be counted in the Journal Citation Reports. Since the citation aggregation for the Journal Citation Reports is more inclusive, it accounts for such broad acknowledgement of a journal title.

The Web of Science is updated weekly with the capability for article-level, real-time statistics and reports. With its direct connection to InCites—which refreshes its data bimonthly, processing directly from Web of Science—there are greater opportunities to use it for journal, article, and people level analysis and evaluation, with access to the underlying data.

We continually look to make our processes and information as effective as possible. I can provide updates to you as enhancements or changes are implemented.

Again, Phil, I thank you for your piece and for facilitating this discussion. I look forward to learning more about your thoughts as we advance in implementing these and other changes to the Web of Science and InCites.

Chris Burghardt

Thomson Reuters

The missed citations are a major concern. Your site allows only 8 corrections per day. For any given journal these missed citations could be as high as 10%. Take for example Angew Chem. with a JIF of 13.734. According to JCR, 3654 articles received 50185 citations. On the other hand, WoS citations indicate only 45167 citations. How do you explain the discrepancy of 10% of missing citations in WoS, or is there a separate counting method?

Anytime an author misquotes a journal, author name, page number etc., WoS does not link the citation with the original record. Correcting missed citations rightfully increases h-index, citations etc.

For those of you interested in correcting your own missed citations in WoS, please refer to the step-by-step method presented in the supporting information of the article Cite with a Sight http://dx.doi.org/10.1021/jz500430j WoS accepts 8 corrections per day, per email, per IP address.