Over the past few weeks, I’ve been involved in a number of discussions about the role of alternative metrics in research evaluation. Among them, I moderated a session at SSP on the evaluation gap, took part in the short course on journal metrics prior to the CSE conference in Philadelphia, and moderated a webinar on the subject. These experiences have taught me a lot about both the promise of altmetrics and the challenges surrounding them, and about how they fit into the broader research metrics challenge that funders and institutions face today. In particular, I’ve become much more aware of the field of informetrics, the academic discipline that supports research metrics, and have begun to think that we, as scholarly communication professionals and innovators, have been neglecting a valuable source of information and guidance.

It seems that broadly, everybody agrees that the Impact Factor is a poor way to measure research quality. The most important objection is that it is designed to measure the academic impact of journals, and is therefore only a rough proxy for the quality of the research those journals publish. As a result, article-level metrics are becoming increasingly common and are supported by Web of Science, Scopus and Google Scholar. There are also a number of alternative ways to measure the citation impact of researchers themselves. In 2005, Jorge Hirsch, a physicist from UCSD, proposed the h-index, which is intended to be a direct measure of a researcher’s academic impact through citations: a researcher has an index of h if h of their papers have each been cited at least h times. There is also a range of alternatives and refinements with names like m-index, c-index and s-index, each with its own particular spin on how best to calculate individual contribution.
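To make the h-index definition concrete, here is a minimal Python sketch. It assumes a researcher’s record is simply a list of per-paper citation counts; `h_index` and `m_quotient` are hypothetical helper names, not part of any real bibliometrics tool. The m-index is read here as Hirsch’s m quotient (the h-index divided by the number of years since the researcher’s first publication); other variants are defined differently, so treat this as one illustrative reading rather than the definitive formula.

```python
from __future__ import annotations


def h_index(citations: list[int]) -> int:
    """Largest h such that h papers have at least h citations each."""
    ranked = sorted(citations, reverse=True)  # most-cited paper first
    h = 0
    for rank, count in enumerate(ranked, start=1):
        if count >= rank:
            h = rank  # the top `rank` papers all have >= rank citations
        else:
            break  # counts only decrease from here, so h cannot grow
    return h


def m_quotient(citations: list[int], career_years: int) -> float:
    """h-index divided by years since first publication (one reading of the m-index)."""
    if career_years <= 0:
        raise ValueError("career_years must be positive")
    return h_index(citations) / career_years


if __name__ == "__main__":
    record = [10, 8, 5, 4, 3]  # hypothetical per-paper citation counts
    print(h_index(record))        # 4
    print(m_quotient(record, 8))  # 0.5
```

In this example, four papers have at least four citations each but there are not five with at least five, so h is 4; over a hypothetical eight-year career that gives an m quotient of 0.5. Note that the most-cited paper counts for no more than the fourth, which is a deliberate property of the h-index.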

While the h-index and similar metrics are good attempts to tackle the problem of the Impact Factor being only a proxy measure of research quality, they can’t speak to a problem that has been identified over the last few years and is becoming known as the Evaluation Gap.

Defining the Evaluation Gap

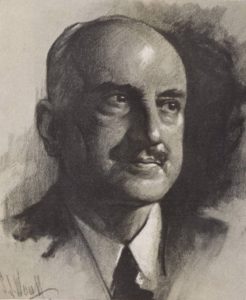

The Evaluation Gap is a concept that was introduced in a 2014 post by Paul Wouters on the Citation Culture blog, which he co-authors with Sarah de Rijcke (both are scholars at the University of Leiden). Prof. Wouters sums up the idea of the gap as:

…the emergence of a more fundamental gap between on the one hand the dominant criteria in scientific quality control (in peer review as well as in metrics approaches), and on the other hand the new roles of research in society.

In other words, research plays many different roles in society. Medical research, for example, can lead to new treatments and better outcomes for patients. Work that leads to patents or the formation of new companies has clear economic impacts. Add to that legislative, policy and best-practice impact, as well as education and public engagement, and it becomes clear just how many ways research and society interact. Peer review of scholarly content and citation counts are a good way to understand the impact of research on the advancement of knowledge within the academy, but a poor representation of the ways in which research informs activities outside the ivory tower.

Goodhart’s Law: When a measure becomes a target, it ceases to be a good measure

In April of this year, the Leiden manifesto, written by Diana Hicks and Paul Wouters, was published in Nature. There has been surprisingly little discussion of it in publishing circles; it certainly seems to have generated less buzz than the now iconic altmetrics manifesto that Jason Priem et al. published in 2010. As Cassidy Sugimoto (@csugimoto) pointed out in the session I moderated at SSP, the Leiden manifesto serves as a note of caution.

Hicks and Wouters point out that the obsession with the Impact Factor is a relatively new phenomenon: the number of academic articles with the words ‘impact factor’ in the title has risen steadily from almost none to around 8 per 100,000 a few years ago. The misuse of this simple and rather crude metric to inform decisions it was never intended to inform has distorted the academic landscape by over-incentivizing the authorship of high-impact articles and discounting other valuable contributions to knowledge, as well as giving rise to more sinister forms of gaming such as citation cartels, citation stacking and excessive self-citation. In many ways, citation counting and altmetrics share the same risks. Both are susceptible to gaming and, as Hicks and Wouters put it…

…assessors must not be tempted to cede the decision-making to the numbers

Is history repeating itself?

Eugene Garfield is the founder of ISI and an important figure in bibliometrics. In his original 1955 article, he makes an argument uncannily similar to the one Jason Priem made in the altmetrics manifesto (emphasis my own):

It is too much to expect a research worker to spend an inordinate amount of time searching for the bibliographic descendants of antecedent papers.

As the volume of academic literature explodes, scholars rely on filters to select the most relevant and significant sources from the rest. Unfortunately, scholarship’s three main filters for importance are failing.

In the case of both citation tracking and altmetrics, the original problem was one of discovery in the face of information overload, but people inevitably start to look at anything that can be counted as a way to automate more of the assessment process. How do we stop altmetrics heading down the same path as the Impact Factor and distorting the process of research?

Engagement exists on a spectrum. While some online mentions, such as tweets, are superficial, requiring little effort to produce and conveying only the most basic commentary, others are of very high value. For example, a medical article that is cited in up-to-date.com would not contribute to traditional citation counts but would inform the practice of countless physicians. What is important is context. To reach their full potential, altmetrics solutions and processes must not rely purely on scores, but must place sufficient weight on qualitative, context-based assessment.

The Research Excellence Framework (REF) is a good example of how some assessors are thinking positively about this issue. The REF is an assessment of higher education institutions across the UK, the results of which are used to allocate a government block grant that makes up approximately 15 to 20% of university funding. The framework currently contains no metrics of any kind and, according to Stephen Hill of HEFCE, assessment panels are specifically told not to use the Impact Factor as a proxy for research quality. Instead, institutions submit written impact statements and are assessed on a broad range of criteria, including their formal academic contributions, the economic impact of their work, their influence on government policy, their public outreach efforts and their contribution to training the next generation of academics. HEFCE are treading carefully when it comes to metrics and are consulting with informetricians about how to incorporate metrics properly without distorting researcher behavior. Unfortunately, as Jonathan Adams, chief scientist at Digital Science, notes, some researchers are already seeing evidence that the REF is affecting researcher behavior.

The importance of learning from the experts

I’ve only touched very lightly on some of the issues facing altmetrics and informetrics. When I’ve spoken to people who work in the field, I get the impression they feel that not enough information flows from the discipline into the debate about the future of scholarly communication, which creates a risk that new efforts will suffer the same pitfalls as previous endeavors.

As a result, many in the field have been trying very hard to be heard by those of us working at the cutting edge of publishing innovation. The Leiden manifesto (which has been translated into an excellent, easy-to-understand video) and earlier documents like the San Francisco Declaration on Research Assessment (DORA), which is also available as a poster, are examples of these outreach efforts. These resources are more than opinion pieces; they are attempts to summarize state-of-the-art thinking in the discipline and to make it easier for publishers, librarians, funders and technologists to learn about it.

Funders and institutions clearly feel that they need to improve the way research is assessed, for the good of society and the advancement of human knowledge. Much of the criticism of altmetrics focuses on problems that traditional bibliometrics also suffer from: over-metricization, the use of a score as an intellectual shortcut, the lack of subject normalization and the risk of gaming. At the same time, people working in the field of informetrics have good ideas for addressing these issues. Publishers have a role to play in all of this by supporting the continued development of tools that enable better assessment.
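Subject normalization addresses the fact that typical citation rates differ enormously between fields. As a rough illustration, here is a minimal Python sketch that assumes each paper is tagged with a single field and that normalizing means dividing a paper’s citations by the mean citation count of all papers in the same field. The function name and sample data are hypothetical, and established field-normalized indicators typically also account for publication year and document type, which this toy version omits.

```python
from __future__ import annotations

from collections import defaultdict
from statistics import mean


def field_normalized_scores(papers: list[tuple[str, str, int]]) -> dict[str, float]:
    """Divide each paper's citations by the mean citation count of its field.

    `papers` holds (paper_id, field, citations) tuples. A score of 1.0 means
    "cited exactly as often as the average paper in the same field".
    """
    counts_by_field: dict[str, list[int]] = defaultdict(list)
    for _, field, cites in papers:
        counts_by_field[field].append(cites)

    field_mean = {field: mean(counts) for field, counts in counts_by_field.items()}

    scores: dict[str, float] = {}
    for paper_id, field, cites in papers:
        baseline = field_mean[field]
        # If nobody in the field is cited, there is no meaningful baseline.
        scores[paper_id] = cites / baseline if baseline > 0 else 0.0
    return scores


if __name__ == "__main__":
    sample = [  # hypothetical data
        ("a", "mathematics", 4),
        ("b", "mathematics", 2),
        ("c", "cell biology", 40),
        ("d", "cell biology", 20),
    ]
    for paper_id, score in field_normalized_scores(sample).items():
        print(paper_id, round(score, 2))  # a 1.33, b 0.67, c 1.33, d 0.67
```

Here a mathematics paper with 4 citations and a cell biology paper with 40 citations receive the same score, because each is cited about a third more often than the average paper in its own field; a raw count would rank the second ten times higher.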

Instead of treating criticisms of altmetrics as arguments against updating how we assess research, let’s think of them as helpful guidance on how to improve the situation further. Altmetrics as they stand today are not a panacea, and there is still work to be done. Now that we have the power of the web at our disposal, however, it should be possible, with some thought and by learning from those who study informetrics, to continue working towards a more complete and more useful system of research assessment.

Discussion

14 Thoughts on "Altmetrics and Research Assessment: How Not to Let History Repeat Itself"

Your opening statement, “It seems that broadly, everybody agrees that the Impact Factor is a poor way to measure research quality”, does not seem to hold water with the research community! On what do you base this statement?

I hope it is not your short course!

I was in STEM publishing for over 40 years, and the pride most of my authors and editors felt when their papers were accepted by, or their journals were considered to be, the highest-ranking journal in their field was evident to all with whom they communicated.

I do not think IF is the only measure or tool, but I would like to see a grouping that has fewer perceived failings than the IF.

Thanks Harvey.

From my personal experience, that of my friends and colleagues, and talking to many researchers, I can report that people spend an awful lot of time thinking about the Impact Factor because they perceive it to be critically important for their career advancement. Under those conditions, of course they’re happy when they get a high-impact paper. That doesn’t mean they think it’s fair to use the IFs of the journals they publish in as a proxy for their research performance.

I think that in our industry, it’s easy to ask researchers what they need and come away with the wrong impression, because many researchers feel locked into existing research metrics and are also (quite rightly) too focused on the research itself to think about how scholarly communication should evolve. A few weeks ago, I wrote a post on my own blog about what you might hear when talking to a researcher over a beer.

There is indeed a lot of R&D going on in this area. Those interested might subscribe to the SIGMETRICS listserv at http://mail.asis.org/mailman/listinfo/sigmetrics. Gene Garfield frequently posts pointers to important articles and we sometimes have heated discussions, although it is not primarily a discussion list.

Then there is what I think of as the deep research problem with altmetrics. This is that societal impact can be either positive or negative, so how do we make that distinction? For example, cold fusion got a lot of attention but it was a bust. This is where semantic analysis comes in. Another interesting issue is identifying bias, especially something I call paradigm protection, which can happen at the journal level. I am presently working on this one.

Good points, David, thanks.

It’s worth noting that citation counting suffers the same problem of negative impact. Andrew Wakefield’s fraudulent article linking the MMR vaccine to autism has been cited almost 800 times and that’s bad science by any and all measures.

Yes and no, Phil. My conjecture is that altmetrics pick up controversy to a much greater extent than citations do. Negative citations are pretty rare, Wakefield notwithstanding, while negative blogs, news and tweets are common. There may well be more negative blogs than positive ones, as it were. This is another good research topic. Mind you, this is not insurmountable, just a grand challenge.

It would be difficult to test that. The challenge with academic citations is that they’re rarely overtly negative in the way that blog posts and tweets can be. It’s not good academic discourse to say that somebody is flat wrong, but academics will often point out when observations are inconsistent and offer explanations of differences in findings that are really criticisms of another researcher’s methodology or interpretation. I wouldn’t say that such citations are rare. You sometimes have to be pretty hip-deep in a field to recognize when a citation is actually negative. In neuroscience, at least, most articles have one or two of these in the discussion section. Negative citations are more common in review articles, just as an aside.

On top of that, there are a lot of neutral citations, where a researcher cites a paper simply because they were told to by their supervisor, the editor or, more commonly, the reviewers; sometimes these are papers the author hasn’t even read. The interesting thing to me is that because citations have become currency, we’ve developed a set of rules about what you should cite, rather than citing the reference that would be most useful to the reader. Some people don’t follow the rules, making the whole thing inconsistent. Then there are coercive citations, citation cartels among journal editors and quid pro quo citations among researchers, as well as a whole host of funny reasons why people cite what they cite.

The meaning of any type of mention, whether an academic citation, a news article, a blog post or a tweet, seems quite variable. That’s quite a challenge, as you say, but I think scientometricians and informetricians might say the solution is to use multiple measures as a way to smooth out the noise.

As a scientometrician, I think it is important to know what we are measuring. I have said before that we need a taxonomy of citation types, and I have even done some work in this area. The same is true for altmetrics. As for negative citations, I think if you take a random sample of highly cited articles, you will find that almost all of them are leading papers in their field. This suggests that most of the citations are positive. But it is an empirical question, so mine is just a conjecture. As for negative mentions in altmetrics, I think that problem is well known, because people are working on it. I recall seeing several papers go by that look at positive, neutral and negative tweets.

You said:

As for negative citations, I think if you take a random sample of highly cited articles, you will find that almost all of them are leading papers in their field.

You’re certainly right, but that doesn’t really prove your point. It just shows that the most highly cited articles are generally considered important; in other words, elite articles get cited a lot. It doesn’t tell us whether all articles that are cited a lot, a fair amount, or just once or twice are good, or whether most citations are good, bad or indifferent.

Initially, you wrote that negative citations are ‘rare’; I don’t think that’s true. I agree that the majority of citations are positive, but that’s not the same thing. It would be interesting to know the percentage. As a complete wild guess, I’d put it at between 2% and 5%.

We will have to get clearer about what constitutes a negative citation, in order to do the study. I have been assuming it is one attached to a statement that the cited result is wrong, or the methodology flawed, etc. (We see a lot of blog posts to that effect here at TSK.)

I have also assumed that all citations that are not negative are positive. Why the article is cited is therefore irrelevant; it is just a matter of what is said or implied about it. If there is a third category of citation, which is neither positive nor negative, then we need to define it in order to know how many positive citations there are, but not to know how many negative citations there are.

My wild guess is that the percentage of negative citations, as described above, is a fraction of 1%, perhaps a small fraction, because many papers do not have any. However, if being negative merely means suggesting that the cited result is incomplete or the method could be improved then there is indeed a lot of that, because that is how science works.

Not a simple study, but doable. Scientometrics, or as I call it the science of science, is fun.

This just came in on the Sigmetrics listserv. SIG/MET is another source of metrics R&D info.

“METRICS 2015 – ASIS&T WORKSHOP ON INFORMETRIC AND SCIENTOMETRIC RESEARCH

Workshop sponsored by ASIS&T SIG/MET

ASIS&T 2015 Annual Meeting

Saturday, November 7th, 2015, 9:00am –5:00pm

Hyatt Regency St. Louis at the Arch, US

The ASIS&T Special Interest Group for Metrics (SIG/MET) will host a workshop prior to the ASIS&T Annual Meeting in St. Louis, Missouri. This workshop will provide an opportunity for presentations and in-depth conversations on (alt-) metric-related issues, including the latest theories, approaches, applications, innovations, and tools. The workshop is envisioned as a combination of short presentations, posters and open discussion.

SIG/MET is the Special Interest Group for the measurement of information production and use. It encourages the development and networking of all those interested in the measurement of information and, thus, encompasses not only bibliometrics and scientometrics, but informetrics in a larger sense including measurement of the Web and the Internet, applications running on these platforms, and metrics related to network analysis, visualization, and scholarly communication. Hence, metric research has recently expanded beyond traditional research products – such as articles and citations – to include web-based products, such as blogs, tweets and other social media, so-called altmetrics. These social media are changing some of the ways research is being disseminated and, in turn, influence the field of metrics.

Research on metrics has been growing significantly over the last decades. In addition to accounting for a significant proportion of the literature published in core LIS journals, there is also a large proportion of metrics literature published in general science journals as well as in medical journals.”

With questions, please contact

Isabella Peters (Chair, SIG/MET)

i.peters@zbw.eu

………………………………………………………………………………………………………………………..

PROF. DR. ISABELLA PETERS

Professor of Web Science, CAU Kiel

ZBW – German National Library of Economics

Leibniz Information Centre for Economics

Düsternbrooker Weg 120

24105 Kiel

Germany

T: +49–431–8814–623

M: +49–172–6747771

F: +49–431–8814–520

E: i.peters@zbw.eu

Thanks for the interesting article, Phil. Altmetrics should indeed at least be considered in research assessment, and downloads should be part of this too. Combined with citations, they give an impression of an article’s reach and impact on society and the academic community. And why stop at journal articles? Books, especially in the humanities and social sciences, play a pivotal role in academic communication, and their reach and impact should also be taken into account in research evaluation. This has been one of our motivations for building Bookmetrix.

Regarding the topic of this blog post, I can highly recommend attending the 2:AM conference (AltmetricsConference.com), where we will have two sessions on this topic, one of them organized by the Dutch Research Organisation NWO.

(minor conflict of interest – I am one of the co-organizers)

Thanks for this enlightening article, Phil. Just one comment, for the sake of proper authorship recognition: the Leiden Manifesto was signed by Diana Hicks, Paul Wouters, Ludo Waltman, Sarah de Rijcke & Ismael Rafols.

This is my first time leaving a comment here. I work at a company that provides scholarly journal platforms to Japanese clients. Yes, I should stop criticizing altmetrics. Instead, I think something should be done about search, but I can’t find an answer to how the algorithms for sorting by “Relevance” and “Really Useful” could be improved. I mean this for both search engines and search within journal sites.

This may be similar to user reviews on gourmet sites. When you want to go out for a French dinner but have no idea which restaurant is good, reviews or ratings on such sites (Impact) can help you. In the end you may not enjoy the food there, but it may be a good starting point for finding a “Real” one. For undergraduate students who still have no idea what they should read or cite in their reports, IF can help. “Talk of the town” articles that are easily found via SNS can motivate them. On the other hand, researchers who already have their topics need to search for “Really Useful” articles. But to lead them to a satisfying article, the question my circular argument keeps coming back to is: what can judge whether an article is “Really Useful” or “Valuable to cite”?