Mr. Market, the personification of the marketplace, has been credited with many things. He matches buyers and sellers, strives for an efficient use of capital (and hence of human effort), and spurs various parties to compete with one another, with one outcome being improved goods and services. He has not, however, at least as far as I know, been given credit for one of his more daring cultural enterprises. He is as much Maxwell Perkins as he is the embodiment of free-market theory. Mr. Market is a brilliant editor. When we look for the finest publications, we should pay serious attention to what Mr. Market recommends.

Someone will immediately object, Isn’t the market precisely what got us into this mess in the first place? The burning of fossil fuels, the corruption of governments, economic inequality, and, not incidentally, the inability to get a timely customer service call from your cable provider! And we haven’t even gotten to Elsevier yet! I am no free-markets guy myself, but those who are critical of the marketplace would be advised to consider whether what they object to is a function of marketplace economics or their absence. This point was recently made incisively in an essay by Yale economist William Nordhaus in the New York Review of Books. Nordhaus notes that Pope Francis has it all wrong, that what the Pope pins on markets is in fact an indictment of interference in markets. I think there is little likelihood that this amiable Pope will become an economist or a Presbyterian, but if he continues to argue against modern economics, it will not be out of compassion but ignorance.

My personal discovery of Mr. Market’s editorial genius came about while researching altmetrics. And here I want to thank my fellow Kitchen Chef Phill Jones (he of two ells) for helping me navigate a rich, complex, and growing field. For those who are still not comfortable with the various forms altmetrics can take and their implications, I heartily recommend a white paper by Richard Cave, which is as good an overview as you are likely to find. My own view of altmetrics appeared on the Kitchen a while ago. My conclusion: baseball does it better.

Altmetrics have come about because just about everybody is in full-throated agreement that Journal Impact Factor (JIF), the metric of yesteryear, is a crock (“In the light of ever more devious ruses of editors, the JIF indicator has now lost most of its credibility”). JIF has been derided because editors have learned to game the system, because it fails to include some journals, discriminates against new journals, focuses on journals instead of articles, was slow to pick up on the growing body of open access literature, is calculated over a short and arbitrary period of time, and takes the broad and complex matter of “impact” and tosses out such things as how many people read an article or how it has been shared across the wider scholarly community — and, for that matter, by interested laypeople. Impact factor, in other words, has been dismissed as virtually fraudulent by everyone except those who make decisions.

As feckless as the altmetrics movement has been to date (has anyone yet gotten tenure on the basis of their number of Twitter followers?), it is hard to argue with its underlying motivation and that is to broaden the measurement of scientific literature. Altmetrics, in other words, seeks to be an über-metric, not something dismissive of traditional notions of impact but rather a method of incorporating a large suite of various measurements all properly weighted.

I wish the altmetrics crowd the very best, but in the meantime it has to be said that such an über-metric already exists. It is called sales and its avatar is Mr. Market. How much is something worth? Well, let’s see. Let’s add up the number of citations to the number of Tweets, Facebook Likes, blog posts, downloads, Web page views — everything. We then observe that the world of scholarly communications, like it or not, operates in the marketplace where individuals and institutions make mostly rational decisions about how to allocate their capital. Do I purchase this journal or article or the other one? Mr. Market makes that decision, incorporating all metrics, and points readers to the literature of highest quality. To insist that Mr. Market is not a good editor is to argue that librarians are stupid and that the individuals who subscribe to publications directly don’t know what they are doing.

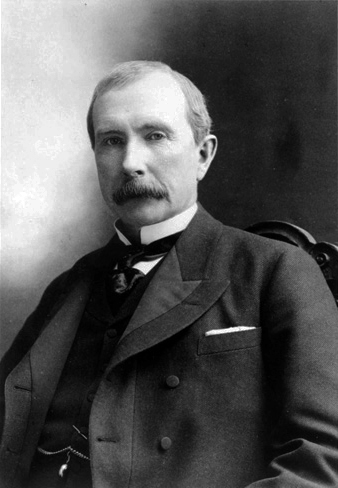

The problem that Mr. Market currently is having in doing his job is that no publisher wants the marketplace to work; they want to be able to control the marketplace, and in many instances they do. (With reference to the marketplace, John D. Rockefeller railed against “ruinous competition,” and he would know.) The Big Deal, for all its many virtues (lower cost per article, lower cost per download, reduced administrative costs, etc.) was conceived and is supported by the largest publishers not because it is a natural expression of the marketplace but of its corruption. Please go back and read that Nordhaus article again, and when you finish with that, take a look at Paul Krugman’s review of Robert Reich’s Saving Capitalism, which points to the problem of a lack of enforcement of anti-trust law. Librarians are not suffering because of Mr. Market but with him.

So let’s hear it for Mr. Market. He really deserves our support, at least if identifying the finest materials is our goal. And rather than work to find “alternatives” to the marketplace, perhaps we would do better to ensure that the marketplace is given the room to operate with its own natural rhythms.

Discussion

12 Thoughts on "Mr. Market is a Brilliant Editor"

The marketplace will tell you that articles on cold fusion and vaccinations causing autism were wildly popular, but not that they were bad science. It will tell you that “The Bell Curve” by Herrnstein and Murray sold oodles of copies, but would not tell you it was highly criticized by scholars. I can cite any number of examples of books published by the university presses at Princeton and Penn State that won major awards from scholarly associations but did not sell well. If one were to judge by sales alone, then Harry Frankfurt’s little book “On Bullshit” (revised from a journal article) must have been one of the most important books Princeton ever published. Sales are not insignificant, of course, but they only tell you part of the story in scholarly publishing.

I am not sure that there is full agreement that the journal Impact Factor is a crock, given that it is still an extremely important criterion in the sciences for hiring, tenure and promotion, and of all things funding by NIH, NSF and other funding organizations. Every year when the new Impact Factor numbers come out I receive countless PR messages from every major publisher sharing the good news that their JIF has increased. I know a number of STM publishers that have changed editors or directors of publication due to a drop in Impact Factor for even a few of their journals. Aggregators often pay a higher royalty for titles that have a high Impact Factor. So while some communities may believe that the Impact Factor is declining in importance and influence, there is still a very large community that places a high value on it.

The extortion demanded by journal publishers who have established large monopolies goes on unchecked. Anti-trust lawsuits never get filed.

Librarians need to band together to effect bulk purchasing consortia. Bulk purchasing drove down the price of pharmaceutical drugs in New Zealand.

If all the university librarians in Canada negotiated academic journal subscriptions as a unified force, the extortion payouts would stop or go way down.

The Netherlands stood up and negotiated lower subscription prices. Courage comes easier if a countrywide bloc of university libraries negotiates together.

Marketplace indicators are in fact a type of altmetrics: some altmetrics tools already include Amazon reviews, number of libraries holding books (via Worldcat), and other “do people own this item”-related data.

One of the biggest misunderstandings of altmetrics is that the field only looks to social media for potential impacts. It’s much more complex and diverse than that.

On a related note, this made me wince: “As feckless as the altmetrics movement has been to date (has anyone yet gotten tenure on the basis of their number of Twitter followers?)” Those sorts of casual, inaccurate characterizations of altmetrics are what lead to others’ misunderstandings of the field in general. You put it so correctly in the next sentence (“not something dismissive of traditional notions of impact but rather a method of incorporating a large suite of various measurements all properly weighted”) that I’m surprised you would characterize the field in that way.

And anyway, I’d much rather live in a world where number of Twitter followers (as a proxy for one’s outreach prowess) is just one of many types of data considered in a tenure case than where we’re at (for the most part) now: tenure cases that consider a small number of decontextualized, sometimes inaccurate (in the case of JIF) metrics that tell us nothing of the impacts of research beyond the ivory tower.

I’m not with you on this, Stacy. First, I think you should identify yourself as being affiliated with Altmetric.com. Second, I don’t think the issue is that you don’t agree with me but that you don’t understand what I wrote. To which I say: read more slowly. To repeat my point: the marketplace is a meta-metric; it encompasses all others. As such it weights JIF, Twitter engagement, etc. and puts them into one larger measure. Nothing wrong with altmetrics, and nothing wrong with JIF. They simply are not the whole story. What troubles me is that the marketplace-as-metric is being ignored, and that is surely wrong.

Definitely not trying to hide my affiliation; apologies that I left it out. I do not respond on behalf of Altmetric, however–I’m responding as myself, a librarian with a long interest in research impact metrics and their applications.

I understand your larger argument perfectly well. To that end, I agree completely with Sandy: just because something has been deemed valuable by the Market does *not* mean it’s of high quality, which is why your argument that ‘the Market serves as a useful uber-metric’ is flawed. Your point about adding up all other available metrics…well, I had to fill in some of the gaps there, as I assume that what you meant by that point is that when academics “allocate their capital”, they’re allocating their attention in the form of cites, tweets, blogs, etc. But–again–the research with the most number of metrics doesn’t always equal the highest quality research. And that which is purchased the most does not always equal the most valuable research, either.

Sorry, Stacy, but you are just wrong about this. In fact your argument undermines the entire altmetrics point of view. Nothing sells that is not of superior quality. The question is what is the nature of that quality. A citation has one kind of quality, a Tweet another. A book or article could be dead wrong but have yet another kind of quality (e.g., it appeals to our yearnings). This is what altmetrics is about, different measures for different things. If you are only measuring scholarly quality, why muck around with Pins and tweets? Altmetrics is a post-modern concept, and once you enter that world, you have to be prepared to find the ground shift beneath your feet.

Joe, I’ve worked with altmetrics for a number of years (and am even the only person to work for more than one altmetrics service)–I know the aims of our field. Please spare me the lecture.

It seems that this discussion is coming down to semantics. When you say “quality”, what most people hear is “scientific soundness of research”; that’s why no one tends to argue that any research impact metrics measure quality.

What they do measure, however, is commonly thought of as “influence” or “impact”–of which there can be many types of flavors, as Heather Piwowar so colorfully illustrated a few years back on her blog. Different measures for different types of things, indeed.

Whatever you call it, we seem to agree that altmetrics is much better at helping us to understand the diverse “impacts” or “qualities” of research than any one metric in isolation ever could. Where we disagree is that one single metric–the Market, in this case–is superior in identifying the “impacts” or “qualities” of scholarship overall. It is but one genre of indicator of many that we need to understand holistically the value of scholarship. And under the larger “Market” umbrella, there live distinct market indicators (many of which also reside under the “altmetrics” umbrella) that give us insights into the nuances of the “qualities” (as you call them) of scholarship (for example, something that’s got a lot of reviews on Amazon may not have the same qualities as something that’s owned by a lot of libraries).

Now, we can argue all day about what is an altmetric and what is not; there’s no “right” definition, and the meaning I tend to work from is that an altmetric is anything that we can source from the social web to give us insights into the value of scholarship (distinct from citations and usage statistics). That would include many market-related metrics and data, in my book. If you don’t abide by that definition, well, then we’ll just have to agree to disagree.

The point of a market metric is (a) that it is being ignored and (b) that it is a meta-metric, unlike other metrics (JIF, altmetrics, etc.). A market-related metric is not simply another measure. It measures what people are willing to pay for and thus necessarily must incorporate all the other measures, duly weighted.

“Nothing sells that is not of superior quality”? Wow, talk about a sweeping generalization! Does that mean that “Fifty Shades of Grey” is superior in quality to, say, “Moby Dick,” which had almost no sales right out of the gate? As Stacy says, you must be re-defining “quality” here in a way that does not address the central concerns of scholarship. I would venture, instead, another generalization: there is no one-to-one correlation between sales and merit in academic scholarship. You can as easily find examples of low sales and high quality (as measured by academic prizes, strong book reviews, citations by future researchers, etc.) as you can high sales and low quality, and low sales and low quality, and high sales and high quality. The market is one guide to assessing quality but hardly the mega-measure you make it out to be, Joe.

I understood you to be arguing that sales function as a kind of uber-metric incorporating all sorts of other metrics conveniently into one as a way of identifying quality. Is that not what you mean by saying “When we look for the finest publications, we should pay serious attention to what Mr. Market recommends”? If that is not what you mean, then please enlighten me. Others commenting here seem to be similarly confused if that is not your meaning.