While predicting the future is fraught with peril, there seems to be a fairly clear consensus about where the research community would like to see things go. Our efforts these days are focused on broadening access to the research literature and research results, and toward improving their quality, through better transparency and reproducibility. Academia is a notoriously conservative community though, and has been slower to move in these directions than some would prefer. Rather than waiting decades for consensus, many research funders, governments, publishers and institutions have chosen instead to push things forward through policies that impose requirements on researchers.

All of these policies come with a cost, and one of the biggest costs comes in monitoring and enforcing compliance. A policy without teeth, without actual consequences for non-compliance, loses all effectiveness. We know that researchers are overburdened and short on time. Anything that they don’t have to do, they won’t do. When the NIH’s PubMed Central deposit policy was not actively enforced, compliance was poor. Now that it has been tied to grant renewal and receiving future grants, compliance has improved (although it is still not 100% and is still heavily reliant upon the efforts of publishers on behalf of authors). MIT continues to struggle with public access and archiving policy compliance, as does Oregon State University.

Understanding the cost of compliance is vital to the effective design of the policy itself. The RCUK has a complex open access policy, with complicated reporting requirements. This complexity and the costs it generated were not predicted by policy makers, and as a result, universities have been hit hard financially, and more money than was expected is being spent on paperwork and bureaucracy.

The NIH’s policy, in comparison, seems vastly simpler, at least in terms of the compliance burden. There are no required institutional reports to compile, and no spending records to track. Researchers are simply required to note the PMC ID on any paper they list as having resulted from NIH funding when they write up progress reports or apply for further funds. Even still, there are costs with NIH compliance. Aside from the costs of the PMC repository itself, a system that generates and keeps track of PMC ID numbers had to be built, and must be maintained to ensure that they perpetually resolve to the right paper. Furthermore, when an applicant lists such a number, someone somewhere must actually check that it is an accurate number that corresponds with an actual paper. Quantity becomes a factor as well. The NIH is dealing with more than 50,000 applications per year and greater than 60,000 ongoing grants. That’s a lot of numbers to check. Universities also spend varying amounts to track their researchers and make sure they are following the rules.

For journals, policies can range from the simple to the complex. The recently announced policies requiring ORCID iDs for article submissions are fairly inexpensive and straightforward, at least as far as compliance goes. More expense comes from the integration of the iD with the paper and the journal platform, but that’s a separate cost from tracking compliance. A required field asking for the author’s ORCID iD is added to the journal’s paper submission system. The researcher cannot proceed further with their submission until this field is filled, so no manual intervention is needed by the journal. Presumably the iD number is checked at some point before publication for accuracy, although this could be done at the copyediting stage for accepted articles, reducing the number of checks needed by only dealing with the smaller group of submissions that made it through peer review. Costs might be higher for a policy signatory journal like PLOS ONE should they choose to monitor compliance in this manner, given the large number of papers accepted and the absence of a copyediting stage. Adding in a time-consuming additional step to the production workflow can be expensive, both in terms of paying for labor and in potential delays in publication.
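To give a sense of how cheap the automated part of such an accuracy check can be, here is a minimal Python sketch. It relies on the fact that the final character of an ORCID iD is a check digit computed with the ISO 7064 MOD 11-2 scheme. The function names are hypothetical and are not taken from any journal's submission system. A check like this only catches mistyped iDs; it cannot tell you whether a well-formed iD actually belongs to the submitting author.

```python
import re

# Four groups of four characters; only the last character may be "X".
ORCID_PATTERN = re.compile(r"[0-9]{4}-[0-9]{4}-[0-9]{4}-[0-9]{3}[0-9X]")


def orcid_check_digit(base_digits: str) -> str:
    """Compute the ISO 7064 MOD 11-2 check character for the first 15 digits."""
    total = 0
    for ch in base_digits:
        total = (total + int(ch)) * 2
    result = (12 - total % 11) % 11
    return "X" if result == 10 else str(result)


def is_plausible_orcid(orcid: str) -> bool:
    """Return True if the iD is well formed and its check digit matches.

    Hypothetical helper: it does not confirm that the iD is registered
    or that it belongs to the person who typed it.
    """
    orcid = orcid.strip().upper()
    if not ORCID_PATTERN.fullmatch(orcid):
        return False
    digits = orcid.replace("-", "")
    return orcid_check_digit(digits[:15]) == digits[15]
```

A submission form could call `is_plausible_orcid` on the required field and reject obvious typos before a human ever sees the manuscript, leaving any manual verification for accepted papers only.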

PLOS, however, seems to have taken something of a hands-off approach toward enforcing their data publication policy. The publisher made a big splash with the 2014 implementation of a policy requiring authors to make all data behind their articles publicly available. Later that year, Scholarly Kitchen alumnus Tim Vines and colleagues did several studies to see how well authors were complying, and found that many were not making the required data publicly available. PLOS’ response was that the responsibility for checking the availability of data had been passed along to the external peer reviewers, with seemingly little, if any, oversight happening at PLOS itself. Not surprisingly, a requirement without any enforcement was not taken seriously by authors.

In recent months, this lack of policy enforcement came to a head when researchers asked to see the data behind a controversial PLOS ONE paper on carcinogens in laboratory animal food. Despite repeated requests and promises that action was being taken, the data has still not been made publicly available and the paper remains in the journal with no indication of this apparent violation of a mandatory policy. (A similar issue has arisen with a different PLOS ONE paper, but one that was published before the data policy went into effect.)

With no enforcement, is data archiving really a requirement or just a gentle suggestion? Without effective monitoring and enforcement, the policy becomes an empty promise. But how would a journal go about enforcing such a policy? One of the pioneers in data publication, GigaScience, requires authors to deposit their data in the journal’s own database, and assigns a peer reviewer to specifically review that data. No data, no paper.

But GigaScience has the advantage of being associated with a major genomics company, BGI, which provides subsidized server space for this repository. That may not be feasible for journals without a similar partner. Still, there are reputable repositories that could serve a curation and monitoring function here. Most journals won’t publish a DNA sequence without an accession number from GenBank or the like, proof that the sequence has been made publicly available in an established repository. Similar arrangements could be built into the article publication process for the entire data set via reputable public repositories.

This would only provide an indication that some data was available, and careful review would still be needed to determine whether it was complete and usable. Asking a peer reviewer to do this work may have variable results. GigaScience reports an enthusiastic response from their data reviewers who enjoyed the novelty of the requested task. Different fields may react differently though, and once the process becomes routine, finding a data reviewer may become even more difficult than finding a reviewer for the paper itself, an increasingly onerous task for journal editors.

And that doesn’t even touch on the notion of monitoring over time. Is it enough that the data are available upon publication? What happens in a year? Five years? Ten? Does an effective policy require permanent availability of the data, at least as long as the paper is made available?

All of which means more costs: costs to store the data and serve the data. Funding for several key data resources is already in jeopardy. Costs must also be covered for curating and monitoring the data. Costs are incurred by the journal when it spends the time to check each data set to confirm that it is available and accurate. The costs of customer service, time spent explaining the policy to authors as well as responding to complaints when the data is found to be missing or inadequate, must also be factored in, as must the costs of monitoring the availability of those same data sets over time.

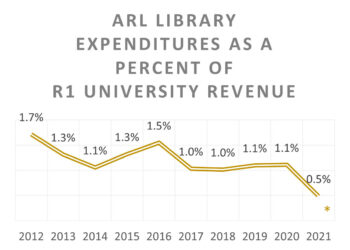

It has been proposed that journals are potentially the key vehicle for improving transparency and reproducibility of research results. This is not an insurmountable task, and indeed is a service that journals and publishers could provide. But it is not something that will just happen, and it’s not something that can effectively be done for free. PLOS’ hands-off policy has proven this. So who will pay for it? We know that library budgets are flat, if not declining. Institutions continuously complain about subscription prices for journals and author charges for open access articles.

If it’s worth doing, then it’s worth doing right. Does the research community value data publication enough to actually pay for it?

Discussion

8 Thoughts on "What Price Progress: The Costs of an Effective Data Publishing Policy"

Clearly scientific publishing is becoming a regulatory regime, where there is always a lot of devil in the complex details. One wonders if this is an unintended consequence of the open science movement?

David, you are so right – data publishing costs money and time, if it’s to be done properly. At OECD, where we publish more than 300 data sets and many data-rich publications, we employ six full-time data editors who spend a significant part of their time ensuring that the OECD’s statisticians maintain our datasets to the ‘house’ standards on openness and accessibility (all OECD data sets are now open). This is a Sisyphean task because our statisticians are not that interested in customer service beyond their own peer group; they are much more interested in gathering and processing new data because they are, after all, statisticians not publishers. User needs like cite-ability, persistence, archiving, version control and so on are not considered very important – even persuading them not to take datasets offline for ‘maintenance’ or when they are ‘out of date’ is a challenge. In addition to the staff costs on the publishing side, the IT cost of maintaining our data ‘engine’ is not trivial despite our sharing its ongoing development costs with a dozen other statistical agencies. And with the recent decision to make all OECD data sets open, we’ve lost the sales income we used to have to meet a large part of these costs and our funders have decided that we have to replace this lost income by finding ‘efficiencies’. I fear the answer to your final question is – I doubt it.

Another important question is data size. It’s one thing to require putting a spreadsheet with a few hundred rows in a repository. It is quite another to ask a research project with a terabyte of data (and yes, I see these volumes of data both as an editor and in my own research) to publish the data. The burden on an author of transferring such large amounts of data is significant. And I know of few no- or low-cost repositories that are set up to take that volume of data.

Good point! I know imaging labs that regularly generate terabytes of data on a weekly basis (doing a 48 hour time lapse movie of hundreds of different cell cultures takes up a lot of hard drive space). Most repositories aren’t equipped to handle that much data, and there’s still the question of how long you’re supposed to keep it for. I look back at the data I generated in graduate school 20+ years ago and think that there’s been so much methodological and technological progress since then that I would be in much better shape generating new data than in trying to use the old stuff that would have cost a lot to preserve and convert to new file formats over the years.

A brief comment on the ORCID integration: if done right this requires zero manual intervention and no staff effort for checking that the iDs are correct. When the ORCID API is used, authors authenticate by logging into their ORCID account – that process ensures that the iD is correct.

Regarding the issue raised by Brian: Zenodo is an example of a data repository that effectively offers unlimited storage for datasets, free at the point of use. You still need to transfer the data, but as long as CERN support Zenodo it is a good alternative for those without a suitable disciplinary or institutional solution.

I do not want this to be mistaken for a statement that data management and publishing is effectively free.

Thanks Torsten, I appreciate the correction on ORCID. Is the researcher required to log in to their ORCID account as part of the submission process (and thus provide verification) at that point, or is it potentially something a managing editor would need to request they do?

Zenodo is a good example, but as you point out, it’s not free (at least to build and run). I always worry a bit about resources like this that rely on donations or grant funding. A change in the political winds can result in what should be a permanent record disappearing (see: http://science.sciencemag.org/content/351/6268/14.summary), which is why I tend to favor not-for-profit efforts that have a sustainable business model.

It depends on how the submission system has integrated ORCID. ORCID recommends using OAuth to ensure that there are no errors. Manually typing in a “unique” iD is not such a good idea. If the publisher has fully integrated ORCID, this process should be used. Authors log into their ORCID account and give the publisher system permission to verify the iD.

Generally, I agree with your Zenodo/business model comment, also from personal experience: I worked at the Arts and Humanities Data Service when, following an external review that suggested expanding the service, it was suddenly axed by the funder, with little concern for the data hosted by AHDS. In the case of Zenodo though I wonder whether CERN or figshare (to name a for-profit) is more likely to still be here in 20 years. My money would be on CERN. However, this is no guarantee that CERN would still be willing to support Zenodo of course, and figshare would probably point to arrangements to safeguard the data in the event of the company going out of business. Whichever route we choose at this stage, having an exit strategy is critical.

The UK HE sector is currently exploring whether we can join forces for a shared research data infrastructure – with an SLA (something Zenodo does not currently offer).