Yesterday, Clarivate Analytics released their 2018 Journal Citation Reports (JCR) — an annual compendium of journal-level citation metrics. While the JCR publishes an entire panel of performance scores, most editors, publishers, and authors will be focused on Journal Impact Factor (JIF) scores for 2017.

There are two notable changes in this year’s JCR. First, the 2018 JCR now includes citations from Clarivate’s Book Citation Index. This addition inflates JIF scores relative to last year by about 1%, on average, for science and engineering titles and 2% for journals in the social sciences and humanities. In 2016, Clarivate added — without public announcement — citations from their Emerging Sources Citation Index, which had an overall effect of inflating JIFs by 5% and 13%, respectively. Unaware of the change, many publishers breathlessly extolled their 2015 journal scores as performance improvements rather than simple changes in JCR’s citation dataset.
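To see why enlarging the citing dataset raises scores without any change in a journal's performance, recall that a JIF for year Y divides the citations received in Y by items published in the two preceding years by the number of citable items published in those years. The sketch below uses made-up counts for a hypothetical journal and applies the roughly 1% average increase cited for science and engineering titles to the numerator only; the function name and all numbers are illustrative assumptions, not JCR data.

```python
def impact_factor(citations: int, citable_items: int) -> float:
    """Two-year JIF: citations in year Y to items from Y-1 and Y-2,
    divided by the citable items published in Y-1 and Y-2."""
    if citable_items <= 0:
        raise ValueError("citable_items must be positive")
    return citations / citable_items


# Hypothetical journal: these counts are illustrative, not taken from the JCR.
citable_items = 200
citations_before = 600  # citations counted from the existing indexes

# Assumption: the newly added citing source contributes about 1% more citations.
citations_after = round(citations_before * 1.01)

jif_before = impact_factor(citations_before, citable_items)
jif_after = impact_factor(citations_after, citable_items)

# Relative change in the score; the denominator (citable items) is untouched.
relative_change = (jif_after - jif_before) / jif_before
print(f"{jif_before:.2f} -> {jif_after:.2f} ({relative_change:.1%})")
```

Because only the numerator grows, the score rises by the same proportion as the added citations, which is why a year-over-year gain of this size says nothing about the journal itself.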

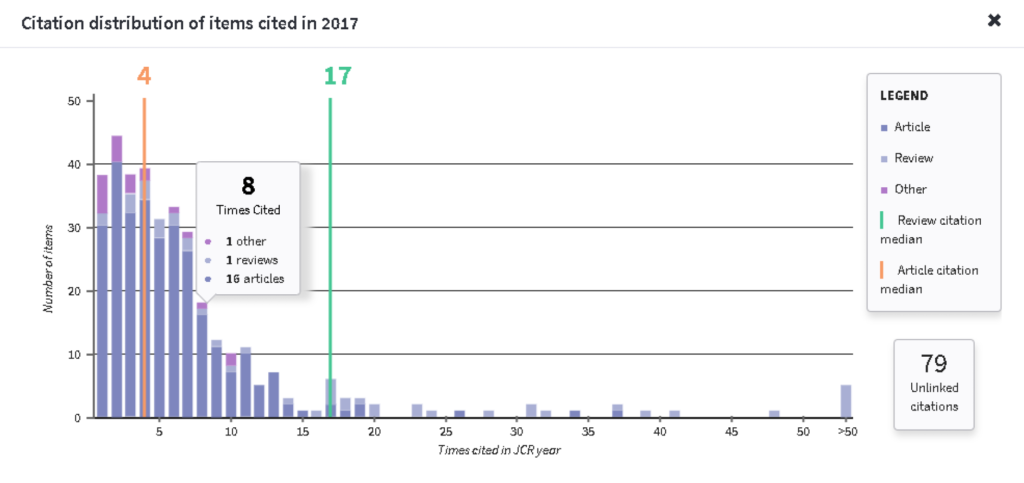

Second, and more importantly, the JCR will now publish journal citation distributions. This change appears to be a response to the widely influential 2016 proposal authored by a bibliometrician along with several high-profile editors and publishers. The Scholarly Kitchen featured three critiques of their proposal (here, here, and here).

Clarivate’s journal distributions address, and improve upon, several shortcomings of the original proposal by:

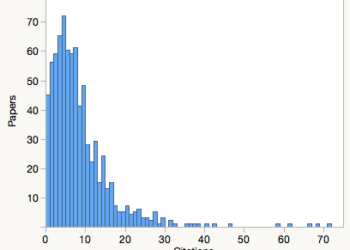

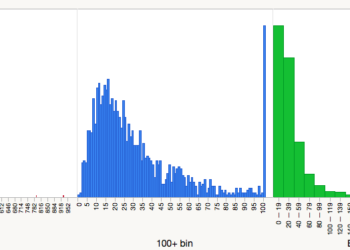

- Standardizing the histograms. This will prevent publishers from modifying their axes to favorably present their journals and will help facilitate cross-journal comparisons.

- Standardizing the data included in each histogram. This will prevent editors and publishers from cherry-picking the papers they want profiled.

- Providing descriptive statistics (median paper performance) based on article type (Article vs. Review), as was proposed in the San Francisco Declaration on Research Assessment (DORA) recommendation #14.

- Including a disclosure of unlinked citations, i.e., citations that count toward a journal’s score but don’t include sufficient information to unambiguously identify a specific article.
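As a rough illustration of what such a standardized profile summarizes, the sketch below computes a histogram of papers per citation count, the median citations by article type, and the share of unlinked citations. The record layout, the function name `summarize`, and the sample numbers are all assumptions made for illustration; this is not Clarivate's data format or method.

```python
from collections import Counter
from statistics import median


def summarize(papers, unlinked_citations):
    """Summarize a journal's citation profile.

    papers: list of (article_type, citation_count) tuples (hypothetical layout).
    unlinked_citations: citations credited to the journal but to no specific paper.
    """
    # Number of papers at each citation count, including zero.
    histogram = Counter(count for _, count in papers)

    # Group citation counts by article type, then take the median of each group.
    by_type = {}
    for article_type, count in papers:
        by_type.setdefault(article_type, []).append(count)
    medians = {t: median(counts) for t, counts in by_type.items()}

    # Share of all citations that could not be tied to a specific paper.
    linked = sum(count for _, count in papers)
    total = linked + unlinked_citations
    unlinked_share = unlinked_citations / total if total else 0.0

    return {
        "histogram": dict(sorted(histogram.items())),
        "median_by_type": medians,
        "unlinked_share": unlinked_share,
    }


# Illustrative sample, not real journal data.
papers = [
    ("Article", 0), ("Article", 1), ("Article", 2), ("Article", 2),
    ("Article", 5), ("Review", 4), ("Review", 12),
]
profile = summarize(papers, unlinked_citations=4)
print(profile)
```

Applying one function to every journal's full set of papers is what removes the room for adjusted axes or selectively chosen papers.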

It is not clear that Clarivate wants these histograms to be reused, however. There is no way to download or export them as a simple image file. More importantly, each histogram lacks a journal title, source attribution, and permitted use statement. As a consequence, legitimate publishers may be uneasy about putting these distributions on their websites while illegitimate publishers may exploit these shortcomings by misappropriating citation distributions of real journals for their own. Given that the JCR is released just once per year, it is unlikely that periodic updates to these histograms will get noticed. The proverbial horse has left the stable.

In sum, the 2018 edition of the JCR shows an earnest attempt to address constructively the demands of the research community. However, poor implementation of their journal performance dashboard may prevent these improvements from being used and may actively encourage misuse and abuse by predatory publishers.

Discussion

9 Thoughts on "2017 Journal Impact Factors Feature Citation Distributions"

Interesting take, Phil, and thank you for your encouragement. You’ll recall we have actually been profiling citation distributions, rather than point metrics of citation impact, for about a decade – see my Scientometrics paper at https://rd.springer.com/article/10.1007%2Fs11192-007-1696-x. We were encouraged by Vincent and team’s ‘simple proposal’ to think about how this could be extended to journals. I think it is a really valuable change in perspective and we’ll take on board your critique on presentation.

Phil, is there a point at which we should be concerned about unlinked citations? I am seeing some numbers in the 25-35% of citations being unlinked. Is there any way to fix that?

Unlinked citations are common for papers that are published online in one year (e.g. December 2015) but are designated to print in another (e.g. January 2016). References that cite this paper as Cochran, 2016 would be linked to Clarivate’s article metadata, while Cochran, 2015 would not.
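The mismatch can be sketched as a lookup keyed on author and year. This is a deliberately simplified, hypothetical matcher, not Clarivate's actual linking algorithm; the `INDEXED` table, the article ID, and the `link_citation` function are invented for illustration, using the Cochran example above.

```python
# Hypothetical metadata index: the paper appeared online in December 2015
# but is recorded under its print year, 2016.
INDEXED = {("Cochran", 2016): "article-001"}


def link_citation(author: str, year: int):
    """Return the matched article ID, or None if the reference stays unlinked."""
    return INDEXED.get((author, year))


# Two references to the same paper, differing only in the cited year.
references = [("Cochran", 2016), ("Cochran", 2015)]
results = {ref: link_citation(*ref) for ref in references}

# References that could not be tied to a specific article.
unlinked = [ref for ref, match in results.items() if match is None]
print(results)
print(unlinked)
```

In this sketch only the reference carrying the print year resolves to the article; the other remains unlinked because the year it cites does not exist in the index.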

For journals that publish online before print, a solution would be to properly designate the paper with a year, volume, issue, page/article number, before it is posted online. This would largely solve the problem of referencing ambiguity.

As for fixing the problem for older papers, a user can submit reference corrections from within the Web of Science; however, this process would likely be very time-consuming for an editor or publisher. I’m going to allow Clarivate a chance to respond directly to your question.

Is it just me, or does the citation distribution not show how many items received 0 citations? Wouldn’t including that give a better picture of the journal?

I think that citations from the Emerging Sources Citation Index may already have been included in the Web of Science, and in the JCR, for the 2016 impact factors. Could you please check that for me? Thanks.

From the article above: “In 2016, Clarivate added — without public announcement — citations from their Emerging Sources Citation Index, which had an overall effect of inflating JIFs by 5% and 13%, respectively.”

So wouldn’t that have influenced the increase in impact factors from 2015 to 2016, rather than the increase from 2016 to 2017 as implied in your essay?

Sorry, it gets complicated because JIF is a lagging indicator. This year (2018) saw the release of the 2017 Impact Factor rankings. In 2016, the 2015 Impact Factors were released, and at that point the inflation due to ESCI addition occurred. So as per the article, the addition in 2016 of these extra citations caused an increase in 2015 journal scores.

Got it now. Thanks.