Editor’s note: Today’s guest post is co-authored by Brooks Hanson (American Geophysical Union), Daniella Lowenberg (California Digital Library), and Patricia Cruse and Helena Cousijn (both DataCite).

Not so long ago, publishers put up with incomplete and erroneous references to the literature, to the detriment of readers and scholars. Even after papers were online, tracking citations forward or backward involved significant handwork: references were entered by authors, re-keyed by typesetters, and checked manually (as far as time allowed) by reviewers, editors, copy editors, and proofreaders. This often involved poring over indexes or, in some cases, searching for the exact paper volume after volume in the library or its basement. As a result, checking usually gave priority to citations of the journal’s own articles. Errors in author names, volumes, years, and pages were rampant, and they persisted as authors copied erroneous references into later publications.

It took a concerted effort to achieve accuracy, reduce the manual effort required, and improve efficiency for authors and publishers alike. In turn, linked and tagged references emerged, along with identifiers for authors (ORCID iDs for researchers) and funders (Crossref’s Funder Registry). They helped create additional links and enabled new studies of how science is done, of collaboration networks, and of research outcomes. The formation of Crossref for registering article DOIs and of DataCite for non-journal content were critical steps. So was the adoption of standards around DOIs and citation download formats (e.g., the RIS [Research Information Systems] file format), which allowed authors to ingest citations into reference managers (no more typing errors and 404 errors). Open APIs to Crossref, DataCite, and PubMed, to name a few, as well as digitized backfiles, allowed publishers to quickly check the accuracy of references and, better still, to rebuild them accurately from scratch in a particular style, add links to the references and to forward and backward citation information, and more. Standard ways of tagging references were developed and adopted (thank you, JATS [Journal Article Tag Suite]), allowing easy machine recognition of reference sources (journal vs. book chapter vs. some other type).
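
To illustrate why a standard download format mattered: an RIS record is plain text with one tagged field per line (a two-character tag, two spaces, a hyphen, a space, then the value), where `TY` opens a record and `ER` closes it. The Python sketch below shows roughly how a reference manager might read such a file. It assumes only that simple layout; real RIS exports carry many more tags and vendor quirks, and the function name is ours, for illustration.

```python
from __future__ import annotations

import re

# One RIS field per line: a two-character tag, two spaces, a hyphen, then the value.
RIS_LINE = re.compile(r"^([A-Z][A-Z0-9])  - ?(.*)$")


def parse_ris(text: str) -> list[dict[str, list[str]]]:
    """Split RIS text into records; repeatable tags such as AU keep every value."""
    records: list[dict[str, list[str]]] = []
    current: dict[str, list[str]] | None = None
    for line in text.splitlines():
        match = RIS_LINE.match(line.rstrip())
        if match is None:
            continue  # blank or non-RIS lines are ignored in this sketch
        tag, value = match.group(1), match.group(2).strip()
        if tag == "TY":
            current = {"TY": [value]}  # TY opens a new record
        elif tag == "ER":
            if current is not None:
                records.append(current)  # ER closes the current record
            current = None
        elif current is not None:
            current.setdefault(tag, []).append(value)
    return records
```

Because each field is explicitly tagged, a record with two `AU` lines comes back with both author names, in order, under `"AU"`, and the `DO` field carries the DOI, with no re-keying involved.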

Recent efforts to open references, such as the Initiative for Open Citations (I4OC), aim to take this work even further, and artificial intelligence and semantic tools may soon reveal not just whether a reference is cited, but how often and in which contexts, within a paper, across a discipline, and over time.

The community now has an opportunity to continue this forward progress by extending this process to link datasets functionally between repositories and journals, thereby aligning scholarship with FAIR (Findable, Accessible, Interoperable, and Reusable) principles. This would improve data discoverability and research transparency, enable much more accurate research on reuse and outcomes, and allow data to be valued like articles and to become part of the academic reward system. Indeed, DataCite was created in part to let research data follow the same process that Crossref enables for the scholarly literature. This process is more challenging, however, because research data are distributed across numerous international repositories with even less standardization than existed for journal references; in fact, data have not been regularly cited in reference lists even when they are in a repository.

The good news is that all the pieces are now in place for the community to move forward. Many publishers have taken the bold step of signing onto the Data Citation Principles, which aim to ensure that citations to data are included in both papers and reference lists. Repositories, too, are taking up the mantle, housing data and providing persistent identifiers and landing pages for datasets, in compliance with the FAIR principles. A new repository certification, CoreTrustSeal, supports these efforts. Many repositories now register DOIs through DataCite, which in turn enables connections between Crossref and DataCite DOIs and metadata. The Enabling FAIR Data initiative in the Earth, space, and environmental sciences is a good example of implementing these connections across a discipline. These activities, together with citation links, are the focus of an interoperability initiative, Scholix.

A key missing piece in implementing these connections is that, although many publishers have signed onto the principle of citing data, the community is for the most part not yet benefiting from it. To help close this gap, a group of publishers working under the auspices of the FORCE11 Data Citation Implementation Pilot has just published a complete “roadmap for scholarly publishers” in Scientific Data (Open Access).

The roadmap walks through every stage in the life cycle of a paper, showing which actions publishers should take at each stage. We highlight here three of the most important steps for making data count, steps that publishers can take immediately: the first concerns their author guidelines, the second their production workflow, and the third their metadata deposit to Crossref.

The first step, as in the Enabling FAIR Data effort above, is to direct research data into appropriate repositories and to include data citations, together with their persistent identifiers, in publications. The identifier can be a DOI assigned by a repository through DataCite or another recognized identifier (such as a GenBank accession number). There are now many repositories, domain-specific or general, including institutional repositories, that can house most datasets. The FAQs that publishers and repositories developed for the FAIR data effort include additional information on this. It is a step that many publishers have already taken.
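
A publisher checking that a data citation carries a usable DOI might start with something like the following minimal sketch. It strips common prefixes (`doi:`, `https://doi.org/`) and tests the remainder against a regular expression that Crossref has published as matching the vast majority of modern DOIs (`10.`, a 4 to 9 digit registrant code, `/`, then a suffix). Other identifier schemes, such as GenBank accessions, are not handled, and the function names are hypothetical.

```python
from __future__ import annotations

import re

# Pattern Crossref has published as matching most modern DOIs:
# "10." + a 4-9 digit registrant code + "/" + a suffix.
DOI_PATTERN = re.compile(r"^10\.\d{4,9}/[-._;()/:A-Z0-9]+$", re.IGNORECASE)

# Prefixes authors commonly paste in front of the bare DOI (compared in lowercase).
PREFIXES = (
    "https://doi.org/",
    "http://doi.org/",
    "https://dx.doi.org/",
    "http://dx.doi.org/",
    "doi:",
)


def normalize_doi(raw: str) -> str | None:
    """Return the bare, lowercased DOI, or None if the string does not look like one."""
    candidate = raw.strip()
    for prefix in PREFIXES:
        if candidate.lower().startswith(prefix):
            candidate = candidate[len(prefix):].strip()
            break
    if DOI_PATTERN.match(candidate):
        return candidate.lower()  # DOI names are case-insensitive, so normalize case
    return None


def doi_url(doi: str) -> str:
    """Build the resolvable https://doi.org/ link for display in a reference."""
    return f"https://doi.org/{doi}"
```

A check like this only confirms that an identifier is well formed; confirming that it resolves to the intended dataset still requires a lookup against DataCite or the repository.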

However, even when datasets are cited, they are often not tagged in a way that identifies them as machine-readable data citations. This means that the effort researchers make in following best practices, and the effort publishers put into advocating or requiring data citations in references, goes largely to waste. For these citations to have value, they need to be tagged as what they are, namely data citations, so that they can be recognized and linked appropriately. The requirements for this second step are described in the publishers’ roadmap. As a third and final step, tagged data citations need to be included in the publisher’s metadata feed to Crossref.
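
As an illustration of what “tagged as a data citation” can mean in a JATS-based production workflow: JATS 1.1 and later allow a reference to carry `publication-type="data"` and a dedicated `<data-title>` element, with the identifier in `<pub-id>`. The Python sketch below builds one such reference using only the standard library. The element choices reflect our reading of JATS and the JATS4R data citation recommendation, and the helper name and sample values are hypothetical; check the roadmap and your DTD version before adopting anything like it.

```python
import xml.etree.ElementTree as ET


def jats_data_ref(ref_id, authors, data_title, repository, year, doi):
    """Build a JATS <ref> whose citation is explicitly typed as data (hypothetical helper)."""
    ref = ET.Element("ref", {"id": ref_id})
    # publication-type="data" is what marks this reference as a data citation.
    cit = ET.SubElement(ref, "element-citation", {"publication-type": "data"})
    group = ET.SubElement(cit, "person-group", {"person-group-type": "author"})
    for surname, given_names in authors:
        name = ET.SubElement(group, "name")
        ET.SubElement(name, "surname").text = surname
        ET.SubElement(name, "given-names").text = given_names
    ET.SubElement(cit, "data-title").text = data_title  # title of the dataset itself
    ET.SubElement(cit, "source").text = repository  # repository holding the data
    ET.SubElement(cit, "year").text = str(year)
    # The persistent identifier lets downstream services link citation and dataset.
    ET.SubElement(cit, "pub-id", {"pub-id-type": "doi"}).text = doi
    return ET.tostring(ref, encoding="unicode")


if __name__ == "__main__":
    # Placeholder values only; not a real dataset.
    print(jats_data_ref("d1", [("Doe", "Jane")], "Example ocean temperature profiles",
                        "Hypothetical Data Repository", 2018, "10.5555/example-dataset"))
```

The point of the exercise is the typed wrapper: the same bibliographic details entered as an untyped or journal-style reference would look, to a machine, like any other grey literature citation.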

The paper describes the two options for tagging data citations and depositing them to Crossref; see its “Production” and “Downstream delivery to Crossref” sections. For more detailed information, you can also take a look at this recent Crossref blog post on the topic.
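
For the deposit step, the two routes as we understand Crossref’s guidance are: list the dataset DOI in the article’s `<citation_list>`, or assert an explicit relationship in the relations (`rel:`) section of the deposit, for example with `relationship-type="references"` (Crossref also defines other types, such as `isSupplementedBy`). The sketch below emits both fragments for a single dataset DOI. It is not a complete deposit file; the element names and namespace reflect our understanding of the Crossref schema, the helper names are hypothetical, and output should be validated against Crossref’s current documentation.

```python
import xml.etree.ElementTree as ET

REL_NS = "http://www.crossref.org/relations.xsd"
ET.register_namespace("rel", REL_NS)


def citation_list_entry(key, dataset_doi):
    """Option 1 (sketch): the dataset appears as an ordinary <citation> carrying its DOI."""
    citations = ET.Element("citation_list")
    citation = ET.SubElement(citations, "citation", {"key": key})
    ET.SubElement(citation, "doi").text = dataset_doi
    return citations


def relation_program(dataset_doi, relationship="references"):
    """Option 2 (sketch): an explicit article-to-dataset relationship in the rel: namespace."""
    program = ET.Element(f"{{{REL_NS}}}program")
    item = ET.SubElement(program, f"{{{REL_NS}}}related_item")
    ET.SubElement(item, f"{{{REL_NS}}}description").text = "Dataset cited in this article"
    ET.SubElement(
        item,
        f"{{{REL_NS}}}inter_work_relation",
        {"relationship-type": relationship, "identifier-type": "doi"},
    ).text = dataset_doi
    return program


if __name__ == "__main__":
    doi = "10.5555/hypothetical-dataset"  # placeholder DOI, not a real dataset
    print(ET.tostring(citation_list_entry("ref12", doi), encoding="unicode"))
    print(ET.tostring(relation_program(doi), encoding="unicode"))
```

Either fragment would sit inside the article’s record in a full deposit; which one a publisher uses depends on its production system, as the roadmap discusses.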

When data citations are included, tagged correctly, and provided to Crossref, links become available to the community, providing a complete view of, and increased access to, research. These data citations are included in the Crossref/DataCite Event Data service and can then be picked up by other services via an open API made available by Crossref, as well as via the DataCite API. In addition, the ScholExplorer service collects data citations for datasets that use identifiers other than DOIs. Together, these services allow any organization to discover and display the relations between articles and datasets. The connection is complete!
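
As one hedged example of the discovery side, a repository or aggregator could start from the DataCite REST API record for a dataset DOI (`https://api.datacite.org/dois/<doi>`) and read its `relatedIdentifiers`, whose `relationType` values come from the DataCite Metadata Schema. Note that this reflects relations recorded in DataCite metadata, not the full Event Data feed, and that the JSON field names reflect our understanding of the API; the function names and the chosen set of relation types are our own assumptions.

```python
from __future__ import annotations

import json
import urllib.parse
import urllib.request

DATACITE_API = "https://api.datacite.org/dois/"

# DataCite relation types treated here as "this dataset is cited by, referenced by,
# or supplements that work" (an illustrative choice, not an official grouping).
LINKING_RELATIONS = {"IsCitedBy", "IsReferencedBy", "IsSupplementTo"}


def fetch_doi_record(doi: str) -> dict:
    """Fetch the JSON record for one DOI from the DataCite REST API (network call)."""
    url = DATACITE_API + urllib.parse.quote(doi, safe="/")
    with urllib.request.urlopen(url, timeout=30) as response:
        return json.load(response)


def linked_works(record: dict) -> list[tuple[str, str]]:
    """Return (relation type, identifier) pairs for works linked to the dataset."""
    attributes = record.get("data", {}).get("attributes", {})
    related = attributes.get("relatedIdentifiers") or []
    return [
        (item.get("relationType"), item.get("relatedIdentifier"))
        for item in related
        if item.get("relationType") in LINKING_RELATIONS
    ]
```

Keeping the network call separate from the parsing lets the extraction logic be checked against saved responses; a live lookup would be `linked_works(fetch_doi_record(dataset_doi))` for a real dataset DOI.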

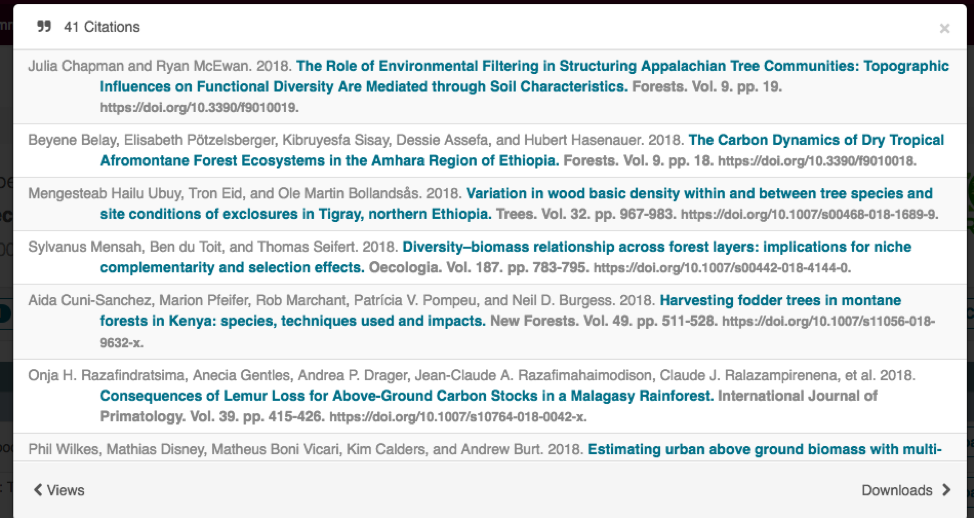

Researchers, repositories, scholars, and others benefit by being able to follow links, whether they start from the data or the literature. Properly tagged and deposited data references can also be displayed (and counted) at the repository level (courtesy of Make Data Count), as shown here:

(Photos courtesy of DataONE)

Adopting these standards and practices will go a long way toward empowering data publishing and providing researchers with access to the data they need to validate conclusions and answer new research questions. If we believe data should be valued like other research outputs, we must take action to achieve this. Supporting the open data movement means providing proper support for data citations.

Help us make the magic of scholarship happen — prioritize implementing data citations for your journals!

Note: this post was updated on December 18, 2018, to correct the title of the journal Scientific Data.

Discussion

9 Thoughts on "Making Magic Happen: Implementing and Contributing Data Citations in Support of Today’s Scholarship"

I guess the above activity should be added to the 200-plus things publishers do. I wonder if the EU et al. are considering this activity in Plan S and accounting for the cost (snort, snort).

Excellent post. I have never understood why the fundamentalist zeal of the open-access advocates doesn’t extend to open data and open science. As if the published article is the science. The data are the science. Rick Anderson’s 26 November 2018 post on concerns with Plan S garnered 107 comments within two days, and this comprehensive piece on open data and the importance of data citations garnered two. (Every time I see the “Plan S” moniker, I am first reminded of the fabulously terrible movie Plan 9 from Outer Space. Unless it’s a Rick Anderson post; then Plan 10 from Outer Space, also known as Utah, comes to mind. I’m not sure these mental associations are completely irrelevant.)

Back to data citations: as one who’s been required to have data reviewed and published concurrently with articles, I can say it is not a trivial matter to curate one’s data so that others can navigate it, or to navigate the posting, indexing, and cross-linking. Most of the publishers I’ve dealt with don’t know what to tell the authors. Often the journal will require a statement on data availability but will accept some vague statement, such as “contact authors about data availability,” in lieu of a functional cross-link. Even when the authors do provide proper links, they just appear like a grey literature citation in the references, with no obvious tags distinguishing them as a dataset. Then there’s the circle dance: you don’t want to publicly post (and lock) your data until you have the DOI cross-link to the article, and you don’t want your article published until you have the DOI cross-link to the data. I think most authors and editors don’t have a clue. I recently saw an article in which the authors used the data availability statement to explain how they got hold of their supporting data, not how others could get the data they relied upon. This in a journal that makes hay about its open data, open reviews, and open everything policy.

What counts as “machine-readable data” is a loose concept as well. For some, it’s meant to facilitate big-data analyses that reveal, across the whole forest, what was lost in the weeds of individual studies; the data would be read and assembled by bots and code. Yet for lots of small studies, context matters, and a spreadsheet is perfectly suitable for data sharing. (‘Please send your data, preferably as a scanned PDF,’ said no one ever.) Some fields, such as genomics and oceanography, have it worked out. Genomics has searchable libraries, and early on, oceanographers figured out that individual research cruises can’t touch the vastness of the oceans, so they had to share and use each other’s data, and they needed a common metadata language to do so.

So yes, magic happens and there have been great strides on open data, but many publishers don’t walk the talk on open data, and only a minority of authors do.

Thank you for your on-point comment, Chris. We couldn’t agree more. There is at least as much value in scientific data as there is in articles, and yet we as a community have not convincingly put resources into, or emphasis on, research data. Data availability statements were the first step in announcing to the research community that data (beyond those that had standards, like microarrays and gene sequencing) are an important scholarly output. But with the implementation of data availability statements, we saw the publishing community call this a success (meanwhile, many publishers were endorsing the data citation principles), and we have not seen sufficient movement on the data citation front. We know that we have a long way to go before we can really “credit” researchers for sharing research data, and we would not call open data the final goal. But to get there, we have to continue to make data publishing and citing practices more seamless for researchers, and the publishing community is a crucial part of this.

– Daniella, Trisha, Helena, and Brooks

The promise of “open science” rests partly on the ability of publishers, authors, data archives, and other players to embed accurate and verified metadata, such as ORCIDs and DOIs, for scholarly objects. To “make the magic happen,” as promised in this post, publishers need to follow their own rules. Having just spent nearly two days with my co-authors cleaning up a proof from a Wiley journal, where the copy editors REMOVED almost all of the DOIs, swapped the order of the three authors (but got the ORCIDs correct), created ambiguities in APA references that we had accurately disambiguated, introduced errors into quotations with a “change all” command replacing “paper” with “article,” and made other errors that conflict with the Chicago Manual of Style, I wonder what we are paying publishers to do, and what black magic is required to accomplish this vision.

Defense against the dark arts is hardly the magic desired. Were the copy editors working to enforce a legacy citation style? I’ve had similar experiences, coincidentally also with Wiley. If the references match in CrossRef, I suppose visible DOIs might not be important; there always seem to be some dead links in papers anyway. But why on earth every journal thinks it needs its own idiosyncratic reference style is beyond me. EndNote, for example, ships with over 1,000 journal style definitions and taxonomies, and even those don’t cover them all; none of the ones I needed were exactly correct. Two styles would seem sufficient for almost all literature: author-year or numbered.

We completely agree that this “magic” cannot stand on its own. Data citations are critical for the advancement of research data publishing and open science, but they have to come in conjunction with better metadata practices. It is frustrating that we continue to hear these stories of problems with persistent identifiers and standardized metadata practices. Authors like you and your group do a tremendous amount of work to create and analyze data, create metadata, collect references, etc., and yet this work is often lost and does not become part of the lasting scholarly record.

– Daniella, Trisha, Helena, and Brooks

Would that the copy editors even knew their own style… We use Zotero to generate bibliographic references accurately in the journal’s stated style. These are APA-conforming references for an APA journal being undone. The journal reference style situation is far worse than Chris Mebane suggests: Zotero lists over 9,200 styles, which cluster into about 1,900 “unique” styles: https://www.zotero.org/styles. Visible DOIs and ORCIDs offer hope for CrossRef and other networking services to function effectively, to the extent that authors, copy editors, and publishers provide accurate information.

In some ways, accessible, discoverable, reusable, citable data are more important than open access to the published article. It is the data that researchers need to continue to make discoveries from published research. It is the data that the funding supported, and thus the data that should be available. The funder did not support the work of the publishers to vet, edit, proof, host, archive, tag, and publish the article.