Editor’s Note: Today’s guest post is from Einar Ryvarden, the digital manager of The Journal of the Norwegian Medical Association (Tidsskrift for Den norske legeforening). He has worked with online media since 1994 as an editor-in-chief and as head of the development department in a Norwegian media group, running 15 websites.

The current system for indexing and sharing content and data eats up unnecessary resources and creates a barrier for small and new scientific journals. We need change to better democratize scientific publishing.

I am the digital manager of The Journal of the Norwegian Medical Association (Tidsskrift for Den norske legeforening in Norwegian). With a staff of 20, we are a fairly well-resourced independent scientific journal, but even we struggle to explore, understand, select, and meet the requirements of the many possible systems and partners in the scientific publishing community.

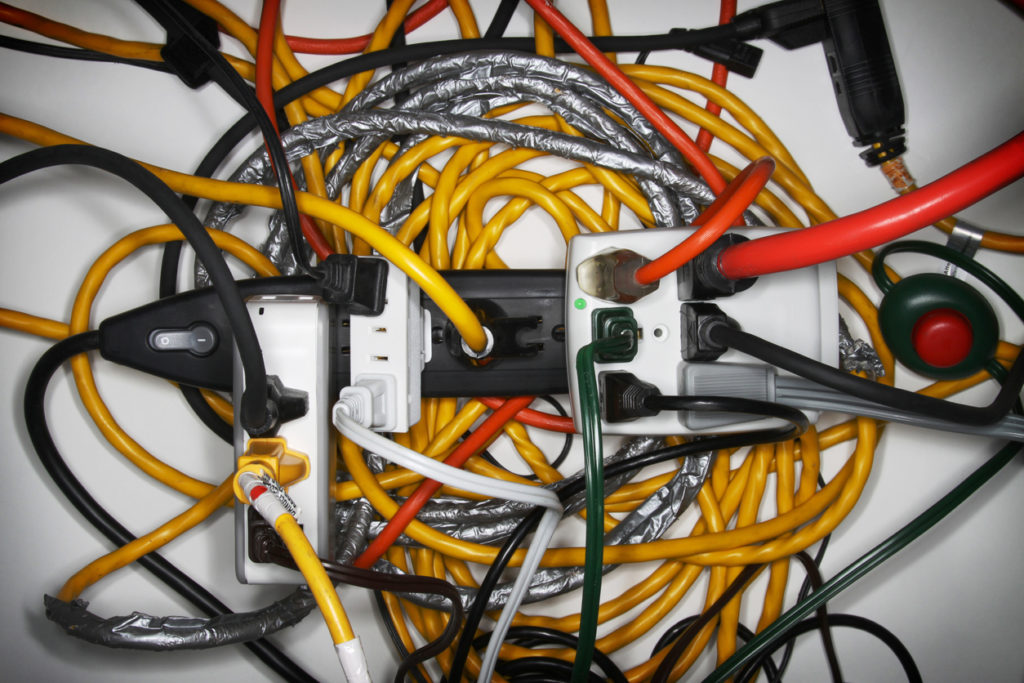

The complexity of understanding and setting up services like Crossref, PubMed, the Emerging Sources Citation Index (ESCI), and Google Scholar, to name but a few, is daunting for many journals, especially outside the US and Western Europe. Without a platform partner, such as Silverchair or Atypon, or the backing of a large publishing company, it is very costly to build and use these important data exchange services.

The complexity of indexing and sharing data with repositories and other third parties is a barrier to change for smaller operations. Managing metadata agreements, setting up data feeds, and adding metadata are time-consuming tasks, but a necessary cost of doing business. Once you realize the time commitment each exchange or agreement requires, however, you may naturally become conservative.

The more complexity you take on, the higher the risk and cost. Further, every technical connection you set up makes an eventual move to a new platform or content management system more difficult and expensive.

The scientific publishing community needs all the capacity possible for change, due to a number of factors:

- The pace of technological change has increased.

- The industry is facing major challenges, such as open access and an increased focus on privacy, that put pressure on current business models and on the ability to fund IT changes.

- A drop in advertising revenue and more expensive physical distribution put pressure on print-only journals to move to the web.

Creating and maintaining the internal IT systems that support these digital services is getting more complex and thus more costly. At the same time, several factors have created a shortage of developers throughout the Western world. Several hundred thousand IT positions in the US are already unfilled, and the shortage is expected to get worse.

While well-established journals and societies might have the internal resources to understand, maintain, and experiment with new partners and demands, smaller and younger publications may lack not just the financial resources but also the staff know-how to choose and maintain industry-standard technology and processes. It is probably easy to forget for those of you who have worked in scientific publishing for 10-20 years, but less experienced people often feel overwhelmed by the tsunami of abbreviations and potential partners. I had worked with online media since 1994, as an editor-in-chief and head of a development department in a large media house, when I joined Tidsskriftet three years ago. Even so, I can’t count the hours I have spent asking, reading, and evaluating the need for and value of connecting with various partners. And I still regularly bump into new standards and partners.

The following three examples point out the unnecessary complexity of scientific publishing. My goal is not to shame anybody, I merely want to illustrate the combined complexity that journals like Tidsskriftet encounter:

The standard for submitting content to PubMed for indexing dates back to 2008 and discusses “electronic journals.” Journals publishing both in print and online have to create two different XML files in a custom format, log on, and upload these files to PubMed manually. In PDF format, the documentation for creating and uploading the XML files is 43 pages long. It probably cost us at least a week of developer time, going back and forth to create and tune the XML files.
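To give a sense of what this work involves, here is a minimal Python sketch that builds a PubMed-style XML file from one internal article record. It uses only a simplified subset of element names from PubMed's XML tagging format (`ArticleSet`, `Journal`, `PubDate`, `AuthorList` and so on) and is not a complete or validated submission file; the `ArticleRecord` class and all sample values are hypothetical. We also assume, for illustration only, that the print and online variants differ just in the `PubStatus` attribute (`ppublish` vs `epublish`); PubMed's own documentation defines the real differences.

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass, field


@dataclass
class ArticleRecord:
    """Hypothetical metadata record as a journal's CMS might store it."""
    publisher: str
    journal_title: str
    issn: str
    volume: str
    issue: str
    year: str
    month: str
    title: str
    first_page: str
    last_page: str
    language: str
    doi: str
    authors: list[tuple[str, str]] = field(default_factory=list)  # (first name, last name)


def pubmed_xml(rec: ArticleRecord, pub_status: str) -> str:
    """Build a simplified PubMed-style ArticleSet containing one article.

    pub_status is written to the PubDate PubStatus attribute, e.g. "ppublish" or "epublish".
    """
    root = ET.Element("ArticleSet")
    article = ET.SubElement(root, "Article")

    journal = ET.SubElement(article, "Journal")
    ET.SubElement(journal, "PublisherName").text = rec.publisher
    ET.SubElement(journal, "JournalTitle").text = rec.journal_title
    ET.SubElement(journal, "Issn").text = rec.issn
    ET.SubElement(journal, "Volume").text = rec.volume
    ET.SubElement(journal, "Issue").text = rec.issue
    pub_date = ET.SubElement(journal, "PubDate", PubStatus=pub_status)
    ET.SubElement(pub_date, "Year").text = rec.year
    ET.SubElement(pub_date, "Month").text = rec.month

    ET.SubElement(article, "ArticleTitle").text = rec.title
    ET.SubElement(article, "FirstPage").text = rec.first_page
    ET.SubElement(article, "LastPage").text = rec.last_page
    ET.SubElement(article, "Language").text = rec.language

    author_list = ET.SubElement(article, "AuthorList")
    for first, last in rec.authors:
        author = ET.SubElement(author_list, "Author")
        ET.SubElement(author, "FirstName").text = first
        ET.SubElement(author, "LastName").text = last

    id_list = ET.SubElement(article, "ArticleIdList")
    ET.SubElement(id_list, "ArticleId", IdType="doi").text = rec.doi
    return ET.tostring(root, encoding="unicode")


# Placeholder values only.
record = ArticleRecord(
    publisher="Example Publisher", journal_title="Example Journal", issn="0000-0000",
    volume="1", issue="2", year="2019", month="11", title="An example article",
    first_page="10", last_page="12", language="EN", doi="10.0000/example.1",
    authors=[("Kari", "Nordmann"), ("Ola", "Nordmann")],
)
print_version = pubmed_xml(record, "ppublish")
online_version = pubmed_xml(record, "epublish")
print(print_version)
```

Even in this reduced form, the mapping from the CMS record to the receiver's format has to be written, tested, and maintained by each journal.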

Another example is the Directory of Open Access Journals (DOAJ). Tidsskriftet is planning to become an open access journal, but to be indexed by DOAJ you have to create a different XML-feed or set up your systems to work with their submission APIs.

Google Scholar is another example of the complexity of the standards journals must meet. Google Scholar wants metatags in articles, but instead of the schema.org markup that Google’s main search engine uses, it prefers Highwire Press-style tags. The two tag standards provide pretty much the same data, just in different formats. Like most journals and other websites, Tidsskriftet is experiencing strong growth in traffic from Google.com, so maximizing search engine optimization with tags requires the publisher to provide both. I am still trying to understand whether Google.com prefers the general news article schema or whether it is better to use the more specific medical/scholarly article schema.
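The duplication is easy to see when both formats are generated from the same record. The Python sketch below renders one hypothetical metadata dictionary twice: as Highwire Press-style `citation_*` meta tags, the convention Google Scholar's inclusion guidelines describe, and as a schema.org `ScholarlyArticle` in JSON-LD. The choice of fields and the sample values are assumptions, not a complete mapping of either standard.

```python
import html
import json
from datetime import date


def highwire_tags(meta: dict) -> str:
    """Render metadata as Highwire Press-style citation_* <meta> tags."""
    pairs = [("citation_title", meta["title"])]
    # One citation_author tag per author, in byline order.
    pairs += [("citation_author", name) for name in meta["authors"]]
    pairs += [
        ("citation_publication_date", meta["published"].strftime("%Y/%m/%d")),
        ("citation_journal_title", meta["journal"]),
        ("citation_volume", meta["volume"]),
    ]
    return "\n".join(
        f'<meta name="{name}" content="{html.escape(value)}">' for name, value in pairs
    )


def schema_org_jsonld(meta: dict) -> str:
    """Render the same metadata as a schema.org ScholarlyArticle in a JSON-LD script tag."""
    doc = {
        "@context": "https://schema.org",
        "@type": "ScholarlyArticle",
        "headline": meta["title"],
        "author": [{"@type": "Person", "name": name} for name in meta["authors"]],
        "datePublished": meta["published"].isoformat(),
        "isPartOf": {"@type": "Periodical", "name": meta["journal"]},
    }
    # Escape "<" so a title containing "</script>" cannot end the script element early.
    body = json.dumps(doc, ensure_ascii=False).replace("<", "\\u003c")
    return f'<script type="application/ld+json">{body}</script>'


# Hypothetical sample record.
article = {
    "title": "An example article",
    "authors": ["Kari Nordmann", "Ola Nordmann"],
    "published": date(2019, 11, 18),
    "journal": "Example Journal",
    "volume": "139",
}
print(highwire_tags(article))
print(schema_org_jsonld(article))
```

Both functions read the same four or five values; only the serialization differs, which is why supporting both feels like wasted effort.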

How can these barriers to becoming a full member of the scientific publishing world be significantly lowered? A piecemeal simplification, one partner at a time, will not give the publishing community the significant help it needs.

We need to flip the current system of submitting data to instead facilitate third parties’ ability to easily gather the data they need. Instead of trying to simplify current standards and give each journal new work, it is the small group of receivers who should change.

The current receivers should fetch the data they need instead of each journal creating and sending data. Economically, it’s a simple choice: Let a handful of indexing and data collectors change their input systems instead of having a whole industry try to agree and then change thousands of systems.

This is how Google and other search engines work. They monitor the sites they want to index in two ways: they crawl the site, and they read its sitemap.xml file. Crawling is less reliable: a software robot is given one URL, copies and indexes that page, and then follows all links found on that page, continuing until it finds no new links on the site. The sitemap system is better. Modern content management systems (CMS) generate and update a sitemap.xml file or set of files, for example here. These files list all articles, and search engines visit the sitemap regularly, discovering new content quickly and in a managed manner.

If all content were tagged with one of the more common metatag formats, the data receivers could find and pick up new content on their own. The data receivers should be permissive and support several standards. By using these already widely used technologies, services such as PubMed, the various Crossref services, Scopus, Mendeley, and others could all crawl and collect what they want. Permissions would still be required but could be simplified. It is also possible to create some simple standards for stating permissions: for example, you may index all data, but you may not serve the full articles to end users.
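A permissive receiver could accept several tag conventions for the same field. This Python sketch collects the `<meta>` tags on an article page and takes each field from the first convention it finds: Highwire Press-style `citation_*` tags first, then Dublin Core `DC.*` tags. The preference order is an assumption, and the `x-reuse-permission` tag is purely hypothetical, standing in for the kind of simple permission statement described above; it is not an existing standard.

```python
from html.parser import HTMLParser


class MetaCollector(HTMLParser):
    """Collect every <meta name="..." content="..."> pair, keyed by lower-cased name."""

    def __init__(self):
        super().__init__()
        self.meta = {}

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = dict(attrs)
        name, content = attributes.get("name"), attributes.get("content")
        if name and content is not None:
            self.meta.setdefault(name.lower(), []).append(content)


# For each field, the tag names this harvester accepts, in order of preference (an assumption).
FIELD_SOURCES = {
    "title": ["citation_title", "dc.title"],
    "authors": ["citation_author", "dc.creator"],
    "date": ["citation_publication_date", "dc.date"],
}


def harvest(page_html: str) -> dict:
    """Build one normalized record from whichever supported tag conventions the page uses."""
    collector = MetaCollector()
    collector.feed(page_html)
    collector.close()
    record = {}
    for field, sources in FIELD_SOURCES.items():
        for source in sources:
            if source in collector.meta:
                values = collector.meta[source]
                # Authors may repeat; every other field keeps its first value.
                record[field] = values if field == "authors" else values[0]
                break
    # Hypothetical permission tag, not an existing standard.
    record["permission"] = collector.meta.get("x-reuse-permission", ["unspecified"])[0]
    return record


highwire_page = """<head>
<meta name="citation_title" content="An example article">
<meta name="citation_author" content="Kari Nordmann">
<meta name="citation_author" content="Ola Nordmann">
<meta name="x-reuse-permission" content="index-only">
</head>"""
dublin_core_page = """<head>
<meta name="DC.title" content="An example article">
<meta name="DC.creator" content="Kari Nordmann">
</head>"""

print(harvest(highwire_page))
print(harvest(dublin_core_page))
```

The point of the design is that the burden of handling several formats sits in one harvester, not in every journal's publishing system.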

Switching to crawling will take time and a change of heart by the data collectors, but that can and must be achieved. The alternative is to keep adding and changing requirements one by one, prolonging the fragmented, undemocratic situation we are now in.

Discussion

8 Thoughts on "The Need to Simplify Indexing and Data Sharing"

You dismiss the obvious answer in a subordinate clause early in this post, “without using a platform partner…”. I’m really curious to understand why you dismiss that solution. I really know nothing about the financial and other contractual aspects of such a partnership but am interested in learning more about it (I’m a librarian). From my perspective, having smaller journals in such partnerships makes it a lot easier for us, e.g. to get reliable COUNTER data, get reliable KBART data sent to our knowledgebase products, etc. By the way, I would also add Highwire to the list of such potential partners who would solve all those problems for you. Are they really too expensive, or do they impose unacceptable burdens of their own on small publishers?

Einar, thank you for this post! Your suggestions would make it easier for a lot of small publishers to share data and make our journals available for a bigger audience.

Melissa, I am a digital manager for a German media group. The platform partners mentioned above offer a lot of benefits for publishers and their customers, but they are too expensive for small publishers. The operating costs amount to three times our revenue, and you have to sign a contract for at least 3 years; usually you have to sign for 5 years.

It is kind of crazy that there are so many standards for metadata. How many ways are there to say the title of an article is this and it was written by these people? Why not standardise against Crossref? Everyone is already registering DOIs, their data is enriched with funder IDs, citations and more. Since there is a cost with Crossref membership, keep Dublin Core and OAI-PMH around and you’re down to two standards.

Of course there are the usual suspects who have an interest in keeping out new entrants and whose scale allows them to efficiently deal with unnecessary complexity. So I don’t expect much will change unfortunately.

MECA (Manuscript Exchange Common Approach, https://www.manuscriptexchange.org/), referenced in this previous Scholarly Kitchen article (https://scholarlykitchen.sspnet.org/2018/07/25/guest-post-manuscript-exchange-meca-can-academic-publishing-world-cant/), should be noted as an emerging approach to dealing with these issues, which face both small and large publishing operations. As one example, PKP’s Open Journal Systems, which serves thousands of small library publishing operations, has partner institutions that are discussing the implementation of MECA.

I would suggest moving your journal to Open Journal Systems (PKP). It has all the necessary plugins to upload metadata to Crossref, PubMed, Google Scholar, etc.

I agree with VINCAS. I’m sure you could get some help from the Open Access publishing services at the Norwegian universities, e.g. BOAP (UiB), FRITT (UiO), Septentrio (UiT).

https://www.uib.no/ub/71882/bergen-open-access-publishing

https://www.ub.uio.no/skrive-publisere/open-access/fritt/index.html

https://septentrio.uit.no/

Hi Einar,

You wrote: “Another example is the Directory of Open Access Journals (DOAJ). Tidsskriftet is planning to become an open access journal, but to be indexed by DOAJ you have to create a different XML-feed or set up your systems to work with their submission APIs.”

I just wanted to clarify that a journal does not need to send XML to DOAJ in order to be indexed and you do not need to integrate with our API either.

We will soon be releasing compatibility with Crossref XML (Feb 2020), so if you already produce that, you won’t need to spend time or money establishing a DOAJ XML feed.

We look forward to receiving your application!

As Margit says, the platform partners really are unaffordable for small publishers. For example, one of my clients has 6 PubMed listed journals and would like to have better metadata handling. The owners spent time looking into it, but having seen the prices, they simply cannot see the business case for this. And I can’t blame them! The journals have tight margins and to pay for one of these platforms is unaffordable.