Editor’s Note: Today’s post is by Rachel Helps, the Wikipedian-in-residence at the Brigham Young University library. She was on the editorial board for the WikiJournal of Humanities from 2018 to 2020. She and her student employees create and edit Wikipedia pages related to the library’s collections.

When I tell people that my job is a “Wikipedian-in-residence,” I often follow up with an apology: “I know. It sounds made-up.”

It’s hard to believe that an academic library would have a staff member dedicated to editing Wikipedia. Academic libraries grant patrons access to specialized resources like scholarly databases, academic journals, and subject librarians. Wikipedia is the populist encyclopedia that “anyone can edit”; academic libraries are professionally curated collections for an academic audience.

While academic libraries and Wikipedia have many differences, they have a unified purpose: to promote access to information. Many Wikipedia pages summarize, cite, and curate scholarly information in an accessible format. Students, professors, judges, and junior physicians consult Wikipedia frequently. They also consult the footnotes on Wikipedia pages.

A well-written Wikipedia page cites scholarly publications and links to the cited articles so that readers can access them immediately. At the 2019 Charleston Conference keynote, Internet Archive founder Brewster Kahle claimed that 6% of Wikipedia readers click on a link in the footnotes (although another study found that the figure was closer to 0.03%). In 2016, Wikipedia was the sixth-largest referrer for DOIs, and half of those referrals successfully authenticated to access the article. External links on Wikipedia generate an estimated $7 million in revenue per month. Given that Wikipedia is so popular, it’s unsurprising that academic publishers are actively pursuing ways to promote their work there.

Scholarly publishers have reported increased traffic after giving Wikipedia editors access to their publications, and a controlled experiment on Wikipedia shows that they are right to value Wikipedia citations: works cited on Wikipedia have an outsized influence on scholarly work, particularly on literature reviews. Additionally, one research article found that open-access (OA) articles were cited more frequently than non-OA articles on Wikipedia in 2014, a finding consistent with the generally higher readership of OA articles compared to paid-access articles. All of these ideas are explained in more detail below.

Publishers want their work cited on Wikipedia: The Wikipedia Library

For-profit publishers are promoting their work on Wikipedia through a service called The Wikipedia Library, which began as the brainchild of Jake Orlowitz. He was using a free trial of HighBeam, a powerful full-text search tool owned by Gale, to find more sources for his research on a public figure. After his free trial expired, Orlowitz cold-called HighBeam to ask for free subscriptions for himself and a few of his Wikipedia-editing friends. When HighBeam gave him 1,000 free accounts, he realized that other publishers might be interested in a similar deal. Working at the Wikimedia Foundation, he was able to negotiate individual database access for selected, highly productive Wikipedia editors.

By 2015, The Wikipedia Library had distributed over 5,000 logins. In 2017, the service was still growing but suffered from usability problems: logins expired after a short time, the application process took three weeks, and there was no centralized access. In January of this year, The Wikipedia Library announced centralized access and search for its program. Productive Wikipedia editors (those with accounts older than six months and over 500 edits, including at least 10 in the last month) now get access to “over 90 of the world’s top subscription-only databases,” including ProQuest, newspapers.com, JSTOR, and Wiley. In a blog post describing its partnership with The Wikipedia Library, Wiley stated:

As Wikipedia editors begin to use their access to Wiley Online Library, we expect to see an increase in direct Wiley citations on the platform, which leads to better discovery for Wiley content. For authors and our society partners, this means better visibility for content and new audiences who might not search for scholarly work through traditional scholarly discovery tools.

Orlowitz himself said that donating access to The Wikipedia Library “costs them basically nothing […], and in return they are cited more on a tremendously popular website.” He wrote that the library’s partners “have seen incoming traffic rise as much as 200% as readers click citations on live Wikipedia articles” and that The Wikipedia Library drives “the creation of 50 thousand citations each year.”

The increased traffic to publishers from Wikipedia citations agrees with a recent study, which looked at how citations to academic articles on Wikipedia influenced citations in academic literature.

Works cited on Wikipedia have a demonstrable influence on academic research

An experiment from 2020 found that authors of scholarly articles are more likely to use language and citations that appear in Wikipedia pages than language and citations that do not. The paper, posted as a preprint on SSRN by Neil Thompson and Douglas Hanley, found causal evidence through a randomized controlled trial that Wikipedia articles influence academic articles.

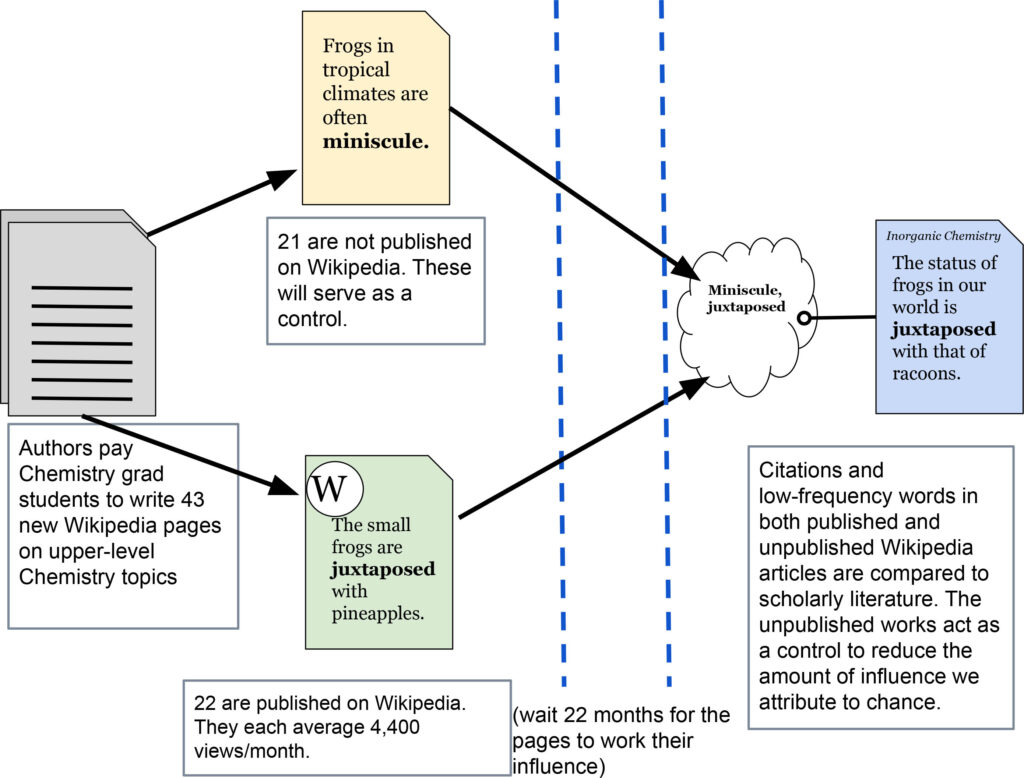

Their method was a bit unusual, so I will describe it in detail. The authors paid graduate and PhD students to write new chemistry articles for Wikipedia. They published only half of these articles on Wikipedia, deliberately withholding the other half to serve as a control. The published chemistry articles received an average of over 4,000 views per month, which is unusually high for new Wikipedia articles. The authors then compared the citations and low-frequency words in the Wikipedia articles with those in subsequent scientific literature. The Wikipedia articles were published in January 2015, and the scientific literature was examined for similarities in November 2016.

Thompson and Hanley found that “scientific articles referenced in the treatment Wikipedia articles get e^0.6490 − 1 ≈ 91% more citations than those referenced in the control Wikipedia articles, and this difference is highly significant” (pp. 31–32). The control group allowed the researchers to show a significant difference between the influence of the published and unpublished Wikipedia articles. The literature review showed the largest influence of any section of the scientific articles. This pattern suggests that authors of scholarly articles use Wikipedia articles to help them find other articles to reference in their literature reviews.
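The 91% figure comes from exponentiating a regression coefficient. Assuming, as the formula implies, a model in which citation counts enter on a log scale, a coefficient β multiplies the expected count by e^β, so the percentage change is (e^β − 1) × 100. The short Python sketch below reproduces only that arithmetic; the function name is hypothetical and this is not Thompson and Hanley’s actual model code.

```python
import math


def coefficient_to_percent_change(beta: float) -> float:
    """Convert a log-scale regression coefficient into a percentage change.

    Assumes a log-linear model: the coefficient multiplies the expected
    outcome by exp(beta), so the relative change is exp(beta) - 1.
    """
    return (math.exp(beta) - 1.0) * 100.0


# Coefficient quoted by Thompson and Hanley (pp. 31-32).
treatment_effect = coefficient_to_percent_change(0.6490)
print(f"Treatment references get about {treatment_effect:.0f}% more citations")
```

Rounding e^0.6490 − 1 to the nearest whole percent gives the 91% in the quotation.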

While their figures are impressive, I would not expect the impact of creating new upper-level chemistry pages to be the same as the impact of creating a new biography page, for instance. The authors had also commissioned new Wikipedia pages on econometrics, but since their average views were very low, they were “statistically underpowered” (p. 22) and not included in the experimental analysis. Not every content gap on Wikipedia is in demand.

The experimental design is unusual, and the authors suppressed statistically insignificant results. A researcher who studies research impact described the authors’ goal as “really tricky.” I believe that the effect size of citation influence is not generalizable to all citations on Wikipedia, but that Wikipedia articles do influence academic articles. That influence explains why publishers are eager to get their work cited on Wikipedia through The Wikipedia Library. In contrast to articles accessed through The Wikipedia Library, open-access articles are accessible to everyone on the internet. Does this increased access affect how often they are cited on Wikipedia?

OA Articles may be cited more on Wikipedia

In a study about the use of open access articles on Wikipedia published in 2017, Misha Teplitskiy, Grace Lu, and Eamon Duede studied the number of citations to OA journals on Wikipedia. To do so, they used Scopus journal data to find the 250 highest-impact journals in 26 major subject areas. This gave them a list of 4,721 unique journals, 335 of which were categorized as OA in the Directory of Open Access Journals (some journals were cross-listed across subject areas and duplicates were removed). For their citation data, they extracted all of the references in a 2014 database of Wikipedia articles that used the cite-journal template (all 300,000 of them). Using that data, they created a statistical model to predict what kind of article is likely to be cited on Wikipedia, given the qualities of the journal it was published in.

Based on their model, they predicted that “the odds that an article is referenced on Wikipedia increase by 47% if the article is published in an OA journal.” In their conclusion, they stated:

[…] open access policies have a tremendous impact on the diffusion of science to the broader general public through an intermediary like Wikipedia. This effect, previously a matter primarily of speculation, has empirical support. As millions of people search for scientific information on Wikipedia, the science they find distilled and referenced in its articles consists of a disproportionate quantity of open access science.
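The 47% figure describes a change in odds, not in probability. Odds are p / (1 − p), so multiplying the odds by 1.47 raises the probability of being cited by somewhat less than 47%, and by much less when the baseline probability is high. The Python sketch below illustrates this; the function name and the baseline probabilities are hypothetical and do not come from the study.

```python
def probability_after_odds_ratio(baseline_probability: float, odds_ratio: float) -> float:
    """Apply an odds ratio to a baseline probability and return the new probability."""
    if not 0.0 <= baseline_probability < 1.0:
        raise ValueError("baseline_probability must be in [0, 1)")
    odds = baseline_probability / (1.0 - baseline_probability)  # p -> odds
    new_odds = odds * odds_ratio                                 # scale the odds
    return new_odds / (1.0 + new_odds)                           # odds -> p


# "the odds ... increase by 47%" corresponds to an odds ratio of 1.47.
OA_ODDS_RATIO = 1.47

# Hypothetical baseline probabilities, chosen only for illustration.
for baseline in (0.01, 0.10, 0.50):
    new_probability = probability_after_odds_ratio(baseline, OA_ODDS_RATIO)
    relative_increase = new_probability / baseline - 1
    print(f"baseline {baseline:.0%} -> {new_probability:.2%} "
          f"({relative_increase:.0%} relative increase)")
```

When the baseline probability is small, a 47% increase in odds is close to a 47% increase in probability; at a 50% baseline, the same odds ratio raises the probability only to about 59.5%.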

The article discusses a really interesting part of Wikipedia: its citations. But it uses data from 2014, and the number of journal citations in its dataset seems suspiciously small. There were 4.4 million articles on Wikipedia in 2014, yet according to the authors, only 300,000 of over 4 million citations used the cite-journal template. Still, the idea that open-access articles are more likely to be cited on Wikipedia tracks with the limitations most volunteer Wikipedia editors face when editing articles. OA articles do enjoy increased readership compared to articles that are not freely accessible online. It would be interesting to see whether the selection bias for OA articles (or any article available online) in Wikipedia citations has changed now that more editors can access paid-access articles through The Wikipedia Library.

OA Publishers are adding citations

The selection bias towards citing OA publications on Wikipedia is easily explained by the fact that OA papers are more readily available to Wikipedia editors who may not be able to access other kinds of sources. But there is another contributor to this selection bias. Annual Reviews (AR), a nonprofit publisher, has hired a Wikipedian-in-residence to add citations from their “subscribe to open” journals to Wikipedia. In the original job description, AR wrote that the goal of the position is “to improve Wikipedia’s coverage of the sciences by citing expert articles from Annual Reviews’ journals.” This sentiment is echoed on the user page of Mary Mark Ockerbloom, AR’s Wikipedian-in-residence, where she states that her goal is to “integrate high-quality secondary sources in the life, biomedical, physical, and social sciences into Wikipedia articles.”

A look at Ockerbloom’s edit history shows that she’s not solely citing AR articles, but also other reliable sources in her work, which is beneficial to Wikipedia. This isn’t just out of politeness and good ethics; if Ockerbloom consistently cited only publications from a single publisher, she could be found in violation of the conflict-of-interest guideline. The conflict-of-interest noticeboard describes violations succinctly: the problem is when an editor “is using Wikipedia to promote their own interests at the expense of neutrality.”

Speaking from personal experience, I can say that having your entire Wikipedia edit history examined for bias on the conflict-of-interest noticeboard is an intensely anxiety-provoking experience. Being banned from editing would have ended my employment. Luckily, I have had the flexibility in my position to present controversial aspects of pages I’ve worked on as neutrally as I know how. When my contributions were discussed on the conflict-of-interest noticeboard, my colleagues argued on my behalf, and I’ve been able to continue my work as a Wikipedian-in-residence.

Publishers take note: you can hire someone to cite your publications on Wikipedia, which I believe is an effective way to disseminate research findings, but that person needs to cite other publications as well.

Conclusion

Wikipedia’s articles are popular stepping stones to other places on the internet. While few Wikipedia readers click on links in references, there are so many readers that even that small percentage has an effect. We can see that effect manifested in the success of The Wikipedia Library, which grants active Wikipedia editors access to academic databases in a way that is very unusual outside of academic libraries. The two studies discussed here have some serious limitations, but they point to interesting interactions between scholarly articles cited on Wikipedia and their influence on the authors of scholarly publications. If you work at an academic library or publisher, you may want to consider hiring a Wikipedian-in-residence, but they need the flexibility to balance Wikipedia’s interests with yours, or they could be found to have a conflict of interest that prevents them from editing neutrally.

Discussion

15 Thoughts on "Guest Post – Wikipedia’s Citations Are Influencing Scholars and Publishers"

Thanks Rachel for the interesting post. I must admit confusion with the Thompson and Hanley comparison of the influence of published and unpublished Wikipedia articles. What is an unpublished Wikipedia article? Same thing as a letter written but never sent? So essentially just a way of pre-identifying comparison citations?

Yes, exactly like a letter written but never sent. To take your analogy further, you would compare the low-frequency words used in sent letters and unsent letters to the recipient’s later writings. You know that the unsent letters couldn’t have influenced the recipient. They can help you know how much variation of influence you would expect by chance.

The unpublished Wikipedia articles probably stayed in a draft space on Wikipedia or in a Word document somewhere. After the study, the authors say that they published the “control” group of articles on Wikipedia, presumably in order to not waste labor.

Thanks. Glad to hear the “written but not sent” chemistry Wikipedia articles were eventually published. I wouldn’t be very happy to be deceived into writing commissioned articles as a subject matter expert, only to learn that my efforts were for a study and never intended to see the light of day. Presumably all was disclosed to study participants. Ingenious study.

It seems to me that, to avoid a conflict of interest, the publisher must be a non-profit whose mission overlaps with the mission of the Wikimedia Foundation. Flexibility to balance Wikipedia’s interests with a publisher’s interests is not sufficient. IMO, an employee automatically has a conflict of interest if the publisher is for-profit or has a mission that does not overlap with Wikimedia’s mission “to empower and engage people around the world to collect and develop educational content under a free license or in the public domain, and to disseminate it effectively and globally.”

I confess I had a knee-jerk negative reaction when I read that Annual Reviews has a Wikipedian-in-Residence. But Annual Reviews is a non-profit “dedicated to synthesizing and integrating knowledge for the progress of science and the benefit of society.” [1] To the extent Annual Reviews is successfully executing on that mission, there is little conflict of interest.

I would not extend the same judgement to non-profits with incompatible missions or for-profits in incompatible markets (which I wager are all publishing markets).

One of Wikipedia’s core values is to “assume good faith” about other editors. I believe that we should evaluate conflict-of-interest editing based on a user’s actual edits and not on the nonprofit status of their employer. But I agree with you that it is more difficult for employees of for-profit institutions to edit neutrally.

Great point about good faith. Perhaps a middle view between type of employer and employee edit history is to ask why an employer is paying an employee to edit Wikipedia. The answer to that question might reveal a conflict of interest with Wikipedia, with the employer, or neither. It depends on the employment. Some jobs entail bad faith simply by accepting the job’s responsibilities (e.g., serving as an adversary’s lawyer). I wouldn’t trust myself in some of the jobs I had in the past! 🙂

I’ve been thinking a lot about this comment. Is the idea that it is in violation of Wikipedia’s mission or a conflict of interest if one adds to or edits a Wikipedia page for any rationale other than “to empower and engage people around the world to collect and develop educational content under a free license or in the public domain, and to disseminate it effectively and globally”?

If so, I’m wondering how much of the content there stands in violation. Wikipedia is seen as an essential marketing tool in many spheres, from entertainment to business. Wikipedia is probably also broadly used in SEO strategies. What about classes where students are assigned the task of creating or editing a Wikipedia entry? If they’re doing it to complete the assignment, does that mean they’re doing it in bad faith? There’s been a laudable movement to create pages for female scientists; this clearly has some agendas behind it beyond Wikipedia’s core mission. Is that okay? What about the relatively small cadre of editors who make huge numbers of edits, often competitively to gain status on the site? Are they doing so in bad faith?

Yes, Wikipedia’s neutrality and the conflict of interest behavioral guideline are aspirational, not actual. Someone editing Wikipedia for free is not free of a conflict of interest. When I edit Wikipedia outside of my work, it’s on topics I’m a fan of. That’s why I think it’s better to judge edits based on their content rather than editors based on why they are editing or where they are employed.

I’m not a Wikipedia policy expert, but my quick take on your first question is “no”. For your hypothetical examples, I think they would be fine in the vast majority of cases, but that’s not guaranteed. Depends on the resultant actions.

Perhaps the key aspect to note here is that people can have multiple rationales for doing something, and that’s fine. Students, feminists and editors can all act in good faith to help the Wikipedia mission AND simultaneously be motivated to do that for other reasons (that are not in conflict).

Regarding marketing… I imagine marketers are having an extremely difficult time using Wikipedia as a marketing tool, at least if my first editing experience, on Wikipedia’s “Binary number” page, is typical. In less than half an hour, my edit was tagged as “possible conflict of interest”, labeled “linkspam” and promptly removed. Less than 30 minutes. On a page about binary numbers.

https://en.wikipedia.org/w/index.php?title=Binary_number&diff=332151526&oldid=332146552

I also remember getting labeled a sock puppet. But I think the labels may have been softened over the past 13 years. My edit was not even remotely for-profit. It was silly fun recreational math. Useless, though. Removing it was the right thing to do. I’ve made a few edits on more esoteric math pages since then and had no problems. But they have been small edits that correct mathematical errors.

Wikipedia also has a lot of policies about paid editing generally and how to reveal that you might have a conflict of interest with the topic. So, for example, it’s okay to be paid by Whole Foods and edit the Whole Foods article as long as you disclose that you’re paid by Whole Foods on your user page and otherwise adhere to Wikipedia guidelines. Paid editing is a topic that has often split the Wikipedia community but there are now a lot of rules about it, not just generalized ideas about whether it might or might not be okay.

Rachel’s post is packed with so many informative cases and examples. I learned so much; thank you Rachel and the folks behind this blog! As an additional informative case, I share the following event in the history of open-source, specifically the Linux kernel (an open-source project which almost all of us use without even realizing it). This event is indicative of the degree to which key leaders in open-source will judge contributions based on the institution they are associated with. I wager similar sentiments can be found with some leaders in Wikipedia and open access. The short summary is that the maintainer of the Linux kernel banned the entire University of Minnesota due to bad faith contributions motivated by the publication of one single paper. The full picture is full of subtleties. Here’s the original ban of the University:

https://lore.kernel.org/lkml/20210421130105.1226686-1-gregkh@linuxfoundation.org/

and various links from the University with more details:

https://cse.umn.edu/cs/linux-incident

Internet searches will show many posts and articles about this event.

A researcher at UMN was submitting “compromised code submissions” to the Linux kernel to see/study if anyone would notice? My mind is being blown.

Rachel, is it a possibly confounding factor in the “infrequent words” analysis illustrated in your article that one of the highlighted words is misspelled? (I mean, I’d hope that any misspelled word in a Wikipedia entry appears infrequently at best.) In my role as an editor and fact-checker, I’d be extremely reluctant to cite, link to, or rely on any article in Wikipedia or anywhere featuring a misspelled or misused relevant term, and would suggest to my client that they find another source.

This brings up an interesting related problem: the accuracy of references in peer-reviewed medical articles. For example, here is one study of this issue in general surgical journals. It certainly makes one think about “evidence-based medicine” in a somewhat different light when such errors go undetected by the editorial process:

“Accuracy of references in general surgical journals — An old problem revisited” (2008) doi.org/10.1016/S1479-666X(08)80067-4 | PMID: 18488770

The best way to appreciate how Wikipedia is nothing more than a tool of manipulation by conflicted and/or manipulative parties is to appreciate the Wiki entry for “predatory publishing”:

https://en.wikipedia.org/wiki/Predatory_publishing

An academic joke?!