Editor’s Note: Today’s post is by Minhaj Rais. Minhaj is Senior Manager, Strategy & Corporate Development, at Cactus. Minhaj collates industry insights and conducts in-depth analyses to help shape global organizational strategy and product incubation.

Ethan Mollick, Associate Professor at the Wharton School, recently concluded in conversation with the CEOs of Turnitin and GPTZero that, “There is no tool that can reliably detect ChatGPT-4/ Bing/ Bard writing. None!” Even if some of the AI-detection tools do develop the capability to detect AI writing, users don’t need sophisticated tools to pass ChatGPT detectors with flying colors — making minor changes to AI-generated text usually does the trick.

Dozens of paraphrasing tools can now rephrase entire papers within seconds, giving further ammunition to bad actors looking to plagiarize and churn out fraudulent manuscripts at a faster pace. While the challenges publishers face in battling ills such as plagiarism and paper mills grow ever more complicated, I posit that human intervention (human intelligence tasks, or HITs in MTurk parlance), coupled with the prowess of AI detection tools, could present a viable way forward.

In this article, we explore why HITs, rather than simply more AI tools built to detect the use of generative AI, could be a reliable and scalable way forward for maintaining research integrity within the scholarly record.

ChatGPT has deeply penetrated the scholarly ecosystem

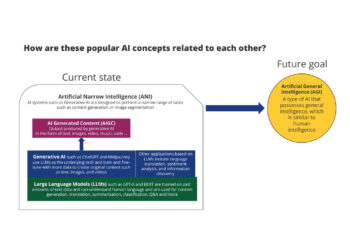

While ChatGPT continues to see unprecedented usage, stakeholders across industry verticals are both excited and worried about how it could affect businesses, education, creative (including research) output, and much more. There is a growing realization that ChatGPT is not going to be a “solve-all” tool; hallucinations, inherent biases, and the inability to assess the quality and validity of research are just some of the limitations that constrain the unchecked use of LLMs.

However, even as stakeholders within the scholarly ecosystem recognize the pitfalls of tools such as ChatGPT, its arrival has also opened doors for AI tools to have a greater impact in various domains, with domain-specific tools often perceived as more trustworthy. One outcome has been a visible uptick in AI-powered apps built for different purposes.

Surprisingly, the uptick has not been limited to apps built on the ChatGPT API; it has also extended to standalone AI-powered apps such as Paperpal, scite, and Scholarcy. For example, both Paperpal and scite now have over 350K users, and the Scholarcy graph below reflects the massive growth it has witnessed in the post-ChatGPT era. More broadly, several AI-powered products have gained wider acceptance in the wake of ChatGPT.

I'm not going to lie, I initially worried that ChatGPT would make Scholarcy obsolete. But the opposite happened. And what's great is that we have our own models and our own IP too. pic.twitter.com/kzunFX2av3

— Phil Gooch (@Phil_Gooch) May 3, 2023

Generative AI tools have added wings to paper mills

As the use of generative AI tools surges, the complexities will multiply: newer ways will emerge to quickly generate more content while evading even the best detection tools, creating challenges across industries. For now, let us focus on the challenges specific to the scholarly publishing industry.

The problems raised by paper mills and how they have managed to penetrate the scholarly record will only worsen with the advent of generative AI tools. Paper mills are now able to offer faster turnaround times, earn better margins with less human involvement, generate fake graphs and images on the fly, and paraphrase plagiarized text with greater ease. Jennifer Byrne from the University of Sydney sums it up well: “The capacity of paper mills to generate increasingly plausible raw data is just going to be skyrocketing with AI.”

Given the sophistication of paper mills, generative AI is a gift from the skies for nefarious actors! Sophisticated and coordinated operations by paper mills have resulted in harmful repercussions for a major publisher, including discontinuation of special issues and a hit to revenues, which eventually led to massive losses to the tune of hundreds of millions of dollars; generative AI only makes such bad actors more powerful. In addition, as soon as new tools are developed to detect something generated by ChatGPT, other tools are developed to beat them. It also does not help that paraphrasing tools such as QuillBot are gaining unprecedented popularity across age and user groups.

Detecting AI-generated text is going to be problematic

Trying to quantify what is and is not allowed might not be an effective way to address the burning issue of AI-generated text, given the explosive growth in AI-powered tools. While industry bodies such as the STM Integrity Hub are working to provide a cloud-based environment for publishers to check submitted articles for research integrity issues, it is going to be an uphill task as bad actors within the publishing ecosystem up their game too. Researchers have established that none of the AI-generated text detectors are foolproof, that all of them are vulnerable to workarounds, and that they are unlikely ever to be as reliable as we’d like them to be.

Not all researchers understand the pitfalls of generative AI tools

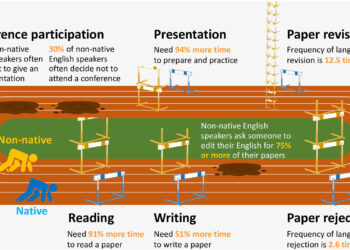

Moreover, while some bad actors employ generative AI to create and submit fake and unsubstantiated content as genuine, we also need to acknowledge that many users might be victims of unintended consequences. Elsevier, for example, states that, “where authors use AI and AI-assisted technologies in the writing process, these technologies should only be used to improve readability and language of the work and not to replace key authoring tasks such as producing scientific, pedagogic, or medical insights, drawing scientific conclusions, or providing clinical recommendations.”

But what if a non-native English-speaking author uses ChatGPT only to edit their paper, which is then flagged for AI-generated content? They could not deny that they used ChatGPT, yet they harbored no malicious intent and had opted for ChatGPT only as a cheaper means of fixing language issues in their manuscript. How does one contest an AI tool that has flagged one’s content as AI generated?

Another example is researchers falling prey to hallucinations. Concerns about ChatGPT hallucinations are growing, and some researchers worry that they are not fixable, yet others are not aware that they need to double-check citations and end up blindly trusting content generated by AI tools such as ChatGPT. Consider an early career researcher who is struggling to complete a literature review on time and, lacking knowledge of the right reference tools, turns to ChatGPT on the assumption that it is a free and powerful tool that can assist with their research.

How many of ChatGPT’s purported 600M+ users have a good understanding of the tool’s pitfalls is anyone’s guess. While we have discussed just a few examples above, generative AI tools are certain to further complicate the challenges faced by the scholarly publishing community.

HITs might present a viable and scalable alternative

So, how can we navigate the challenges presented by the emergence of generative AI tools? The accuracy and reliability of AI tools will improve over time, but that progress will depend heavily on human intervention to train these AI and ML tools and to encourage their ethical use. Using AI tools to detect AI content might not be the best way to tackle current challenges, especially given the biases introduced by faulty or biased training data. While the jury is still out on whether humans can do a better job of detecting paper mill output, an integrated approach might be the best alternative.

Some industry players, including Cactus, have begun offering AI solutions complemented by human intervention at the right stage and in the right measure, avoiding obvious pitfalls while retaining the scalability of AI-powered tools. These solutions give publishers much-needed specialized human intelligence to help them uphold research integrity while keeping their operations scalable.

How can HITs be deployed to safeguard research integrity?

Solutions such as IntegrityGUARD, offered by Cactus, integrate human subject-matter experts (SMEs) into publishing workflows and can complement the STM Integrity Hub or tools such as Paperpal Preflight, so that publishers get the best of both worlds: the scalability of AI tools coupled with discerning, trained human eyes.

Employing SMEs for tasks such as verifying author profiles and institutional affiliations, checking for tortured phrases (typical signs of paper mill submissions), vetting recommended reviewers, and exploring potential conflicts of interest helps make up for the limitations and, more importantly, the biases of AI-powered tools. The key point is that there are telltale signs and patterns that a trained human eye can spot better than a machine.
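To make the tortured-phrase check more concrete, here is a minimal Python sketch of how an automated pass might surface candidate phrases for an SME to review, rather than reject a paper outright. Tortured phrases are odd synonym substitutions for established terms, such as “counterfeit consciousness” for “artificial intelligence”. The three-entry dictionary below is an illustrative sample only, not an official or complete list, and the names `TORTURED_PHRASES` and `find_tortured_phrases` are hypothetical, not part of IntegrityGUARD, the STM Integrity Hub, or any other product.

```python
import re
from dataclasses import dataclass

# Hypothetical, illustrative sample: tortured phrase -> the established term
# it most likely replaced. A real screener would rely on a much larger,
# curated list maintained by integrity specialists.
TORTURED_PHRASES = {
    "counterfeit consciousness": "artificial intelligence",
    "profound learning": "deep learning",
    "colossal information": "big data",
}


@dataclass
class Flag:
    phrase: str    # tortured phrase found in the manuscript
    expected: str  # standard term it most likely replaced
    position: int  # character offset of the match in the text


def find_tortured_phrases(text: str) -> list[Flag]:
    """Return every occurrence of a known tortured phrase, case-insensitively.

    The output is a worklist for a human reviewer, not a verdict on the paper.
    """
    flags = []
    for phrase, expected in TORTURED_PHRASES.items():
        # \b word boundaries avoid matching inside longer words
        pattern = re.compile(r"\b" + re.escape(phrase) + r"\b", re.IGNORECASE)
        for match in pattern.finditer(text):
            flags.append(Flag(phrase, expected, match.start()))
    # Report matches in reading order so the reviewer can follow the text
    return sorted(flags, key=lambda f: f.position)


if __name__ == "__main__":
    sample = ("We apply Profound Learning to colossal information "
              "to study counterfeit consciousness.")
    for flag in find_tortured_phrases(sample):
        print(f"{flag.position}: '{flag.phrase}' (expected: '{flag.expected}')")
```

The design choice mirrors the argument above: the code only finds and locates suspicious wording at scale, while deciding whether a match reflects a paper mill, a clumsy translation, or a legitimate usage is left to a trained reviewer.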

The fact that such tasks still need human intervention to deliver reliable and unbiased results establishes the use case for HITs. Although many publishers are still keen on fully automated solutions, solutions supported by HITs are more likely to be the means to achieve their end goals. Ensuring and protecting research integrity requires attributes such as creativity, critical thinking, careful verification, emotional intelligence, and complex problem-solving skills, most of which are still not easily replicated by machines in complex settings, such as making unbiased publishing decisions.

Challenges in deploying HITs and potential solutions

While HIT-backed research integrity solutions might seem like a silver lining, they come with various challenges: scalability (manually verifying the millions of papers submitted each year would be impractical and would slow down an already arduous publishing process), the availability of SME talent, and the additional cost burden on publishers and/or researchers.

However, most of these challenges can be overcome with an optimal mix of HITs and AI-powered tools: AI-powered tools can be the default scalable solution, while HIT-backed solutions are deployed to verify flagged papers before any binding editorial decision on rejections or retractions. Moreover, these deployments can also be used to train and refine AI tools to ensure fairer decision-making. The key to this approach is viewing the deployment of HITs through the lens of augmenting the reliability of AI tools.
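The division of labor described above can be sketched as a simple triage loop: an automated check screens every submission, only flagged submissions reach a human reviewer, and each human verdict is kept both as the outcome and as a labeled example that could later be used to refine the detector. This is a minimal Python sketch under stated assumptions: the `ai_score` detector, the `human_review` callback, and the 0.8 threshold are hypothetical placeholders, not features of IntegrityGUARD, the STM Integrity Hub, or any other product.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Submission:
    manuscript_id: str
    text: str


@dataclass
class TriageResult:
    cleared: list[str] = field(default_factory=list)           # passed the automated screen
    human_confirmed: list[str] = field(default_factory=list)   # flagged, issue confirmed by an SME
    human_overturned: list[str] = field(default_factory=list)  # flagged, cleared by an SME
    # (text, issue_confirmed) pairs that could feed back into detector training
    training_labels: list[tuple[str, bool]] = field(default_factory=list)


def triage(
    submissions: list[Submission],
    ai_score: Callable[[str], float],             # hypothetical detector: 0.0 clean .. 1.0 suspect
    human_review: Callable[[Submission], bool],   # hypothetical SME check: True = issue confirmed
    threshold: float = 0.8,                       # assumed cut-off; would need tuning in practice
) -> TriageResult:
    result = TriageResult()
    for sub in submissions:
        # Step 1: the AI tool is the default, scalable screen for every paper
        if ai_score(sub.text) < threshold:
            result.cleared.append(sub.manuscript_id)
            continue
        # Step 2: only flagged papers go to a human before any binding decision
        confirmed = human_review(sub)
        if confirmed:
            result.human_confirmed.append(sub.manuscript_id)
        else:
            result.human_overturned.append(sub.manuscript_id)
        # Step 3: the human verdict doubles as a label for refining the detector
        result.training_labels.append((sub.text, confirmed))
    return result


if __name__ == "__main__":
    papers = [
        Submission("MS-001", "A carefully written study of protein folding."),
        Submission("MS-002", "We apply counterfeit consciousness to colossal information."),
    ]

    # Toy stand-ins for a trained detector and an SME's judgement
    def toy_score(text: str) -> float:
        return 0.95 if "counterfeit consciousness" in text else 0.1

    def toy_reviewer(sub: Submission) -> bool:
        return True

    print(triage(papers, toy_score, toy_reviewer))
```

Keeping the detector and the reviewer as interchangeable callables reflects the point that HITs augment rather than replace AI tools: the human step is invoked only for the flagged fraction, which is what keeps the approach scalable, and the overturned cases are exactly the examples a detector would most need to learn from.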

This leaves the sticky issue of who foots the bill for such an endeavor. Ideally, the gatekeepers responsible for ensuring and maintaining research integrity (read: publishers) should pay. Indeed, although research integrity issues damage the reputations not only of publishers but also of institutions and researchers, publishers seem to bear the largest share and the direct financial brunt of such issues. That said, given the complexities around reduced margins in the APC regime and APCs already being perceived as too expensive, the answer may not be so simple and might vary depending on the publishing model in question.

On a related note, it may also be time for publishers to consider recalibrating their talent to complement AI-enabled workflows. As many AI experts say, the key is using generative AI to augment, not automate, human creativity and democratize innovation.

Hence, while there is no doubt that generative AI is here to stay, the need of the hour is probably to complement AI tools with human intelligence to our advantage. The earlier players within the scholarly publishing industry realize this, the better for the integrity of the scholarly record.

Discussion

1 Thought on "Guest Post — Are HIT-backed AI Research Integrity Solutions the Need of the Hour?"

A timely post, in light of so many commentators talking about generative AI tools replacing humans: better to understand what these tools can do and how to make the most effective use of them.