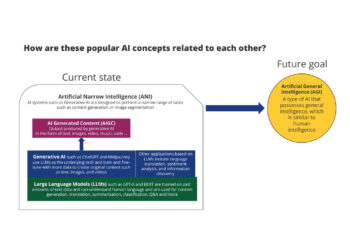

Generative AI—and its poster child, ChatGPT — is one of 2023’s most buzzworthy topics. It has been at the center of discussion and controversy, from a group of prominent computer scientists and other tech industry notables calling for a 6-month pause to consider the risks, to Emma Watkins’ “(Slightly) Hopeful View” here on The Scholarly Kitchen.

I attribute ChatGPT’s success to four important things it has done correctly.

- Tech: Its creators chose the right technology, and it was trained to align with human values and better understand human needs.

- Engineering: From the beginning, it was designed to be scalable and extensible for real applications, not as a research prototype.

- Product: OpenAI first built the super app ChatGPT, then moved to an ecosystem platform with ChatGPT API and plugins.

- Business: OpenAI collaborated with Microsoft to get funding and resources to target the B2B market, compete with Google, and cover the B2C market.

Since the launch of ChatGPT, my team and I have been internally evaluating the potential applications of large language models (LLMs) in scholarly publishing and the impact and opportunities they can bring.

My group within Wiley Partner Solutions designs and develops intelligent services that leverage advanced AI, big data, and cloud technologies to support publishers and researchers in open access and open science environments. To identify both benefits and risks of generative AI for our industry, we tested ChatGPT and Google Bard for authoring, for submission and reviews, for publishing, and for discovery and dissemination. I hope that our findings will inspire you to find fresh ideas for using Generative AI, and will stimulate further conversation about this new and controversial but potentially beneficial tool.

The content we used in the experiments described below include content created by the team, OA content, or publicly accessible titles, abstracts, or other metadata.

Generative AI (LLMs) for Authoring

We applied ChatGPT and Google Bard to four stages in the authoring process: enhancing writing quality and readability; generating abstracts and titles; suggesting relevant journals; and generating topic suggestions.

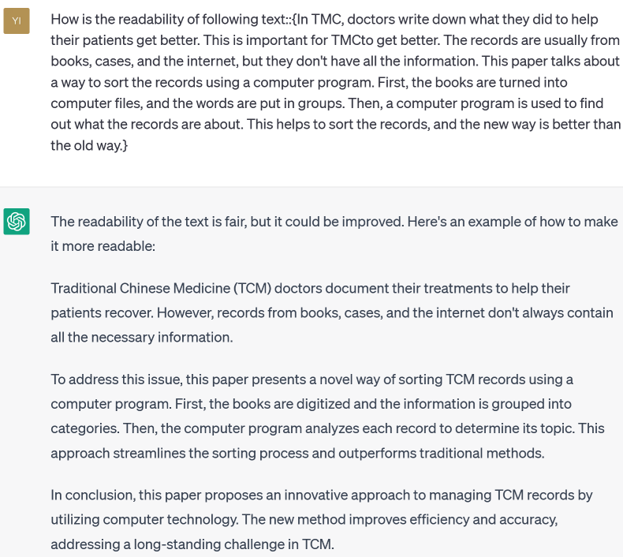

Checking readability and writing quality and suggesting improvements are common applications of LLMs.

When we applied ChatGPT to manuscripts, we found that it can:

- Evaluate the writing quality and give reasonable feedback: In our exercise, ChatGPT’s response was, “the readability is fair but could be improved.” This feedback concurs with a human opinion.

- Understand specific terms, even in abbreviated form, and correct them: We deliberately used an incorrect abbreviation for traditional Chinese medicine, “TMC”; ChatGPT interpreted the context and corrected the abbreviation to “TCM.”

- Rewrite the manuscript with better sentence structure and vocabulary to improve readability: In our example, we found ChatGPT improved readability, as shown below.

Bard has similar capabilities and can also automatically calculate a readability score. However, it is not as good at rewriting as ChatGPT.

To test the ability to generate abstracts and titles, I used ChatGPT to generate a title for another Scholarly Kitchenpost, “The Intelligence Revolution: What’s Happening and What’s to Come in Generative AI.” I was satisfied with the suggested title, but it was ultimately changed in editing. ChatGPT also generated an abstract based on the full text of that post, which I found accurate and useful. ChatGPT was able to provide logical explanations for the abstract and title.

Next, we tried out some AI tools’ ability to suggest appropriate journals for a manuscript — one of the most popular AI applications in scholarly publishing. Using a paper titled “Neoadjuvant and Adjuvant Chemotherapy for Locally Advanced Bladder Carcinoma: Development of Novel Bladder Preservation Approach, Osaka Medical College Regimen,” we compared suggestions from ChatGPT with Wiley’s Journal Finder to see which tool offered the best results.

ChatGPT suggested four journals:

- Journal of Clinical Oncology

- The Lancet Oncology

- Breast Cancer Research and Treatment

- Cancer

Wiley’s Journal Finder suggested three:

- European Journal of Cancer

- The New England Journal of Medicine

- NPJ breast cancer

Like ChatGPT, Bard recommended relevant top-tier journals, but only the dedicated Journal Finder gave the correct answer: the paper was published in The New England Journal of Medicine. Most journal publishers offer a journal finder. Compare ChatGPT and Bard to your favorite!

We then asked ChatGPT and Bard to suggest research topics, using this prompt:

“I am a PHD student and focus on NLP area. I am really interested in large language models currently. Can you suggest a good topic for PhD research and paper writing” and “I am a journal editor in computer science, I would like to create a new journal or special issue, please suggest some important and popular topics for the new journal and special issue to have enough submission to make the journal or special issue successful.”

ChatGPT suggested a good topic and detailed research objectives. It also provided a general framework and methodology to help students approach the topic.

Bard gave more emerging and up-to-date ideas, but with less detail.

Generative AI (LLMs) for Submission and Review

At the submission and review stage, many tasks may benefit from generative AI help. We tested five of them: extracting metadata; screening novelty, relevance, and accuracy; reference quality analysis; reviewer suggestion; and detecting personal identifiable information.

To evaluate the ability to extract metadata from unstructured text, we used this prompt:

“I have the following text which contains title, author information. Can you extract the metadata information from the text?”

ChatGPT was able to successfully identify the title, authors, author affiliation, and contact information, but it could not recognize or disambiguate author and institution, nor could it link text to publicly available databases such as ORCID. The most serious problem was that when we asked ChatGPT to find the authors of a given paper, it instead generated and returned fake names.

Fake names returned by ChatGPT:

Yingying Zhang, Desen Zhou, Siqin Chen, Shenghua Gao, and Yi Ma

Correct author names for the manuscript in question:

Zhi-Qi Cheng, Jun-Xiu Li, Qi Dai, Xiao Wu, Jun-Yan He, Alexander G. Gauptmann

Bard can return the author’s ORCID, but it sometimes generated a fake ORCID.

In an exercise to see how well ChatGPT can screen papers based on predefined criteria and identify the most relevant papers for specific journals, we provided four OA papers from nanotechnology and materials science; electrical engineering and applied physics; robotics and mechanical engineering; and AI and neuromorphic computing. ChatGPT correctly identified the most relevant paper for each journal and identified research fields, article types, and which articles were more novel. But it had trouble understanding complex scientific details. For example, it incorrectly suggested that one materials science paper was more relevant to AI than to the materials science field.

Based on these two exercises, it appears that, currently, ChatGPT could be useful to an editorial assistant running initial screenings. How useful depends on whether and how scientific publication data is used to train or fine-tune AI models.

References are important metadata for both publishers and readers. We asked ChatGPT and Bard to detect self-citations; to identify retracted, questionable, or outdated references; and to measure topic variance.

ChatGPT cannot accurately identify self-citations, especially when non-English names are involved. Here Bard did a much better job: it was able to find self-citations, identify outdated references, and check the topic variance, as well as giving a breakdown of references by topic. It also pointed out a questionable reference that has been criticized for lack of rigor and flagged outdated info from several sources, but was not able to show the evidence. Neither ChatGPT nor Bard could detect retracted reference in the experiment. Our in-house reference quality analysis tools can accurately answer these four questions with evidence.

In most cases, publishers rely on the networks and knowledge of editors and authors to find qualified reviewers. We asked ChatGPT, Bard, and our own review finder to suggest relevant reviewers for a title and paper abstract. In both cases, the results contained serious issues: ChatGPT often suggested non-existent reviewers, and Bard sometimes returned the authors of the papers as suggested reviewers. Wiley’s dedicated reviewer suggestion services give more reliable results with richer information.

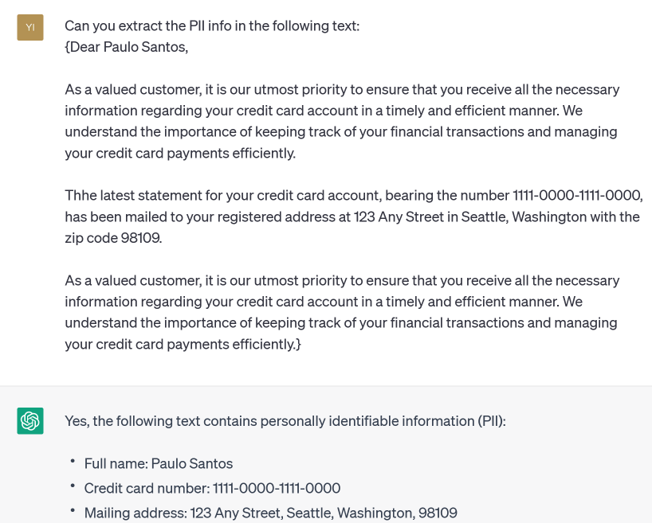

Our last experiment in this category used ChatGPT to detect personal identifiable information (PII) that could be sensitive and should be flagged before publishing. We asked, “Can you extract the PII info in the following text?”ChatGPT not only understood the meaning of PII but also extracted the PII correctly:

Bard was not able to detect PII.

Generative AI (LLMs) for Publishing

ChatGPT may help enrich content at the publishing stage. However, it currently cannot tag content based on a customer-specific taxonomy, and it often generates fake tags or IDs.

To look at content summarization, we compared ChatGPT to an extractive abstract generated by our Intelligent Services Group. The extractive abstract pulls out important, detailed information from the original text, but it is not very readable; we found that the ChatGPT abstract was highly readable but contained less detailed information. Based on these findings, we used extractive abstracts and ChatGPT together, allowing ChatGPT to generate a new abstractfrom the extractive one. The new abstract maintained both detail and good readability, as well as circumventing limitations on input length and reducing the risk of false information. ChatGPT generally produced a better and more fluent summary than Bard, but was also much more expensive, in commercial applications, and generated less detail.

Funding information is important and valuable to publications, especially for OA articles. We asked ChatGPT and Bard to extract funders and corresponding grant IDs, and to link funder registration in Crossref. We found that ChatGPT can extract funders and grant information accurately, but cannot access the Crossref funder database; Bard missed four funders and created a fake Crossref ID.

In our tests of auto-translation between English and Chinese, the version of ChatGPT we tested, which was based on the GPT-3.5 model, did not perform as well on biomedical abstracts as commercial tools like Google and DeepL, but did produce good results for spoken language. However, other researchers have found that the new ChatGPT based on the GPT-4 model performs just as well as commercial translation tools.

Generative AI (LLMs) for Discovery and Dissemination

In the crucial dissemination and discovery steps of scholarly publishing, AI is significantly enhancing the quality of search results while also introducing new ways of discovering information.

In evaluating ChatGPT, Microsoft Bing, and Google Bard, we asked, “What is the latest study progress about large pre-trained language models?”

We found that:

- Bard is faster, provides more detailed answers, and can generate search queries.

- Both Bard and ChatGPT limit their answers to their own data. While Microsoft Bing is slower and produces shorter responses, it generates its results based on web search and provides links to real, recent related articles.

- For queries related to scholarly research, Bing gives better results than either Bard or ChatGPT.

- ChatGPT is more intelligent and better understands questions and tasks, but has limited knowledge of world events since 2021.

- ChatGPT supports both English and Chinese input, while Bard currently supports English only. On Microsoft Bing, the English search is better than the Chinese search.

To generate personalized recommendations, we used the following prompt for ChatGPT:

“I am a research scientist in NLP area. I am currently interested in large language models like GPT-3. Can you recommend some relative papers about this area?”

ChatGPT recommended five papers published before 2021.

We then asked, “Can you recommend some latest papers?” ChatGPT suggested five more papers:

- “GShard: Scaling Giant Models with Conditional Computation and Automatic Sharding” (2021)

- “Simplifying Pre-training of Large Language Models with Multiple Causal Language Modelling” (2021)

- “AdaM: Adapters for Modular Language Modeling” (2021)

- “Hierarchical Transformers for Long Document Processing” (2021)

- “Scaling Up Sparse Features with Mega-Learning” (2022)

In this example, although the prompt only asked ChatGPT to recommend the latest papers, it understood what topics we were looking for based on the previous conversation. But there is some danger here! If you try to find any of these articles online, you will discover that the papers ChatGPT suggested do not in fact exist. You can also see that because ChatGPT’s model includes no data after 2021, its non-existent suggestions are much less recent than we would expect. Based on these experiments, it is clear that ChatGPT can anticipate human intention and generate human-like text very well, but has some crucial limitations.

It is important to recognize that AI governance is far behind AI capabilities. Research to ensure that AI aligns with and is governed by human goals and values, and is compliant with applicable laws, is massively lagging when it comes to progressing the capabilities of AI. There are many risks in content generation, dissemination, and consumption. AI TRiSM (trust, risk, and security management) are becoming increasingly critical. The legal and ethical issues related to AI-generated content also need to be taken seriously, including AI-generated content infringing on human creators’ intellectual property or privacy rights.

I hope that our exploratory work brings insight and fosters discussion and further consideration of how generative AI could be used in scholarly publishing. I encourage you to do your own evaluations of ChatGPT, Google Bard, and similar tools.

A special thank you to Sylvia Izzo Hunter for her editorial assistance on this post. Sylvia is Manager, Product Marketing, Community & Content, at Wiley Partner Solutions; previously she was marketing manager at Inera and community manager at Atypon, following a 20-year career in scholarly journal and ebook publishing.

Discussion

9 Thoughts on "Generative AI, ChatGPT, and Google Bard: Evaluating the Impact and Opportunities for Scholarly Publishing"

Great! I suggest that you try the latest version of Bing from Microsoft as well.

This is a great post, and Hong, you and your team should be commended for your proactive stance in applying generative to these areas within scientific publishing. That being said, readers should be reminded that neither ChatGPT nor Bard, or askPi for that matter, or any of the consumer facing tools were designed for scientific data analysis. While they are useful for evaluating a proof of principle, if we are seeking to apply GenAI to speed time to publication and decision, it will likely be with customized applications and purpose built models. In the future, it will also be great to see approaches that look to innovate other aspects of the publication process utilizing generative, such as peer review and enabling readers to interact with underlying data assets post-publication.

Fantastic work! So Kudos to you and your team Hong. From the user perspective (both academic and editorial), this all sounds really promising and is very exciting to see. From a developer perspective, if the use cases are good enough, I think it becomes worthwhile to invest in risk mitigation strategies, very much the same way OpenAI are doing with their Red Team (https://www.ft.com/content/0876687a-f8b7-4b39-b513-5fee942831e8). It’s certainly looking that way. Good luck! And thanks for sharing.

Great post! You have shown how generative AI can be used effectively for scholarly publishing. Also, you have shown the limitations of the tools discussed, which I think will be an eye-opener for anyone using these tools. Thanks for sharing!

Fantastic Work!!!

The fact that Bard was not able to conceptualize PII is darkly funny.

Thanks for the comment. Our goal is to share the opportunities and impact in which GAI could help at different stages of scholarly publishing but not to focus on conclusion and results from the experiment. The LLMs/GAI have grown very quickly. This info is easily becoming outdated.

The fact only refers to the moment at which we run the experiment :).At the time we tested it two months ago, Bard said it’s just a language model, and cannot detect such info. Now it indeed works.

Thanks for the comment. Our goal is to share the opportunities and impact in which GAI could help at different stages of scholarly publishing but not to focus on conclusion and results from the experiment. The LLMs/GAI have grown very quickly. This info is easily becoming outdated.

The fact only refers to the moment at which we run the experiment :).At the time we tested it two months ago, Bard said it’s just a language model, and cannot detect such info. Now it indeed works.

Apologies for what may be a question reflecting my unfamiliarity with generative AI. What is the reason these products provide fake results, rather than indicating “no results” or something similar? It seems like this adds more work to identify/remove the fakes, instead of simply pointing to areas where a human should focus their attention. Thank you!