Editor’s Note: Today’s post is by Mary Miskin. Mary is Operations Director for Charlesworth, an Enago company, as well as a Board Director for the International Society of Managing and Technical Editors (ISMTE).

In December 2020, the Chinese Academy of Sciences released the first version of its journal Early Warning List. Three years on, Prof. Dr. Liying Yang, Director of the Scientometrics and Research Assessment Unit at the National Science Library, Chinese Academy of Sciences, who manages the Early Warning List, will host a research integrity conference in China, bringing together experts from around the world. In advance of the event, Mary Miskin caught up with Prof. Dr. Liying Yang.

Please can you tell us about yourself and your career?

In 2007, I gained my PhD in scientometrics from the National Science Library (NSLC) at the Chinese Academy of Sciences (CAS), which is where I currently work. Scientometric study uses publication data to describe research activities. After completing my PhD, I continued to work at NSLC. In 2014, I moved to Germany to undertake a postdoctoral position at the Fraunhofer Institute for Systems and Innovation Research, and I maintain a research fellowship there.

In 2016, I was involved in the launch of a new journal, the Journal of Data and Information Science, an English-language journal focused on scientometrics and indexed in the Web of Science (WoS, Emerging Sources Citation Index) and Scopus. I am now Co-Editor-in-Chief for this journal.

My work within the NSLC has been primarily within the Scientometrics and Research Assessment Unit.

Can you tell us about the work of the Scientometrics and Research Assessment Unit?

Sure! First, let me give some context to where the unit sits within the wider NSLC and CAS, as people often wonder why a library would have such a department! The NSLC is a central library that belongs to CAS. CAS is the largest national organization in China and includes over 100 research institutes and two universities. The research of CAS covers natural sciences, interdisciplinary fields, and high-tech fields. NSLC provides information resources, promotes research progress, and supports policymaking for science and technology development in CAS and in China. NSLC is therefore not a traditional library; it serves various purposes beyond providing resources to researchers. My department is one of many units within NSLC.

My unit uses data to describe research activities and conduct research assessments. Our work is wide ranging, covering studies on scientometric indicators, analysis of scientific fields, evaluation of research performance, and support for research policies. We provide data and statistics to the Ministry of Science and Technology (MOST), National Natural Science Foundation (NSFC), and CAS. In addition, we monitor and investigate the evolution of scientific research in China based on publication data, patent data, etc. Internationally, we are best known for the CAS Journal Ranking and the Early Warning Journal List (EWL).

What is the CAS Journal Ranking?

The CAS Journal Ranking is an academic impact ranking list for the journals indexed by WoS. It was first released by my unit in 2003, has become widely accepted within the Chinese research community, and has been officially recognized by more than 500 research universities nationally. Twenty years ago, when the CAS Journal Ranking was being developed, most Chinese researchers were not familiar with international journals and there was limited information on how international journals differed in impact. This made it difficult to choose where to submit manuscripts for publication. The CAS Journal Ranking gave Chinese researchers a useful way to select where to publish their research. This is primarily how the ranking was utilized for the first 10 years. However, the ranking now has a secondary use in research performance evaluation at a university level. We promote its use at an institutional level, but we do not advocate its use in the evaluation and promotion of individual researchers. For the assessment of individual researchers, it is better to integrate the results from peer review. In recent years, my colleagues have interviewed many Chinese universities and worked to provide guidance on using the CAS Journal Ranking for research performance evaluation, aiming to demonstrate best practice for individual and institutional assessments.

What is the methodology behind the CAS Journal Ranking? Do other national lists and journal analytics feed into your journal ranking?

The CAS Journal Ranking is based solely on data; we do not utilize peer review feedback in this ranking. The data used are taken mostly from WoS, and the journals in our ranking are almost the same as those on the WoS list; however, we also include English-language Chinese native journals, as we want to encourage the top-performing English-language journals within China. The methodology of our journal ranking is distinct from that of the Journal Citation Reports (JCR) released by Clarivate. The CAS Journal Ranking was first released in 2003 and Clarivate released the first JCR quartile ranking in 2007. When we developed the CAS Journal Ranking, we considered a crucial point: the distribution that occurs naturally in society, that is, the 80:20 rule (also known as the Pareto distribution). This rule or logic is reasonable in the distribution of journal impact: the top level has greater value than others, so we give higher weighting to top-level journals.

We divide all journals from a specific field into four tiers. The number of journals in each tier is not equal but is close to the shape of a pyramid. For example:

| Tiers (1 = highest) | Number of journals as a percentage of the total |
| --- | --- |
| 1 | 5%–10% |
| 2 | 11%–20% |
| 3 | 20%–50% |
| 4 | 51% |
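For readers who want to see the pyramid idea in concrete terms, here is a minimal Python sketch. It is not the CAS implementation. The function name `assign_tiers`, the input scores, and the cumulative cutoffs (top 5%, 20%, and 50% of journals, ranked best first) are all assumptions, chosen as one possible reading of the ranges in the table above.

```python
from typing import Dict, Sequence

# Hypothetical cumulative cutoffs, in percent of journals ranked best first.
# These are illustrative assumptions, not the published CAS thresholds.
ASSUMED_CUTOFFS_PCT: Sequence[int] = (5, 20, 50)


def assign_tiers(
    scores: Dict[str, float],
    cutoffs_pct: Sequence[int] = ASSUMED_CUTOFFS_PCT,
) -> Dict[str, int]:
    """Map each journal in one field to a tier from 1 (highest) to 4."""
    # Rank journals from highest to lowest impact score.
    ranked = sorted(scores, key=scores.get, reverse=True)
    total = len(ranked)
    tiers: Dict[str, int] = {}
    for position, journal in enumerate(ranked, start=1):
        # Integer arithmetic avoids floating-point edge cases at the cutoffs:
        # a journal moves down one tier for every cutoff its rank exceeds.
        passed = sum(position * 100 > pct * total for pct in cutoffs_pct)
        tiers[journal] = 1 + passed
    return tiers


if __name__ == "__main__":
    # Twenty made-up journals with made-up scores, for illustration only.
    example_scores = {f"Journal {i}": 100.0 - i for i in range(20)}
    for name, tier in assign_tiers(example_scores).items():
        print(name, "-> tier", tier)
```

With these assumed cutoffs, each tier is larger than the one above it, which gives the pyramid shape described above.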

Another important aspect of CAS Journal Ranking is the novel indicator, the Journal Success Index. CAS Journal Ranking previously utilized Journal Impact Factor (JIF) as the indicator to measure journal impact. Journal Success Index was introduced in 2020 to provide a more robust indicator that avoided the disadvantages of a mean indicator such as JIF. Additional measures introduced by CAS Journal Ranking include a more refined classification system at the paper level (to replace the journal-level field classification system) to better reveal journal impact.

In addition to the CAS Journal Ranking, your unit also releases the EWL. What was the background behind the EWL? What problem were you trying to solve?

The Chinese government had issued many policies to improve the situation of research integrity, and measures were needed to implement these policies. The EWL aimed to put such policies into practice. Without the list, we had many policies, but the situation had not improved significantly.

The EWL reminds journals to coordinate with the research community to improve research integrity. If a journal has poor quality control, as demonstrated by inclusion in the EWL, it will not attract Chinese authors, as the list is highly influential among Chinese researchers choosing where to submit their work.

The journal Science has published a study showing the impact of the EWL and other tools on papermill publications and demonstrating improvement in the integrity of these publications.

How does the CAS EWL differentiate itself from other international lists such as Beall’s?

Beall’s list is different as it is for predatory journals. Chinese researchers focus on publication in WoS or Scopus journals, and as most predatory journals from Beall’s list are not indexed by WoS or Scopus, I don’t think Beall’s list is effective in China. For us, we needed a list that identified problematic journals that attract Chinese authorship. The EWL aims to solve problems faced by the Chinese research community.

There are three different categories in the EWL. Please can you explain the differences between these?

The three levels reflect very different perspectives.

The highest level focuses on papermill publication and draws on information from the research community’s social media, for example, For Better Science and PubPeer, and from websites of the Chinese government. Colleagues from my unit investigate and collect information from international and Chinese social media on a daily basis.

Do you include data from Retraction Watch to inform your highest level?

Retraction Watch is a useful platform where stakeholders can find collected information on retractions. However, because it takes time for publishers to investigate and make the final retraction decision, there is a lag. Since the aim of the EWL is early warning, we don’t include data from Retraction Watch in our highest level.

The lowest level focuses on journals where the impact has decreased sharply within a couple of years. This could be an indicator of a publisher seeking a sharp increase in output without maintaining required quality standards.

The middle level is the most difficult to explain. It is a compound perspective looking at whether journals have:

- An unreasonable article processing charge (APC)

- A very high percentage of Chinese authorship, but relatively low impact

To inform the inclusion of journals in this level, we investigate the journals within the Chinese researcher community, particularly to understand their experience within the peer review process. Some journals may have no peer review process, particularly fully Gold open access (OA) titles, or they may show other indicators of a lack of quality control. We also ask publishers for their information on peer review processes.

We have a WeChat channel with over 1.3 million followers, and we gather a lot of Chinese research community feedback here. Also, we have many connections with top Chinese scientists from different fields who provide input.

When reviewing the list, would you expect a user to assign different levels of importance to the low, medium, and high levels?

Most universities do not distinguish between the different levels; they give them equal weighting and avoid submitting to any journal at any level. We consider the high- and medium-level journals to be more problematic than the lower-level journals.

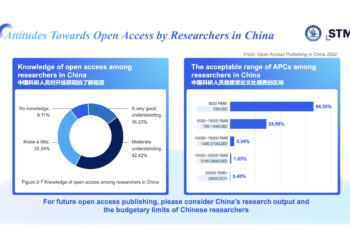

A journal can be listed for having an unreasonable APC. What constitutes a reasonable APC?

We have a formula that we use to calculate a reasonable APC. A reasonable APC is not judged on its absolute value alone; it takes the rejection rate into account. It is reasonable for a journal with a higher rejection rate to have a higher APC.

How does the EWL team work with publishers when formulating and reviewing the EWL?

After the EWL was first released on 31st December 2020, the EWL team received lots of feedback from international publishers. We appreciated and valued this feedback, some of which was very constructive and inspiring, so we started to establish a feedback mechanism. Before we release the EWL, we now send the preliminary journal list with related statistics to the publishers involved in the list, meaning the final decision integrates feedback from the publisher and the Chinese research community.

In recent years, the Chinese research community and administrations have encountered various challenges, including academic misconduct and the quality of scientific research. Also, in developing countries such as China, APCs are related to the effective use of research funds. These challenges need to be faced together with publishers.

We appreciate the tremendous contribution that international publishers have made to the advancement of Chinese research. Through the EWL, we aim to remind publishers to strengthen quality control while also focusing on issues that the Chinese research community must confront. We want to avoid situations where a small number of problematic journals could lead to more serious consequences, such as a comprehensive ban on a group of journals that takes in more titles than those known to be problematic.

The list was 65 titles and is now 28 titles. Do you foresee this number dropping in the future?

Each year the data are recalculated. For each publisher, if they only have a few titles listed, we share our data and discuss the specific problems of individual journals. What the publisher does with that data and the feedback that we receive from them are used in combination to determine the final results. Sometimes the feedback from publishers is effective in having journals removed from the list. For example, we had a journal that was listed because the impact had decreased sharply; the publisher advised us that a journal merge had markedly increased output as there was a high backlog of acceptances that needed to be published, thus this journal will not be included in the list in the following year.

I don’t know if the number will decrease or increase. I certainly hope it will decrease, although artificial intelligence (AI) technology brings many new challenges for publishers.

While the journal ranking and EWL are very influential at CAS, there are several institutional-level lists within Chinese universities, hospitals, and academic societies. Some of these are influenced by the CAS list and others use different methodologies. What are your views on these lists?

The EWL team noticed these additional institutional lists at the beginning of 2023. I am looking forward to discussing them in more detail at the upcoming Zhuhai conference, where I have invited guests from Chinese hospitals that have released long journal blacklists. I think there are two different situations where an institution may have its own list. One is where an institution may optimize the lists based on their own field, using their subject experts to expand our list to cover more journals. CAS advocates for these types of additional lists.

The other situation is where an institution may release a much longer “blacklist” (I prefer the name “grey list”) of titles relevant to their subject areas. I fully understand that they are trying to overcome research integrity concerns, but it is unreasonable, and an overreach, to list entire journal portfolios owned by a publisher. On the current EWL, we only add a journal to our list after we find the necessary evidence to make the final determination.

Several publishers have had to make large-scale retractions of papers from authors based in China due to papermill activity. In what ways are CAS, Chinese policymakers and the wider Chinese academic community working to solve the problem of papermill publications?

I hold another position at NSTL: I am the Executive Director of the Research Integrity Study Center. The task of that unit is to support policymaking related to research integrity. The Chinese government and Chinese institutions are undertaking considerable activity to tackle the problems of research integrity in China. Many policies have been drafted by my colleagues, submitted to administration, and subsequently released as national-level policy; examples include Opinions on Strengthening Ethical Governance of Science and Technology and Rules for Investigation and Handling of Academic Misconduct. Almost all institutions now have a research integrity office. From a policy and mechanisms perspective, many measures are being implemented to improve the integrity of research in China, including stakeholders from different parts of Chinese ecosystems taking measures to try to improve the situation. But there are still many things we need to do; the EWL cannot solve all the problems. We are constantly searching for opportunities to coordinate with all stakeholders in the ecosystem. We need to connect policymakers, researchers, and international stakeholders to work together on the situation. The upcoming Zhuhai conference is an example of our initiatives, and I look forward to continuing our discussions with the international community at this event.

Discussion

1 Thought on "Guest Post: An Interview with Prof. Dr. Liying Yang of the Chinese Academy of Sciences"

“For reasons unknown, NSL/CAS has been particularly kind to Hindawi journals. NSL/CAS released their controversial Early Warning Journal List in December 2020, December 2021 and January 2023. In the latest version, the Hindawi journals Biomed Research International, Complexity, Advances in Civil Engineering, Shock and Vibration, Scientific Programming and Journal of Mathematics, which had been on the blacklist, were reinstated. Other problematic Hindawi journals were never on the blacklist.”

https://deevybee.blogspot.com/2023/07/is-hindawi-well-positioned-for.html