It happened with music, and we’re not talking about subjective measures — the quality of music reproduction decreased measurably in order to make digital music’s capacity and portability possible. While digital recording created stunning fidelity, playback flagged. MP3 files were routinely downsampled to a fraction of their original fidelity, and sound systems — made smaller and lighter for the age of portability — became comparatively weak and tinny. Yet, few complained. The benefits of being able to carry around your entire music collection, make your own playlists, and buy only songs outweighed the degradation of quality for most. In fact, many of those adopting the new digital music technologies no longer know what they’re missing.

The standard has reset at a lower level.

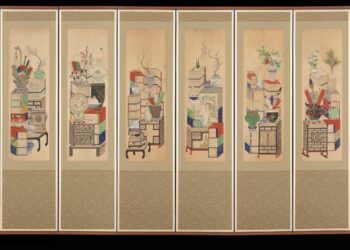

It happened to books, and started long ago. The illuminated manuscript and early codex books were beautiful, exquisite works of art, replete with precious materials (gold, jewels, tints). The printing press brought the power of mass production to the form, and the manufactured book shed many of its artistic qualities — after a brief flirtation with recreating the fidelity of the manuscript. Now, with e-books, more elements are being shed. Nice printed books reflected their craft heritage with deckle-edge pages, fine typography, embossed covers, and metallic inks. E-books are mainly text files that eliminate thoughtful typography, distinctive physical design elements, and other intrinsic sources of aesthetic value.

Telephony is another area that seems to have decreased in quality as it has increased in availability. Compared to land lines, cell calls get dropped, words are mangled by interference, delays make crisp give-and-take difficult — yet we soldier on. Cell phones provide portability, peace of mind (our car breaks down, we can call someone), and convenience. Who cares if your friend’s voice isn’t as resonant and clear as it would be on a land line?

In all three cases, I doubt we’ll suddenly pull a u-turn and return to an era of fragile, non-portable music (vinyl records), expensive, wasteful books (paper), and tethered, low-functionality phones (landlines).

Information goods seem strangely susceptible to this trade-off between availability and quality. The wide availability of food has improved quality and variety simultaneously. I can almost hear the foodies groaning about fast food and the like, but that’s just one niche, and kind of proves my point — food can get worse, but doesn’t have to. The quality of fresh fruits, of affordable whole grains, of organics, and of clean water has all improved as availability has increased. In fact, the pressure on the worst foods in the market largely comes from the awareness supported by shared availability — that is, we can assume that people eating junk food have access to better foods, but just need to choose differently. Wal-Mart’s recent move to make better food available on a widespread basis seems to argue in favor of this direct correlation.

Automobiles are another case. Originally, these were idiosyncratic, unreliable, and low-quality. The fact that Scientific American said in 1909 “[t]hat the automobile has practically reached the limit of its development is suggested by the fact that during the past year no improvements of a radical nature have been introduced” provides a source of amusement.

Mass production has only made cars better, and robotomation has made them truly reliable and beautiful in this day and age. You can bank on your car lasting for 100,000 miles of driving with few problems. You can choose from many stylish, durable cars for under $20,000. Widespread availability has only made cars better.

So why does availability seem to drive down quality around information goods?

It’s been said that when information is widely used, it becomes more valuable. Yet, to be widely used, it seems information decreases in quality in some meaningful way — fidelity, reliability, beauty, relevance, or durability.

It’s not like the information about availability can’t improve. For instance, Google is not only better than Yahoo! or Bing or AltaVista, it’s a constantly improving source of information about information. The more information there is for Google to consume, the better it gets. Wikipedia is an improvable and improving reference work that has become the de facto encyclopedia of our age. Its model suggests it may hold sway for decades. It can address its deficiencies. It can improve.

In scientific publishing, increased capacity has led to a measurable decrease in aggregate quality of the corpus, if you accept that the proportion of papers making a meaningful contribution is a measure of quality. That is, if 100% of papers were cited and read, every paper could be said to be of some quality. But as more papers flood out each year, a higher percentage go unread or uncited. This is not a sign of increasing quality, just increased capacity.
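As a rough illustration of the measure described here, the share of a corpus that gets cited can fall even while the absolute number of cited papers rises. The sketch below uses a hypothetical helper, `cited_share`, and invented figures chosen only to show the arithmetic; they are not real publication data.

```python
def cited_share(total_papers: int, cited_papers: int) -> float:
    """Return the fraction of a corpus that has been cited at least once."""
    if total_papers <= 0:
        raise ValueError("total_papers must be positive")
    if not 0 <= cited_papers <= total_papers:
        raise ValueError("cited_papers must be between 0 and total_papers")
    return cited_papers / total_papers


# Hypothetical figures, invented purely for illustration.
earlier = cited_share(total_papers=1_000, cited_papers=800)
later = cited_share(total_papers=2_000, cited_papers=1_200)

# More papers are cited in the later period (1,200 vs. 800),
# yet the cited share of the corpus is lower.
print(f"earlier share: {earlier:.0%}, later share: {later:.0%}")
```

Under these made-up numbers the corpus doubles and cited papers grow by half, so the cited proportion drops. Whether that proportion is a fair proxy for quality is a separate question.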

Clearly, the answer is that it’s easier to increase capacity than it is to increase quality. To return to the automobile, the Model T was produced in relatively large quantities, but quality didn’t increase at the same time. Quality came along much later. With Japanese autos coming into America in the 1970s, a volume of them arrived first, and their reputation for quality arrived later.

But improving the quality of information takes effort, expense, and discipline. Google and Wikipedia have expended a large amount of energy improving and maintaining quality. They’ve invested in systems, definitions, and rigor in a way I’m not sure we’re prepared to do. And when we increase capacity, we don’t engineer it well. For instance, PubMed Central is not a well-engineered content repository — self-healing, scaling quality with content, and so forth. It’s merely an exercise of storage capacity and accessibility, not quality enhancement.

We can’t imagine it will just “take care of itself.” Unless we improve the parameters of scientific information and invest in creating a system that is somehow better at improving as it grows, we’ll continue to drive down the quality of scientific information even as we increase its availability.

(Thanks to Bruce Rosenblum and Ann Michael for helpful conversations while this post was simmering.)

Discussion

33 Thoughts on "Why Does Availability Seem to Drive Down the Quality of Information Goods?"

Amen to this – in my view your post underscores the importance of cooperation of ALL stakeholders in the information value chain. If publishers, libraries, and OA advocacy organizations do not engineer, curate, and operationalize well, scholars can write the most lucid articles (which most of them do for a living, or at least attempt to do, next to all their other duties), but they will not be enhanced and facilitated. Scalability, interoperability, and ‘updateability’ will remain key issues – that’s why Wikipedia and Google have become so huge. Now I am not saying that the scholarly information sector will become that large, but I do agree with you that facilitators can learn a lot from taking a close look at what both hardware and software industries have been doing in the last decades.

You folks talk about quality as though that term were well understood, but I find it to be one of the most confused concepts I have met in 40 years of concept analysis. For example, when you talk of the “aggregate quality of the corpus” are you referring to the information or the science being reported? You seem to be talking about the importance of the results, which is not about the information and is arguably not about quality either, because it is beyond the author’s control.

It seems obvious that if a million articles are published then the average importance will be lower than if only a thousand are published, but so what? This is not a reason to ration availability, which is what you seem to be calling for. Unless I have misunderstood you, due to the vagueness of the concept.

I suggest you folks stop using the word quality, unless you can be very specific about what you mean. It has too many pejorative connotations.

Quality is about generating something that advances the goal of the field. In music, fidelity to performance and/or production has long been the goal, and MP3s have taken us a step back. In nutrition, food availability and safety are part of achieving health and nourishment. In science publishing, we’re getting end-products that aren’t serving to advance science but rather are cynical fealty to the publish-or-perish paradigm. I don’t think this is as debatable as you do. Each field is endeavoring to achieve something. Science is attempting to better identify reality. Publishing practices that further cloud the exploration of reality seem a diminution of quality to me.

If you think that scientific publishing controls science then you are mistaken.

Not at all. But scientific publishing can help or hinder discovery of interesting science for working scientists; can make it easier for practitioners to remain current with best practices; and can help educate and train future scientists, among other things. If we do it poorly, we’re not helping. If we’re not part of the solution, we’re part of the problem. Publishing more AND publishing it better seems like the goal to me. We’re only doing the first part.

I agree that this analogy is something of a stretch. There’s a big difference between increasing the quality of a manufactured good and increasing the quality of individual inspiration and clarity of thought. I can make an mp3 sound better by using a higher bit rate, but I can’t make a mediocre thinker into a genius.

Is the “quality” of science really declining? Or is it just that genius is a rare thing that doesn’t necessarily scale? More and more people are doing research so the overall quantity has increased, perhaps making the rare geniuses seem more sparse even if they’re as common as they’ve ever been.

Factor in also that we’ve created a system that often drives away the best and the brightest into other careers that are easier and provide better material rewards. How many mathematical geniuses head to Wall Street instead of MIT?

More and more people doing science doesn’t mean that the quantity of published results has to go up. Having the quantity of published results increase doesn’t mean that aggregate quality has to decrease. As noted, there are information approaches that scale quality along with quantity. How can we do this?

It may even make doing science more rewarding, keeping more in the fold.

I agree that the current model falls down in many ways, but what you are contemplating is a restructuring of the way science gets done (particularly WRT granting and who-works-where) rather than a simple rejiggering of the publication system.

Not necessarily. If taxonomies and standards could be uniformly embraced, scientific publication may become scalable à la Google and others. But we’re fragmented, idiosyncratic, evolved, and article-driven. Until we rethink, we’re going to be stuck making the same things more available in a system that breaks further with each new set of findings: it becomes harder to use, less high-yield, more confusing, and less relevant.

I’m sorry, no, more people doing science means more results, and since those results must be communicated to be useful, that means more papers published. Furthermore, since the paper is the currency of the field, the way funding agencies and employers measure productivity, if you limit the number of papers being published, then you will simply have lots of formerly employed scientists.

And as I said above, some things may not scale, may not be improved by brute force approaches. Putting more people in front of easels may not result in more masterpieces. We live in an era where access to instruments, recording tools and the means of distribution of music are more widespread than ever. Has this produced a golden age where music today is head and shoulders superior to any previous era?

I think the three examples you’re citing – MP3s, mobile telephony, e-books – are cases of disruptive technology in their early (or maturing) stages. Initially, the disruptive technology’s performance/quality is lower than that of the incumbent technology, but over time, it will reach and then surpass it.

Today’s standard MP3/AAC files sound as good as CDs. Perhaps I’m an optimist, but I believe that beautiful typography, aesthetics, and audiovisual enhancements will find their way into e-books sooner or later, and that mobile telephony will reach HD voice/video quality in a couple of years.

I agree, Victor, but we have to work to make it happen. Digital music is improving because engineers are working to make it happen. There are engineers working in STM publishing and communication to improve things and make quality scale with volume — semantics is one big hope here. But if we just keep shoveling articles out as if that’s helping us achieve our ends, I think we’re diminishing quality.

PubMed Central actually serves the purpose of making publicly funded content (plus a whole range of low to high quality content provided by many publishers) available quite well. And if you use PubMed as the gateway to accessing its content, rather than the no-frills PMC site, you have a high quality database front end that many researchers use to access scientific publications.

As for audio files and books, sigh. I’m buying most of my music on CDs and then burning to MP3s. And I’ll miss book covers when I finally break down and get an eReader.

Yes, PMC increases availability but does nothing to increase quality. This is part of my point. Some systems can do both. Those seem to me to be better systems — inherently superior. Just doing one (increasing availability) falls short.

The PMC site has room for improvement and I’m guessing it will do so incrementally, however it isn’t a publisher and I don’t believe it is accepting content based on the decisions made by publishers.

If the post is concerned with quality then perhaps it would have been better to provide as an example either a specific publisher of less than quality papers or, better yet, the collective increase in number of journals made available by the majority of both open access and subscription based publishers.

I’d question whether the audio quality of a piece of Mozart or Beethoven diminishes any of the musical quality of the composer. It may diminish the quality of the experience, but not of the content. I value substance over style and the examples you mention in the beginning concern style, not quality.

But nobody can agree with anybody on what quality actually is, so perhaps it’s not surprising we don’t agree on what quality actually is.

However, I’d like to ask you for your source on this statement: “But as more papers flood out each year, a higher percentage go unread or uncited. This is not a sign of increasing quality, just increased capacity.”

Ideally, this should come in a way that makes comparisons between then and now easy such as download numbers, limits on the numbers of references in articles, publications per working scholar, etc. Otherwise, there are just too many alternative explanations to be allowed to make that statement.

I’ve listened to Mozart and Beethoven performances (note — music is experienced via performance, unless you’re a savant who can scan a composition’s notes and play it in your head) on many music reproduction systems. Those that achieve the closest fidelity to the actual performance deliver the content in a way that is clearly superior. Diminishing away from this hurts the experience, so much so that you can miss subtleties and even whole notes.

Nobody can agree with anybody on what quality is? I disagree. There’s usually a large consensus, and even standards in many fields.

We’ve covered the numbers around increasing publications and decreasing citations here a number of times.

Googling:

increasing publications decreasing citations site:http://scholarlykitchen.sspnet.

Yields 165 results.

Googling:

“increasing publications” “decreasing citations” site:http://scholarlykitchen.sspnet.

Yields none.

High-quality referencing and source disclosure look different to me – but as usual, our concept of quality seems to differ.

Here’s the post where I covered this most extensively. Divergence is occurring — more papers, a lower proportion cited.

You can’t talk about “quality” as an abstract value when you are measuring only one variable. Particularly when we are discussing information, we need to keep in mind the needs of the user. To some degree, all of these are examples of the message being more important than the container.

Kids (i.e. anyone younger than me) are the primary consumers of Pop music. They do favor quantity over sonic quality in a way that an opera buff never would (and their needs drive the recording industry).

Along the same lines, folks who make phone calls typically privilege concerns about being able to make more calls over those about sonic fidelity.

By the same token, lovely books are lovely, but they are mostly long gone in the trade and scholarly markets. The people who use those books, whether Twilight fans or scholars (or Twilight scholars for that matter) care more about the content of the work than the quality of the binding.

Yes, there has been a gut-wrenching explosion of information over the past several decades, and that is making all of our lives more difficult in some ways. But in publishing, much of this explosion is driven more on the message-supply side (increased grant monies, faculty hired, ever-more-stringent promotion requirements) than it is by the number of journals or journal-like outlets.

There is little chance of ever getting the Genie back in the bottle now, regardless of what happens to OA or conventional publishers….

In the UK we have a variation on this scenario in terms of TV channels.

The combined machinations of the Thatcher government and the Murdoch empire in the 1980s have allowed multiple channels of TV garbage to proliferate over the past 20 years.

The result is that we have lost the high quality of TV that we used to enjoy, and are pitched into a sea of pap.

If it weren’t for the valiant efforts of a handful of channels, almost all of them on the BBC, our TV culture would be permanently damaged.

Are you comparing apples and oranges, quality of content with quality of format? The MP3 analogy would seem to apply best to the transition from offset to digital printing, which was awful at first but has been getting better but may never achieve the quality of the best offset printing, just as MP3s and CDs have gotten better but may never achieve the sonic quality of LPs. The content, however, may have remained at the same level of quality while the formats have changed. You apparently are arguing that it has not. My hypothesis, based on 40+ years of experience in university press publishing, is that the amount of A+ content has always been small and has not changed much over the years, but that the amount of B and C quality content has grown as the pool of researchers has grown in number.

I don’t question your praise of book quality in earlier times or of LPs over modern music media (and I own both rare books and many LPs for just that reason), but I do wonder about the quality of cars when mine has been recalled for repairs frequently in recent years and Toyota’s once vaunted reputation is now in tatters.

Well, you CAN compare apples to oranges, as this clever infographic shows us.

Kidding aside, I was trying to choose dimensions of quality that mattered at one point, faltered when availability increased, and either continue to lag or are slowly catching up. Some areas have improved dramatically, and some information services are built so they never seem to falter. Our dimension of quality that seems to have faltered with increased availability on both the input and output sides seems to be “yield” or “impact.” We’re publishing more, but getting less for it as far as advances per paper.

I would still take a Toyota today over a Ford from 5 years ago. In fact, I’d take a Ford today over a Ford from 5 years ago. Auto quality continues to be imperfect but improving. I’m not advocating perfection, just arguing that we could do better if we found ways to improve quality while improving availability. We seem to be impressed with our abilities to do the latter while leaving the former more than a bit underserved.

I think it depends upon what the basis of competition among products is. Publishing has been, I think, overwhelmed by competition for speed (publishing a story or finding first), and authors are evaluated on volume. As you pointed out, early digital music competed on portability and convenience. Then Amazon started competing with less-compressed tracks without DRM. This has started to result in changes even at the iTunes store. Not sure I entirely agree with you about food. Falling food prices came at the expense of quality, because they required standardization. Think of how heirloom varieties are not sold, and how crappy most standard peaches are. Then, some stores started competing not on price, but on organic, or greater variety, or locally grown, and now you start to see some of that at more mainstream stores. Question is, what will you compete on other than price, and is there a market for it?

It’s a tricky set of comparisons, that’s for sure. But it does seem that some things scale up nicely while others don’t, even information goods. As for food, the variety, freshness, abundance, and choice we have today compares very favorably to what we had 30 years ago, I’d argue. I remember when England was a culinary nightmare, but it’s now a culinary delight.

Price can be a dimension of value, but for music, I’d argue that quality is still about fidelity to the performance and artist’s goals/vision. If they’d wanted to make a tinny version, that can be done in the studio and preserved through to the listener. Having that happen post-production is a decrement in quality.

Thinking more about this, I’m wondering how much of what you’re seeing is correlation rather than causation. The reason that the quality of many of the things you’re discussing is lower is based more on economics and on maximizing profits by lowering costs than it is on the technology used or the size of the distribution network.

Apple could have populated iTunes with full bandwidth AIFF files or with Apple Lossless files. They didn’t, because it’s cheaper for them to store and distribute smaller files that take less server capacity and less hard drive space on an iPod. Book quality has decreased as publishers have cut production costs to increase profits, using cheaper materials and small paperbacks made of thinner paper, and now putting very little effort into preparing digital files. Automobiles saw decreasing quality as manufacturers cut corners to increase profits (think of Ford deciding that settling lawsuits was cheaper than fixing what was wrong with the Pinto). Cell phones have terrible service because building a really good network is expensive, particularly in a country as large geographically as the US (though cell phone service is incredibly superior to what it was a few years ago and continues to improve). The bottom line is that it’s cheaper to get by with a network that’s just on the right side of “good enough”.
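The storage argument for digital music can be made concrete with some rough arithmetic. The sketch below compares the size of one track at CD-quality PCM (44.1 kHz, 16-bit, stereo) with the same track at a lossy bitrate. The four-minute track length and the 128 kbps figure are assumptions picked for illustration, and the function names are hypothetical.

```python
# CD-quality PCM parameters (the standard audio CD format).
CD_SAMPLE_RATE_HZ = 44_100
CD_BITS_PER_SAMPLE = 16
CD_CHANNELS = 2


def cd_bitrate_kbps() -> float:
    """Bitrate of uncompressed CD audio in kilobits per second (1411.2)."""
    return CD_SAMPLE_RATE_HZ * CD_BITS_PER_SAMPLE * CD_CHANNELS / 1000


def size_megabytes(bitrate_kbps: float, seconds: float) -> float:
    """Approximate audio payload size in megabytes (10**6 bytes)."""
    bits = bitrate_kbps * 1000 * seconds
    return bits / 8 / 1_000_000


track_seconds = 240        # assumption: a four-minute track
lossy_bitrate_kbps = 128   # assumption: an illustrative lossy bitrate

uncompressed_mb = size_megabytes(cd_bitrate_kbps(), track_seconds)
lossy_mb = size_megabytes(lossy_bitrate_kbps, track_seconds)

print(f"uncompressed: {uncompressed_mb:.1f} MB")
print(f"lossy:        {lossy_mb:.1f} MB")
print(f"ratio:        {uncompressed_mb / lossy_mb:.1f}x")
```

Under these assumptions the uncompressed track is roughly eleven times larger, which is the cost pressure in question. Lossless codecs would land somewhere in between, depending on the material.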

And there’s that phrase, “good enough,” which is often a highly profitable business model based not on delivering quality, but on maximizing profits by offering the bare minimum that customers will accept. This is indeed creeping into science publishing in the form of all the new journals that offer minimal editorial oversight. If you think there’s any reason for the swift rise in publishers entering this market other than the extreme profitability of such low overhead journals, then I have a bridge you might be interested in buying.

I can’t tell you why some markets shift from focusing on lower prices to higher quality, perhaps an economist could. It may depend on the maturity of the market or on the level of competition. The PC market is seeing a growth in high end Apple sales after decades of commodity PCs dominating. There’s a rise in the market for high quality organic foods. WalMart’s dominance seems to be declining in favor of higher quality outlets. I’m willing to bet that as storage and bandwidth get cheaper, you’ll see higher and higher quality files offered in the digital music market.

In summary, I’m not convinced that availability is a determining factor here, and as a result your argument gets a bit muddled. Companies try to make more money by offering the minimum their customers will accept. Some markets demand quality more than low prices.

Remember that when you apply these arguments to scholarly publishing you’re talking about two distinct markets, readers and authors. The interests of readers are to have a limited literature that is easily parsed and delivers the highest quality results relevant to their interests. The interests of authors are to publish as often and in as great a quantity as possible. It’s not in their best interest to enforce limits as they’re rewarded for publishing more. Not sure what this has to do with “availability”.

So for MP3s there’s a pretty good body of evidence out there that with a good encoder and mechanism for reading the data off a disc, somewhere between 192 kbps and 320 kbps (depending on the encoding method) delivers a lossy copy that >95% of listeners cannot differentiate from the source. This is proper blind ABX testing, by the way. (Check hydrogenaudio for all you ever wanted to know about how to do a proper MP3 encode.) Some styles of music present challenges to the encoder, but that’s another story.
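For readers unfamiliar with ABX testing: the listener is repeatedly asked whether an unknown sample X matches reference A or reference B, and the number of correct answers is compared with what pure guessing would produce. A minimal sketch of that comparison follows, assuming each trial is an independent 50/50 guess under the null hypothesis. The function name `abx_p_value` and the trial counts are hypothetical, not taken from any particular listening test.

```python
from math import comb


def abx_p_value(correct: int, trials: int) -> float:
    """One-sided probability of scoring at least `correct` out of `trials`
    by guessing alone (each trial a fair coin flip)."""
    if trials <= 0:
        raise ValueError("trials must be positive")
    if not 0 <= correct <= trials:
        raise ValueError("correct must be between 0 and trials")
    favourable = sum(comb(trials, k) for k in range(correct, trials + 1))
    return favourable / 2 ** trials


# Hypothetical session results, for illustration only.
for correct in (8, 12, 16):
    p = abx_p_value(correct, trials=16)
    print(f"{correct}/16 correct -> p = {p:.4f}")
```

A small p-value suggests the listener really can hear a difference. A score near half the trials is what guessing looks like, which is the outcome the claim about high-bitrate MP3s predicts for most listeners.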

In reading this, I keep coming back to what one of the commentators said – What is quality? I’m not convinced by the idea that decreased citation per article is an indicator of a decrease in quality. There are too many confounding variables at play. OK, a good amount of science right now might be of the ‘fill in the blanks’ variety, but I don’t think that automatically consigns it to the poor quality pile. Science has plenty of examples of obscure papers that suddenly started an area of research many years after publication – again, intrinsic quality is hard to measure.

The only quality metric I can think of that would indicate whether an original paper was of high quality or not is that of reproducibility… Now in contentious areas this does happen – and there’s some numbers out there I think on retractions and conflicting results – but I’m not sure those numbers are comprehensive? But even then – does failure to reproduce a result, indicate low quality science? I’m not sure one can argue that it does. That pesky probability will rear its head.

Kent, I think there’s something in here – but I’m not sure I understand exactly what it is you are uncomfortable with. I suspect you think that science/publishing is being gamed or subject to framing (life on mars/meteorites?*) in some way – If that’s the case, I think you are right, but quality as a measure, I’m not so sure of. It’s so contextual and prone to post hoc rationalisation.

*Carl Sagan said “Extraordinary claims require extraordinary proof”, perhaps that’s the quality measure here.

David,

All fair points. What I’m concerned about is that we seem to be allowing information proliferation without doing the engineering and work to make such increases in capacity also maintain perceived and/or functional quality. Scientists are overwhelmed with information, and search anxiety is real. Google scales for discovery, but we aren’t scaling for offering refined information that provides meaningful increments of discovery. Instead, we have a clutter — and my concern is that it’s driving practitioners and the public away from the science.

So coming back to another theme you’ve expanded on, is this about putting the scholar at the center (the me-centric approach) and looking at surfacing relevant info based on non-traditional metrics of use, etc.? In writing this, I’m thinking about things such as Foursquare and the algorithms they use with respect to the rewards that keep people checking back in.

The public? Postmodernist BS is the problem there, I think. The insistence that opinions have to be opposite, no matter how unhinged, in order for the output to be balanced is one of the greatest tragedies of modern times, I feel. The consequences of this can be seen in the fact that in the UK we no longer have herd immunity from measles due to one academic paper and a campaign led entirely by a media with a need to feed “the controversy”.

A good corollary. If we want to personalize experiences, we need to be able to scale up the information environment so we can slice and direct it more reliably.

The MMR vaccine issue was truly sad and unnecessary. It took FAR TOO LONG to correct, and part of that reason was that good information couldn’t find its way out of the shouting match.