Are journal editors an anachronism? A throwback to an age of print publishing that no longer exists? An institution, like the British monarchy, that continues to exist more for symbolism than for functionality? An institution whose purpose is to perpetuate an unfair power relationship with authors and readers?

If you’ve been reading the Guardian recently, you’ll have noted a recurring revolutionary theme: publishing must be taken back from editors and the institutions that help to maintain their social standing — journals and publishers — and returned rightfully to the people.

Luckily, this kind of revolution requires not a call to arms but simply the use of the disruptive tools of the Internet, which are available to us all. As David Colquhoun proposed recently:

> Publish your paper yourself on the web and open the comments. This sort of post-publication review would reduce costs enormously, and the results would be open for anyone to read without paying. It would also destroy the hegemony of half a dozen high-status journals.

Would self-publishing, along with the defenestration of a few prized editors, really do the trick to revolutionize science? Or does Colquhoun fail to see the value of editors and their journals? In this blog post, I’m going to argue that we still need them both, perhaps even more than ever before.

Information overload isn’t just a receiver problem

We all understand the attention economy pretty well: too much to read, too little time, too many distractions. Many call this situation “information overload,” and the proposed solution has focused almost entirely on the receiving end of the equation: the reader.

Unfortunately, this fixation on the receiver, promoted by people such as Clay Shirky with catchy phrases like “It’s not information overload. It’s filter failure,” only reinforces this one-sided view.

Indeed, the very phrase “information overload” implies that the problem and its solution rest with the receiver. For this reason, I’m going to refer instead to “hyperinformation,” the overabundance of information.

By focusing on the receiver as the locus of the problem and its solution, we run into some publishing paradoxes that this view cannot explain, such as:

- Why do authors still use the journal system when they can reach readers directly and do so much faster and cheaper themselves?

- At a time when we can disaggregate all of the functions of journals into service components, why do journals still persist in a mostly unaltered state?

- Why have repository publishing and overlay journals failed to get beyond the conceptual stage?

- Why has post-publication review also failed to gain serious uptake?

I will argue that hyperinformation is a problem of quality signaling between senders (authors) and receivers (readers), and contend that the role of editors in mediating these signals becomes enhanced, not diminished, in an expanding world of information.

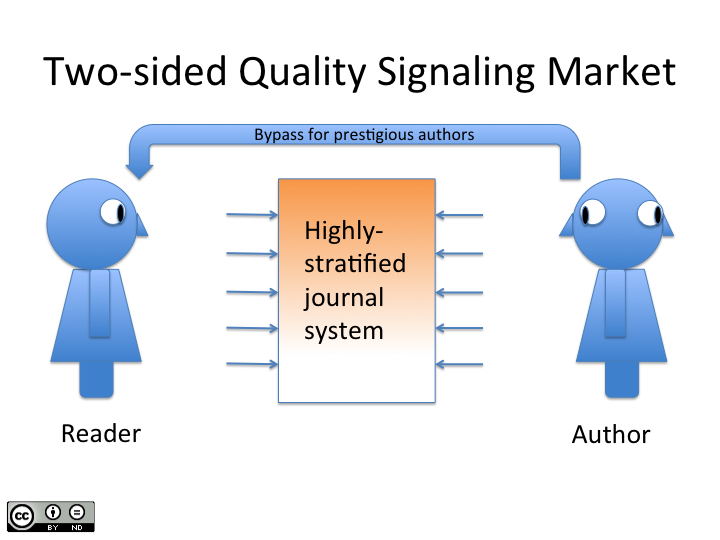

The Role of Journals in a Quality Signaling Market

First, let’s think of scholarly communication not as a linear transfer of information from sender to receiver, but as a two-sided market with authors at one end, readers on the other, and the publisher forming the intermediary between the two.

Second, let’s understand that authors are also readers, and that many readers are also authors.

Now let’s spell out what we already know about this system:

- There is too much to read and too little time.

- Quality is heterogeneous across papers. That is, there are a few excellent papers, lots of good papers, and a huge number of mediocre and lousy papers.

- Authors have more knowledge about the quality of their paper than potential readers. This is known as “asymmetric information.”

- Readers are active agents that routinely seek out relevant, high-quality sources of information. They are not passive receivers.

- Authors want to be read and acknowledged for their contribution to the scholarly record.

- Building a reputation for publishing quality research takes a long time, and yet

- Most authors appear only once in the scholarly record.

If we agree with all of the stated assumptions, then we can derive the following reader behaviors:

- In a large market of academic papers, where quality is heterogeneous, information about quality is asymmetric, and the vast majority of authors appear only once, active readers will seek out quality signals unrelated to authors that help them identify what is worth their time.

- A reader is not always able to identify quality and often turns to experts, peers, or “institutions of trust” to guide them on what is worth reading.

- Readers will develop heuristics based on previous experiences with an information brand. These brands may be a journal, an author (if known), or an author affiliation (e.g., membership in a particular lab or university).

- Quality signals may be amplified by experts like editors.

- And last, as the market gets larger, quality signals become more important.

Now let’s take our assumptions and derive the following author behaviors:

- Authors understand what quality signals and heuristics readers use to identify content. Remember that authors are readers, too.

- In attempting to increase the attention given to their papers, authors will seek out certification for their articles in order to send out quality signals to potential readers. This decision is made in spite of the fact that the certification process is slow, costly, and keeps the author from publishing more articles.

- Most authors have a good understanding of the quality of their article and will send it to journals of commensurate quality (with some exceptions). This saves them the time lost to multiple rounds of review and rejection.

- High-status authors — those who have established a quality brand over time — can bypass the certification process and communicate directly with readers. They do not require the imprimatur of the journal or publisher to convey their quality signal.

Here is a graphic representation of the signaling market:

From this two-sided signal market, it’s not difficult to understand why the journal is still at the center of the scholarly communication process, even though digital publishing has, in theory, allowed its functions to be disaggregated. The journal remains the organizing structure within the quality signaling market.

Let me state this more declaratively:

The principal function of the journal is to organize and mediate quality signaling within the author-reader market. The role of the editor is simply to make this happen.

From this signaling perspective, the essential function of peer review is not to improve an article, an argument maintained by journal publishers, but to stratify the literature into tiers of journals that strengthen quality signals for potential readers and therefore make the information seeking process more efficient. The greater the stratification, the more efficient the system.
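The claim that greater stratification makes reading more efficient can be illustrated with a toy simulation. Everything in it is a hypothetical assumption rather than the model from the talk: paper quality is uniform on [0, 1], peer review sees that quality plus Gaussian noise, journals are equal-sized tiers cut from the ranking of review scores, and a reader with a fixed reading budget sees only the tier (the journal brand), so reads from the top tier down and picks at random within a tier. The function name `mean_quality_read` and all parameter values are illustrative.

```python
import random
import statistics


def mean_quality_read(num_tiers: int, num_papers: int = 10_000, budget: int = 500,
                      review_noise: float = 0.1, seed: int = 42) -> float:
    """Toy model: average true quality of the papers a reader gets through.

    Hypothetical assumptions, not from the original talk:
    - true quality is uniform on [0, 1];
    - peer review sees quality plus Gaussian noise (sd = review_noise);
    - journals are equal-sized tiers cut from the ranking of review scores;
    - the reader sees only the tier (the journal brand), reads tiers from the
      top down, and picks at random within a tier until the budget is spent.
    """
    rng = random.Random(seed)
    quality = [rng.random() for _ in range(num_papers)]
    scores = [q + rng.gauss(0.0, review_noise) for q in quality]

    # Rank papers by review score, best first, and cut the ranking into tiers.
    ranked = sorted(range(num_papers), key=lambda i: scores[i], reverse=True)
    tier_size = -(-num_papers // num_tiers)  # ceiling division
    tiers = [ranked[start:start + tier_size] for start in range(0, num_papers, tier_size)]

    reading_list: list[int] = []
    for tier in tiers:
        within_tier = tier[:]
        rng.shuffle(within_tier)  # papers inside one journal look alike to the reader
        reading_list.extend(within_tier)
        if len(reading_list) >= budget:
            break
    return statistics.mean(quality[i] for i in reading_list[:budget])


if __name__ == "__main__":
    for k in (1, 2, 5, 10, 20):
        print(f"{k:>2} tiers: mean quality read = {mean_quality_read(k):.3f}")
```

Because the same seed produces the same papers for every tier count, the runs differ only in how finely the literature is stratified. In this sketch the average quality read should rise as tiers are added, since each tier boundary gives the reader one more usable signal; raising `review_noise` should blunt that gain.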

The quality signaling model explains why repository publishing has failed to get beyond the conceptual stage: repositories are unable to generate clear quality signals for potential readers.

The signaling model can also explain particular paradoxes, such as why high-status individuals are able to bypass journal publishing and reach readers directly by, say, posting a manuscript on a preprint server. This is not evidence that such a system of publishing can work for all scholars, as some proponents of preprint services believe, only that it can work for a narrow group of scholars who have already built a reputation for quality.

And last, because community-generated metrics (citations, comments, and, to a lesser extent, downloads) take time to accumulate, they are of little help in guiding readers to what has been recently published. They also tend to provide little additional information beyond the quality brand of the journal.

In conclusion, the first step in addressing hyperinformation is to stop thinking of it as a receiver problem and to see it instead as a market problem in which authors compete for the limited attention of readers.

If we view journals as mediators of quality signals in a crowded information space — a space that is getting a little more crowded each year — the future of the journal presents many more opportunities than when it is seen as a mechanism to control the distribution of scientific research.

(Adapted from a talk given at the OUP Journals Day, 15 September 2011)

Discussion

39 Thoughts on "Have Journal Editors Become Anachronisms?"

Thanks for writing this up, Phil. I think it accurately portrays an important aspect of our current journal system for the vast majority of readers. Every researcher I know has a “favorite” journal, the first place they turn for information, and an informal hierarchy they use to prioritize their reading.

One thought that occurred to me, though, about the role of editors in the signaling process: should editorial selection, the sorts of “Editor’s Choice” articles that are highlighted in many journals, be considered a form of post-publication peer review? These decisions are made after the formal peer review process, once the articles have been accepted. Given the attention these featured articles usually draw (they were by far the most-read articles in the journal I used to edit), could we think of this as a highly successful form of post-publication peer review (or at least simultaneous-with-publication peer review)?

Editor’s Choice articles are a successful form of post-publication peer review, and they have an incentive system built in, since the editor selects an article from his or her own journal. On the other hand, the model doesn’t scale very well, and the editor may base her selection on particular qualities that are not widely shared. This is not to say that post-publication review is unhelpful, only that it will continue to be a supplement to, and not a replacement for, pre-publication review.

How about the FACULTYof1000? It seems to me that inherent in Phil’s argument is the need for more and more such services. Why not just say so?

Agreed; as noted above, the more filters, the better. It’s interesting, though, that both of the services mentioned here are editorially driven, rather than relying solely on crowdsourcing and serendipity.

Last year, I covered the limited literature on post-publication review. Please see:

Post-Publication Review: Does It Add Anything New and Useful?

Some comments from a working academic…

1) ‘Tis true that journals help prioritize one’s attention, but only to some degree and usually for topics not immediately germane to our own research. Stuff that comes up in a literature search that seems DIRECTLY relevant to our work gets read regardless of where it is published. This is true for nearly every academic I know, and many manuscript reviewers are only too happy to remind authors of obscure yet relevant papers that they seem to have overlooked…

2) “Editor’s Choice” puff pieces are not necessarily from their own journals. In certain cases those are papers originally rejected from the journal that is now highlighting them, which seems to be one of the few ways for glamor journal editors to signal when they are not happy with a decision they had to make!

3) Both ‘Editor’s Choice’ and F1000 are used in academia to only one end, as part of the PR and spin machine activated for promotion purposes. It’s another way of collecting merit badges, and almost equally useless in the long run. Do you know anybody who selects his/her reading list based on recommendations from F1000?

On point 1), I couldn’t agree more. You are trained to evaluate the merit of papers that closely resemble your own work. For papers tangential to your expertise, it is useful to look for quality signals that guide you to what is worth reading. The journal brand, as well as other high-quality signals, helps you to do that.

Phil, I would expand this point by questioning the concept of “quality” in the model (which I otherwise like very much). You seem to think quality is independent of the community, a property of the paper itself, as it were. Perhaps so, but then we also need a parameter for importance to others. Journals aggregate articles on particular topics precisely because enough people are working on those topics. Within that aggregation we often also find papers that matter to only one or a few people, but matter to them a great deal.

In short we need a more relativistic concept than simple quality. The diffusion is not that simple.

Hi David,

Yes, you are correct that “quality” is far more complex than how I describe it in my post and that I ignore other aspects of a paper, such as “relevance,” “importance” and the like. Let me fill out in more detail what I mean by quality:

- Quality is an abstract construct. It doesn’t exist except in our heads. We often know it, however, when we see it.

- Quality is multi-dimensional. It is made up of many different components, each one of them may say something different about what we’re trying to evaluate.

- Quality ultimately reflects a private evaluation.

- Yet our private evaluation is influenced, often to a great degree, by the evaluations of others. This is because we are often unsure of our own evaluations and seek the evaluations of others, especially those of experts. We are also social creatures that prefer consensus.

- Lastly, quality is often associative. What we think of the group as a whole affects what we think of its individual members. This is why journal branding is so important.

In spite of its complexity, I do think we can talk about article “quality,” with the understanding that, in order to build an information signaling model, we necessarily need to reduce its complexity to a one-dimensional quantity.

I really think we are talking about importance, not quality. Quality suggests that some people do better science than others, when the reality is that some discoveries are more important than others. By analogy, the geologist that finds the big strike is not necessarily a better geologist, he or she is just luckier.

My concern is that we not lose sight of the fact that nature is out there without us. We are the explorers. Every research project is a gamble, with all that implies.

So as a minimum, I want two different parameters in my model — quality and importance. I leave it to you to operationally define quality. I can measure importance with things like hits, mentions, downloads and citations. Quality eludes me as a modeling concept. Importance is social, hence easily measurable, more or less.

I would argue that some people do indeed do better science than others. That perhaps falls more into the design and execution of an experiment than the actual discovery, but it is something that should be recognized and rewarded. Those breaking ground with novel techniques and new approaches add much to the literature.

I do agree that one could separate out “importance” or as most in publishing refer to it, “impact”. There are many metrics for measuring this, each with its own particular flaws (a reason I favor a panel of metrics rather than choosing only one).

I will point out though, that several that you mention, hits, mentions and downloads are indications of popularity, not of importance. The infamous and refuted Wakefield paper on autism and vaccines has likely been downloaded and mentioned far more often, and seen more hits than reputable papers in the field. A quest for popularity puts pressure toward sensationalism and crowd pleasing, rather than scientific merit, so those are perhaps dangerous metrics to use.

There are two issues here, David C., and perhaps we need to continue below with more space to work in. First, what you describe in terms of new methods, etc., is always to be welcomed, and I would call it innovation, not quality. And yes, it is very important. Second, impact is a good term for the importance dimension, but it has been co-opted by the impact-factor folks. It is also a tad hyperbolic for my taste, but that is just me.

As for downloads, this is an interesting measurement issue. There are certain areas where science has been sucked into the policy apparatus, alas, and this is one. Climate change is another. As you may know the editor of Remote Sensing recently resigned, primarily because a little paper by Roy Spencer got 50,000 downloads and a lot of hyperbolic press. I don’t see this as a significant feature of science as a whole. Thankfully the NYT does not have a policy on string theory, not yet anyway. But it is worth worrying about if one does modeling, or filtering.

In any case I would stay away from the term quality. It is like the term intelligence, which when seriously applied is worse than useless. On the other hand, Phil has provided us with the rough guts of a model of scientific publishing. Dare we call it the Davis model? Woohoo!

I would argue that the measurement of quality discussed by Phil is not in any way independent of the community. The editor is nearly always a highly respected member of the community, and if they’re doing their job right, the peer review process includes representative members of the community.

I do agree though, that there are important measurements necessary that go well beyond this first placement into the hierarchy.

Very true. The vast majority of readers come to journals via search, though I would argue that if one comes across two articles of apparent equal interest, the quality signal provided by the journals where they were published is often used in prioritizing reading.

As for point 3), “designation”, signaling importance and impact of research to those outside of the research field who make career and funding decisions is also another useful function of journals (http://bit.ly/5EElgV). I’m not sure many tenured and funded professors would consider things that helped them achieve that level of success to be “useless”.

That said, these forms of post-publication peer review (like F1000) are all supplemental, used in addition to other filtering mechanisms. I do know people who regularly scan/search F1000 in their areas of interest. This is not how they assemble their reading list, but it is one way they add to it and filter it.

As David points out, the “reader” is not the only “customer” for editors’ work. Without the benefit of editor decisions, academic promotion committees would be forced to trawl through mountains of self-interested Internet postings and related ad hoc commentary from unsubstantiated sources. While promotion committees rarely actually read the articles cited by every candidate, they greatly depend on the “credentialing” function provided by editors and journals. But wait… maybe we should just let a friendly, independent, low-profit, search filtering company like Google decide which researchers should get funding and promotion? Or maybe authors could promote themselves based on a totally independent assessment of the quality of their own work?

In my opinion and experience, the change in the mode of publication changes nothing about the role, function, or relevance (however rarely that relevance may be recognized in academe) of journal editors.

Phil, Repositories are not amplifying the quality signal; as a starting point they are simply amplifying the access signal. That is a receiver problem for many journal users, and it is solvable. The two signals are complementary.

Steve, I’m talking about repository publishing, in which publishing services are provided around a deposited article. Think overlay journal as an example. You are discussing archiving, in which a journal manuscript is placed in a digital archive to provide an additional access point above and beyond the journal.

Phil, I am curious about the idea that most authors only publish one article. This is very important as far as modeling is concerned, and it surprises me. Do we have any distribution data (number of authors versus number of articles)? It would have to adjust for author age somehow.

The law that describes the distribution of author productivity is known as “Lotka’s Law,” named after the polymathic Alfred J. Lotka. The seminal article that investigated the distribution of authors in chemistry was published in 1926. While not perfect, the distribution resembles an inverse square law: the number of authors who publish n scientific articles is roughly proportional to 1/n^2.
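To see how an inverse square law squares with the claim that most authors appear only once, here is a short sketch. It assumes a pure 1/n^2 law truncated at a hypothetical maximum of 1,000 papers per author; neither the cap nor the helper names (`lotka_shares`, `single_paper_share_of_output`) come from Lotka’s study. Without truncation, the share of one-paper authors is 1/(π²/6) = 6/π², or roughly 61%.

```python
import math


def lotka_shares(max_papers: int = 1000, exponent: float = 2.0) -> list[float]:
    """Fraction of authors who publish exactly n papers, for n = 1..max_papers.

    Assumes an idealized Lotka distribution: authors(n) proportional to 1 / n**exponent,
    truncated at a hypothetical maximum of max_papers papers per author.
    """
    weights = [1.0 / n ** exponent for n in range(1, max_papers + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def single_paper_share_of_output(max_papers: int = 1000, exponent: float = 2.0) -> float:
    """Fraction of all papers that were written by one-paper authors."""
    shares = lotka_shares(max_papers, exponent)
    # An author with n papers contributes n papers, so weight each share by n.
    papers = [n * s for n, s in enumerate(shares, start=1)]
    return papers[0] / sum(papers)


if __name__ == "__main__":
    shares = lotka_shares()
    print(f"authors with exactly 1 paper: {shares[0]:.1%}")
    print(f"authors with 1 to 3 papers:   {sum(shares[:3]):.1%}")
    print(f"untruncated limit, 6/pi^2:    {6 / math.pi ** 2:.1%}")
    print(f"papers written by one-paper authors: {single_paper_share_of_output():.1%}")
```

The second function separates two questions that are easy to conflate: what share of *authors* publish once, and what share of *papers* those authors produce. The sketch counts every author-paper pair in full and ignores co-authorship, which the thread raises as a complication.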

Are there any relatively recent studies where this is confirmed? My problem is that I seem to remember a study showing that if one published 3 papers then one was very likely to publish many more. This would make the distribution bimodal, which is very different from Lotka’s law. If we were going to do computer modeling we would have to get this right.

David, there is a whole corpus of literature on author productivity, much of it starts by citing Lotka. The theory behind why such a distribution exists goes under different names, such as “power laws,” “cumulative advantage,” “preferential attachment,” “Matthew Effect” among others. Below are two citations to contemporary reviews that attempt to look at the similarities and differences of skewed distributions. Both are highly readable and quite enjoyable:

Price DJS. 1976. A General Theory of Bibliometric and Other Cumulative Advantage Processes. Journal of the American Society for Information Science 27: 292-306. http://dx.doi.org/10.1002/asi.4630270505

Newman MEJ. 2005. Power laws, Pareto distributions and Zipf’s law. Contemporary Physics 46: 323-51. http://dx.doi.org/10.1080/00107510500052444

Thanks, Phil. I am somewhat familiar with this stuff, in fact I love power laws. But the Newman paper only cites Lotka and the 1976 Price is old and merely says this is somehow well established. It may be that people just like the math.

The point is, you see, that if most authors only publish one paper then publishing has little to do with promotion. So we seem to have two generally accepted principles which contradict one another. Science doesn’t get any better than that.

There are many people listed as authors, many of whom never go on to become researchers themselves. They may go into business, industry, or become a professor at a teaching institution that does not require them to publish for promotion. Of the 3000+ institutions of higher learning in the US, only 300+ or so produce significant work, and about 30+ produce the lion’s share.

The unwritten requirement to include supervisors, department heads, and others who had no direct contribution to the writing of the article also creates a distribution where one can be the author of hundreds (even thousands) of articles. “Authorship” here has a different meaning.

Question: how does the multi-author nature of most papers come into play here? If there are 6 authors on a paper, and 4 of them never publish again (let’s assume they all went on to careers outside of academia), that doesn’t negate the positive career advancement received by the other 2 authors. Nor does it negate the help that being on that one paper might have given those 4 in getting their non-academic jobs.

Factor in that we’re now living in an era of “big” science, where papers routinely have hundreds of authors. Not sure how that would swing the statistics….

Just to clarify, we seem to have Lotka’s law versus the law of publish or perish. I suppose this apparent contradiction could be reconciled if those who only publish one or two articles then perish, as it were. That would be interesting.

@Mike_F

I think it shows that you are a working academic, not an editor. The name of the journal has never mattered less. Who looks at print journals these days?

In any case, the fact that citations (fallible as they are as a criterion of quality) are essentially uncorrelated with the impact factor of the journal in which the paper is published, shows that it doesn’t matter a damn where a paper is published (its only importance lies in the exaggerated importance attached to the journal by lazy promotion committees).

I have encountered F1000 occasionally but I have never found it much use.

I agree that post-publication review does not work well at the moment, but I suspect it could do if it were to become the norm.

I can’t expect journal editors to agree, but I think editors add very little to science, certainly not enough to justify the crippling cost of print journals.

The blame for the information overload does not lie with publishers, but with ex-academics who have imposed a publish-or-perish culture. Publishers have merely cashed in on that flood of papers that has resulted, as any business would be expected to do.

David Wojick: starting a new thread here for this one; it’s too crowded up there.

So if we are actually trying to talk about science, and scientific communication, why are we talking about these people? They are just noise in the system.

I think they can’t be ignored because they are doing a great deal of the actual research. This article discusses the share of PhD students who go on to tenured academic positions; in the US, it’s currently around 15%:

http://www.nature.com/news/2011/110420/full/472276a.html

That leaves an awful lot of research being done by people who get their degree and move on to some other type of job. Ignoring them as “noise” means ignoring the bulk of research that is done.

Thanks, David C. Let me clarify my consternation. My model of science has assumed something like a normal, bell-shaped distribution of articles published by scientists. This means most of the work is done by career researchers, over their lifetime, and published in journals. My guess would have been that the mean was maybe 20 articles per career.

Mathematically, a power law distribution is profoundly different, making my model (and the general view, I think) profoundly wrong. If Lotka’s law is right, then most of the work is done by short-timers, presumably on their way to somewhere other than basic research. The implications for policy are equally profound, hence my excitement.

Interestingly, I had recently discovered what I call the nomadic view of science, namely that career researchers typically move from topic to topic. But Lotka’s law seems to say that most research is done by temporary residents in the publishing scientific community. If true this is deeply nomadic.

Can it be all of the above? Really, the notion of multi-author papers brings in a great deal of variety. If you’re a career scientist, once you start running a laboratory, you do less and less of the actual experimentation. The hands-on work is done by your students and your postdocs (and sometimes your technicians). That’s going to result in a mixed bag of authors, some of whom are in it for the long haul, and others for whom the lab is a temporary waystation. The senior author (or authors) on the paper are the constants, the others are much more variable, and likely there are more of the latter than the former on any given paper.

It’s important to realize that students and postdocs are the driving force, the people that do most of the actual research. The problem is that the system requires graduate students to fill this role, and when they succeed, they become PhD holders, and the system does not create anywhere for them to go. So few stay on in basic research not out of choice but out of necessity, as there just aren’t enough jobs to satisfy the constant stream of doctorates.

We are talking math here, and no, it can’t be both a power law and a normal distribution. If anything, the fact that senior leaders get their names on all their doctoral students’ papers means that the power law distribution is actually stronger than it appears, as far as who does the actual research is concerned. However, it may well be that the seniors are providing valuable direction.

As an analyst I try not to have opinions about the system working well or not. I am just trying to understand it.

I guess by “can it be all?” I meant that you likely have career scientists who follow one path and at the same time you have the majority of people in the system who are on a different path, likely to disappear relatively quickly. I would think that the overall picture would be somewhat confused by this, perhaps it’s better to try to separate them out? Maybe data could be sorted by number of publications (above a certain level would indicate a career scientist versus those fleetingly in the system).

I agree that your model applies generally to explain much about the persistence of the traditions of journal publishing in the digital age, but I’m wondering how it might apply to outliers like the system of journal publishing in law, where law reviews do NOT operate by usual peer-review methods but instead are run by student editors who decide what gets published. Surely, there, the status of the editors is clearly irrelevant and the reputation of the journal must depend more on the status of the institution whose name appears in it than on anything else (Harvard Law Review being more “prestigious,” I suppose, than the Tulsa Law Review). In this field, by the way, some senior scholars have succeeded in making their blogs more authoritative sources of reliable information than the journals in the field.