If the Internet created a burgeoning market of cheap academic journal knockoffs, should we be surprised to witness new knockoff ratings companies?

In a recently published article, “Spurious alternative impact factors: The scale of the problem from an academic perspective” (BioEssays, 2 March 2015), authors Fredy Gutierrez, Jeffrey Beall, and Diego A. Forero document the emergence of a new industry of indexing and ratings companies, many of which derive their names from various combinations and permutations of the words “Thomson Reuters” and “Impact Factor.”

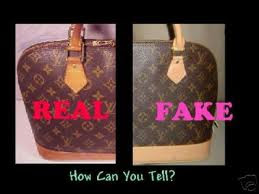

Like the publications exposed by John Bohannon in his exposé of new open access journals, many of these companies create a shroud of deception around their identities, hoping to trick academics and publishers into using their services. As with other Internet scams, it is not entirely clear who, or how many, actually fall for them, yet a list of 23 dubious services suggests that the market is large enough to encourage many entrants. Beall documented just 4 such companies in 2013.

Apart from documenting the existence of such fraudulent online schemes, the paper does little to propose viable solutions beyond caveat emptor (let the buyer beware). Their proposal that “internationally recognized societies of academic publishers should do more to identify questionable journals and websites” seems empty of anything other than general hand-waving toward the authorities. The authors make a specific claim that Thomson Reuters should lower its prices to researchers located in developing countries, implying that economic barriers to access are, at least, part of the problem. Oddly, the authors specifically point out SCImago and Google Scholar as free ranking services earlier in their paper.

To me, the market we should be attempting to correct is not an economic market but rather a trust market.

Trust (like “quality” or “impact”) is one of those big, abstract, and multidimensional constructs that deserve much more love and attention than can be put in a blog post about a short opinion article. However, I will state briefly that trust is a private evaluation that is often based on prior experience and influenced heavily by the experiences of those around us. When we have very little prior experience–say we are a first-time author–and have no close relationship with someone who does, we have no other resort than to look at herd behavior to guide our own actions. Our personal desire to conform to academic norms forces us to follow the masses, wherever they might lead us. Unfortunately, this is where deceptive marketing and fraudulent claims work most effectively.

One can look to other markets to see how trust may be built into a system where transactions may only take place online and there is great potential for fraud and deception. For eBay and online dating services, community trust can be built through repeated evaluations of others in the system. If you are unsure whether that guy selling antique furniture is legitimate, you can look at his ratings. Is that beautiful, single woman on a dating site really who she says she is? Check her ratings. Ditto for hotel and dining reviews. The problem is that it didn’t take long for an entire industry of fake reviewers (human and automated) to spring into existence. You can purchase good reviews, ratings, Likes, and Tweets. It’s that easy.

There are other ways to keep gamers from corrupting the integrity of a system. If you start with a group of trusted people, you can grow that group by requiring each new entrant to be vouched for by an existing member (or two). This is the model of many prestigious academies. If you want to become a member, you have to start by being nominated. This doesn’t prevent any member from becoming corrupt, but it is a model in which trust is invested deeply into the process of building the community.

The system of trust we are most familiar with in academic publishing is the creation of authorities. Individuals become authorities when they have proven to the community, over and over again, that they are leaders with integrity. Trust, however, is one of those fragile constructs that can be so easily destroyed by a single unethical act, such as fabricating scientific results or posing as your own peer reviewers. Unlike in the financial industry, it is very difficult to walk away with a golden parachute when you’ve broken the trust of your community. Publishing archives your accomplishments, as well as your mistakes, for posterity.

Beyond individuals, groups can build institutions of trust. An index of academic journals is the product of an institution devoted to such a task. The Directory of Open Access Journals (DOAJ), for example, is one index that is attempting to rebuild the community’s trust after giving legitimacy to hundreds of fraudulent titles. Maintaining a trustworthy list of open access titles is important as many academic libraries require a journal be listed on the DOAJ before releasing article processing fee (APC) support. Here, legitimacy is translated into access to financial resources.

Industry and trade associations also serve as institutions of trust. For example, the Open Access Scholarly Publishers Association (OASPA) strives to build trust in the open access journal market by requiring that members adhere to a strict code of conduct. The legitimacy of membership associations is put to test when it is required to sanction one of its members for inappropriate behavior.

Lastly, ratings agencies serve as institutions of trust. In the case of Thomson Reuters, the rankings of titles, as based on their citation profile, can greatly influence the journals market by driving submissions to one journal over another. Journal ratings can also affect the financial success of individual authors. For some universities, being published in a journal with an Impact Factor comes with financial rewards to its authors; being published in a high-ranking journal comes with even more. Given the rewards that flow directly (and indirectly) from academic publishing, no one should be surprised that a burgeoning market of knockoff ratings companies is following on the heels of the exploding market of cheap academic knockoff journals.

The most important and difficult question that our industry needs to address is how to build such institutions of trust in a market of growing uncertainty. The answer to this question is not easy. Uncertainty in markets favors strong existing brands, meaning that there are more pressures in publishing toward consolidating around large publishers who can exert and defend a strong trust brand in the marketplace. These are publishing houses with entire teams devoted to marketing, media, and public relations, as well as lawyers skilled at establishing and defending their trademarks.

For some publishers and trade associations, one solution has been to create “good housekeeping” badges, testifying to the integrity of the peer review process. This is a form of quality signaling that is often used in the food and manufacturing industries, as it alerts potential customers that their product is healthier/safer/more humane/better for the environment than a competitor’s product.

Unfortunately, it is much easier to create knockoff badges in the online market than in a physical one. A clothing company claiming to use only organic cotton grown in the United States using fair labor standards has to bring actual shirts, pants and dresses to the market before it can affix a tag claiming their virtues. In contrast, the barriers to entry for new online journals and supporting services are much, much lower. As Angela Cochran described so thoughtfully in her post about trust in academic publishing, knockoffs can easily create their own networks of trust institutions (like fake societies, indexes, and rankings agencies), make up their own knockoff badges and provide similar signals of legitimacy and authority in the marketplace. And given that these knockoffs may conduct business in countries with little governmental oversight, shutting one down may be like playing a big and expensive game of Whac-a-Mole.

Discussion

5 Thoughts on "Knockoffs Erode Trust in Metrics Market"

I fully agree that trust is what is at stake here, but I’m puzzled by “When we have very little prior experience–say we are a first-time author–and have no close relationship with someone who does, we have no other resort than to look at herd behavior to guide our own actions.” All research is a collective enterprise–it’s not done in a vacuum. It means extensively reading and evaluating the literature of one’s chosen research field and then contributing to the same literature, not to that of some anonymous herd.

Perhaps the attendant question to ask is whether many researchers are now foregoing the reading and evaluating part and are jumping straight to publication of their own results. If that’s the case, they’re facing challenges that are the result of conducting research that’s disconnected from that of others.

The comments on Beall’s blog post do seem to have some legitimate complaints about Index Copernicus being on his list. Does anyone know anything about this effort from Poland?

When a trust economy becomes unstable, reputation becomes essential, and it inevitably becomes commoditized. Remember this: http://ottawacitizen.com/technology/science/respected-medical-journal-turns-to-dark-side ? Are faux-metrics providers charging to get rankings? Are journals purchasing reputation so that authors will purchase publication?

When trust is distributed across a wide field of options, a single instability causes just a minor wobble. When the number of trusted sources decreases, the dependency on any one (or only one) source becomes dangerously heavy. The trusted source has a diminished margin for error, as the need for reliability increases. That same need for reliability creates barriers to entry for new players.

More weight resting on fewer touch points.

The real question is what would destabilize that heavily burdened trust. Two big publishers with reliable reputations got caught up in the Bohannon sting – but they haven’t vanished – nor have the stung journals visibly suffered for the minor, temporary embarrassment.

The limitations of the JIF have been fodder for alternative metrics for years (as in other citation metrics, not the Altmetrics, PlumX, ImpactStory kind of alternative – that’s a separate matter). And, of course, DORA went after JIF full-bore (http://ar.ascb.org/sfdora.html). Nevertheless, there has not been significant decline in the enthusiasm around JCR’s release each year.

Of course, some journals do not need JCR-JIF to bolster their legitimacy, but for new journals, it can be nearly a make-or-break proposition. For them, the whole weight of the endeavor rests on one point of entry.

Another excellent, thought-provoking post, Phil. No easy answers here. It’s going to take a group effort, cooperation and probably the expense and time which goes into legal action (in some cases) to combat these types of scams. Unlike a situation where cops could raid someone who is selling knockoff Rolexes, there seem to be few or no ramifications for those peddling fake metrics etc.

I’m curious about your statement that some have created their own “peer review integrity” badge. As far as I know what we’re doing with PRE-val is unique in this type of verification. Are there examples which you could share?

Adam,

I was thinking of Taylor & Francis’ Peer Review Integrity Logo

see: http://editorresources.taylorandfrancisgroup.com/peerless-peer-review/

In our online world where information can move easily from the meritorious to the meretricious and elide quality questions completely, it is particularly important that academic journals make the case for peer review with clarity. That is why every Taylor & Francis journal can now include a statement of its peer review mechanisms online, and when it does, carries the Peer Review Integrity logo. If your journal has not yet submitted its statement, you may want to talk to your Managing Editor about doing so.